I am working on a binary classification problem using balanced bagging random forest, neural networks, and boosting techniques. My dataset has 977 rows and the class proportion is 77:23.

I had 61 features in my dataset. However, after a lot of feature selection activities, I arrived at 5 features. These 5 features were identified using a random forest estimator in RFECV, BorutaPy, etc. With only 5 features, I expected that my XGBoost model would not overfit and would perform better on the test set, but it still overfits and produces poor results on the test set. Random forest, however, performs similarly on both train and test. Can you help me understand why this happens?
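The setup can be sketched roughly as follows. This is only a minimal reproduction on synthetic data, not the actual pipeline: `make_classification` stands in for the real dataset, scikit-learn's `GradientBoostingClassifier` stands in for XGBoost, and all hyperparameters are assumptions, so the numbers will not match the screenshots.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.feature_selection import RFECV
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, train_test_split

# Synthetic stand-in for the real data: 977 rows, 61 features, roughly 77:23 classes.
X, y = make_classification(
    n_samples=977, n_features=61, n_informative=5, n_redundant=5,
    weights=[0.77, 0.23], random_state=0,
)

# Stratified split so both parts keep the class proportion.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Feature selection with a random forest estimator, fitted on the training split only.
# RFECV picks the feature count itself; 5 is only the lower bound here.
selector = RFECV(
    RandomForestClassifier(n_estimators=50, random_state=0),
    step=5, min_features_to_select=5,
    cv=StratifiedKFold(n_splits=3), scoring="roc_auc",
)
selector.fit(X_train, y_train)
X_train_sel = selector.transform(X_train)
X_test_sel = selector.transform(X_test)

# Assumed models: a class-weighted random forest and a boosting stand-in for XGBoost.
models = {
    "random_forest": RandomForestClassifier(
        n_estimators=200, max_depth=5, class_weight="balanced", random_state=0
    ),
    "boosting": GradientBoostingClassifier(random_state=0),
}

# Compare ROC AUC on train and test; a large gap suggests overfitting.
results = {}
for name, model in models.items():
    model.fit(X_train_sel, y_train)
    train_auc = roc_auc_score(y_train, model.predict_proba(X_train_sel)[:, 1])
    test_auc = roc_auc_score(y_test, model.predict_proba(X_test_sel)[:, 1])
    results[name] = (train_auc, test_auc)
    print(f"{name}: train AUC = {train_auc:.3f}, test AUC = {test_auc:.3f}")
```

Fitting the selector on the training split only keeps the test set out of the feature selection step, so the train/test gap reflects the model rather than leakage.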

Performance on train and test is shown below.

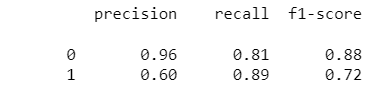

Random Forest - train data

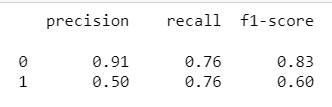

Random Forest - test data

roc_auc for random forest - 0.81

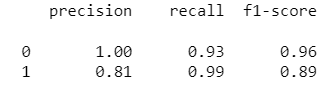

XGBoost - train data

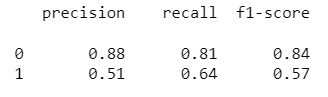

XGBoost - test data

roc_auc for XGBoost - 0.81