Notes about my SEDE queries below: after a new SEDE refresh, you may have to run the cross-site queries a few times before they finally complete. Ignore the datapoints for the current month (cropped out of the screenshots); that data is not yet complete. For example, things like roomba have "lag".

The alternative hypothesis for the above chart is that the number of questions available for users to answer has simply fallen, on account of question rates falling. This claim is hard to swallow given current data.

The total volume of questions available to frequent answerers continues to rise

You're kidding me, right?

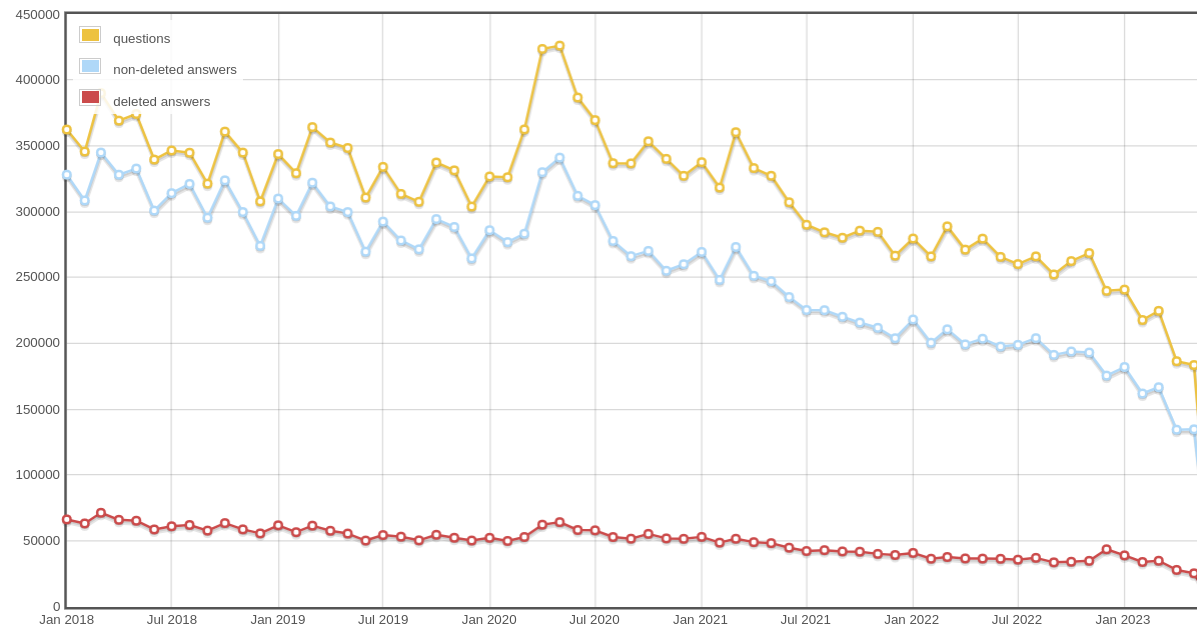

Your assertion that the number of available questions is rising is completely contrary to reality and relies on a warped (IMO) view of the data. Here's a network-wide query of new contributions per month since 2018.

Here's a site-specific version of the query if you're interested.

Just in case it's not obvious enough, question influx rate is dropping. A lot. And so is answer influx rate.

Did you forget that in your new Code of Conduct, you state:

Broadly speaking, we do not allow and may remove misleading information that:

- Misrepresents, denies, or makes unsubstantiated claims about historical or newsworthy events or the people involved in them

Why aren't we instead talking about the problem of loss of askers and questions? There are no answers without questions. Losing questions is logically a more root problem. Why not give attention to the more root problem?

The way I see it, answer rates are dropping as a result of question rates dropping - not because of the wrongful suspensions that you are trying so hard to believe are happening.

I have two reasons to believe that answers are dropping because of questions dropping:

Some pretty simple logic: Again, there can be no answers if there are no questions. Answers are to questions, and answerability is largely dependent on question characteristics.

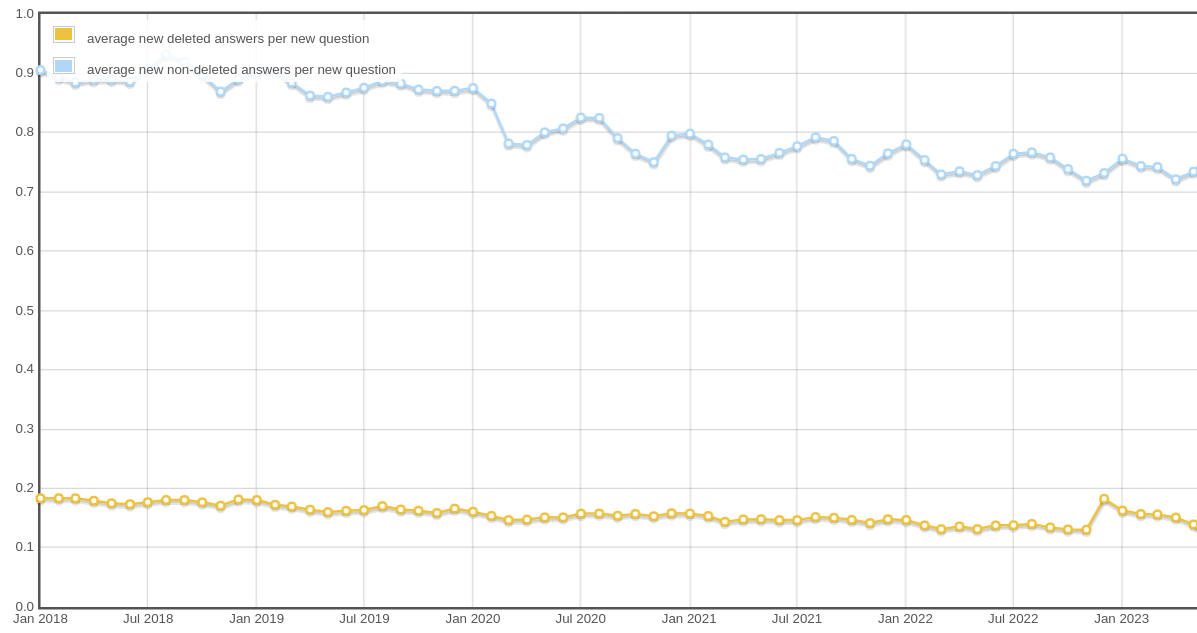

That's certainly what the consistent proportion of new answers to new questions per month suggests to me.

Though there was a (not at all surprising) uptick in new deleted answers per new (deleted or not) question last December with the ChatGPT release and ban policy on SO, the proportion of new (non-deleted) answers to new questions (deleted or not) is hovering fairly constantly around 0.74. It has not experienced any dramatic change in the several years before, or since, the release of ChatGPT. It has been fluctuating smoothly with a gradual decline from an average of ~0.8 in 2020. In that sense, from where I stand, I think you are missing the forest for the trees. Here's a network-wide query of average new answers per new question per month since 2018.

Notice how the trend for non-deleted answers is smooth from Nov 2022 to Dec 2022 to Jan 2023. Here's a site-specific version of the query if you're interested.
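To be explicit about what that ratio measures, here is a minimal Python sketch of the calculation. The class and field names are my own, purely for illustration, and the numbers in the example are made up to show the shape of the computation; they are not SEDE results.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class MonthlyCounts:
    month: str            # e.g. "2022-12"
    new_questions: int    # all new questions, deleted or not
    live_answers: int     # new answers that are not deleted
    deleted_answers: int  # new answers that are deleted


def answers_per_question(row: MonthlyCounts) -> Optional[Tuple[float, float]]:
    """Return (live, deleted) answers per new question, or None if there were no questions."""
    if row.new_questions == 0:
        return None
    return (row.live_answers / row.new_questions,
            row.deleted_answers / row.new_questions)


# Made-up numbers purely for illustration; NOT taken from SEDE.
example = MonthlyCounts("2022-12", new_questions=1000, live_answers=740, deleted_answers=150)
live_ratio, deleted_ratio = answers_per_question(example)
print(f"{example.month}: {live_ratio:.2f} live, {deleted_ratio:.2f} deleted answers per question")
```

The point of dividing by all new questions (deleted or not) is that both answer series share the same denominator, so a jump in deleted answers shows up separately from the non-deleted trend.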

The smoothness of the monthly new non-deleted answers in proportion to new questions, and the noticeable jump in deleted ones, lead me to believe that - largely speaking (I did see some high-rep users violate the policy) - we didn't significantly lose our consistently-answer-contributing userbase. Instead, we just rapidly gained a bunch of gold reputation prospectors trying to get rich quick and easy with the fun new toy, without any regard for possible damage done to the ecosystem. And we were able (thanks to mods and flaggers, and CMs who cooperated with mods in blessing the ban policy) to keep the current and long-term damage under control, protecting the Stack Exchange network's reputation as a trusted source of information.

Let me add to that my own experience: My rate of answering on SO has been in decline recently, and it's not because I'm spending less time or effort looking for things to answer. There was a period earlier this year when I was averaging ~10 answers per day. Now it's more like ~3. It's largely because I've been getting fewer questions in my tags. A few months ago, I used to wake up to over a page of new questions, and now I often wake up to less than a page.

I'm very much inclined to believe that you're pointing your fingers at the wrong place and the wrong people.

The volume of users who post 3 or more answers per week has dropped rapidly since GPT’s release

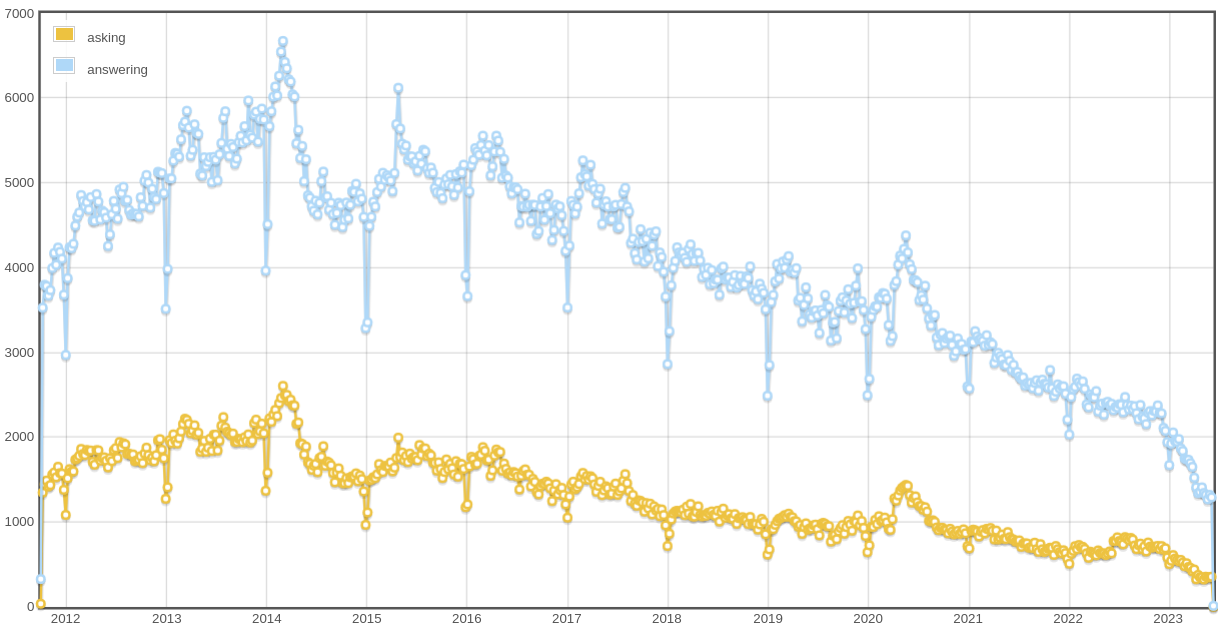

In your obsession with answerers, you are missing that according to your methodology and logic, askers seem to be "leaving" even faster. Continuing with your weird focus on answers instead of questions, here's a SEDE query I made for SO graphing the number of contributors who asked three or more questions or wrote three or more answers in a week since October 2011 (basically extending your graph to actually show the historical trend and put question-askers in the picture as well):

By my rough calculation, from the beginning of Jan. 2016 to late Nov. 2022, we were losing 3-per-week-askers on SO at a rate of roughly 144.57/year ((1700-700)/6.917), and 3-per-week-answerers at roughly 462.63/year ((5500-2300)/6.917). From late Nov. 2022 to late May 2023, we were losing 3-per-week-askers at roughly 750.00/year ((700-325)/0.5), and 3-per-week-answerers at roughly 2100.00/year ((2300-1250)/0.5). The 3-questions-per-week-asker loss rate increased ~5.19 times (750.00/144.57), and the 3-answers-per-week-answerer loss rate increased ~4.54 times (2100.00/462.63).

I.e. by my rough estimate and your approach and logic, your "dedicated asker-base" has been "leaving" 1.14 times faster than your "dedicated answerer-base". Am I significantly wrong there? Have you paid any attention to that? If I'm in the right ballpark, then you're making much ado about a smaller problem and ignoring a bigger one, and destroying your relationship with the more dedicated part of your userbase in the process...
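For anyone who wants to check my arithmetic, here is a minimal Python sketch of the calculation. The counts are my eyeballed readings off the graph, not exact query output, and the function and variable names are just for illustration. I take 700 as the late-Nov. 2022 asker count, since that is where the second period starts.

```python
# Period lengths in years, as used in the rough calculation above.
YEARS_BEFORE = 6.917  # beginning of Jan. 2016 to late Nov. 2022
YEARS_AFTER = 0.5     # late Nov. 2022 to late May 2023


def loss_per_year(start: float, end: float, years: float) -> float:
    """Average number of 3-per-week contributors lost per year over a period."""
    return (start - end) / years


# Eyeballed counts of contributors with 3+ posts in a week (assumed readings).
askers_before = loss_per_year(1700, 700, YEARS_BEFORE)
answerers_before = loss_per_year(5500, 2300, YEARS_BEFORE)
askers_after = loss_per_year(700, 325, YEARS_AFTER)
answerers_after = loss_per_year(2300, 1250, YEARS_AFTER)

# How many times faster each group is "leaving" after late Nov. 2022.
asker_speedup = askers_after / askers_before
answerer_speedup = answerers_after / answerers_before

print(f"askers:    {askers_before:.2f}/yr -> {askers_after:.2f}/yr (x{asker_speedup:.2f})")
print(f"answerers: {answerers_before:.2f}/yr -> {answerers_after:.2f}/yr (x{answerer_speedup:.2f})")
print(f"asker speedup relative to answerer speedup: {asker_speedup / answerer_speedup:.2f}")
```

Note that the period lengths cancel out of the final ratio, so it only depends on the four eyeballed drops in contributor counts.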

~1.14 times faster means ~14% faster, which is twice your purported "7% of dedicated answerers leaving", and I see no data showing that that problem is in any way the fault of the moderators or curators who enacted ChatGPT policies. If anything, from what I've been reading on Reddit, people are going to ChatGPT to ask their questions instead of Stack Overflow.

In total, the rate at which frequent answerers leave the site quadrupled since GPT’s release.

You've skipped an important step (and it's why I've been putting "leaving" in double-quotes). What does writing fewer answers have to do with leaving the site? Again, there are fewer questions. I'm still here. Again, I'm writing fewer answers largely because there are fewer questions.

If you're concerned about traffic dropping with the rise of ChatGPT, I just can't understand why the first thing you'd think to do is basically allow ChatGPT answers instead of looking at things like improving your platform and making it more usable, improving user experience / guidance / onboarding, fixing bugs, and looking at highly-requested features.

When I read people's comments on Reddit about why they're leaving, it's often a case of What about the community is "toxic" to new users?. See Mithical's answer to that question - it's why I bring up improving user guidance and onboarding. In fact, you have a project to work on that: Introducing new user onboarding project. What happened to that project?

Like - c'mon. Stick to your guns. Stack Exchange succeeded because its whole approach to being a Q&A platform was valuable, and I'm convinced that it can and will continue being valuable without allowing LLM-generated answers.

7% of the people who post 3 or more answers in a week are suspended within three weeks

I don't see 7% as significant compared to the rough order of a ~25% decline in overall post contributions comparing November 2022 to May 2023. Again, I'm so confused why we're all sitting here talking about this mouse when there's a whole elephant in the same room.

no Community Manager will tell you that removing 7% of the users who try to actively participate in a community per week is remotely tenable for a healthy community.

:/ Just 6 months ago you were quite supportive of SO's ChatGPT policy, for reasons of community and platform health.

You wrote:

https://stackoverflow.com/help/answering-limit

We slow down new user contributions in order to ensure the integrity of the site and that users take the time they need to craft a good answer.

https://stackoverflow.com/help/gpt-policy

Stack Overflow is a community built upon trust. The community trusts that users are submitting answers that reflect what they actually know to be accurate and that they and their peers have the knowledge and skill set to verify and validate those answers. The system relies on users to verify and validate contributions by other users with the tools we offer, including responsible use of upvotes and downvotes. Currently, contributions generated by GPT most often do not meet these standards and therefore are not contributing to a trustworthy environment. This trust is broken when users copy and paste information into answers without validating that the answer provided by GPT is correct, ensuring that the sources used in the answer are properly cited (a service GPT does not provide), and verifying that the answer provided by GPT clearly and concisely answers the question asked.

[...] In order for Stack Overflow to maintain a strong standard as a reliable source for correct and verified information, such answers must be edited or replaced. However, because GPT is good enough to convince users of the site that the answer holds merit, signals the community typically use to determine the legitimacy of their peers’ contributions frequently fail to detect severe issues with GPT-generated answers. As a result, information that is objectively wrong makes its way onto the site. In its current state, GPT risks breaking readers’ trust that our site provides answers written by subject-matter experts.

Supposing every suspension is accurate, the magnitude raises serious concerns about long-term sustainability for the site

In the quote just above, you were concerned about the long-term sustainability of the site in support of suspensions for ChatGPT-generated content:

Moderators are empowered (at their discretion) to issue immediate suspensions of up to 30 days to users who are copying and pasting GPT content onto the site, with or without prior notice or warning.

It's so confusing and frustrating for me to watch you suddenly do a 180 to this direction, contradicting yourself. From my point of view, it's just inexplicable. I'm not quite angry, but I'm certainly flabbergasted.

However, since the advent of GPT, the % of content produced by frequent answerers has started to collapse unexpectedly. Given the absence of question scarcity as a factor for answerers (note the above chart), the clear inference is that a large portion of frequent answerers are leaving the site, or the site is suddenly not effective at retaining new frequent answerers.

Again, the data does not support this. The rate of incoming questions is dramatically dropping, and with it, the rate of answers to those questions. And that inference of yours is not clear to me. Again, what does fewer answers have to do with people leaving? Until I see actual data that directly supports that conclusion, I'll be here pressing X.

In your draft approach, I'd like to see data focusing on the sizes (number of characters) of initial drafts instead of the number of drafts.

Like - why have you ruled out that people are just getting craftier about evading detection, by pasting into the answer input and then editing there to make the content look less like it was ChatGPT/LLM-generated? Because that's totally what I'd expect to be happening over time - especially with suspensions being handed out. And that's the very same reason I'm doubtful of your following claim:

These days, however, it’s clear that the rate of GPT answers on Stack Overflow is extremely small.

Stray question: Do the drafts you're looking at include content that gets posted quickly enough that there is no intermediate draft?

Some folks have asked us why this metric is capable of reporting negative numbers. The condensed answer is that the metric has noise. If the true value is zero, sometimes it will report a value higher than zero, and sometimes a value lower than zero. Since we know how much noise the metric has, we know what the largest value for GPT-suspect posts should be.

Can you clarify and expand on this?

- In the graph, what's the light blue line, and the dark blue line?

- How/why does the metric have noise?

- What does "true value" mean? Are you saying you've found a 100% accurate way to detect ChatGPT answers? (obviously not, but I can't understand what else "true value" would mean. Are you referring to your own technique? Because as I've already explained, I think it has flaws (unless I've misunderstood your explanation of it).)

While we could not recover all of the GPT suspension appeals sent to the Stack Exchange inbox, we could characterize some of them.

... what do you mean "Could not recover"? You mean you lost them? If so,... how? status-completed: this was clarified here.

We are, as of right now, operating under the evidence-backed assumption that humans cannot identify the difference between GPT and non-GPT posts with sufficient operational accuracy to apply these methods to the platform as a whole.

Have you talked with NotTheDr01ds and sideshowbarker? For example, see NotTheDr01ds' post here, and sideshowbarker's post here. I'm sure they'd beg to differ. Also, this statement seems to lose the nuance of true-positive rate versus false-positive rate. I think humans can have an excellent true-positive rate with some basic heuristics while erring on "the side of caution".

Under this assumption, it is impossible for us to generate a list of cases where we know moderators have made a mistake. If we were to do so, it would imply that we have a method by which we can know that the incorrect action was taken.

Again, have you talked with mods like sideshowbarker and users like NotTheDr01ds? I'm sure they could give you some practical, useful, concrete heuristics.

none of the hypotheses generated by the company can explain away the relationship between % of frequent answerer suspensions and the decrease in frequent answerers, in the context of falling actual GPT post rates.

Again, I think you're missing something obvious - namely that questions are incoming at a lower rate as people go to ChatGPT instead of Stack Exchange to ask questions. I almost see the relation you're staring at as a spurious one. I don't get why you're ignoring the relation between the decreased rate of incoming questions and the decreased rate of answers. As I've shown, the answer influx rate has remained consistently proportional to the question influx rate for much more than 5 years.

Suppose we are right in this assessment and GPT removal actions are not reasonably defensible. How long can we afford to wait? To what extent can we continue to risk the network’s short-term integrity against the long-term risks of the current environment? Any good community management policy must balance the risk of action against the risk of inaction in any given situation, and the evidence does not presently favor inaction.

Again, I find it funny and sad how consistent that is with the GPT policy Help Center page that was written if you just replace "GPT removal actions" with "GPT addition actions".

if there is no future for a network where mods can assess posts on the basis of GPT authorship (where we are today)

... why? I don't follow the reasoning. Again, as I've said, Stack Exchange succeeded because its approach provided real value, and I don't see how that value is gone with the rise of ChatGPT. I plan to write up a new Q&A specifically about this, or reuse ones where I've already written answers, such as here and here.