If you have a significant interaction and you are using typical linear modeling, then the "main effects" represented by individual-predictor coefficients are at best hard to interpret and at worst outright confusing.

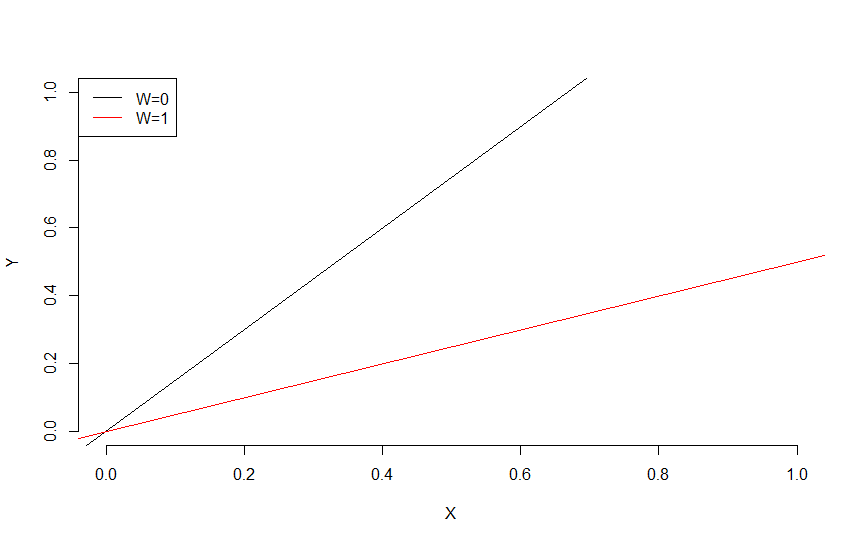

In R's default (treatment-contrast) coding of predictors, what is typically taken to be the "main effect" for a categorical predictor like condition is its regression coefficient in a linear model. With an interaction in the model, as in your situation, that coefficient is the association of condition with outcome only when time = 0. That might be too early to see an association of condition with outcome if the conditions only start to differ after time = 0. See the plot in the answer from @AdamO, treating x as your time and w as your treatment.

The corresponding "main effect" for a continuous predictor like time would be its association with outcome when condition is at its reference level. If that reference level is "control" it's quite possible that you wouldn't have a "significant" "main effect" coefficient for time either, if the outcome is constant without an intervention.
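To make the two previous paragraphs concrete, here is the model written out, assuming a two-level condition coded as a 0/1 indicator with "control" as the reference level (the symbols $\beta_0,\dots,\beta_3$ are my notation, not something from your output):

$$
\text{outcome} = \beta_0 + \beta_1\,\text{condition} + \beta_2\,\text{time} + \beta_3\,(\text{condition}\times\text{time}) + \varepsilon
$$

Setting time = 0 leaves $\beta_0 + \beta_1\,\text{condition}$, so $\beta_1$ is the difference between conditions at time = 0 only. Setting condition = 0 leaves $\beta_0 + \beta_2\,\text{time}$, so $\beta_2$ is the slope over time in the control group only. The slope in the other group is $\beta_2 + \beta_3$.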

The interaction coefficient tells you how much the association of time with outcome depends on the value of condition, and vice-versa. If that's appreciable (not even necessarily "significant"), the apparent "main effect" of one predictor can depend on the coding of a different predictor with which it interacts.
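A minimal sketch of that dependence on coding, using simulated data (the data frame `d`, the effect sizes, and the choice to re-center time at 5 are all assumptions made for illustration, not your data). Shifting the zero point of time changes the condition coefficient but leaves the interaction coefficient alone:

```r
set.seed(1)

# Hypothetical data: both conditions start at the same level at time = 0,
# and only the treatment group changes over time.
d <- expand.grid(id = 1:20, time = 0:10,
                 condition = c("control", "treatment"))
d$condition <- factor(d$condition, levels = c("control", "treatment"))
d$outcome <- 10 + 0.5 * d$time * (d$condition == "treatment") + rnorm(nrow(d))

# Time coded so that 0 is the start of the study
fit0 <- lm(outcome ~ condition * time, data = d)

# Same model, but time re-centered so that 0 is the middle of the study
d$time_c <- d$time - 5
fit5 <- lm(outcome ~ condition * time_c, data = d)

# "Main effect" of condition: difference between groups where time is 0
# under each coding, so the two values differ
coef(fit0)["conditiontreatment"]
coef(fit5)["conditiontreatment"]

# The interaction coefficient does not depend on where time = 0 is placed
coef(fit0)["conditiontreatment:time"]
coef(fit5)["conditiontreatment:time_c"]
```

The two fits are the same model with the same fitted values. The condition coefficient in `fit5` equals the one in `fit0` plus 5 times the interaction coefficient, which is why its apparent size and "significance" can change with nothing more than a shift in the time origin.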

The best way to evaluate the importance of a predictor involved in an interaction is to evaluate all of the terms involving it together, for example with a likelihood-ratio test or a Wald test on its full set of coefficients (its own coefficient plus its interaction coefficients).
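One way to sketch such a joint test for an ordinary `lm()` fit is to compare the full model against a model that drops every term involving condition. The data below are simulated for illustration (the names `d`, `full`, and `reduced` are hypothetical), and for `lm` objects `anova()` reports an F-test for the nested comparison rather than a likelihood-ratio test:

```r
set.seed(1)

# Hypothetical data with a condition-by-time interaction
d <- expand.grid(id = 1:20, time = 0:10,
                 condition = c("control", "treatment"))
d$condition <- factor(d$condition, levels = c("control", "treatment"))
d$outcome <- 10 + 0.5 * d$time * (d$condition == "treatment") + rnorm(nrow(d))

# Full model: condition, time, and their interaction
full <- lm(outcome ~ condition * time, data = d)

# Reduced model: remove ALL terms involving condition
# (its own coefficient and the interaction)
reduced <- lm(outcome ~ time, data = d)

# Joint test of the condition coefficients (2 degrees of freedom here)
joint <- anova(reduced, full)
joint
```

Unlike the single condition coefficient, this comparison does not change if you shift the zero point of time, because both models span the same space under either coding.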

Finally, there's no requirement to do an initial ANOVA if you already planned to do all of the pairwise comparisons. So your Tukey pairwise comparisons are OK to perform in any case.