In today's data-intensive world, processing speed can be a significant bottleneck in Python applications. While Python offers simplicity and versatility, its Global Interpreter Lock (GIL) can limit performance in CPU-bound tasks. This is where Python's multiprocessing module shines, offering a robust solution to leverage multiple CPU cores and achieve true parallel execution. This comprehensive guide explores how multiprocessing works, when to use it, and practical implementation strategies to supercharge your Python applications.

Understanding Python's Multiprocessing Module

Python's multiprocessing module was introduced to work around the limitations imposed by the Global Interpreter Lock (GIL). The GIL prevents multiple native threads from executing Python bytecode simultaneously, so even on multi-core systems, threading in Python doesn't provide true parallelism for CPU-bound tasks.

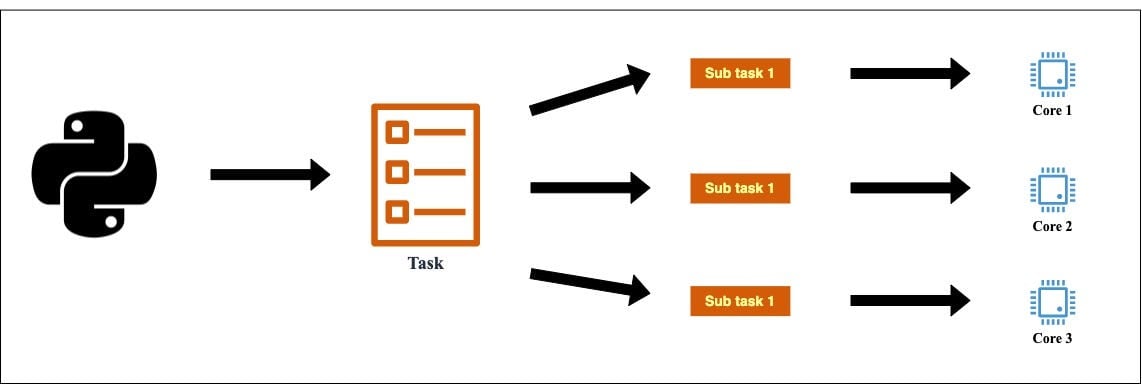

Multiprocessing circumvents this limitation by creating separate Python processes rather than threads. Each process has its own Python interpreter and memory space, allowing multiple processes to execute code truly in parallel across different CPU cores.
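To make the "own memory space" point concrete, here is a minimal sketch. The names `state`, `bump`, and `run_in_child` are made up for this illustration. The child increments its own copy of a module-level counter, and the parent's copy is unaffected.

```python
import multiprocessing
import os

# Module-level state; every process works on its own copy of this dict.
state = {"counter": 0}


def bump():
    """Increment the counter in whichever process calls this function."""
    state["counter"] += 1
    return os.getpid(), state["counter"]


def run_in_child(queue):
    """Run bump() in the child and report what the child saw."""
    queue.put(bump())


if __name__ == "__main__":
    queue = multiprocessing.Queue()
    child = multiprocessing.Process(target=run_in_child, args=(queue,))
    child.start()
    child_pid, child_counter = queue.get()
    child.join()

    print(f"child {child_pid} saw counter = {child_counter}")
    print(f"parent {os.getpid()} still sees counter = {state['counter']}")
```

The child reports a counter of 1 while the parent still sees 0, which is why results have to be sent back explicitly (here through a Queue).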

Key Benefits of Multiprocessing

- True Parallelism: Unlike threading, multiprocessing enables CPU-bound code to execute simultaneously across multiple cores.

- Resource Isolation: Each process has its own memory space, preventing the sharing issues that can occur with threading.

- Fault Tolerance: A crash in one process doesn't necessarily bring down other processes.

- Scalability: Applications can be designed to scale across multiple cores and even multiple machines.
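The fault-tolerance point can be illustrated with a small sketch. `risky_worker` and `describe_exit` are hypothetical helpers written for this example. One child raises an exception, the others finish normally, and the parent inspects each child's `exitcode` afterwards.

```python
import multiprocessing


def risky_worker(value):
    """Raise for negative input to simulate a crash in one child."""
    if value < 0:
        raise ValueError(f"cannot handle {value}")
    print(f"worker finished with {value}")


def describe_exit(exitcode):
    """Translate a Process.exitcode into a short label."""
    if exitcode is None:
        return "still running"
    if exitcode == 0:
        return "ok"
    return f"failed (exit code {exitcode})"


if __name__ == "__main__":
    children = [
        multiprocessing.Process(target=risky_worker, args=(v,))
        for v in (1, -1, 2)
    ]
    for child in children:
        child.start()
    for child in children:
        child.join()

    # The failing child prints a traceback, but the parent keeps going.
    for child in children:
        print(child.name, describe_exit(child.exitcode))
    print("parent is still running")
```

The parent has to check `exitcode` itself; a failed child does not raise anything in the parent.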

When to Use Multiprocessing

Multiprocessing isn't always the right solution. Here's when you should consider using it:

Ideal Use Cases

- CPU-Intensive Tasks: Calculations, data processing, and simulations that require significant computation.

- Batch Processing: Processing large datasets in chunks where operations on each chunk are independent.

- Algorithm Parallelization: Algorithms that can be divided into independent parts (like certain machine learning training processes).

- Image and Video Processing: Operations like filtering, transformations, or feature extraction that can be applied independently to different portions of the data.

When to Avoid Multiprocessing

- I/O-Bound Tasks: For operations limited by input/output rather than CPU (like web scraping or database operations), asynchronous programming or threading may be more appropriate.

- Small Datasets: The overhead of process creation and communication can outweigh the benefits for small tasks.

- Highly Interdependent Tasks: Tasks requiring frequent synchronization between processes may see reduced performance due to communication overhead.
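For the I/O-bound case, a thread pool is often enough. The sketch below uses `concurrent.futures.ThreadPoolExecutor` from the standard library. `fake_download` is a stand-in that sleeps instead of doing real network I/O, so the timing is only illustrative.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item_id):
    """Simulate an I/O wait; the GIL is released while sleeping."""
    time.sleep(0.1)
    return item_id * 2


def fetch_all(item_ids, max_workers=8):
    """Run the simulated downloads concurrently in threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(fake_download, item_ids))


if __name__ == "__main__":
    start = time.perf_counter()
    results = fetch_all(range(8))
    elapsed = time.perf_counter() - start
    print(results)
    print(f"elapsed: {elapsed:.2f}s (run serially, the sleeps alone take 0.8s)")
```

Because the threads spend their time waiting rather than computing, the GIL is not the bottleneck here, and no process startup or pickling cost is paid.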

Getting Started with Multiprocessing

Let's explore the basic patterns for implementing multiprocessing in Python.

The Process Class

The most basic way to use multiprocessing is with the Process class:

    import multiprocessing


    def worker(num):
        """Worker function"""
        print(f'Worker: {num}')
        return


    if __name__ == '__main__':
        processes = []

        # Create 5 processes
        for i in range(5):
            p = multiprocessing.Process(target=worker, args=(i,))
            processes.append(p)
            p.start()

        # Wait for all processes to complete
        for p in processes:
            p.join()

This example creates five separate processes, each executing the worker function with a different argument.
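To see which process actually handles each argument, a variation like the following may help. It is only a sketch: `describe` is a hypothetical helper, and the `worker-N` names are chosen for this example. It relies on `multiprocessing.current_process()` and `os.getpid()`.

```python
import multiprocessing
import os


def describe(num):
    """Build a label showing which process handled which argument."""
    proc = multiprocessing.current_process()
    return f"task {num} ran in {proc.name} (pid {os.getpid()})"


def worker(num):
    print(describe(num))


if __name__ == "__main__":
    processes = [
        multiprocessing.Process(target=worker, args=(i,), name=f"worker-{i}")
        for i in range(5)
    ]
    for p in processes:
        p.start()
    for p in processes:
        p.join()
    print(f"parent pid: {os.getpid()}")
```

The lines can appear in any order from run to run, since the operating system schedules the processes independently.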

The Pool Class

For batch processing tasks, the Pool class provides a convenient way to distribute work across multiple processes:

    from multiprocessing import Pool


    def f(x):
        return x*x


    if __name__ == '__main__':
        with Pool(processes=4) as pool:
            results = pool.map(f, range(10))
            print(results)  # Output: [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]

The Pool class automatically divides the input data among available processes and manages them for you. This is often the simplest way to parallelize operations on large datasets.
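Beyond `map`, Pool has a few related methods that are worth knowing. The sketch below shows `starmap` for functions that take several arguments and `imap_unordered` for consuming results as they finish. `power` and `square` are illustrative functions, not part of the original example.

```python
from multiprocessing import Pool


def power(base, exponent):
    return base ** exponent


def square(x):
    return x * x


if __name__ == "__main__":
    with Pool(processes=4) as pool:
        # starmap unpacks each tuple into positional arguments.
        print(pool.starmap(power, [(2, 3), (3, 2), (10, 0)]))  # [8, 9, 1]

        # imap_unordered yields results as workers finish, so the order
        # may differ between runs; sort if a stable order is needed.
        print(sorted(pool.imap_unordered(square, range(10))))
```

`map` and `starmap` return results in input order, while `imap_unordered` trades ordering for the ability to start consuming results earlier.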

Advanced Multiprocessing Techniques

Once you've mastered the basics, you can explore these more advanced techniques:

Process Communication

Processes in Python don't share memory by default, so multiprocessing provides several mechanisms for communication:

Queues

    from multiprocessing import Process, Queue


    def f(q):
        q.put('hello')


    if __name__ == '__main__':
        q = Queue()
        p = Process(target=f, args=(q,))
        p.start()
        print(q.get())  # Output: hello
        p.join()

Pipes

    from multiprocessing import Process, Pipe


    def f(conn):
        conn.send('hello')
        conn.close()


    if __name__ == '__main__':
        parent_conn, child_conn = Pipe()
        p = Process(target=f, args=(child_conn,))
        p.start()
        print(parent_conn.recv())  # Output: hello
        p.join()

Shared Memory

For cases where you need to share large amounts of data between processes:

    from multiprocessing import Process, Value, Array


    def f(n, a):
        n.value = 3.1415927
        for i in range(len(a)):
            a[i] = -a[i]


    if __name__ == '__main__':
        num = Value('d', 0.0)        # shared double
        arr = Array('i', range(10))  # shared array of ints

        p = Process(target=f, args=(num, arr))
        p.start()
        p.join()

        print(num.value)  # Output: 3.1415927
        print(arr[:])     # Output: [0, -1, -2, -3, -4, -5, -6, -7, -8, -9]

Process Pools with Callbacks

You can add callbacks to be executed when tasks complete:

    from multiprocessing import Pool


    def f(x):
        return x*x


    def callback_func(result):
        # Runs in the parent process each time a task completes
        print(f"Task result: {result}")


    if __name__ == '__main__':
        pool = Pool(processes=4)
        for i in range(10):
            pool.apply_async(f, args=(i,), callback=callback_func)
        pool.close()
        pool.join()

Performance Optimization Tips

To get the most out of multiprocessing, consider these optimization strategies:

Optimal Process Count

The ideal number of processes depends on your specific task and system capabilities:

    import multiprocessing

    # Use the number of CPU cores for CPU-bound tasks
    num_processes = multiprocessing.cpu_count()

    # For I/O-bound tasks, you might want to use more
    # num_processes = multiprocessing.cpu_count() * 2

Chunking Data

When processing large datasets, using appropriate chunk sizes can significantly improve performance:

    from multiprocessing import Pool


    def process_chunk(chunk):
        return [x*x for x in chunk]


    if __name__ == '__main__':
        data = list(range(10000))

        with Pool(processes=4) as pool:
            # Process data in chunks of 100
            chunks = [data[i:i+100] for i in range(0, len(data), 100)]
            results = pool.map(process_chunk, chunks)

        # Flatten the results
        flattened = [item for sublist in results for item in sublist]

Minimizing Communication

Excessive communication between processes can create bottlenecks:

- Pass all necessary data to a process at initialization when possible.

- Return results in larger batches rather than item-by-item.

- Use shared memory for large datasets that need to be accessed by multiple processes.
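The first two points can be sketched with Pool's `initializer` and `initargs` arguments and the `chunksize` argument of `map`. In this illustration, `init_worker`, `translate`, and the lookup table are made up. The table is handed to each worker once at startup instead of with every task, and tasks are dispatched in batches of 100.

```python
from multiprocessing import Pool

# Set once per worker process by init_worker.
_lookup = None


def init_worker(table):
    """Store the shared lookup table in this worker's global state."""
    global _lookup
    _lookup = table


def translate(key):
    """Look up a key using the table installed at worker startup."""
    return _lookup.get(key, "unknown")


if __name__ == "__main__":
    table = {i: f"item-{i}" for i in range(1000)}
    keys = list(range(0, 2000, 2))

    with Pool(processes=4, initializer=init_worker, initargs=(table,)) as pool:
        # chunksize=100 sends keys to workers in batches of 100,
        # which reduces the number of inter-process messages.
        results = pool.map(translate, keys, chunksize=100)

    print(results[:3], results[-1])
```

A good chunk size depends on how expensive each task is, so treat 100 as a starting point to measure against rather than a recommendation.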

Common Pitfalls and Solutions

The "if __name__ == '__main__'" Guard

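Always protect your multiprocessing code with this guard. On platforms that use the spawn start method (the default on Windows and macOS), every child process re-imports the main module, so unguarded process-creation code would run again in each child: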

    # This is crucial in multiprocessing code
    if __name__ == '__main__':
        # Your multiprocessing code here
        pass

Pickling Limitations

Multiprocessing relies on pickling for inter-process communication, which has limitations:

- Not all objects can be pickled (like file handles or database connections).

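- Lambdas, nested functions, and methods of classes defined inside other functions cannot be pickled, because pickle stores functions and classes by their importable name.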

Solution: Use basic data types or ensure your objects are picklable.
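A quick way to find out whether something will survive the trip to a worker is to try pickling it up front. The helper below, `is_picklable`, is a hypothetical convenience function for this article, not part of the multiprocessing API.

```python
import pickle


def square(x):
    return x * x


def is_picklable(obj):
    """Return True if obj can be serialized with pickle."""
    try:
        pickle.dumps(obj)
    except (pickle.PicklingError, AttributeError, TypeError):
        return False
    return True


if __name__ == "__main__":
    print(is_picklable(square))             # True: module-level function
    print(is_picklable(lambda x: x * x))    # False: lambdas have no importable name
    print(is_picklable({"a": [1, 2, 3]}))   # True: plain data structures
```

Checking in the parent gives a clearer error than a failure that only shows up once the task is submitted to a pool.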

Resource Leaks

Always properly close and join processes to prevent resource leaks:

    from multiprocessing import Pool

    if __name__ == '__main__':
        pool = Pool(processes=4)

        # Do work with the pool

        # Always close and join
        pool.close()  # No more tasks will be submitted
        pool.join()   # Wait for all worker processes to exit

Real-World Application Example

Let's look at a practical example: parallel image processing with multiprocessing.

    from multiprocessing import Pool
    from PIL import Image, ImageFilter
    import os


    def process_image(image_path):
        # Open the image
        img = Image.open(image_path)

        # Apply a filter
        filtered = img.filter(ImageFilter.BLUR)

        # Save with new name
        save_path = f"processed_{os.path.basename(image_path)}"
        filtered.save(save_path)
        return save_path


    if __name__ == '__main__':
        # List of image paths to process
        image_paths = ["image1.jpg", "image2.jpg", "image3.jpg", "image4.jpg"]

        # Create a pool with 4 processes
        with Pool(processes=4) as pool:
            # Process images in parallel
            results = pool.map(process_image, image_paths)

        print(f"Processed images: {results}")

Summary

Python's multiprocessing module offers a powerful solution for achieving true parallelism in CPU-bound applications. By distributing work across multiple processes, you can fully leverage modern multi-core systems and significantly improve execution speed for suitable tasks.
