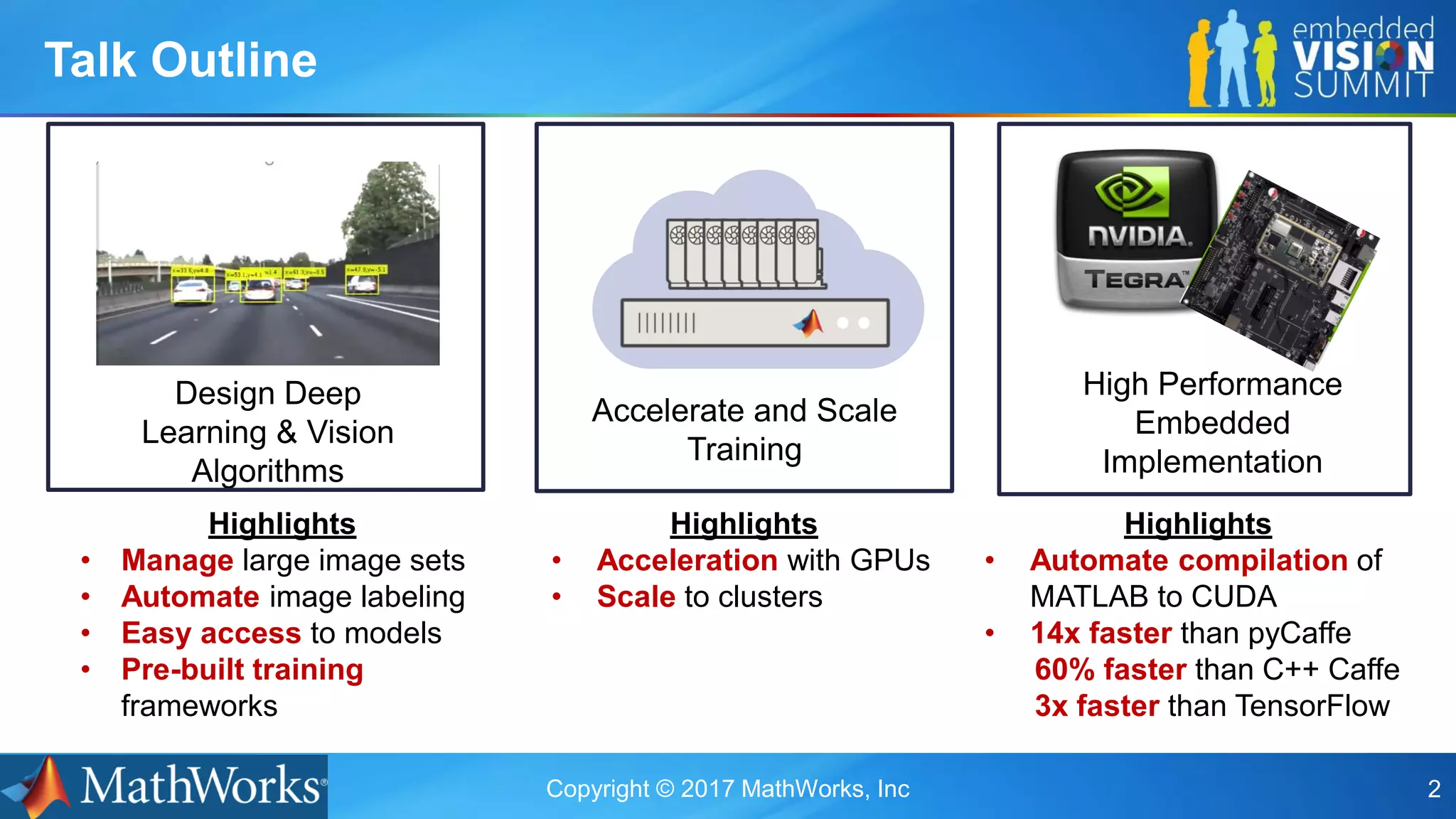

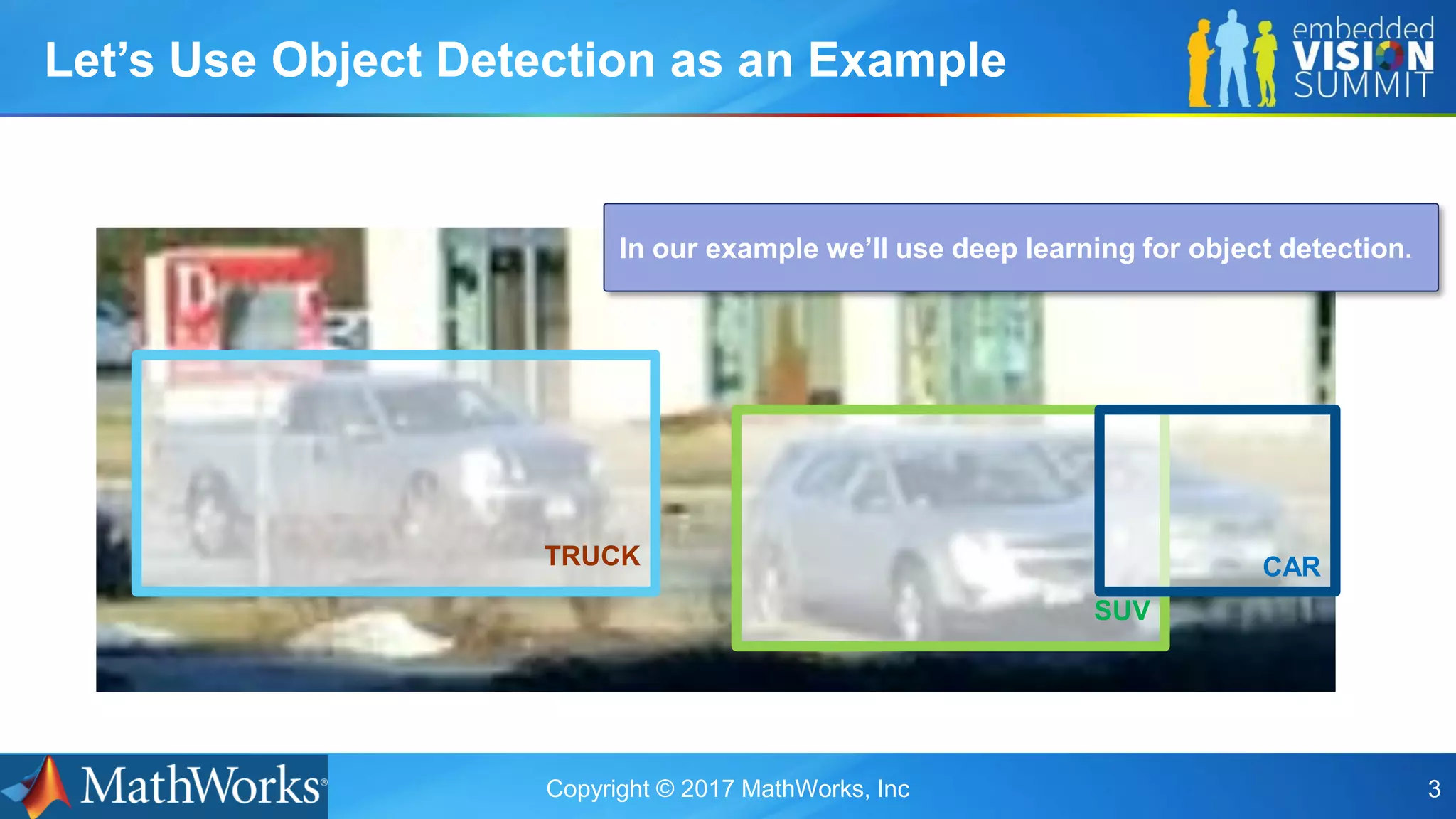

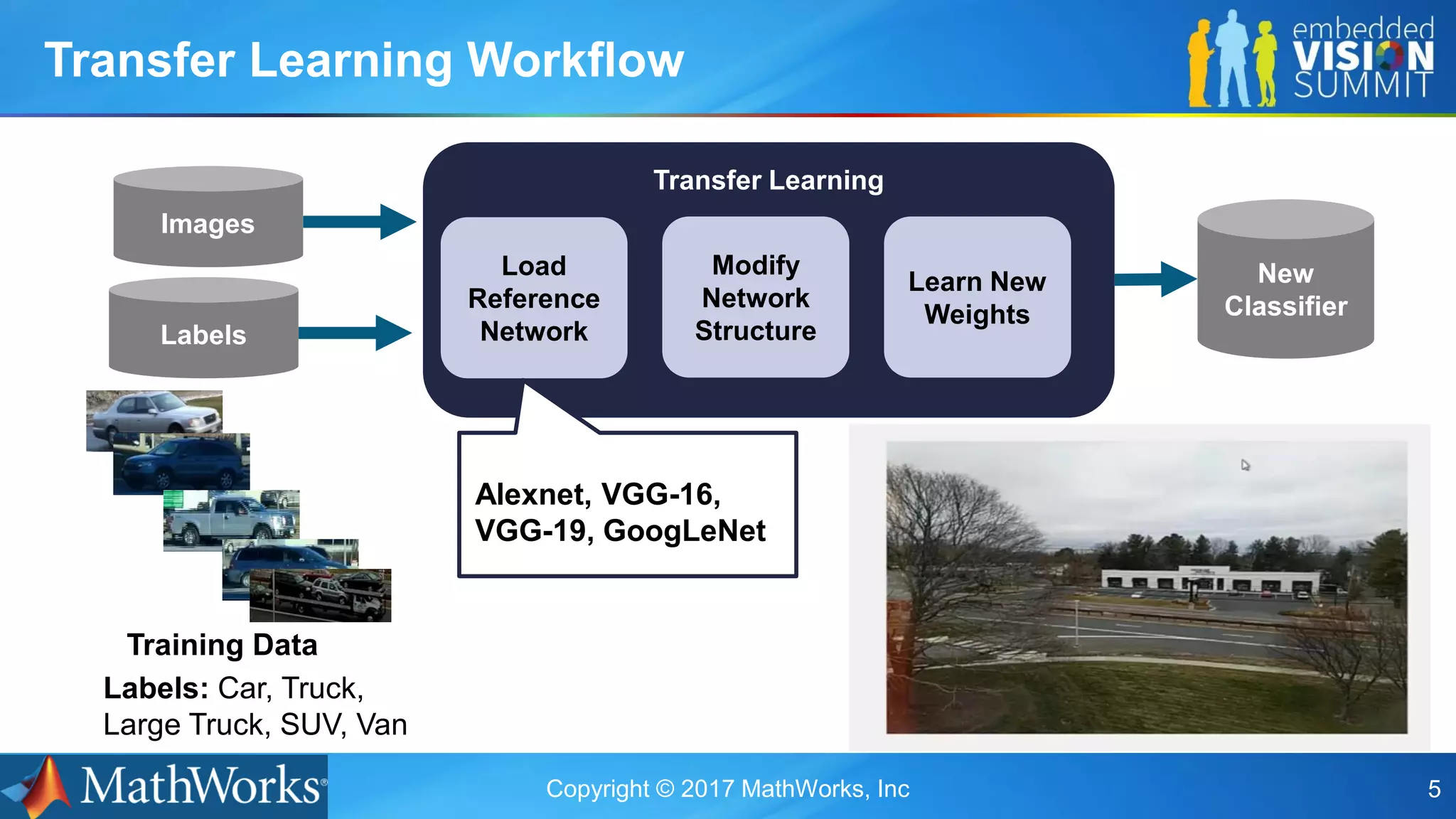

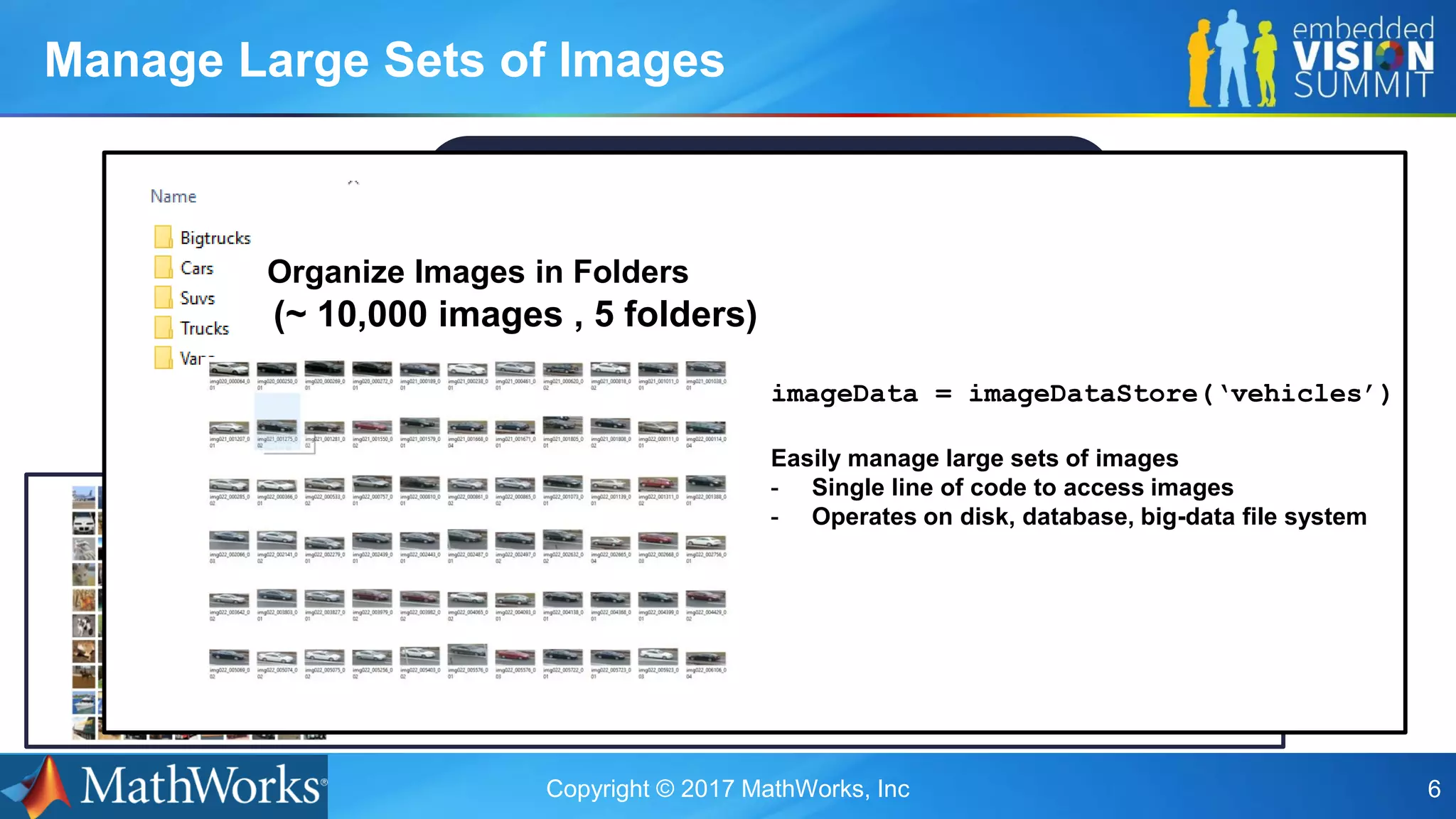

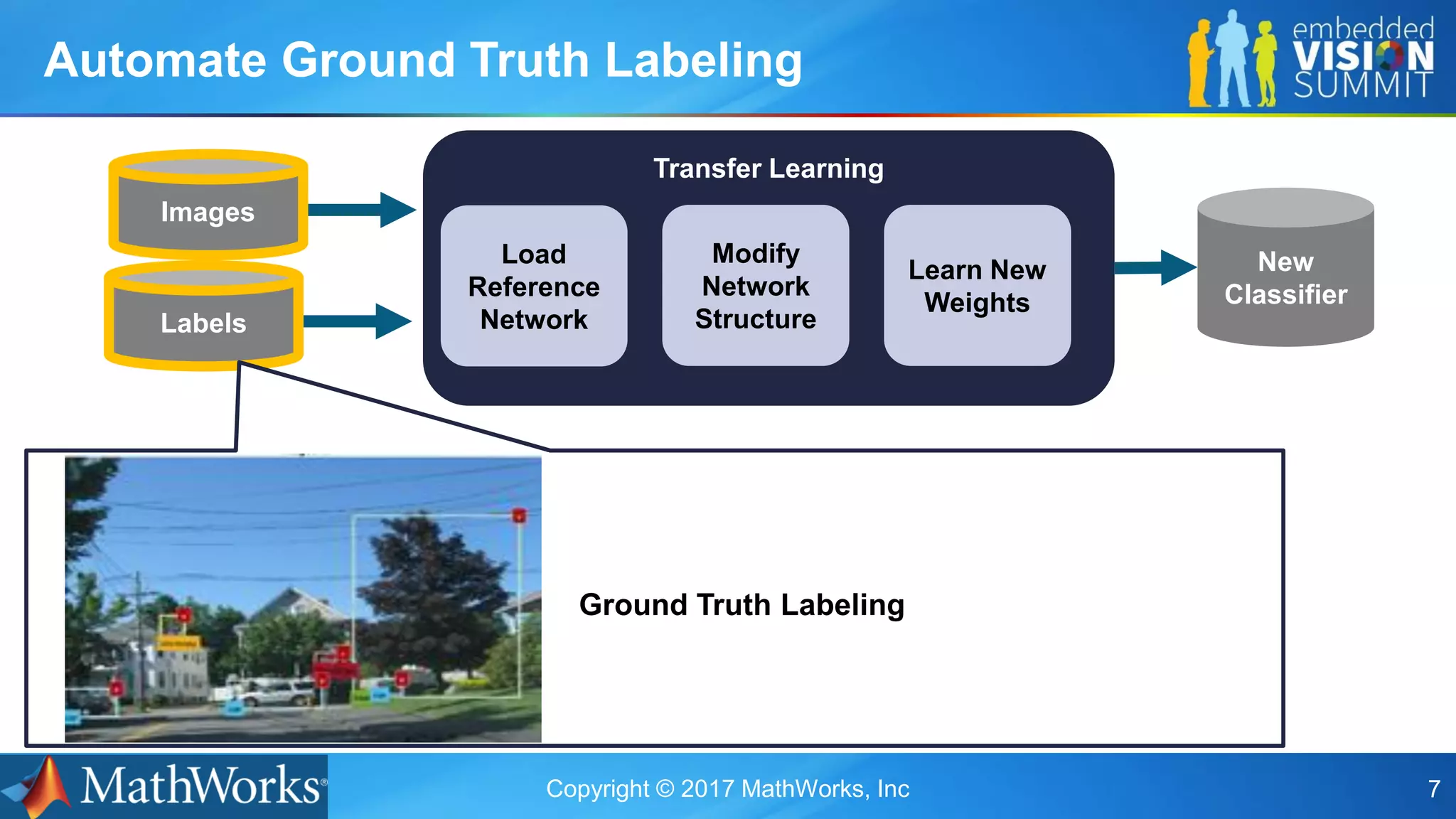

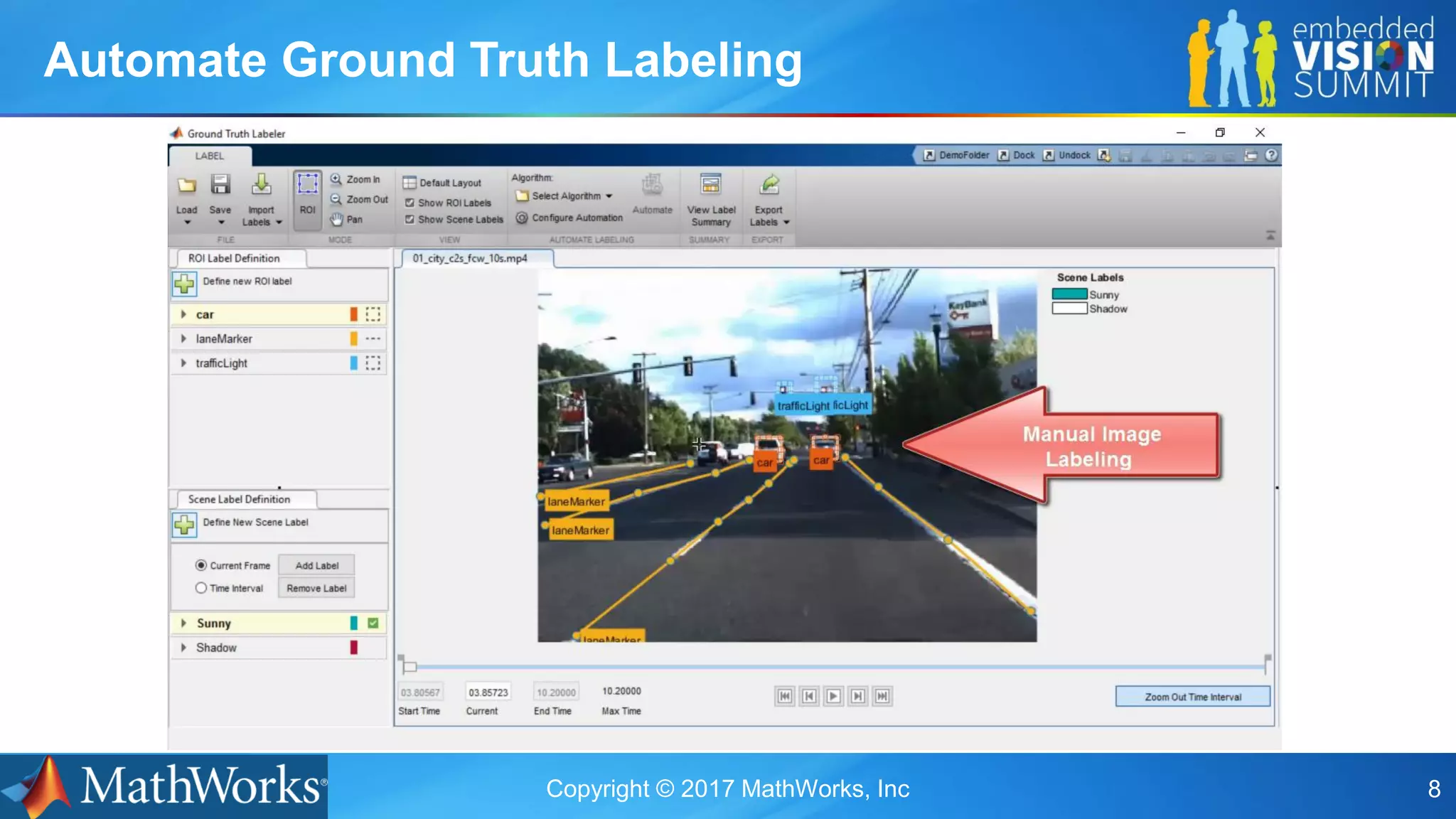

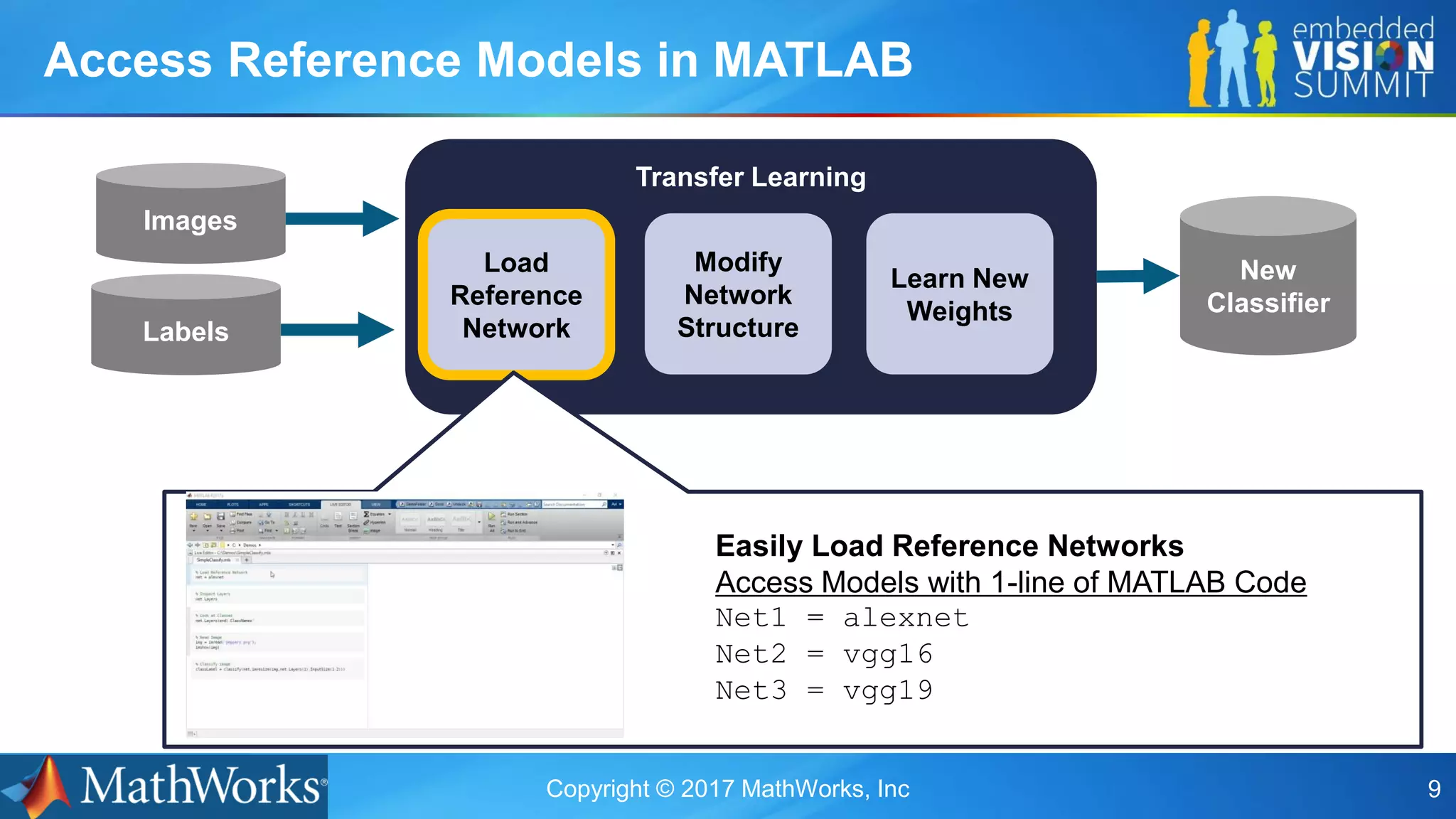

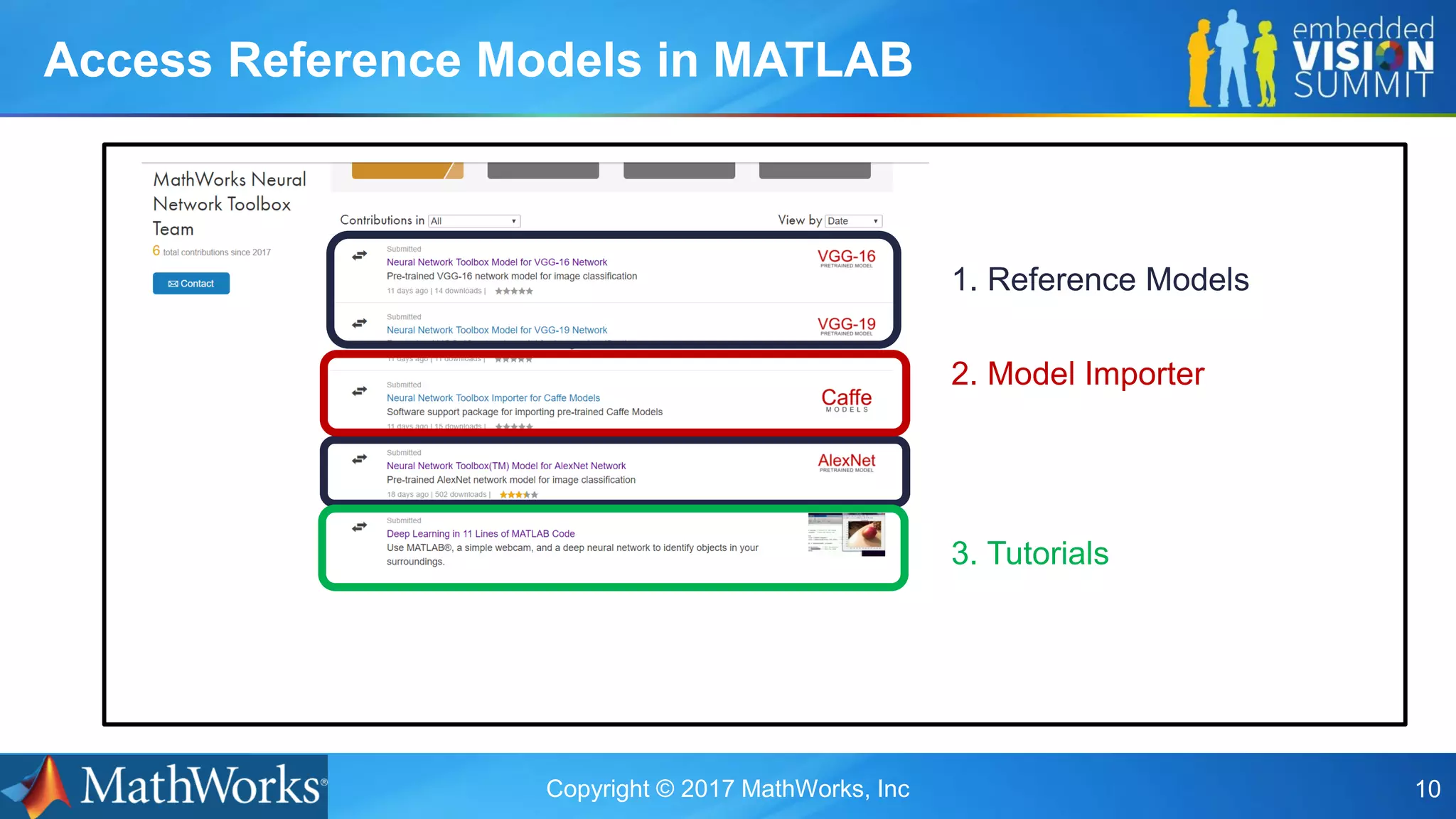

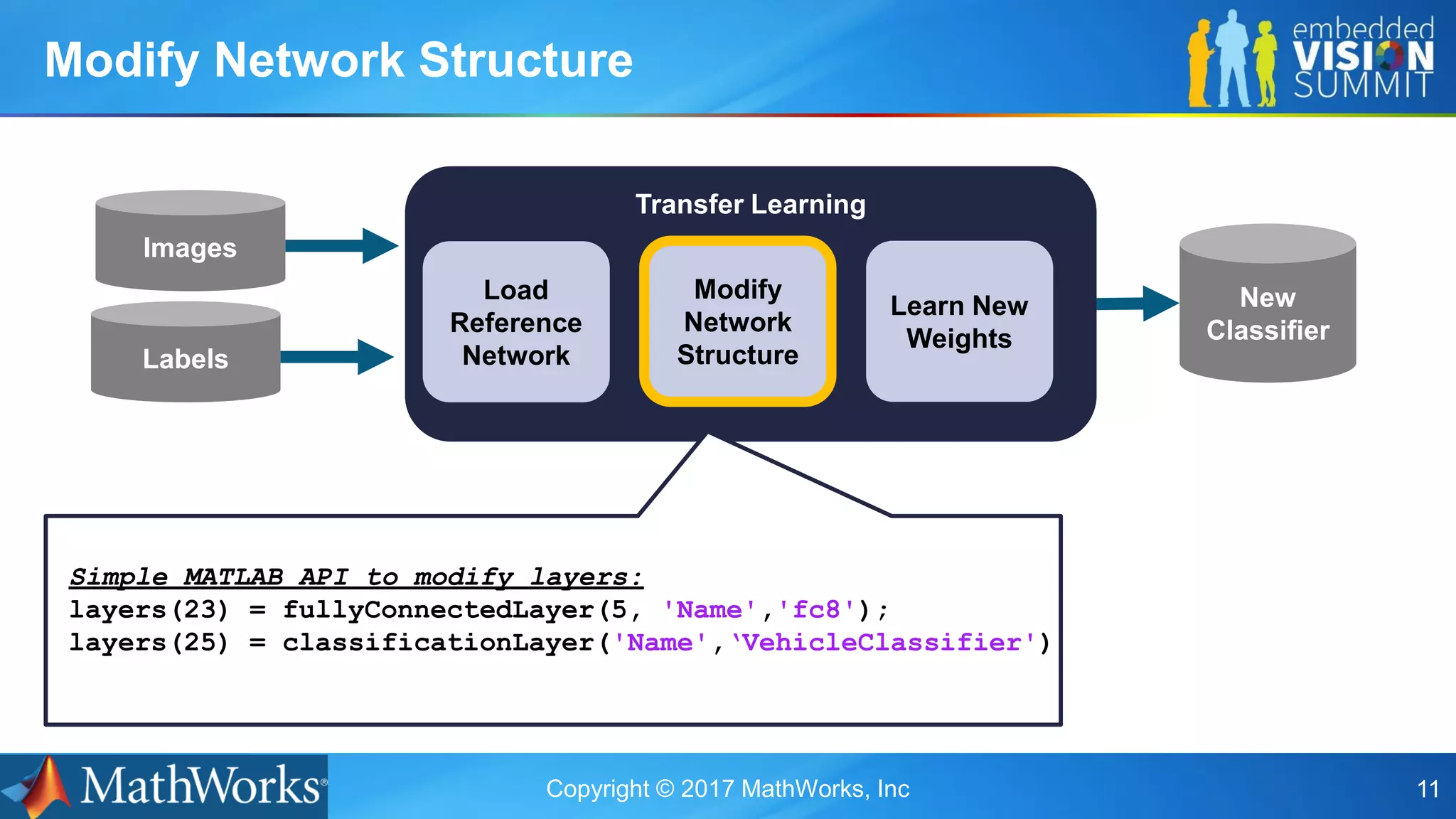

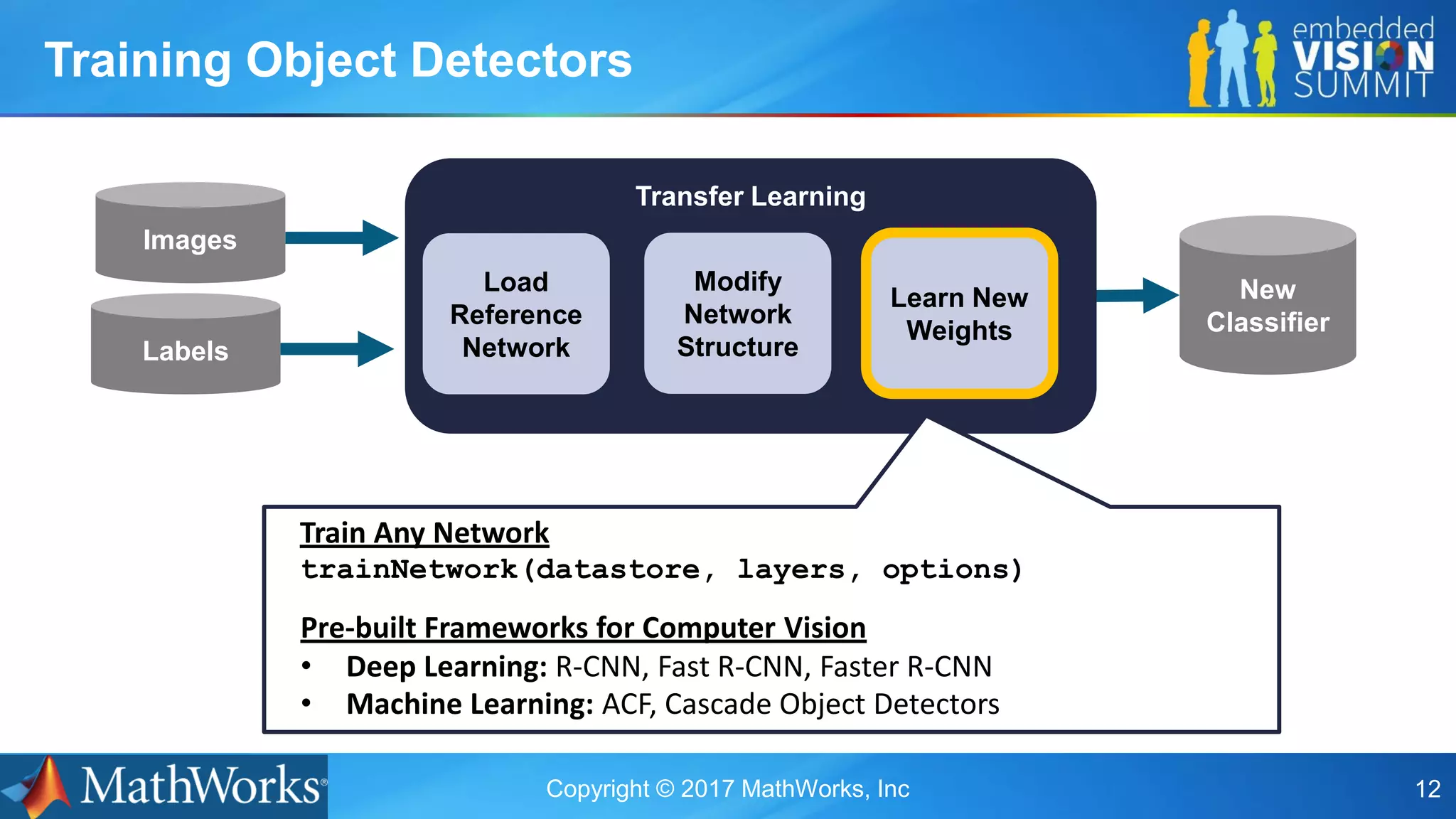

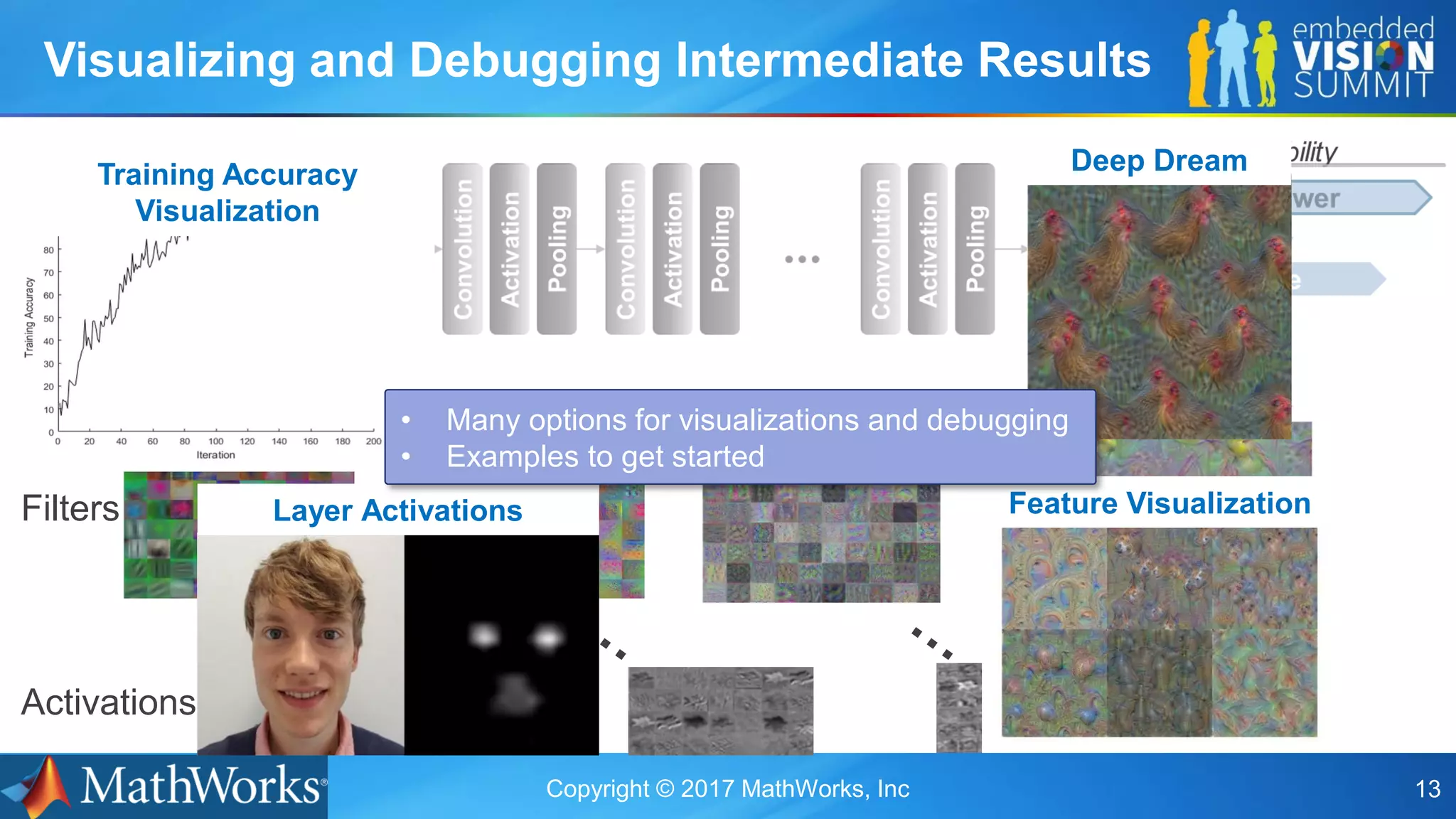

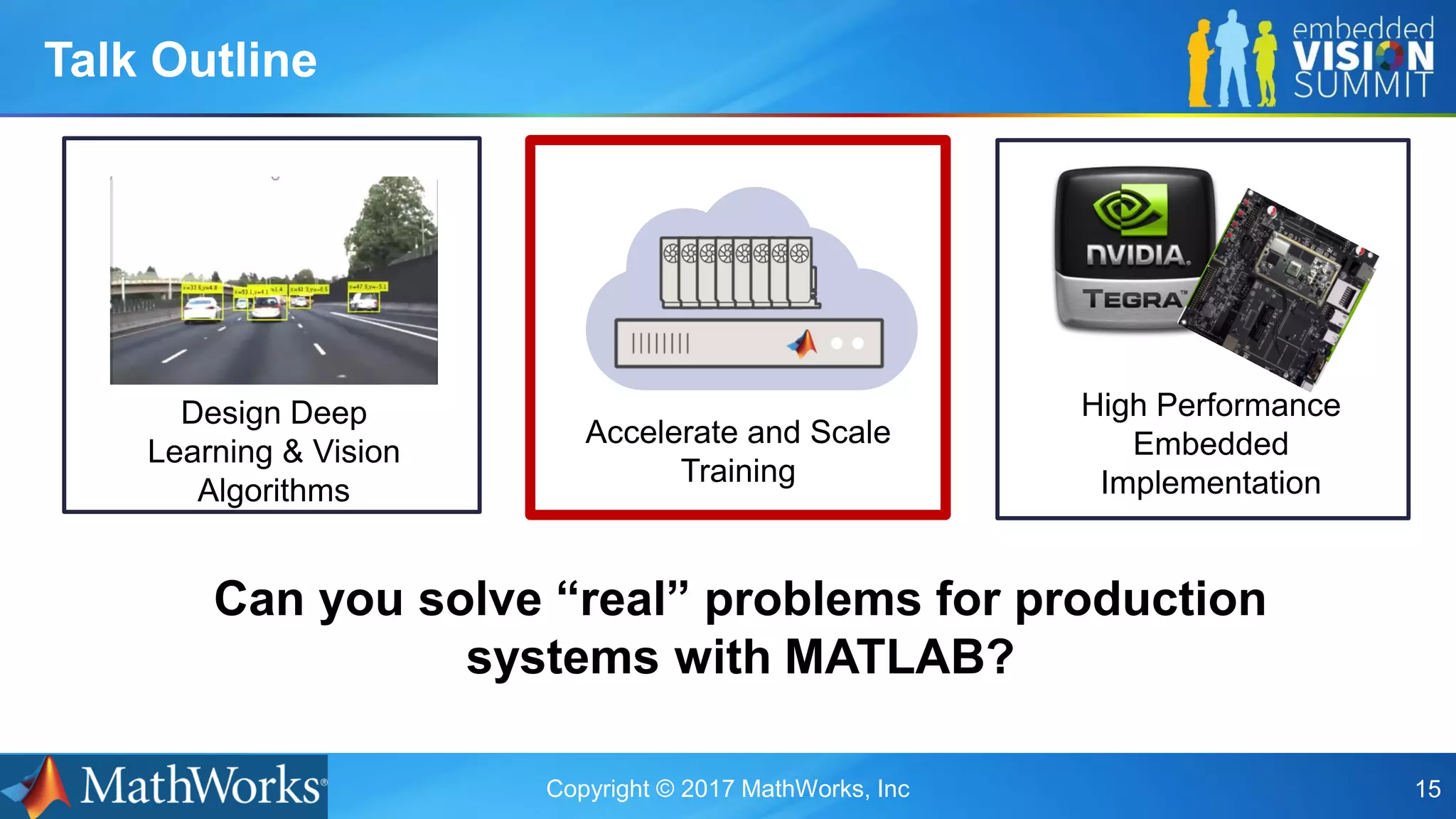

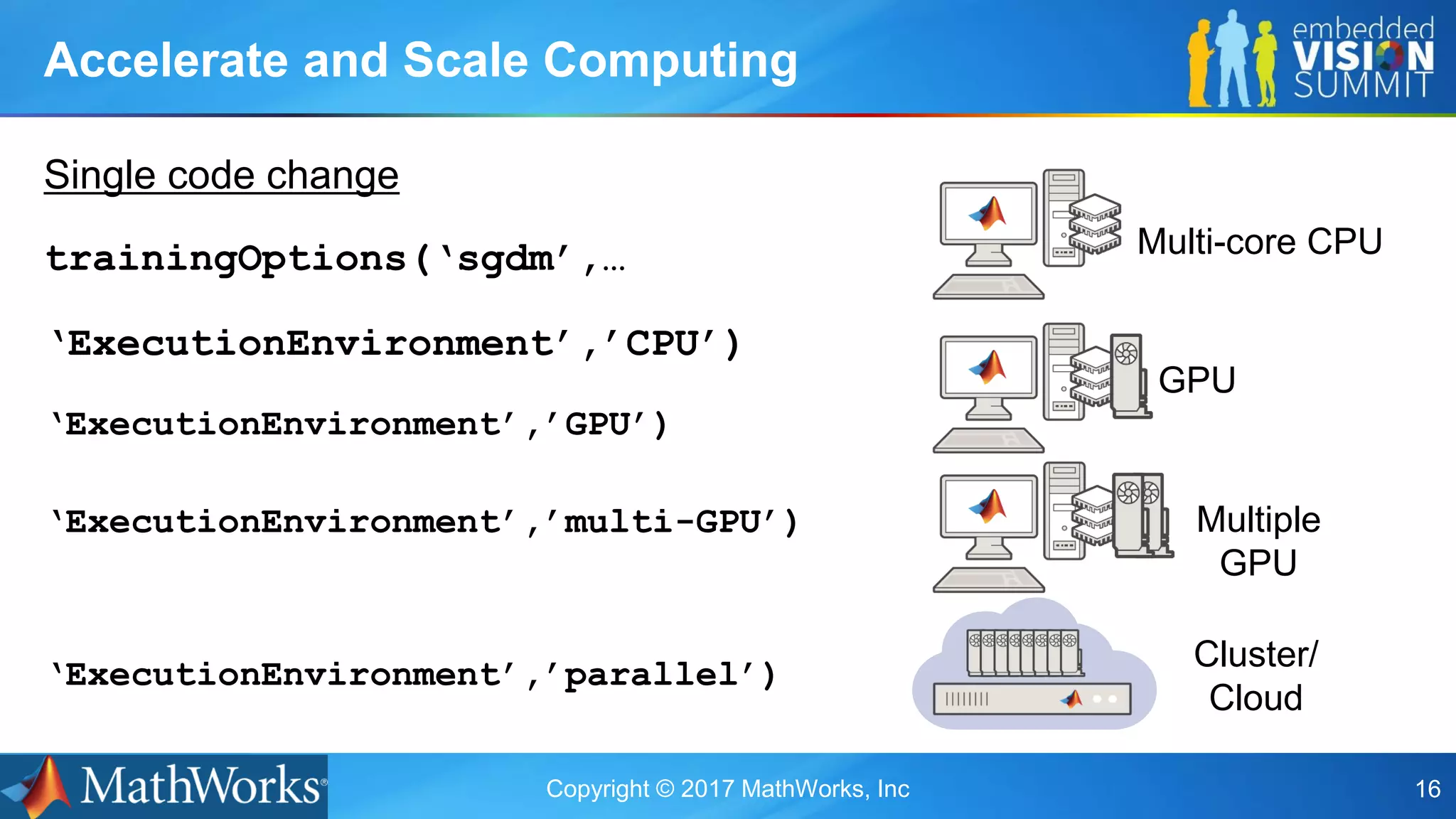

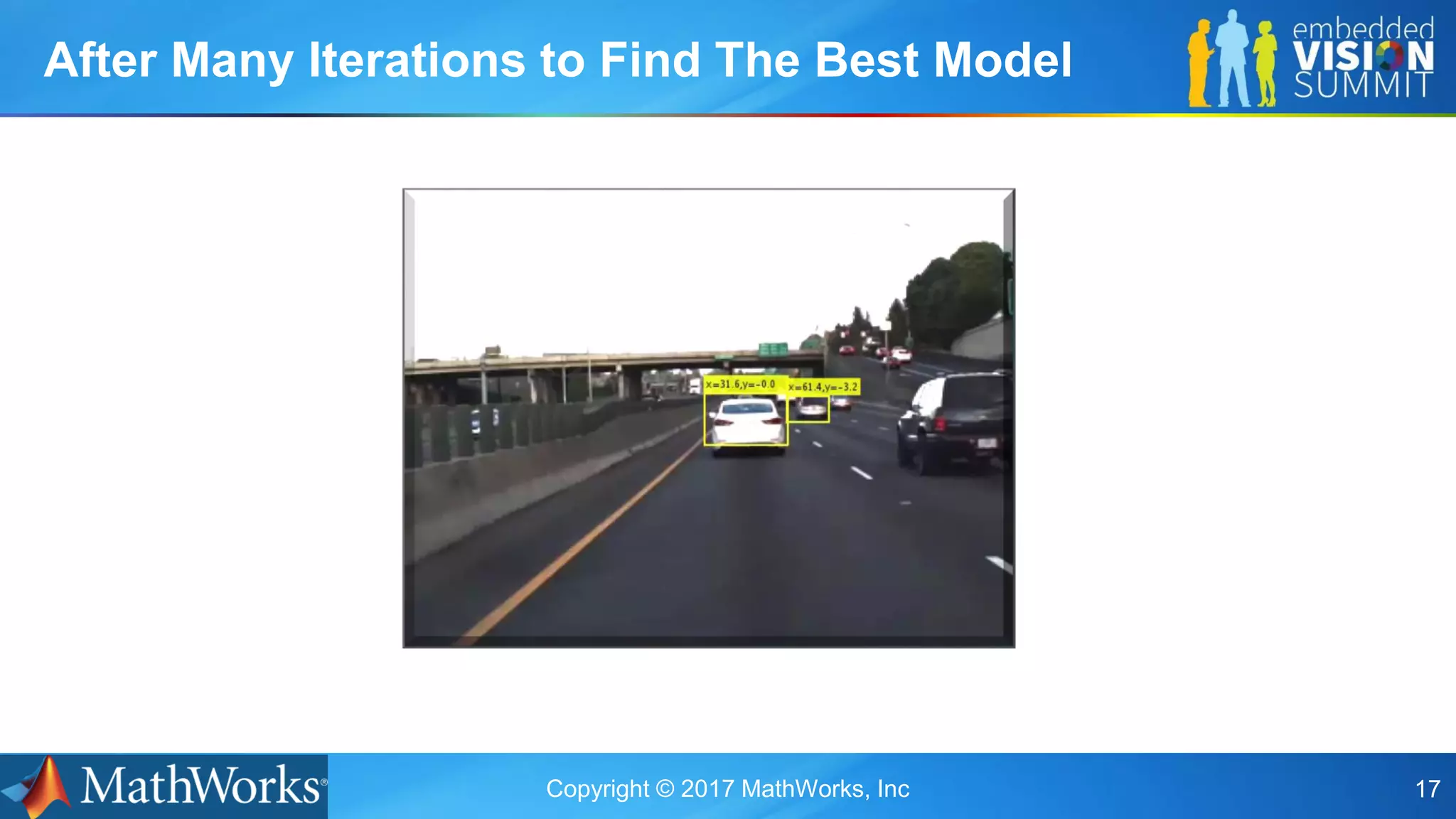

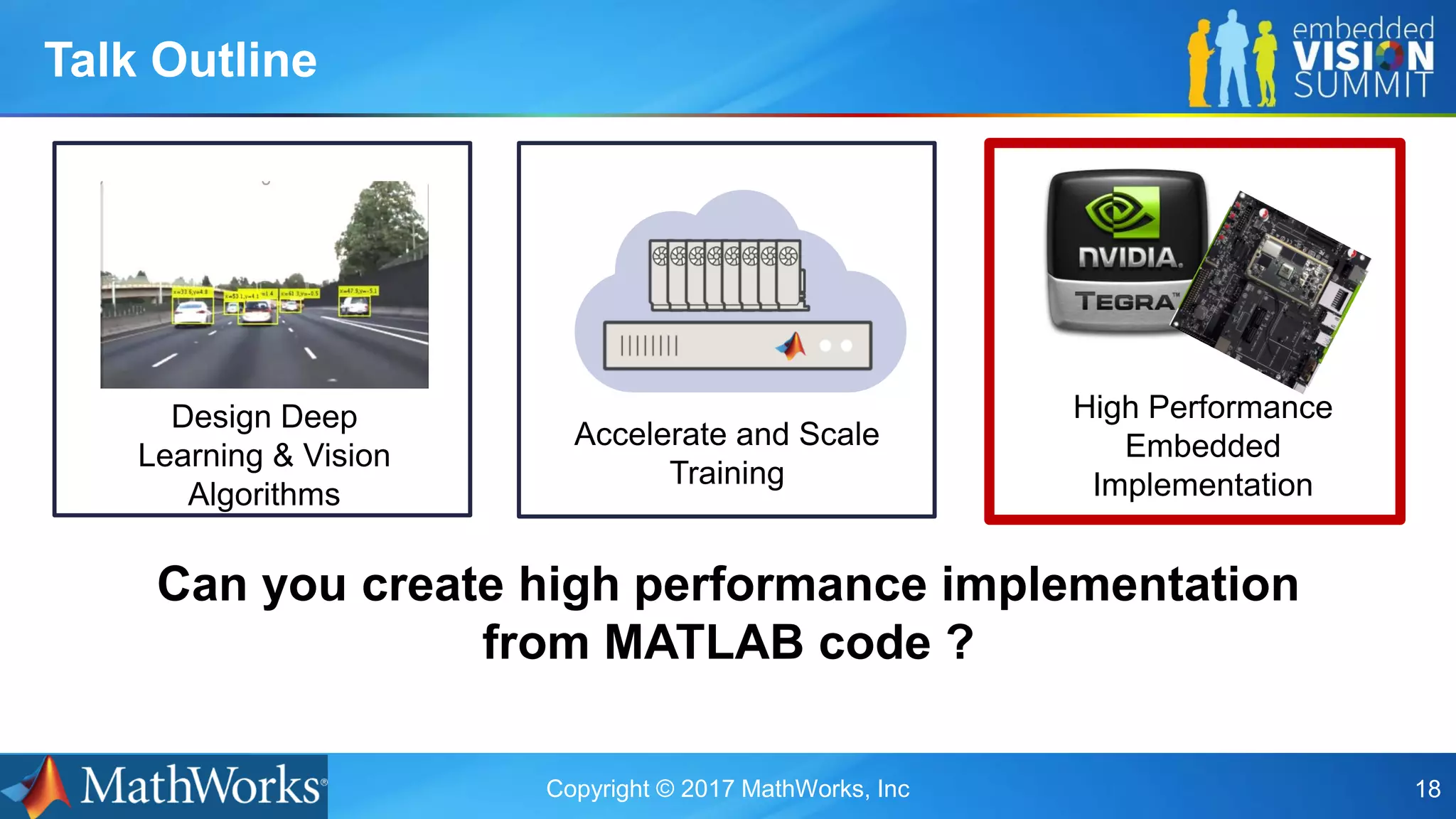

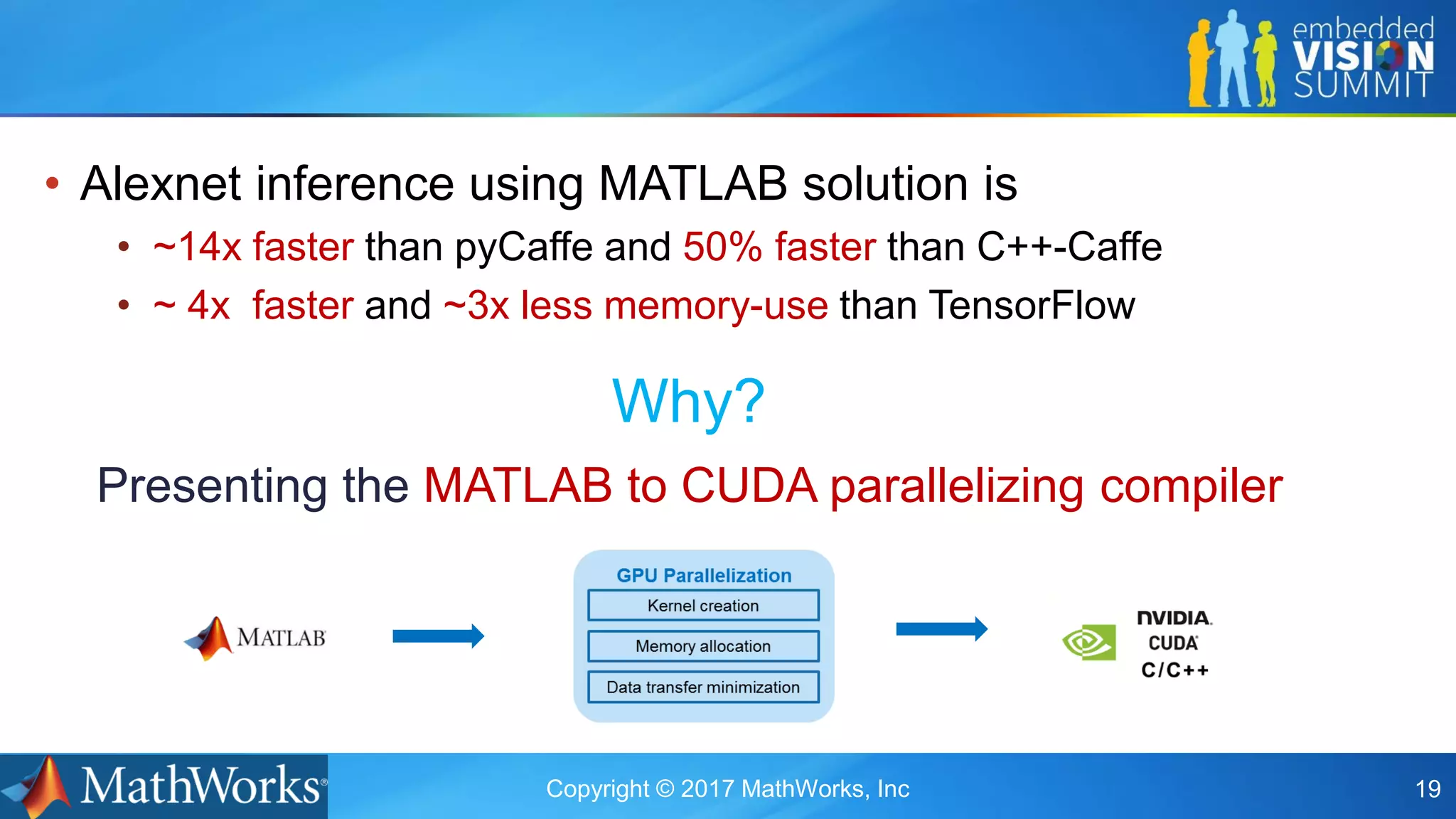

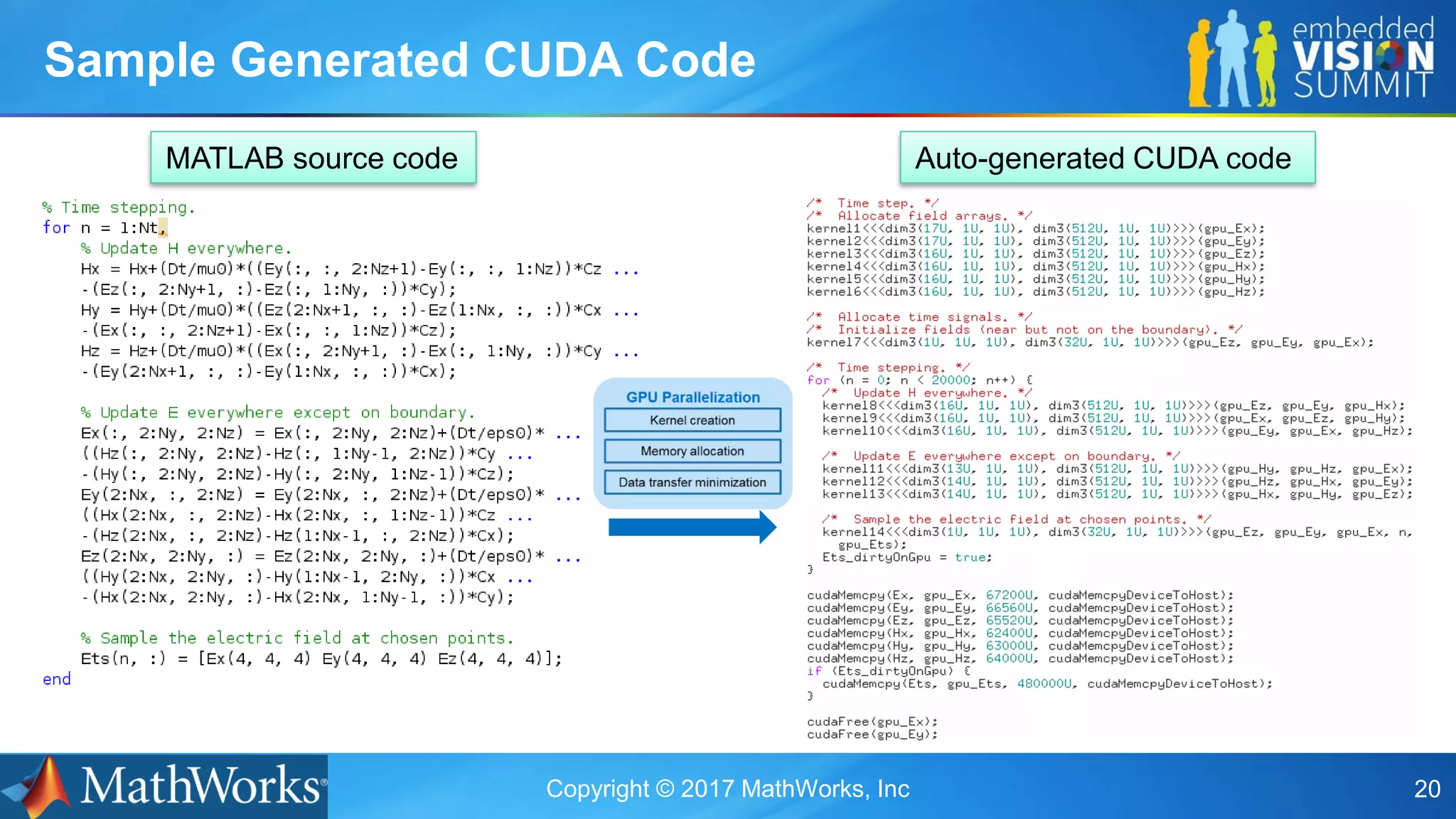

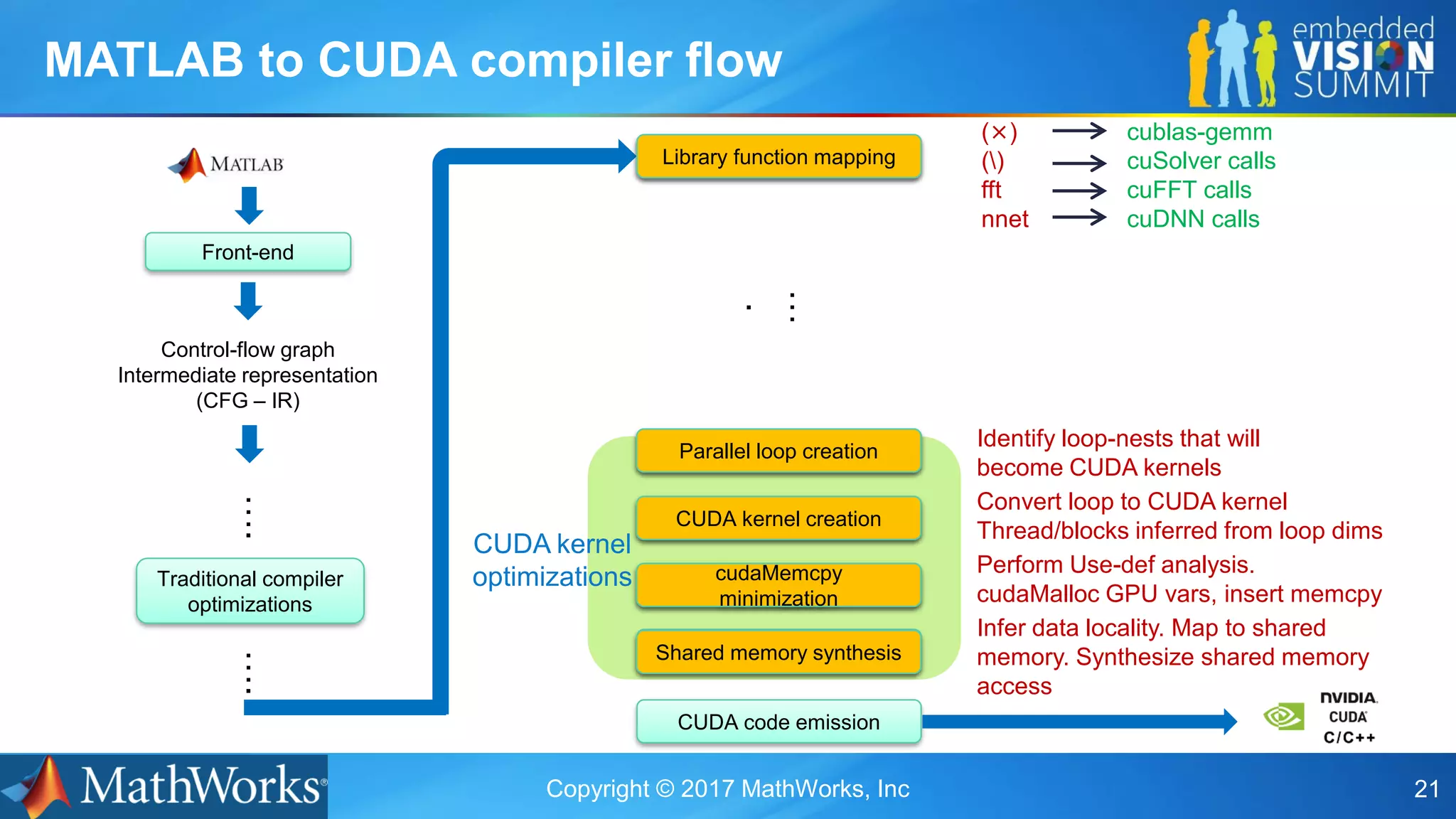

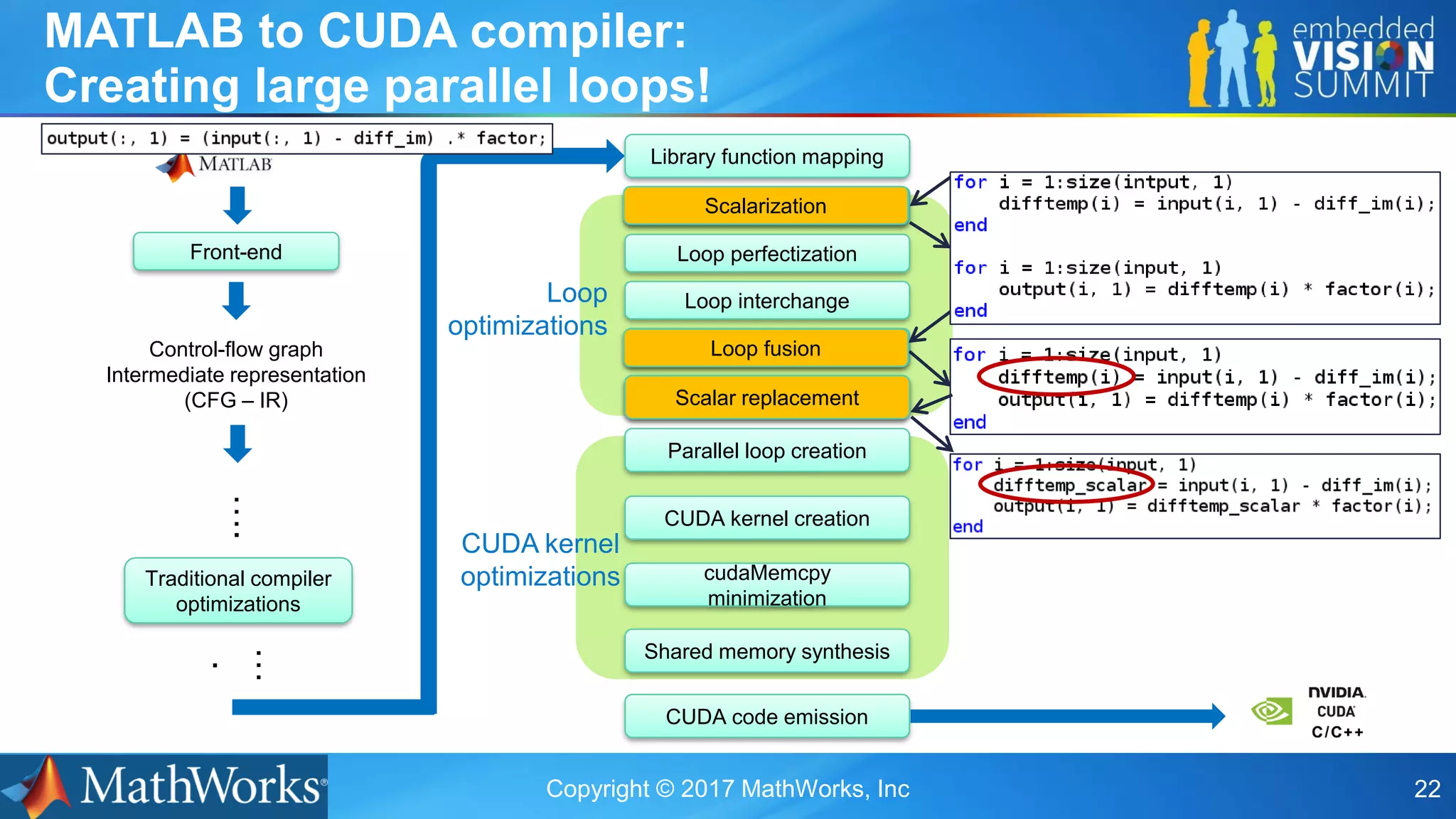

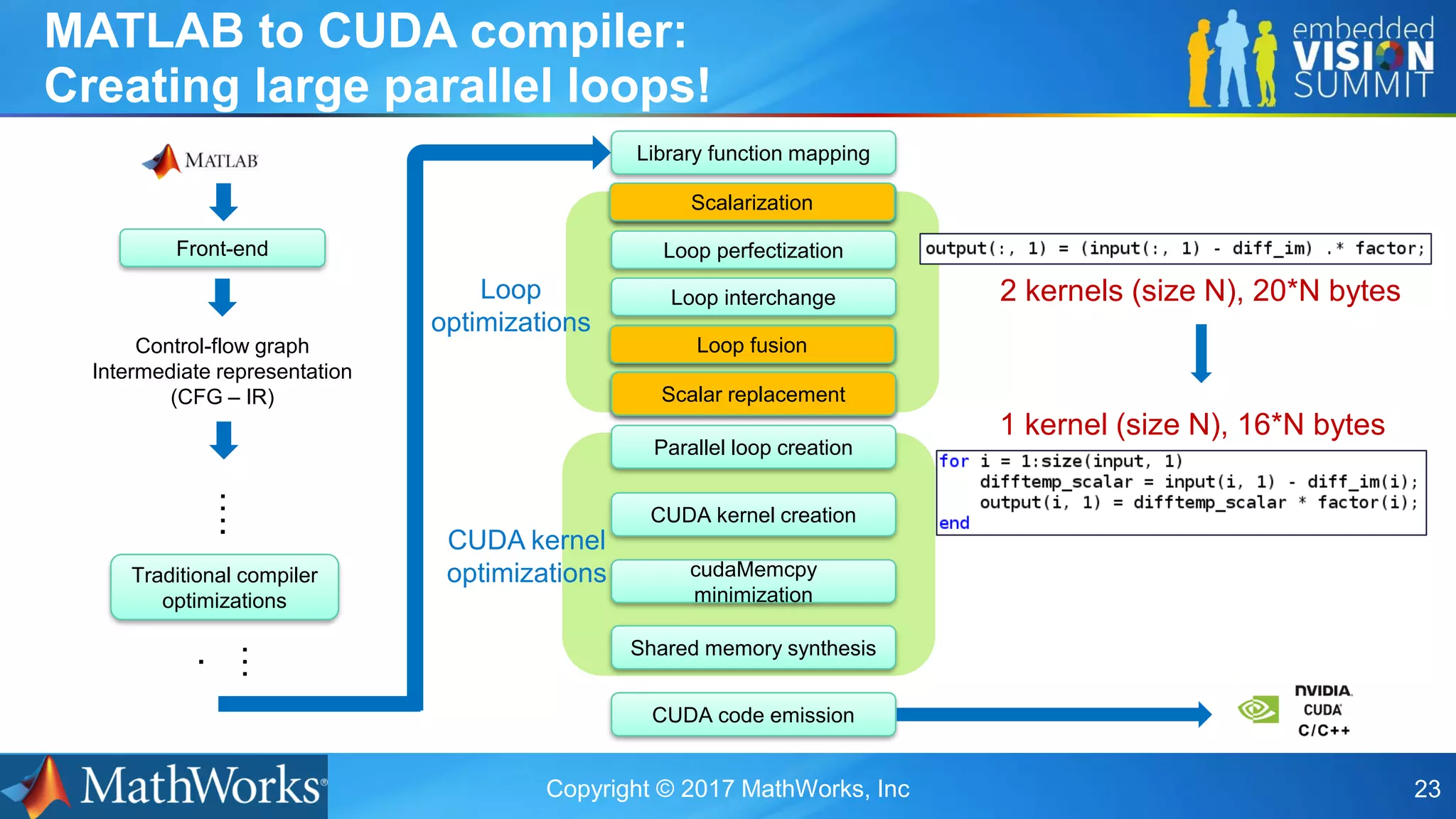

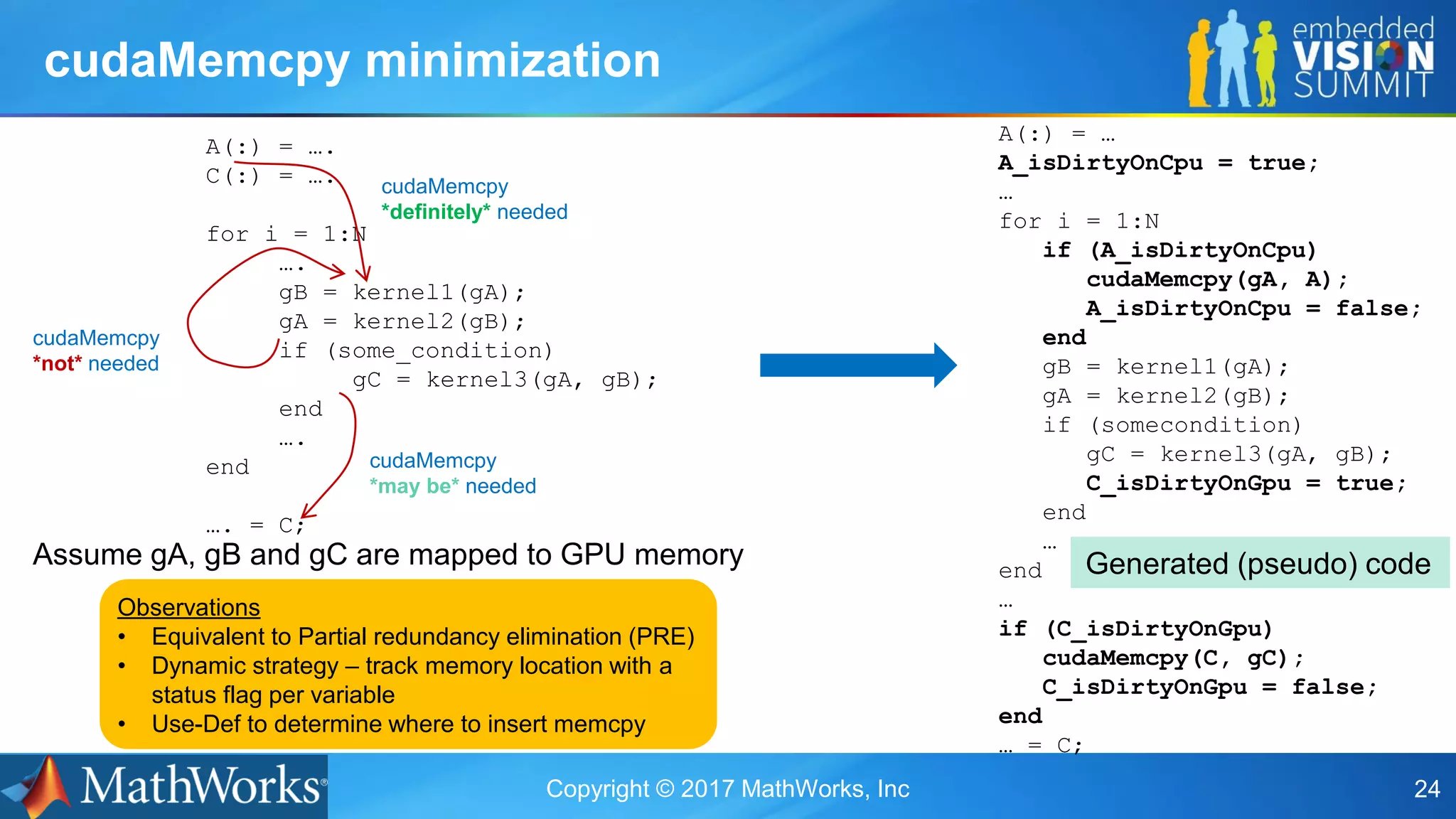

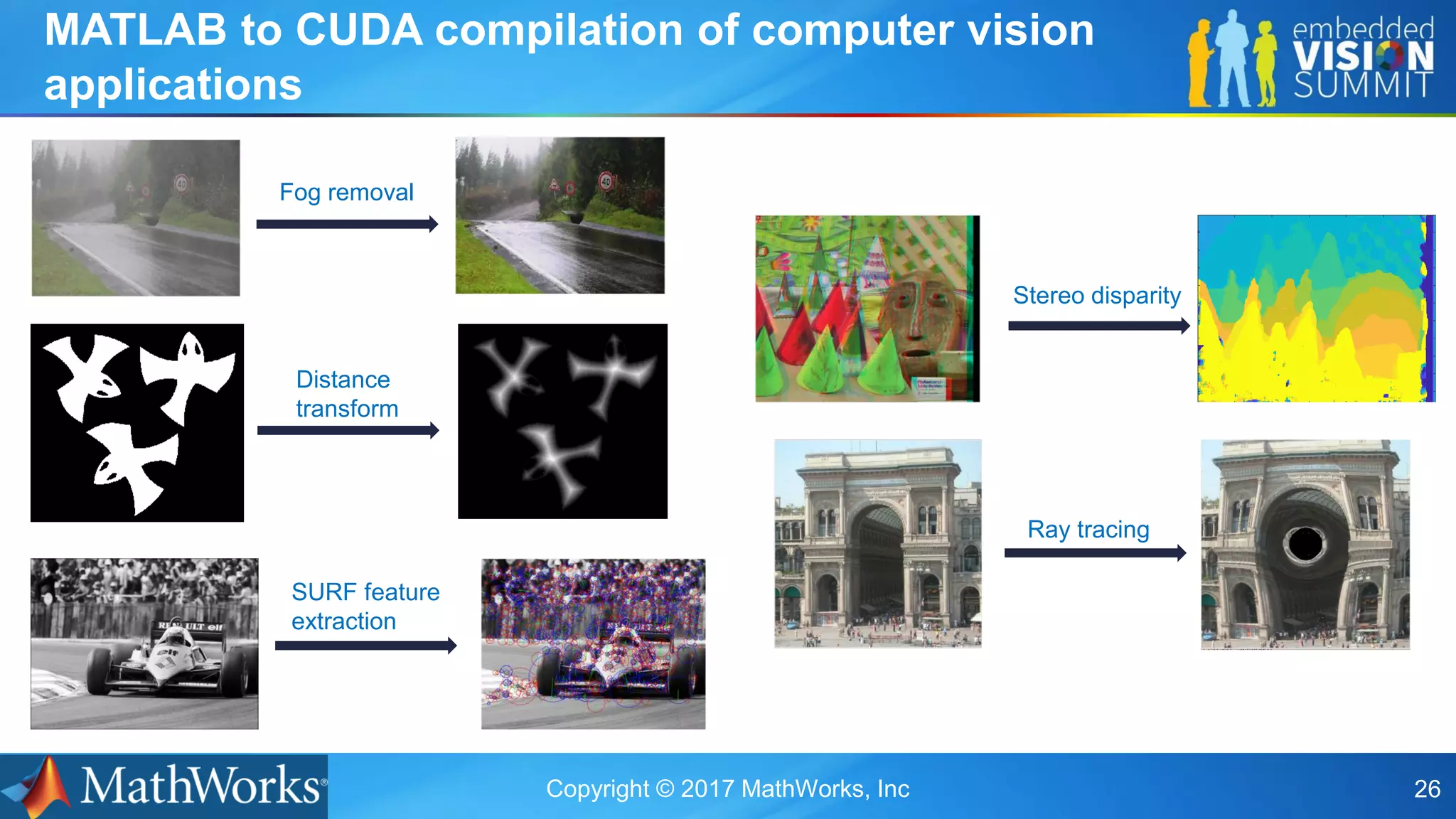

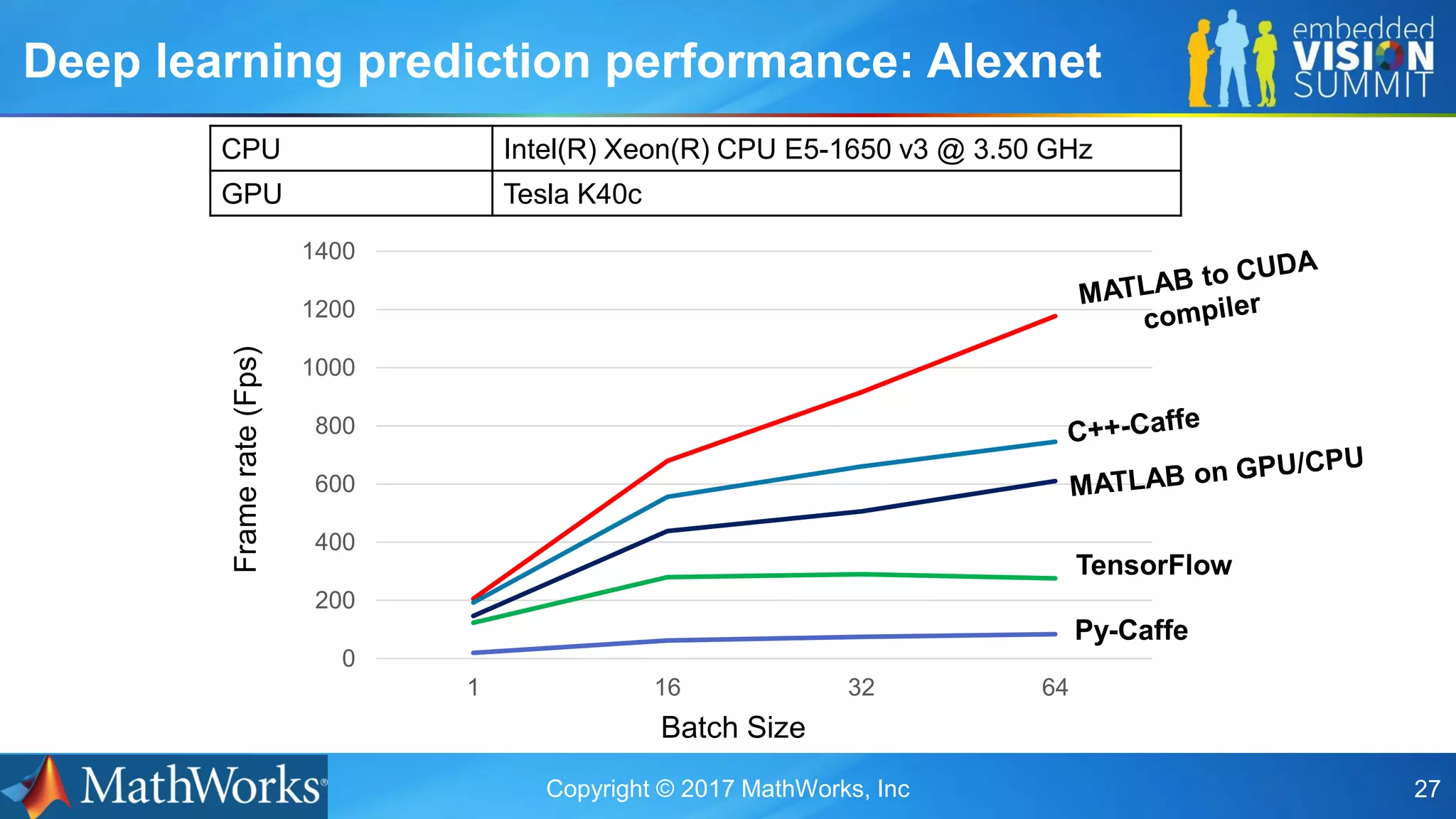

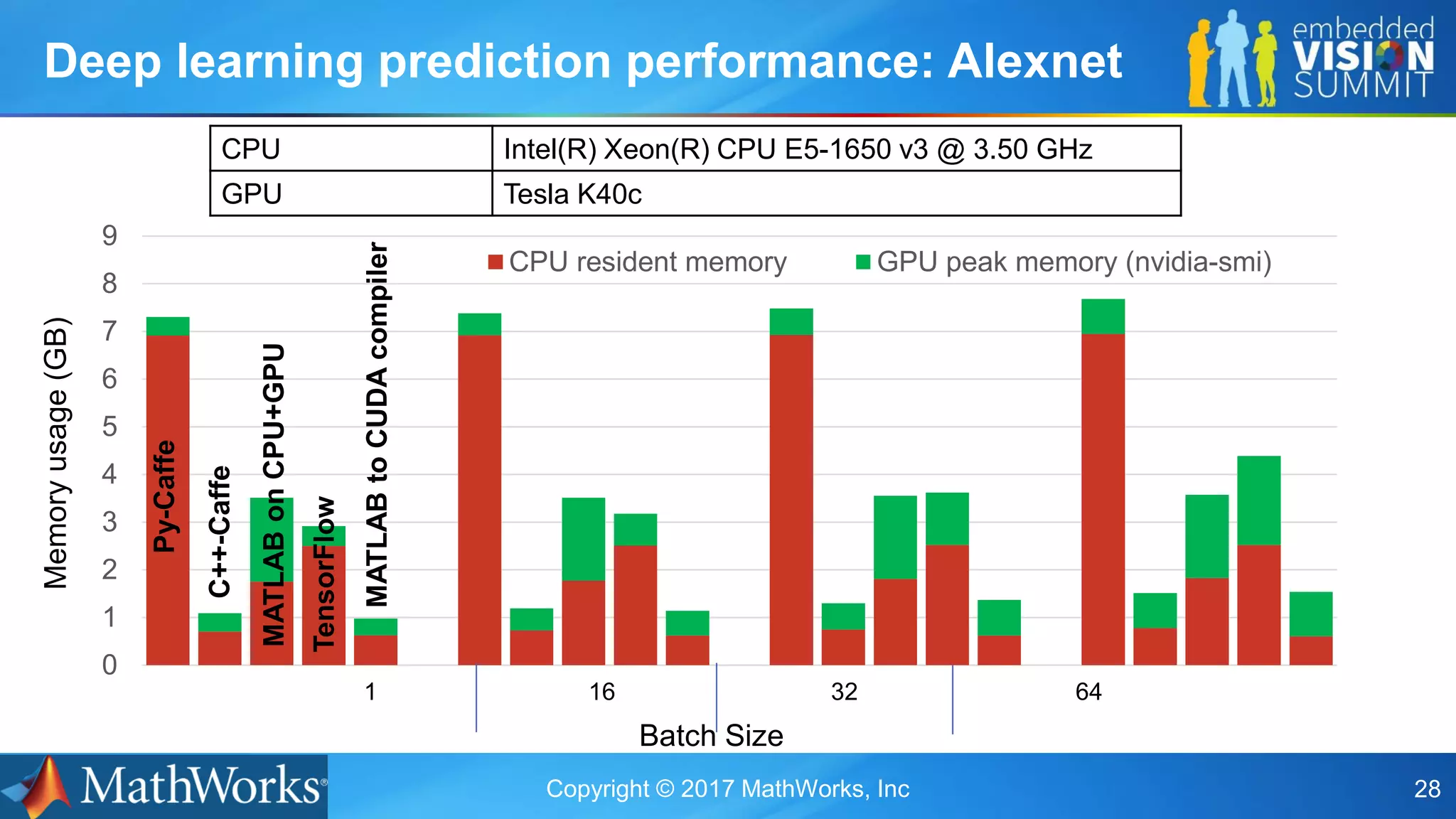

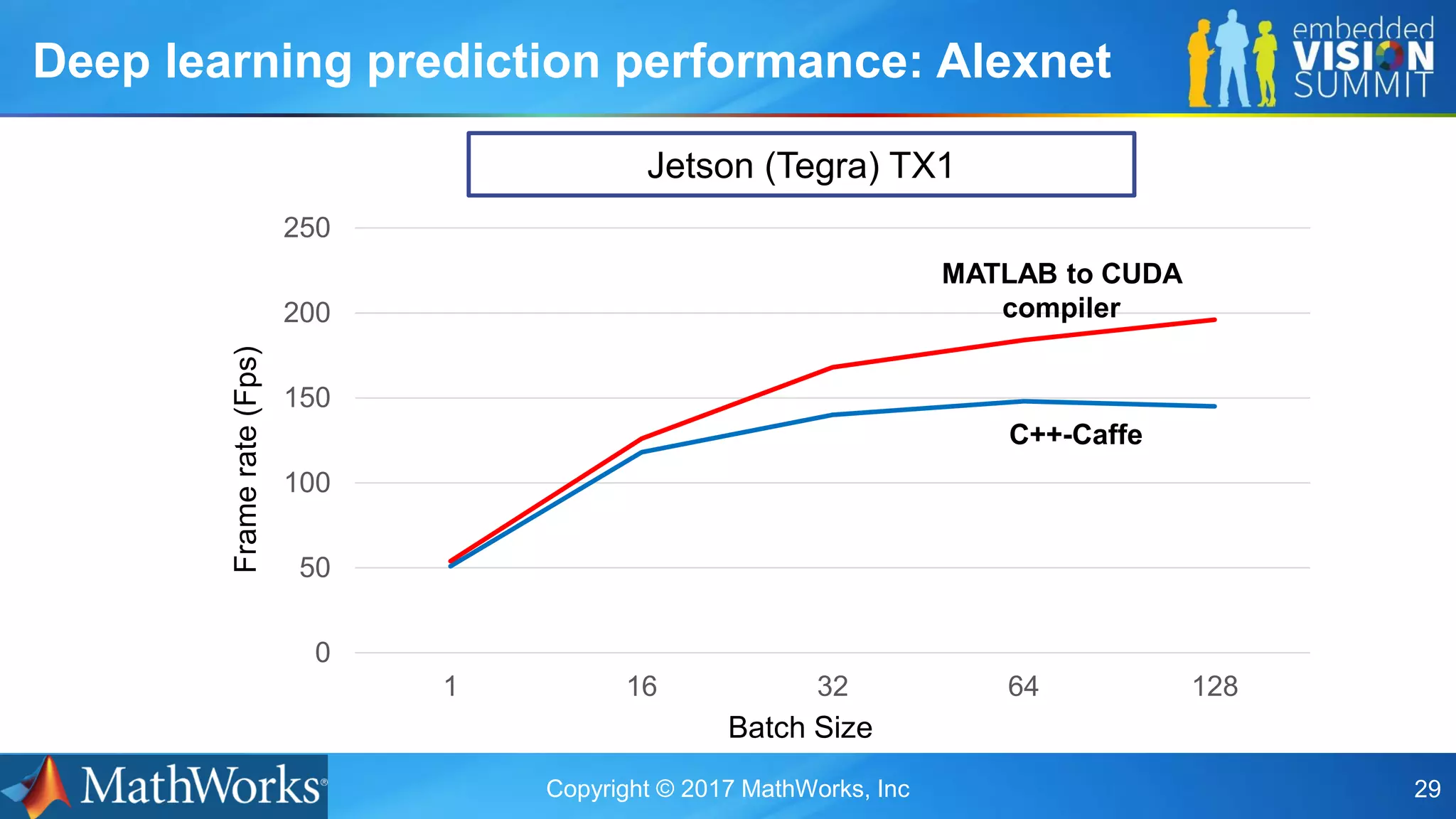

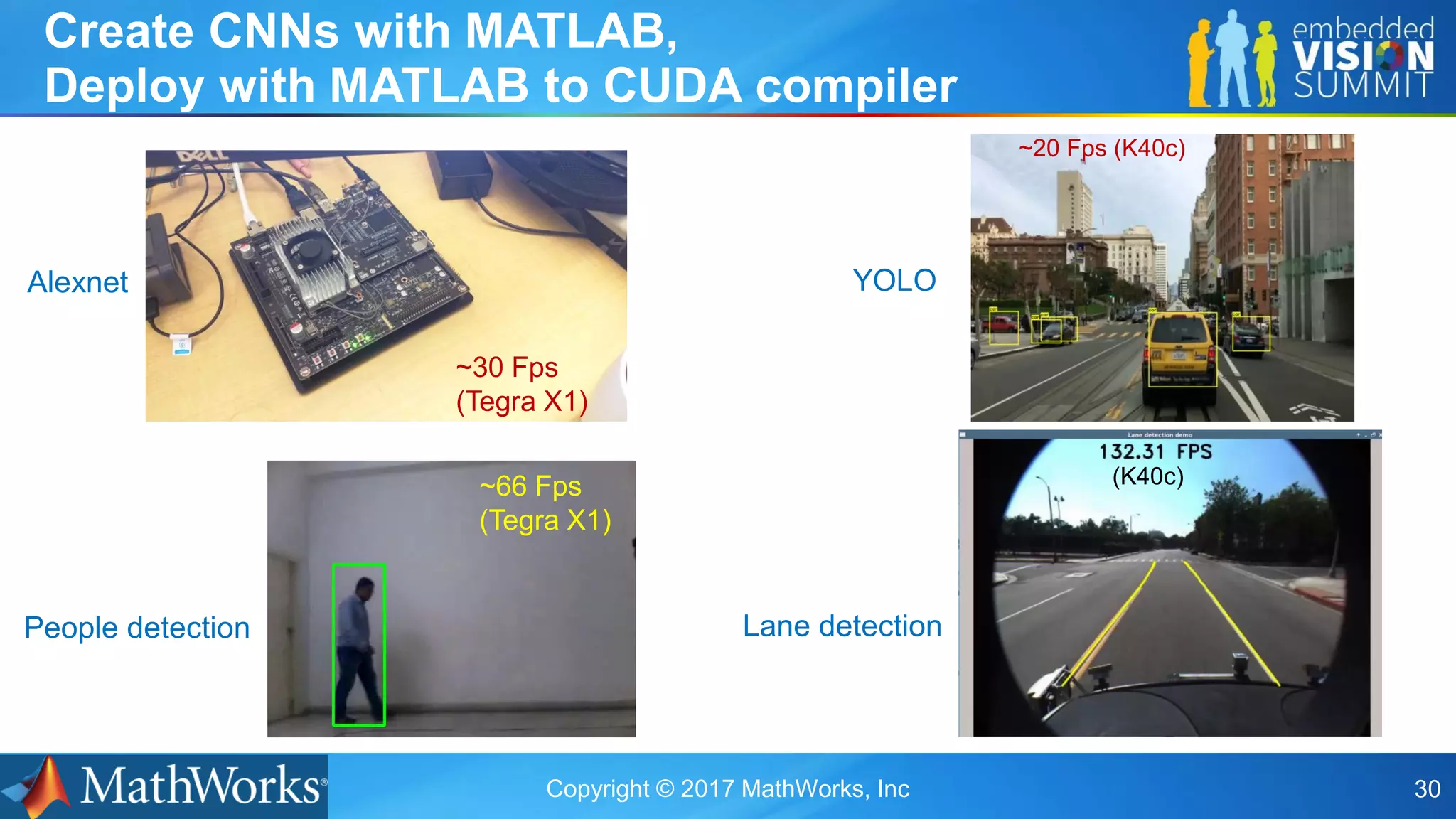

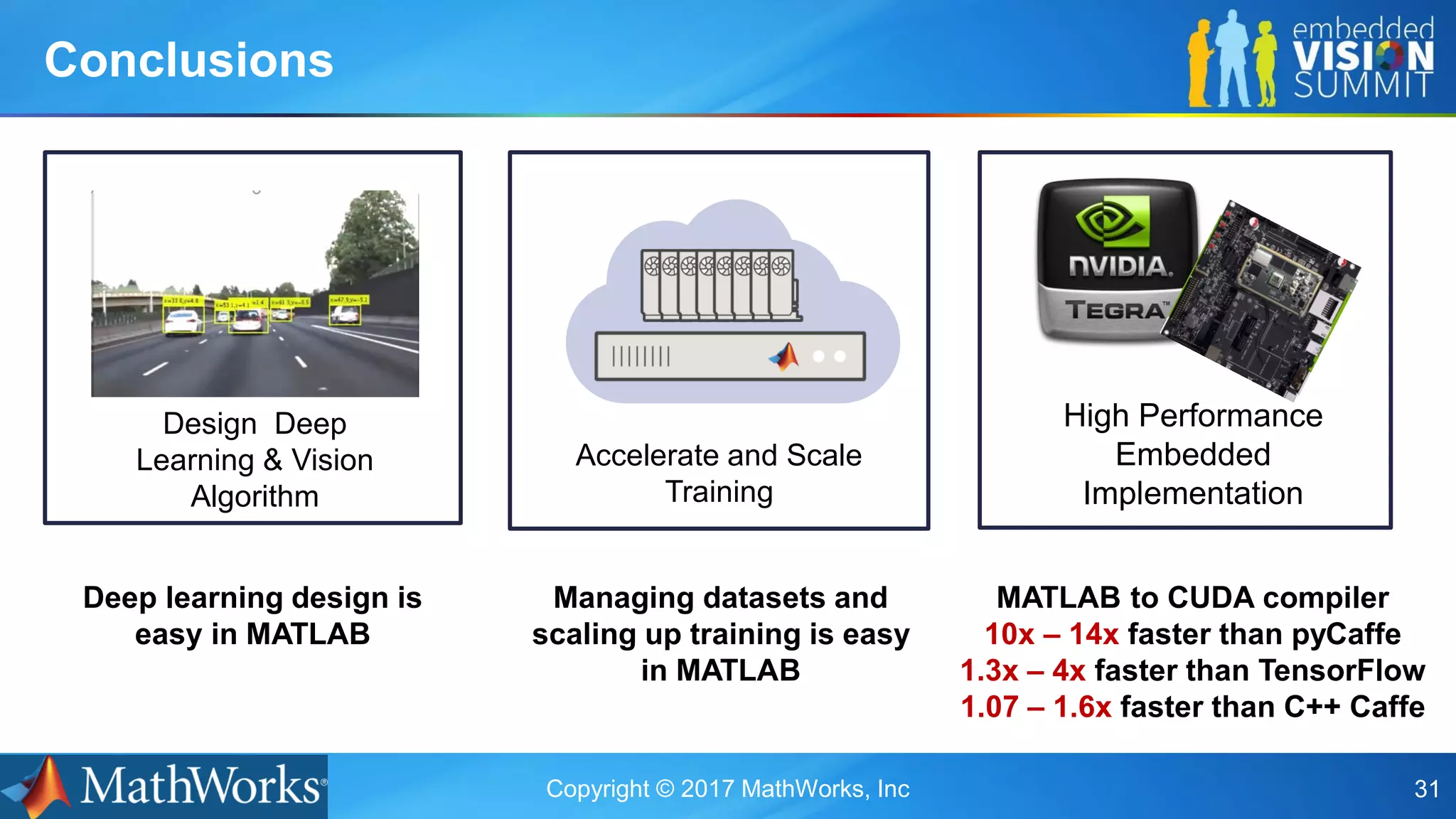

The document discusses developing deep learning and vision algorithms in MATLAB and deploying them to embedded GPUs. It highlights features for managing large image sets and automating image labeling. It emphasizes high-performance embedded implementations and showcases MATLAB-to-CUDA compilation, which it reports as significantly faster than other frameworks. It also shows how reference models and the accompanying tools make it easy to train networks and modify their structure.