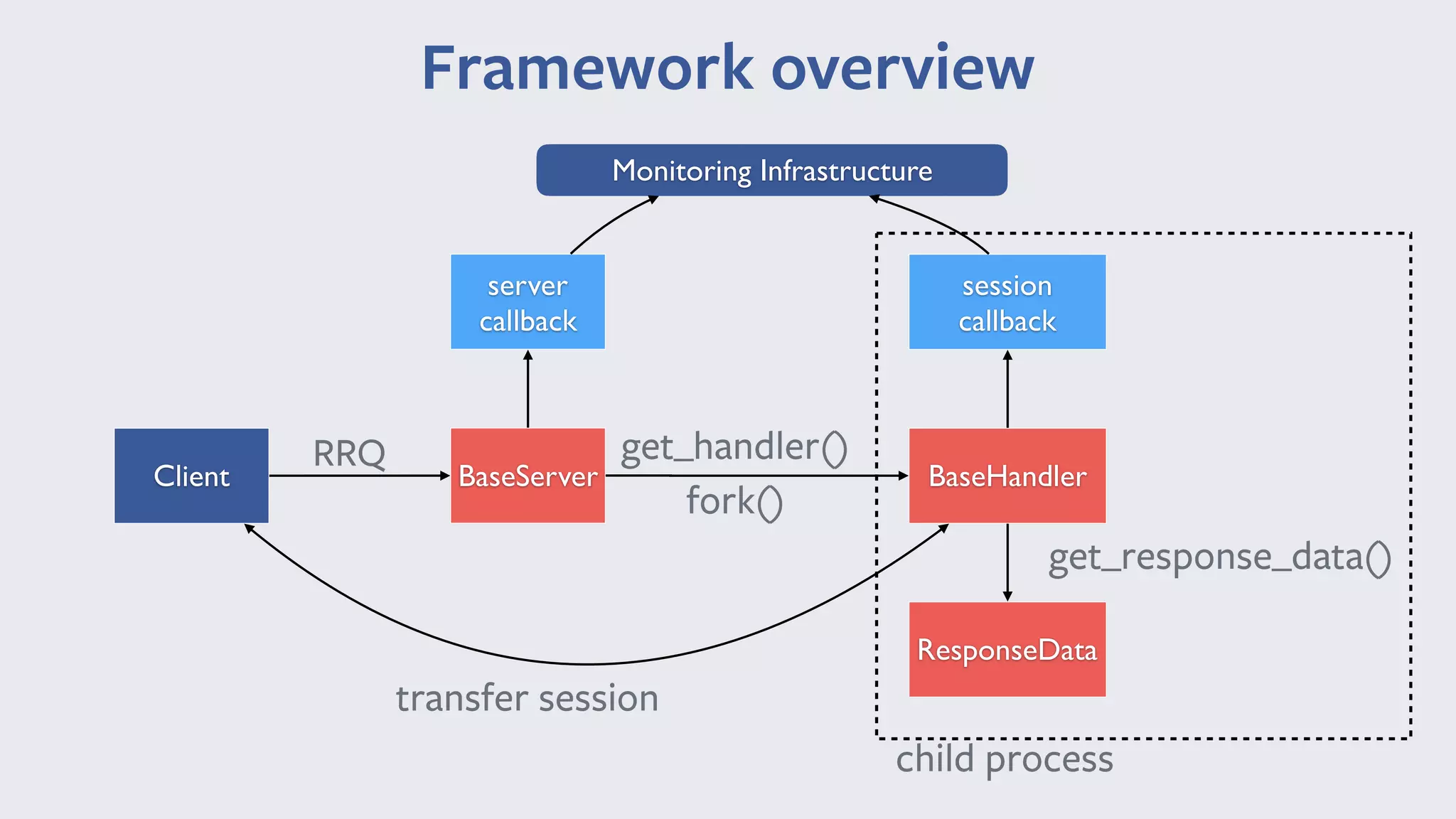

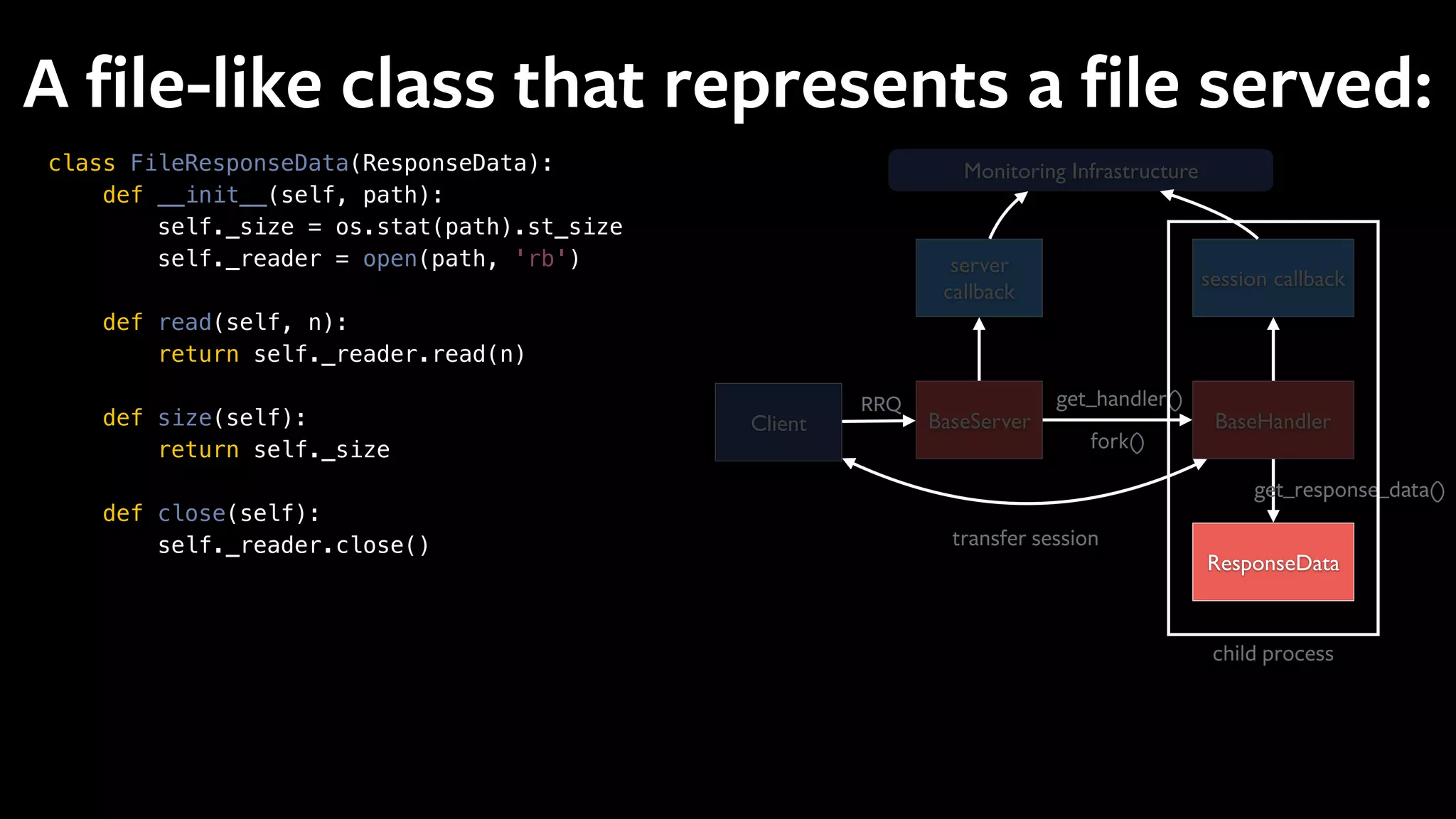

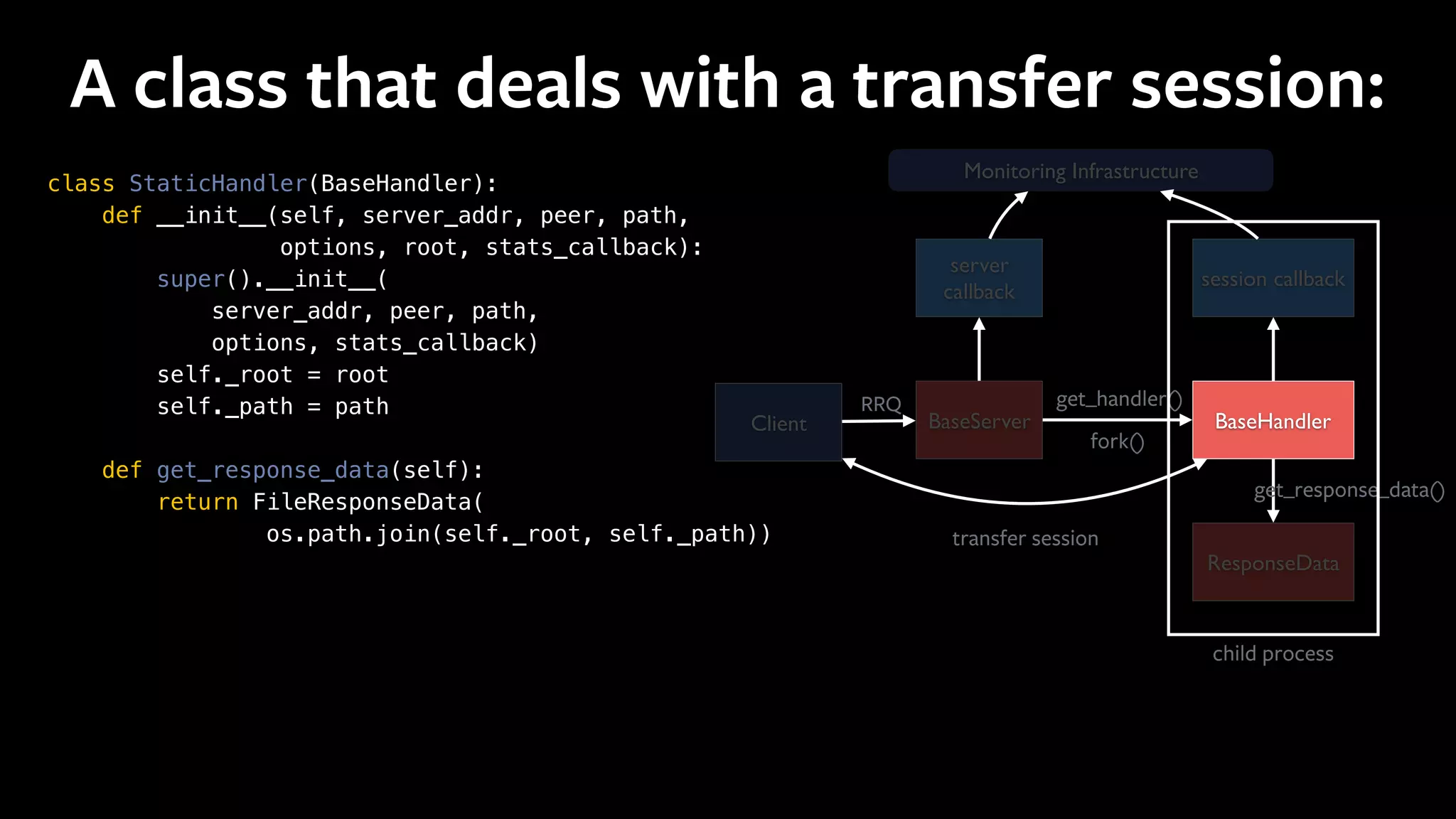

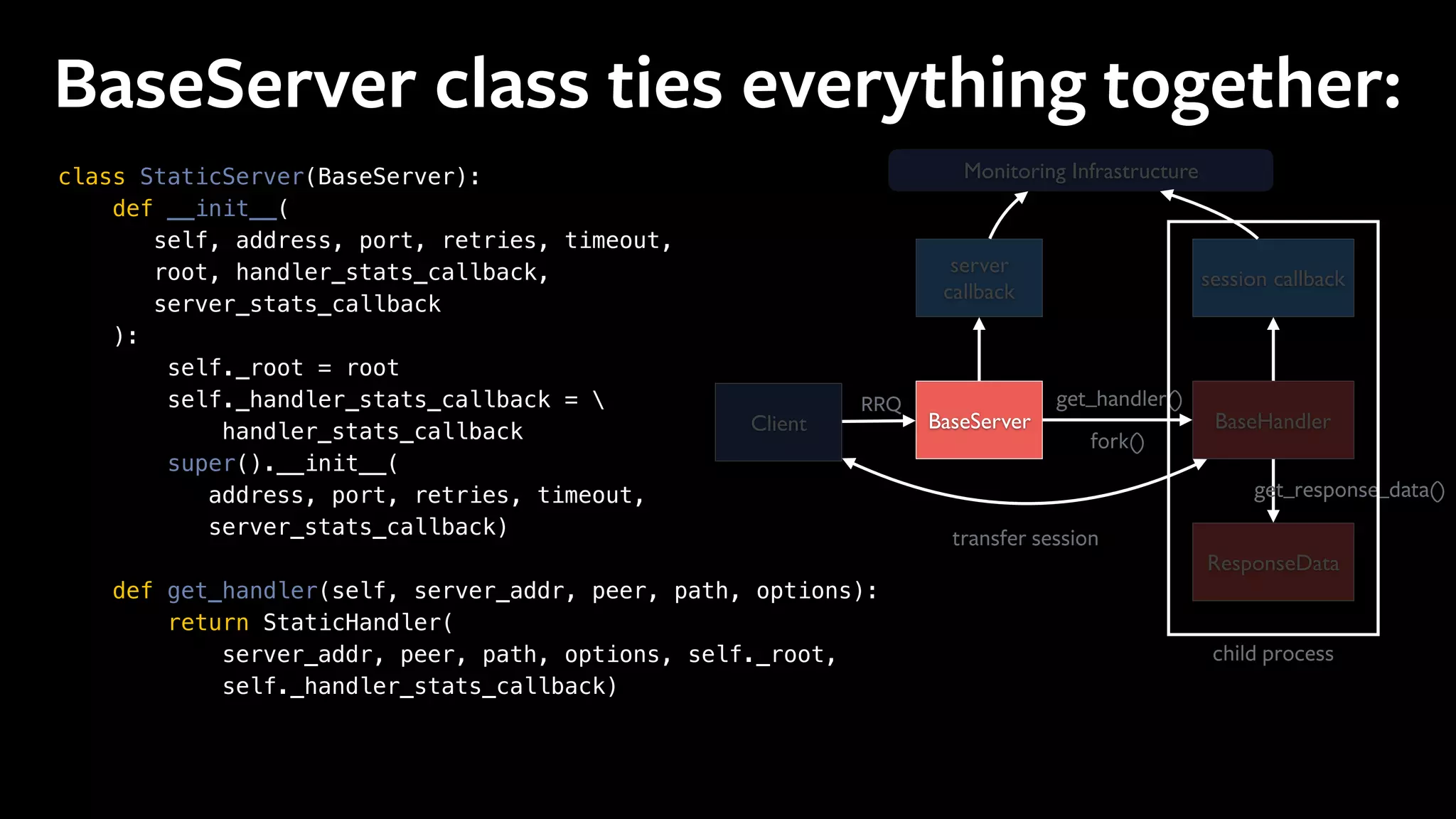

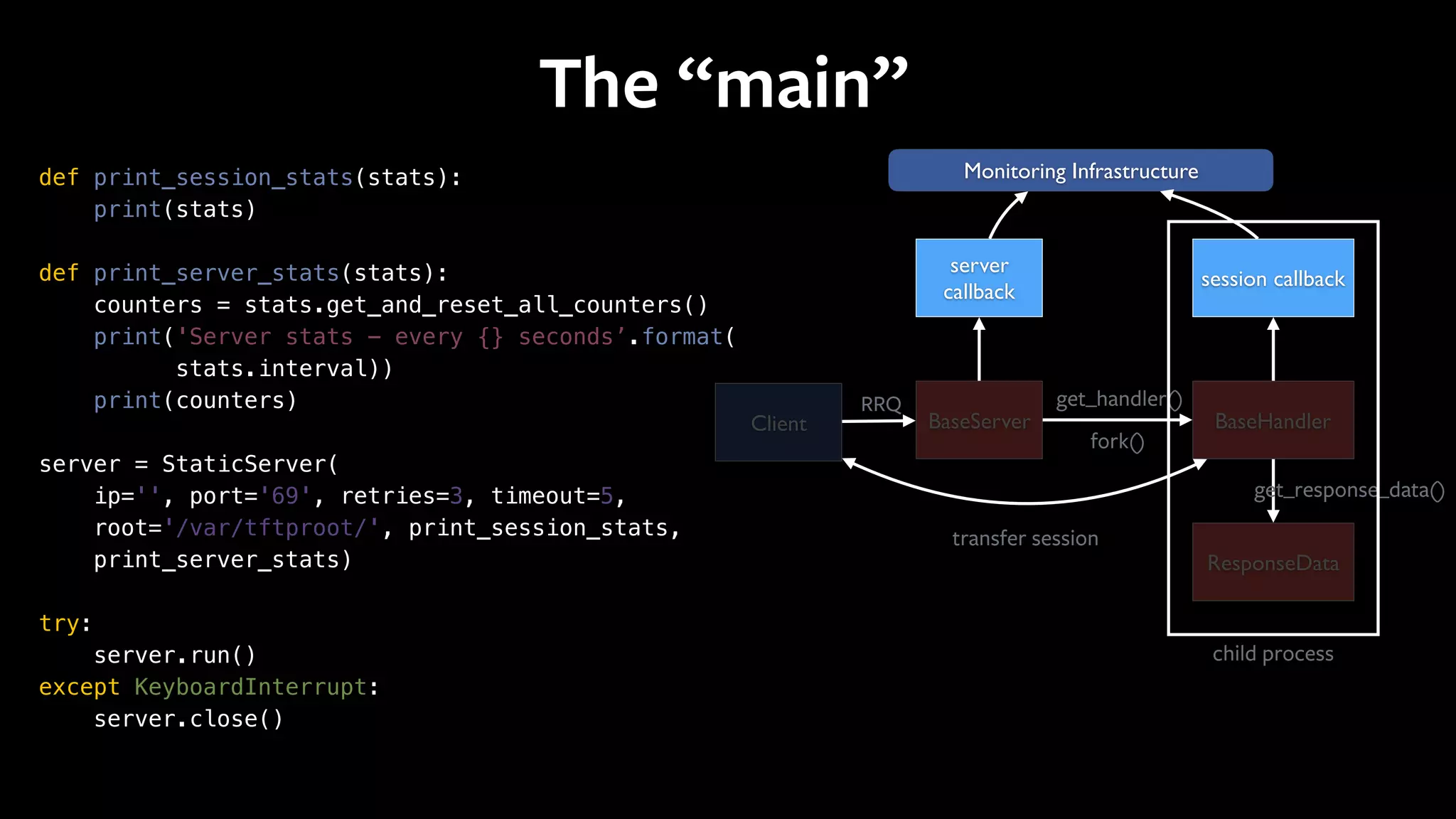

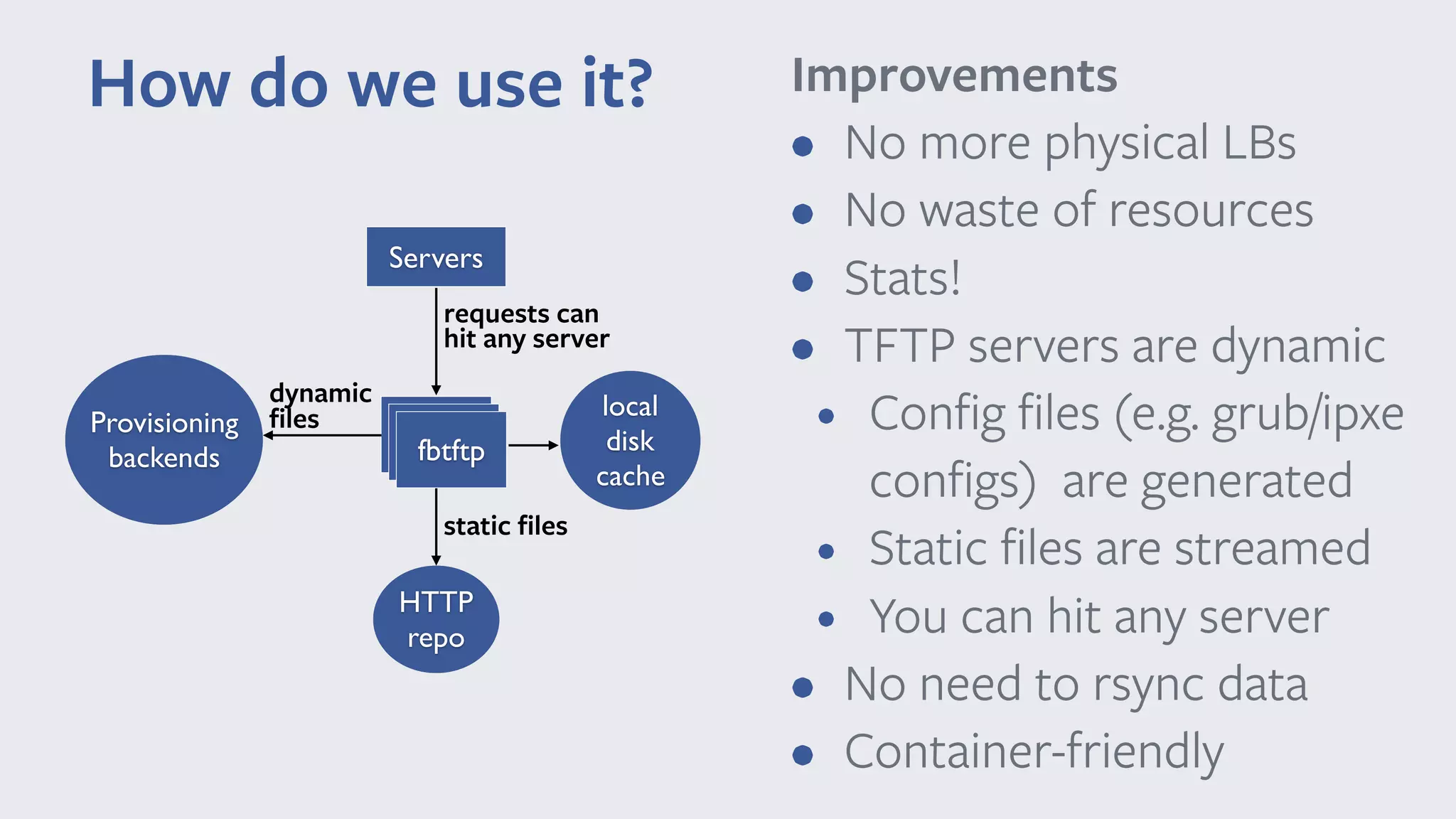

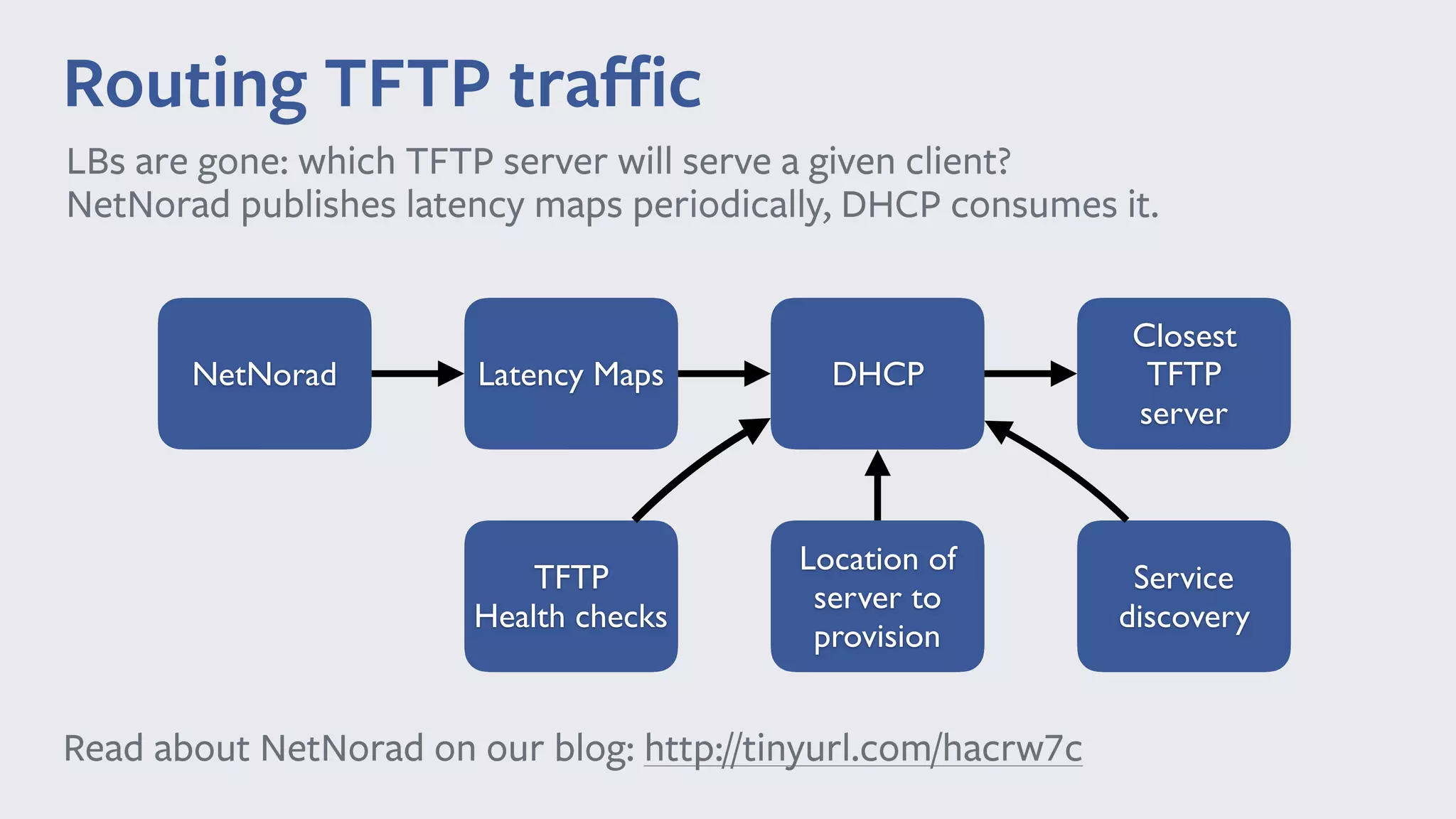

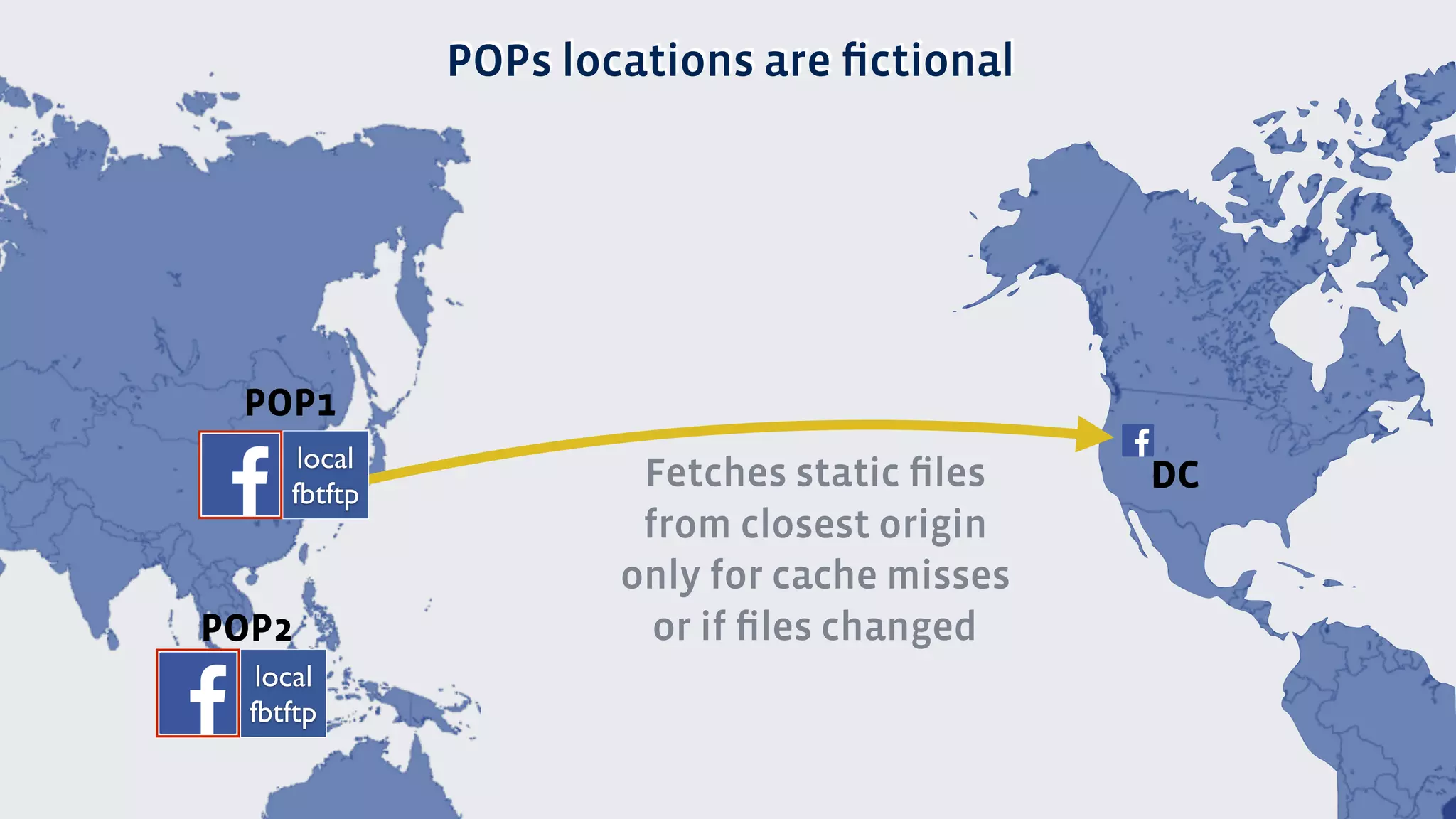

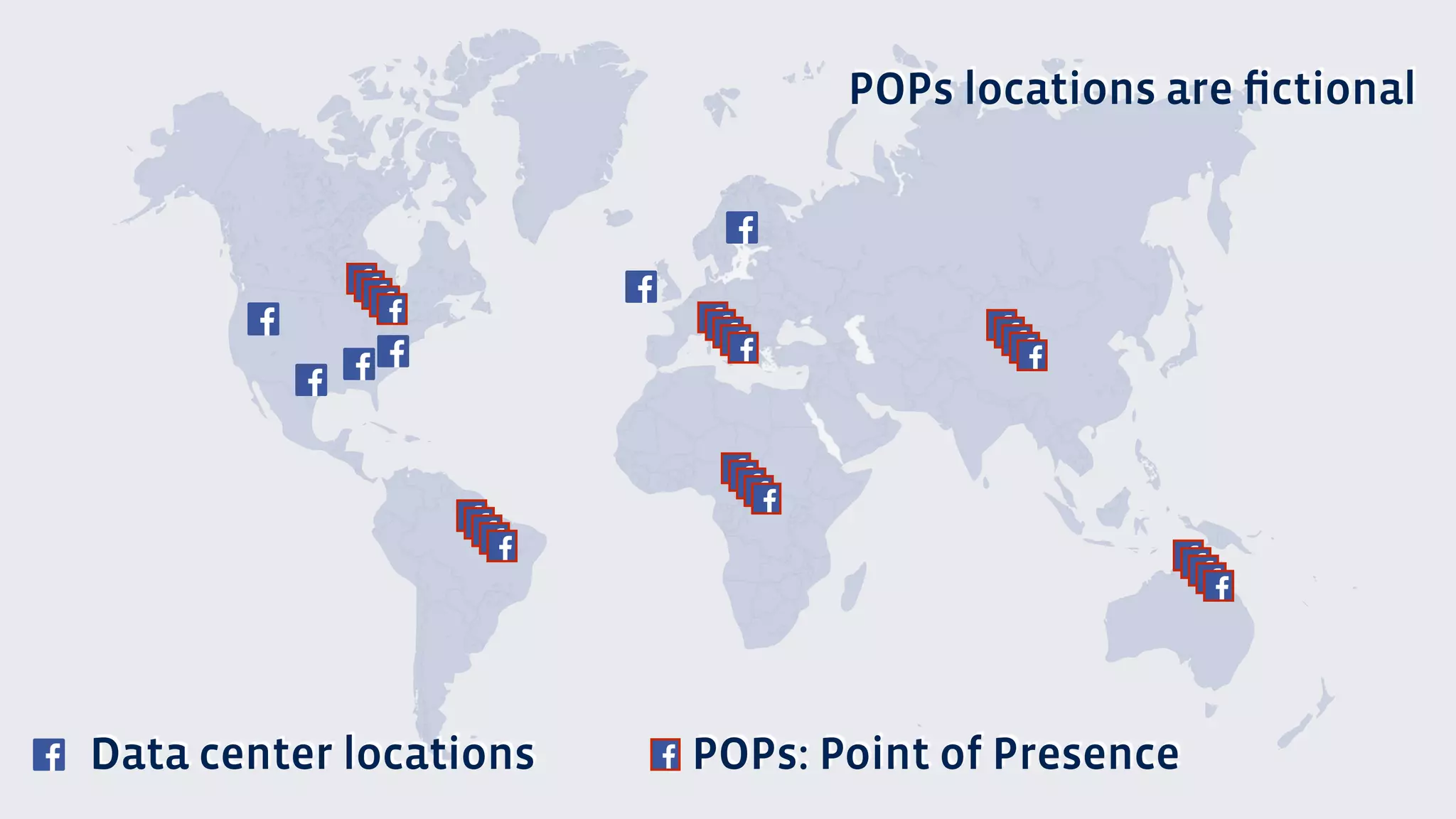

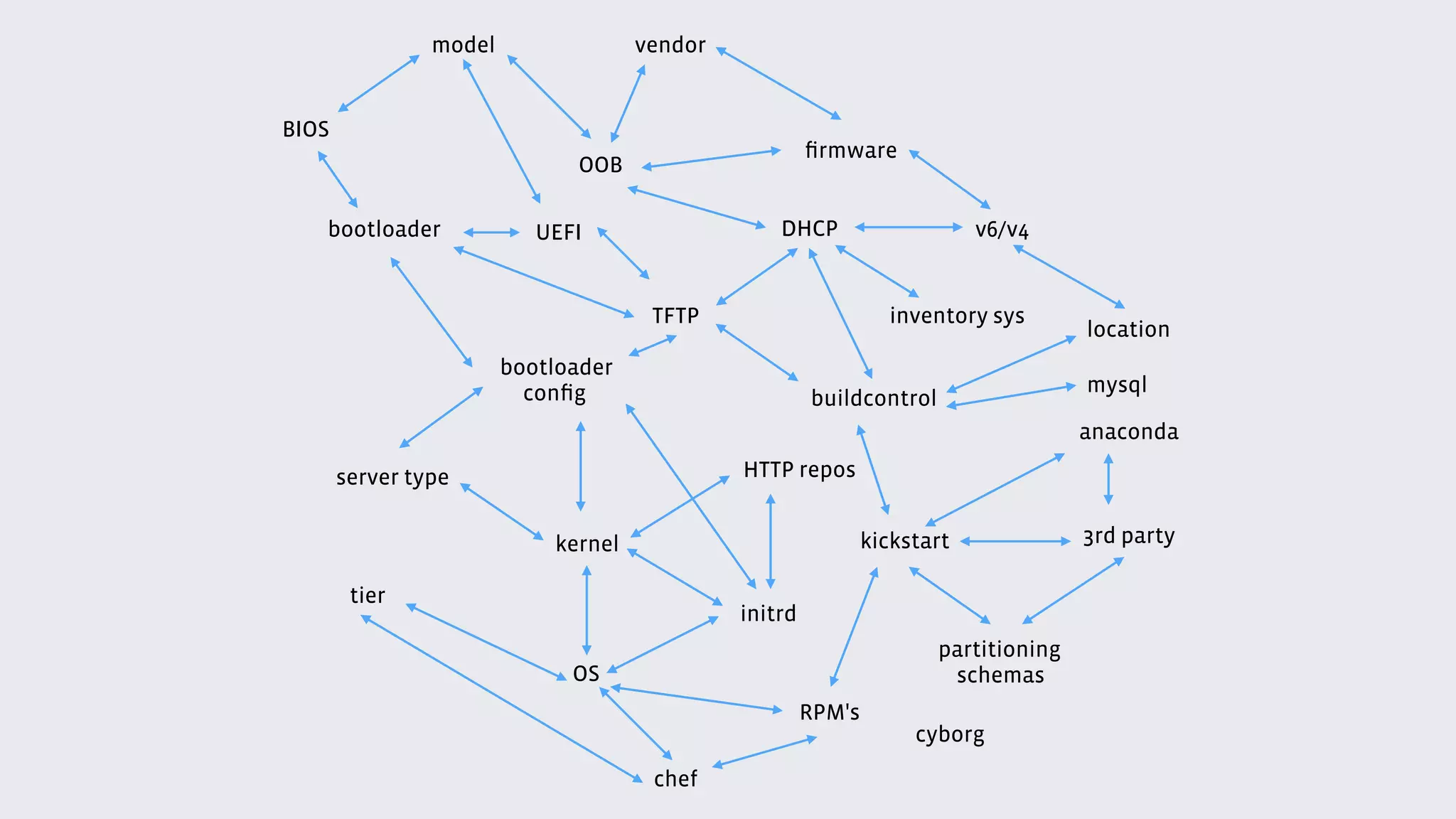

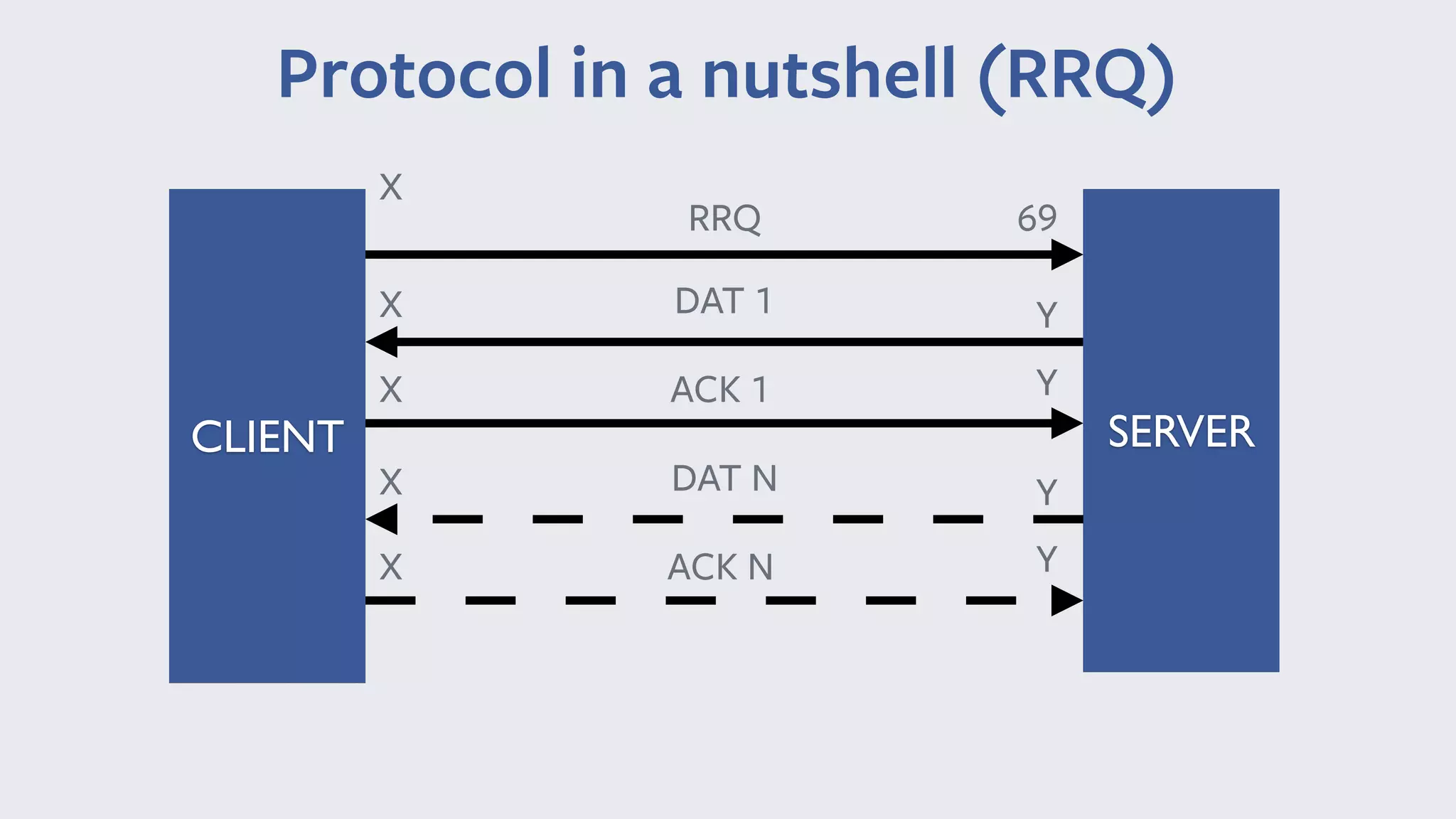

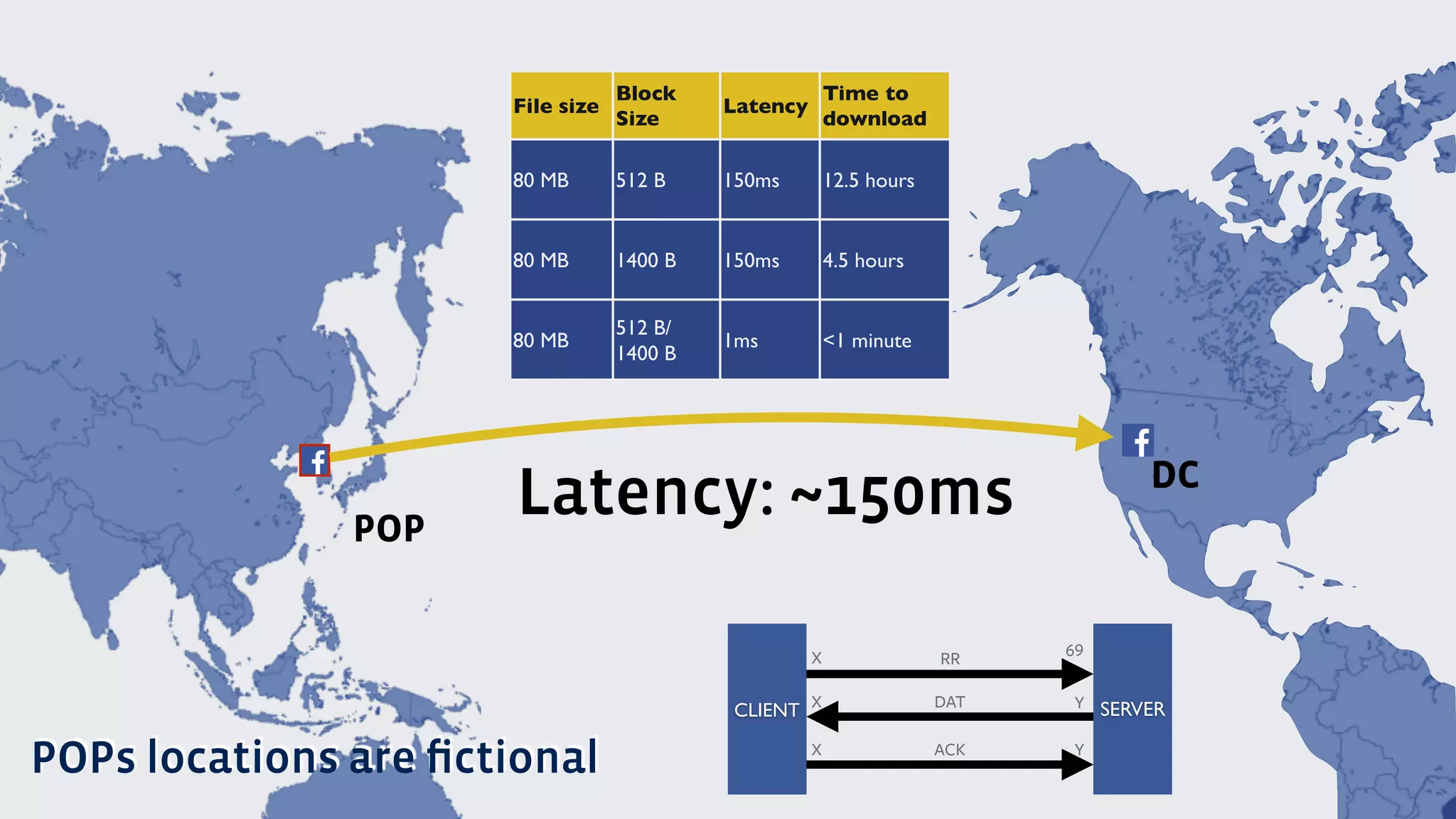

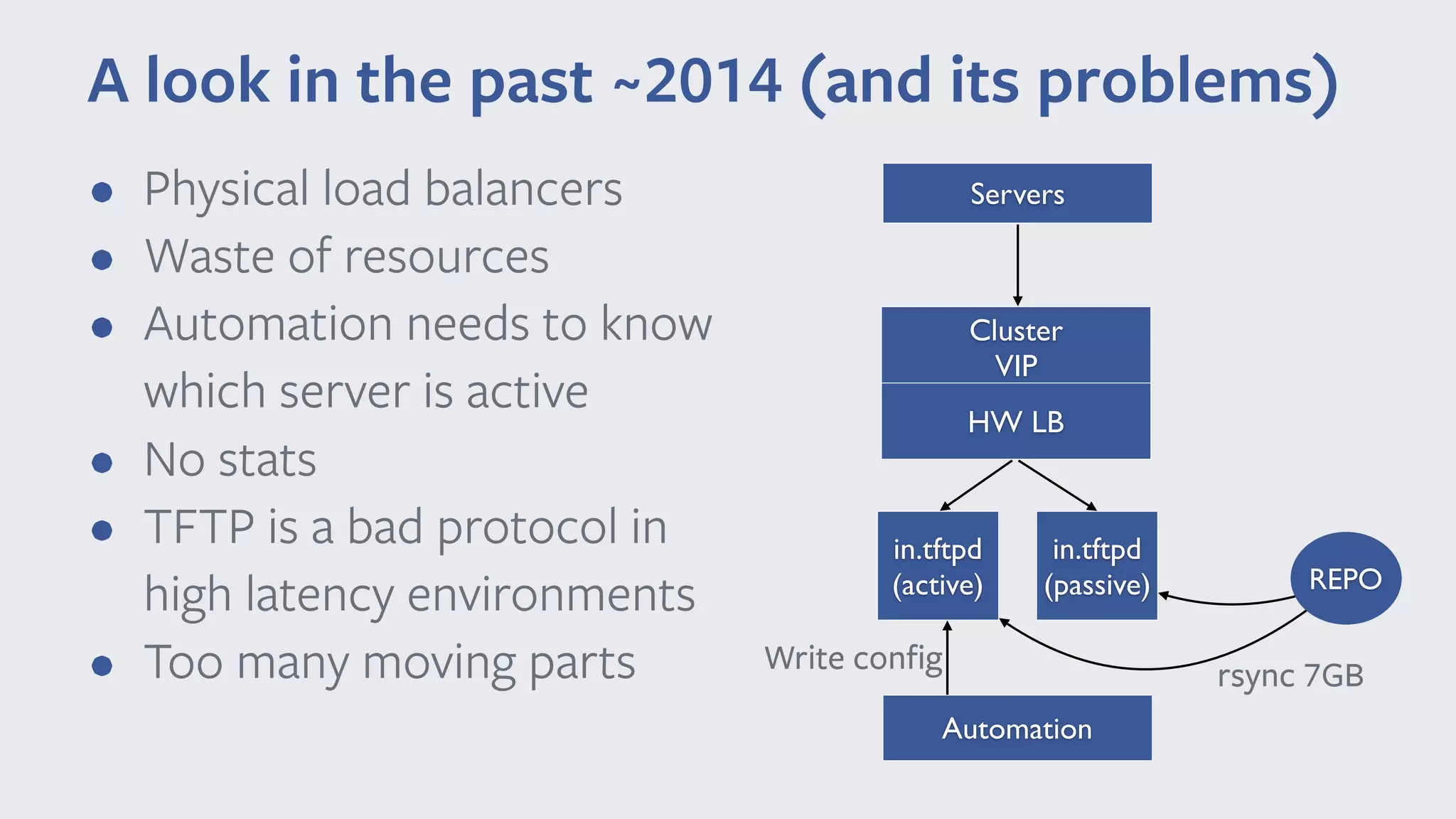

Angelo Failla, a production engineer at Facebook Ireland, discusses the development of fbtftp, a Python 3 framework for building dynamic TFTP servers. The framework addresses limitations of traditional TFTP server deployments and improves data center provisioning: it removes the need for physical load balancers, supports dynamic configuration, and improves overall efficiency. It also provides better statistics tracking and serves files dynamically from local storage, which streamlines operations in high-latency environments.
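The central idea in the summary is that the server asks user code for file contents and reports per-session statistics, rather than serving a fixed directory tree. The sketch below illustrates that pattern under stated assumptions: `SessionStats`, `generate_boot_config` and `serve` are hypothetical names, not fbtftp's actual API, and the transfer is simulated in memory rather than sent over UDP.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SessionStats:
    """Per-session counters handed to a user-supplied callback (hypothetical)."""
    peer: str
    path: str
    blocks_sent: int = 0
    bytes_sent: int = 0


def generate_boot_config(peer: str, path: str) -> bytes:
    """Hypothetical dynamic logic: build a per-client file instead of reading one from disk."""
    return f"# config for {peer}, requested as {path}\n".encode()


def serve(peer: str, path: str,
          source: Callable[[str, str], bytes],
          on_session_end: Callable[[SessionStats], None],
          blksize: int = 512) -> list[bytes]:
    """Simulate one read session: split content into DATA payloads, then report stats."""
    data = source(peer, path)
    stats = SessionStats(peer=peer, path=path)
    blocks = []
    # A payload shorter than blksize ends the transfer, so when len(data) is an
    # exact multiple of blksize the loop also yields a final empty block.
    for offset in range(0, len(data) + 1, blksize):
        chunk = data[offset:offset + blksize]
        blocks.append(chunk)
        stats.blocks_sent += 1
        stats.bytes_sent += len(chunk)
    on_session_end(stats)
    return blocks


# Usage: collect per-session stats in a list instead of shipping them to a metrics system.
sessions: list[SessionStats] = []
blocks = serve("10.0.0.5", "boot.cfg", generate_boot_config, sessions.append)
print(len(blocks), sessions[0])
```

Keeping the content source and the stats sink as plain callables means the same transfer loop can serve generated files or files from disk without changes.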

![TFTP overview (slide 12)](https://image.slidesharecdn.com/fbtftp-europython2016-angelofailla-160718153727/75/FBTFTP-an-opensource-framework-to-build-dynamic-tftp-servers-12-2048.jpg)

- It's common in data center/ISP environments
- Simple protocol specification
- Easy to implement
- UDP based -> produces a small code footprint
- Fits in small boot ROMs
- Embedded devices and network equipment
- Traditionally used for netboot (with DHCPv[46])
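To make "easy to implement" concrete, here is a minimal sketch of the RFC 1350 wire format using only the Python standard library. It is an illustration, not fbtftp's code; the helper names and the example filename `pxelinux.0` are assumptions. Every packet starts with a 2-byte big-endian opcode, and strings are NUL-terminated.

```python
import struct

# Opcodes defined by RFC 1350.
RRQ, WRQ, DATA, ACK, ERROR = 1, 2, 3, 4, 5


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Read request: | opcode | filename | 0 | mode | 0 |"""
    return (struct.pack("!H", RRQ)
            + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


def parse_rrq(packet: bytes) -> tuple[str, str]:
    """Return (filename, mode) from a read request; mode is case-insensitive."""
    (opcode,) = struct.unpack("!H", packet[:2])
    if opcode != RRQ:
        raise ValueError(f"not a read request: opcode {opcode}")
    fields = packet[2:].split(b"\x00")
    return fields[0].decode("ascii"), fields[1].decode("ascii").lower()


def build_data(block: int, payload: bytes) -> bytes:
    """DATA packet: | opcode | block # | payload (0..512 bytes by default) |"""
    return struct.pack("!HH", DATA, block) + payload


def build_ack(block: int) -> bytes:
    """ACK packet: | opcode | block # |"""
    return struct.pack("!HH", ACK, block)


def build_error(code: int, message: str) -> bytes:
    """ERROR packet: | opcode | error code | message | 0 |"""
    return struct.pack("!HH", ERROR, code) + message.encode("ascii") + b"\x00"


pkt = build_rrq("pxelinux.0")
print(pkt)             # b'\x00\x01pxelinux.0\x00octet\x00'
print(parse_rrq(pkt))  # ('pxelinux.0', 'octet')
```

These fixed, tiny packet layouts are what keep client implementations small enough for boot ROMs.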

![Provisioning phases (slide 13)](https://image.slidesharecdn.com/fbtftp-europython2016-angelofailla-160718153727/75/FBTFTP-an-opensource-framework-to-build-dynamic-tftp-servers-13-2048.jpg)

Provisioning phases: POWER ON -> DHCPv[46] (KEA) -> TFTP -> NBP -> NETBOOT -> ANACONDA -> CHEF -> REBOOT -> PROVISIONED

- DHCPv[46] (KEA): provides network config; provides path for NBP binaries
- TFTP: provides NBPs; provides config files for NBPs; provides kernel/initrd
- NBP: fetches config via TFTP; fetches kernel/initrd (via HTTP or TFTP)
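Under RFC 1350 each DATA block must be acknowledged before the next one is sent, so the time an NBP spends fetching a kernel or initrd over TFTP grows with the number of blocks times the round-trip time. A rough sketch, assuming a hypothetical 30 MiB initrd, a 50 ms RTT, and no packet loss or server delay:

```python
def tftp_blocks(file_size: int, blksize: int = 512) -> int:
    """Number of DATA packets for a file.

    The transfer ends with a block shorter than blksize; when the size is an
    exact multiple of blksize, a final zero-length block is still required.
    """
    return file_size // blksize + 1


def min_transfer_seconds(file_size: int, rtt_s: float, blksize: int = 512) -> float:
    """Lower bound for a lock-step transfer: one round trip per DATA/ACK pair."""
    return tftp_blocks(file_size, blksize) * rtt_s


initrd_size = 30 * 1024 * 1024  # hypothetical 30 MiB initrd
for blksize in (512, 1468, 8192):
    blocks = tftp_blocks(initrd_size, blksize)
    seconds = min_transfer_seconds(initrd_size, rtt_s=0.050, blksize=blksize)
    print(f"blksize={blksize:>5}: {blocks:>6} blocks, at least {seconds:,.0f} s at 50 ms RTT")
```

Negotiating a larger block size (RFC 2348) reduces the block count roughly in proportion. The value 1468 is used here because it fills a 1500-byte Ethernet frame after the 20-byte IPv4, 8-byte UDP and 4-byte TFTP headers.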

![FBTFTP overview (slide 19)](https://image.slidesharecdn.com/fbtftp-europython2016-angelofailla-160718153727/75/FBTFTP-an-opensource-framework-to-build-dynamic-tftp-servers-19-2048.jpg)

We built FBTFTP, a Python 3 framework to build dynamic TFTP servers:

- Supports only RRQ (fetch operation)
- Implements the main TFTP spec [1], Option Extension [2], Block Size Option [3], and Timeout Interval and Transfer Size Options [4]
- Extensible:
  - Define your own logic
  - Push your own statistics (per session or global)

- [1] RFC 1350, The TFTP Protocol (Revision 2)
- [2] RFC 2347, TFTP Option Extension
- [3] RFC 2348, TFTP Blocksize Option
- [4] RFC 2349, TFTP Timeout Interval and Transfer Size Options
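The option RFCs listed above let a client append `name\0value\0` pairs to its RRQ; the server answers with an OACK (opcode 6) containing only the options it accepts. The following is a minimal, standard-library sketch of that negotiation, not fbtftp's implementation. The function names and the `MAX_BLKSIZE` cap are hypothetical; the numeric ranges come from RFC 2348 (blksize 8 to 65464) and RFC 2349 (timeout 1 to 255 seconds, `tsize` of 0 in an RRQ asks for the file size). Non-RRQ requests are refused with RFC 1350 error code 4, mirroring "supports only RRQ".

```python
import struct
from typing import Optional

RRQ, ERROR, OACK = 1, 5, 6
ILLEGAL_OPERATION = 4   # RFC 1350 error code
MAX_BLKSIZE = 1468      # hypothetical server cap: fills a 1500-byte IPv4 Ethernet frame


def parse_request(packet: bytes) -> tuple[str, str, dict[str, str]]:
    """Split an RRQ into filename, mode and options (RFC 2347 name/value pairs)."""
    fields = packet[2:].split(b"\x00")[:-1]  # drop the empty item after the final NUL
    filename, mode = fields[0].decode("ascii"), fields[1].decode("ascii").lower()
    names, values = fields[2::2], fields[3::2]
    # Option names are case-insensitive, so normalise them to lower case.
    options = {n.decode("ascii").lower(): v.decode("ascii") for n, v in zip(names, values)}
    return filename, mode, options


def _as_int(value: Optional[str]) -> Optional[int]:
    try:
        return int(value)
    except (TypeError, ValueError):
        return None


def negotiate(options: dict[str, str], file_size: int) -> dict[str, str]:
    """Choose which options to acknowledge; unknown or out-of-range ones are ignored."""
    accepted = {}
    blksize = _as_int(options.get("blksize"))
    if blksize is not None and 8 <= blksize <= 65464:        # RFC 2348 range
        accepted["blksize"] = str(min(blksize, MAX_BLKSIZE))  # server may answer lower
    timeout = _as_int(options.get("timeout"))
    if timeout is not None and 1 <= timeout <= 255:          # RFC 2349, seconds
        accepted["timeout"] = str(timeout)
    if options.get("tsize") == "0":                          # RFC 2349: client asks for size
        accepted["tsize"] = str(file_size)
    return accepted


def build_oack(accepted: dict[str, str]) -> bytes:
    """OACK packet: | opcode 6 | name | 0 | value | 0 | ..."""
    body = b"".join(k.encode("ascii") + b"\x00" + v.encode("ascii") + b"\x00"
                    for k, v in accepted.items())
    return struct.pack("!H", OACK) + body


def first_reply(packet: bytes, file_size: int) -> Optional[bytes]:
    """ERROR for anything but RRQ; OACK if options were accepted.

    None means nothing was negotiated, so a server would go straight to DATA block 1.
    """
    (opcode,) = struct.unpack("!H", packet[:2])
    if opcode != RRQ:
        return struct.pack("!HH", ERROR, ILLEGAL_OPERATION) + b"Only RRQ is supported\x00"
    _, _, options = parse_request(packet)
    accepted = negotiate(options, file_size)
    return build_oack(accepted) if accepted else None


request = struct.pack("!H", RRQ) + b"kernel\x00octet\x00blksize\x0065464\x00tsize\x000\x00"
print(parse_request(request))  # ('kernel', 'octet', {'blksize': '65464', 'tsize': '0'})
print(first_reply(request, file_size=8_388_608))
```

Ignoring rather than rejecting unusable options matches RFC 2347's design: a client that sees no OACK simply falls back to the RFC 1350 defaults.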