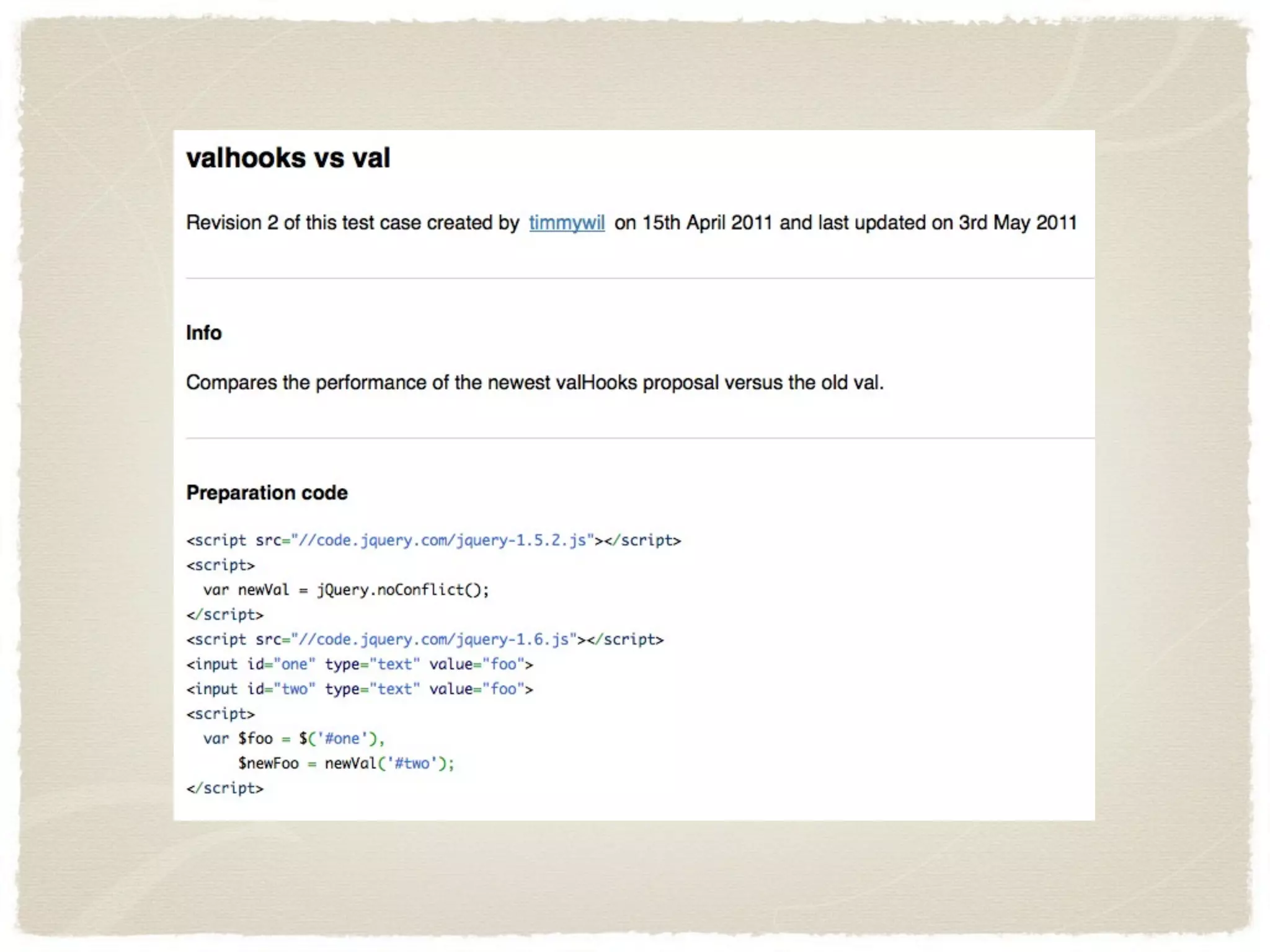

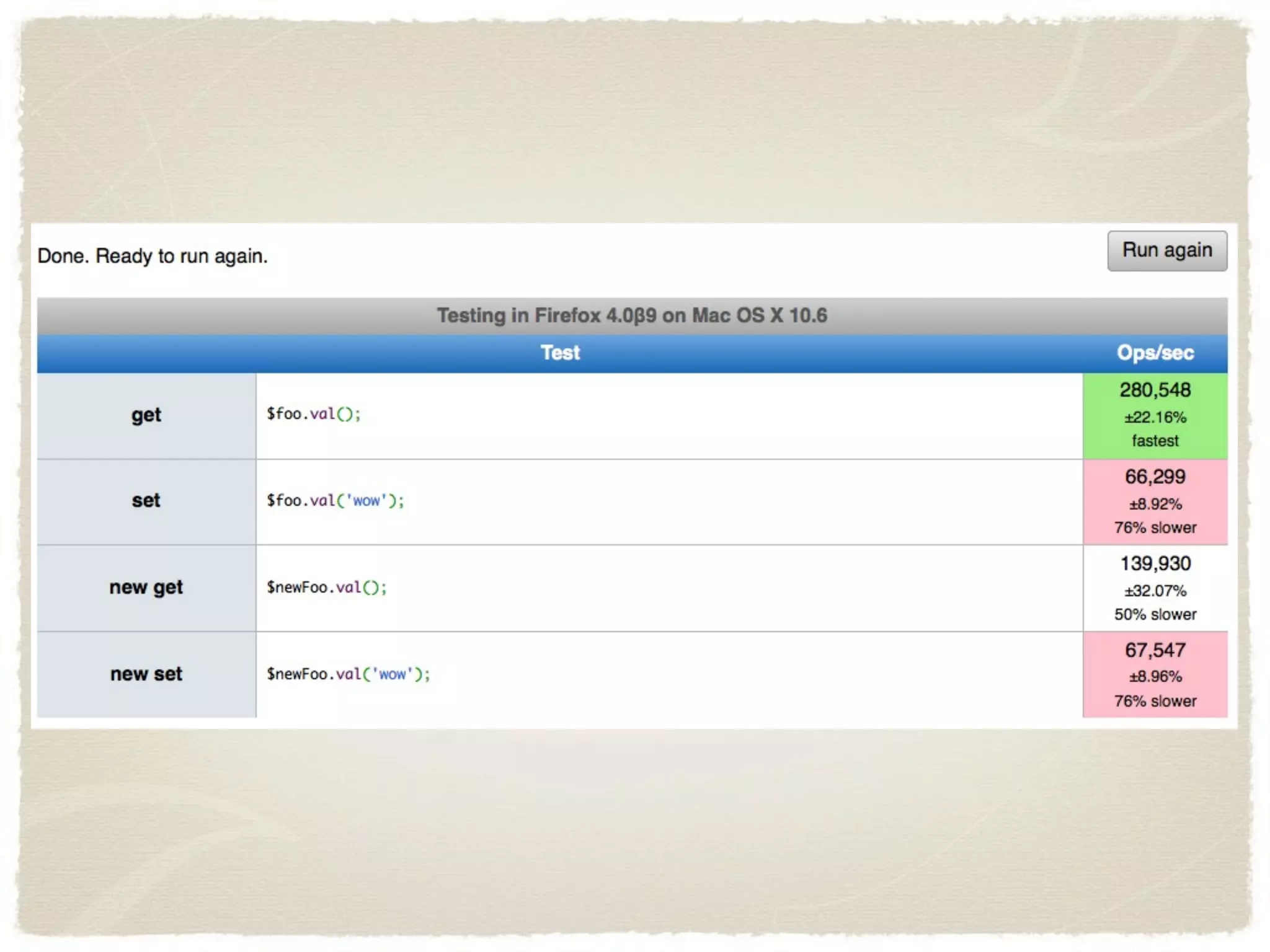

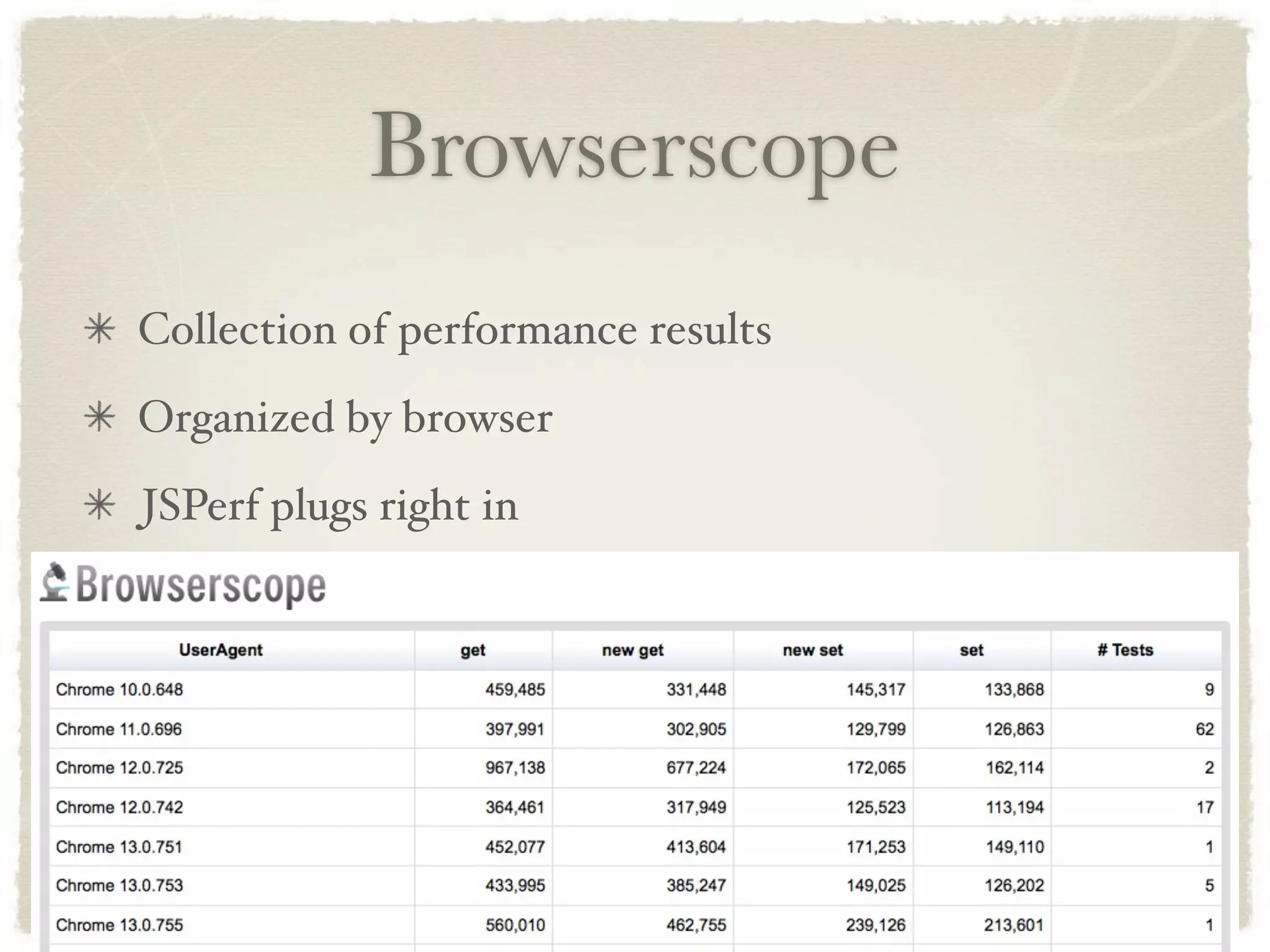

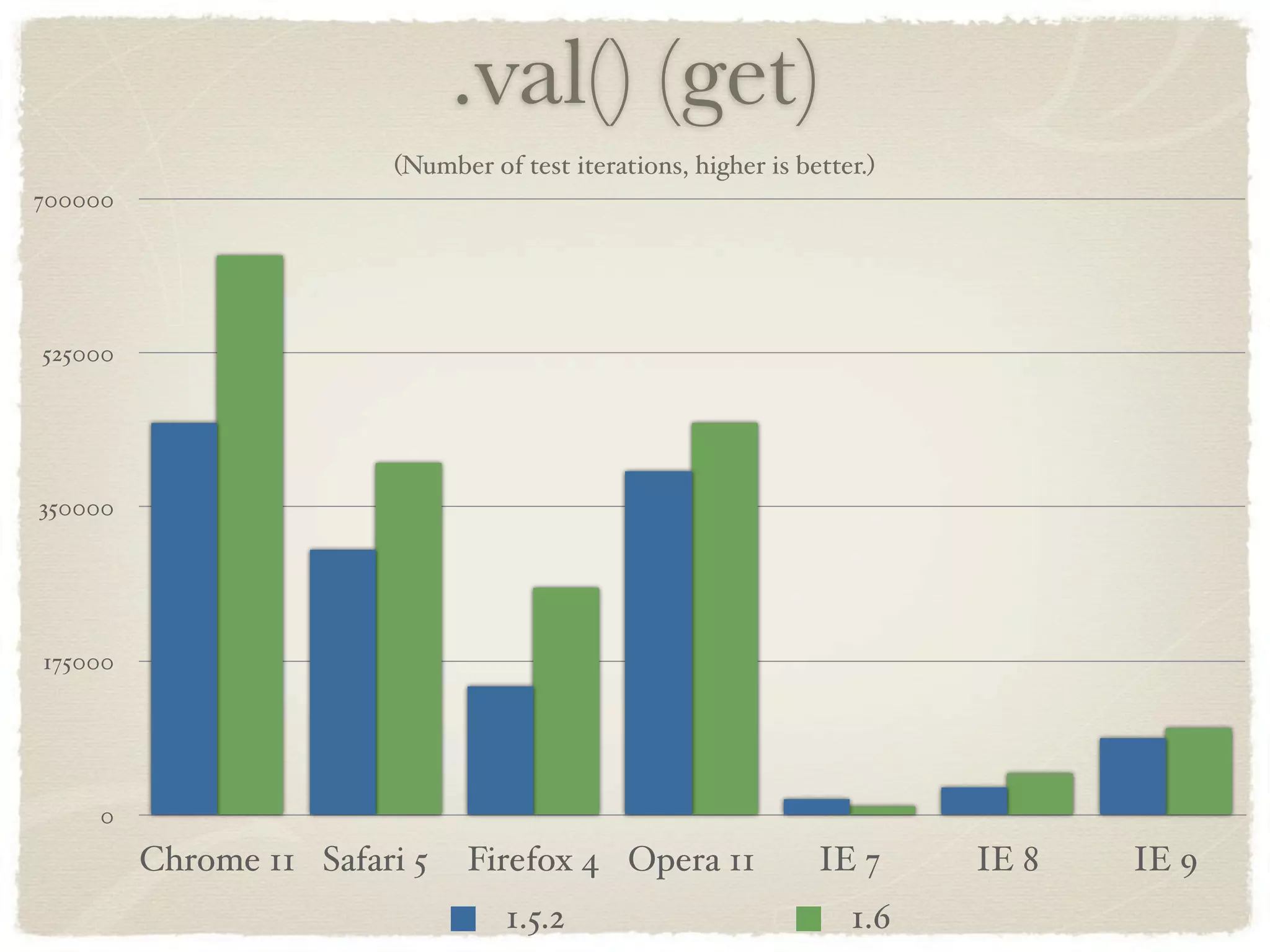

This document discusses holistic performance analysis and optimization in JavaScript. It emphasizes proving every performance change with benchmarks, considering the full browser stack and real-world use cases, keeping code clean, prioritizing cross-browser compatibility, and communicating results clearly. Key tools mentioned include jsPerf for benchmarking, Browserscope for hosting performance data, and Google Code Search for understanding how APIs are used in real-world code. The document advocates measuring optimizations against your own past performance rather than against competitors.
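As a rough illustration of proving a change with a benchmark and comparing against past performance, the sketch below times a baseline implementation and a candidate replacement and reports the ratio between them. It is only a minimal stand-in for a real harness such as jsPerf: the helper names (`medianTime`, `compare`), the two summing functions, and the sample counts are all hypothetical, and it assumes a runtime where `performance.now()` is available as a global (modern browsers, recent Node.js).

```javascript
// Hypothetical helper: run `fn` in batches and return the median batch time
// in milliseconds. The median is less sensitive to one-off pauses (GC, JIT
// compilation) than the mean.
function medianTime(fn, { samples = 15, iterations = 1000 } = {}) {
  const times = [];
  for (let s = 0; s < samples; s++) {
    const start = performance.now();
    for (let i = 0; i < iterations; i++) fn();
    times.push(performance.now() - start);
  }
  times.sort((a, b) => a - b);
  return times[Math.floor(times.length / 2)];
}

// Illustrative workload: two implementations of the same operation.
const data = Array.from({ length: 1000 }, (_, i) => i);

function sumBaseline() {
  return data.reduce((acc, x) => acc + x, 0);
}

function sumCandidate() {
  let total = 0;
  for (let i = 0; i < data.length; i++) total += data[i];
  return total;
}

// Compare a candidate against the existing (past) implementation.
function compare(baseline, candidate, options) {
  // A faster function that returns a different answer is not an optimization.
  if (baseline() !== candidate()) {
    throw new Error("candidate does not match baseline output");
  }
  // Short warm-up so both functions are measured after initial compilation.
  medianTime(baseline, { samples: 3, iterations: 100 });
  medianTime(candidate, { samples: 3, iterations: 100 });

  const before = medianTime(baseline, options);
  const after = medianTime(candidate, options);
  // ratio > 1 means the candidate was faster in this run, on this engine.
  return { before, after, ratio: before / after };
}

console.log(compare(sumBaseline, sumCandidate));
```

The numbers this prints vary by engine, machine, and run, which is the reason to repeat the measurement across browsers and to record results over time rather than trusting a single figure.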