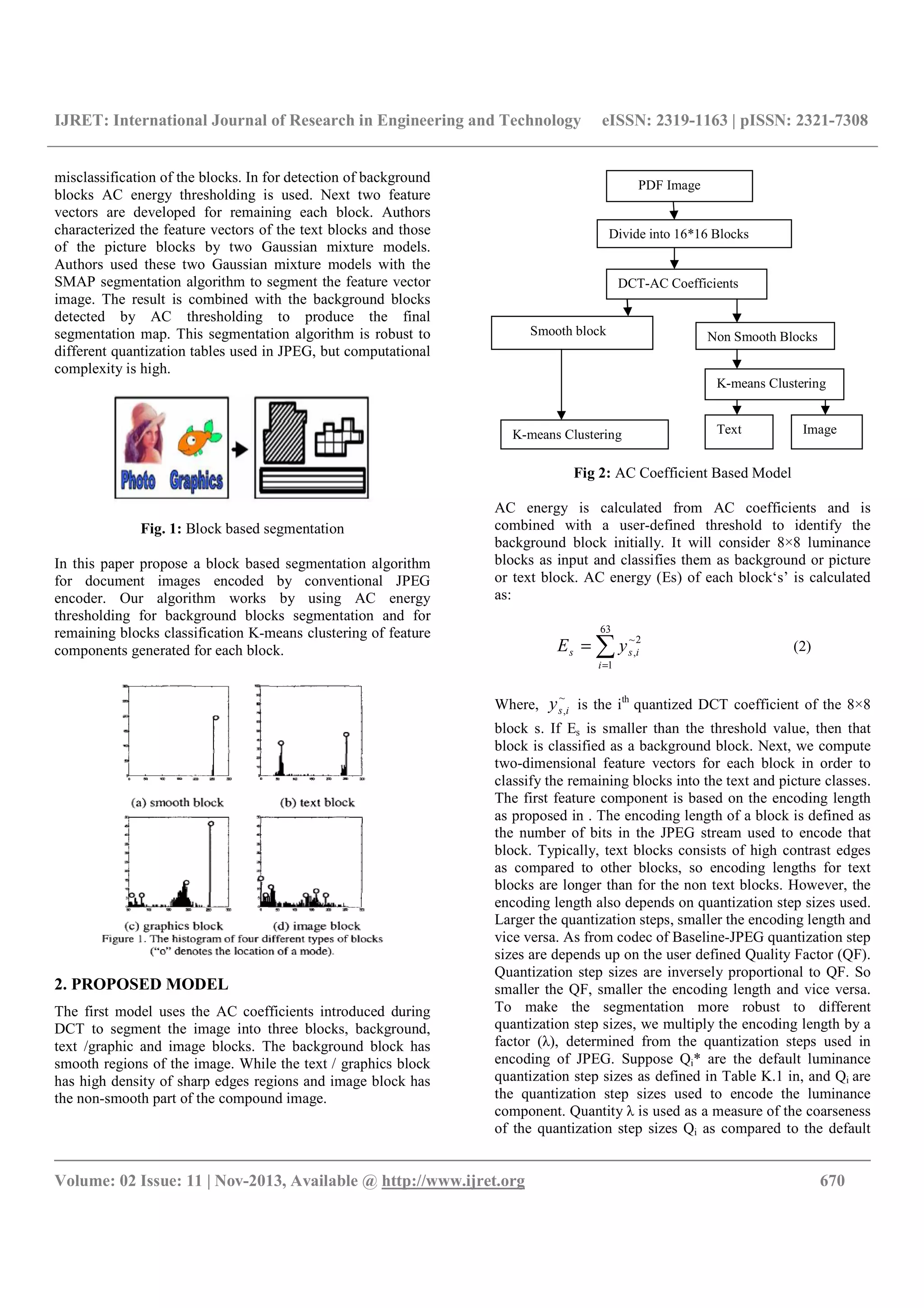

This document presents an improved block-based segmentation algorithm for JPEG compressed document images, addressing undesirable compression artifacts. It classifies blocks into background, text/graphic, and image types using AC energy thresholding and k-means clustering techniques. The proposed method aims to enhance segmentation robustness across various quantization settings, demonstrating effective results on test images.

IJRET: International Journal of Research in Engineering and Technology | eISSN: 2319-1163 | pISSN: 2321-7308 | Volume: 02 Issue: 11 | Nov-2013, Available @ http://www.ijret.org

**IMPROVED BLOCK BASED SEGMENTATION FOR JPEG COMPRESSED DOCUMENT IMAGES**

P. Mahesh¹, P. Rajesh², I. Suneetha³

¹M.Tech Student, ²Asst. Professor, ³Assoc. Professor, Annamacharya Institute of Technology and Sciences, AP, India; p.maheshreddy169@gmail.com, rajshjoyful.smily@gmail.com, iralasuneetha.aits@gmail.com

**Abstract**

Image compression aims to minimize the size in bytes of a graphics file without degrading the quality of the image to an unacceptable level. Compound image compression is normally based on one of three classification approaches: object based, layer based, and block based. This paper presents a block-based segmentation for visually lossless compression of scanned documents that contain not only photographic images but also text and graphics. At low bit rates, JPEG-encoded images suffer from undesirable compression artifacts, especially document images. Existing methods can reduce these artifacts with post-processing, without changing the encoding process. Some of these post-processing methods require the encoded blocks to be classified into different categories.

**Keywords:** AC energy, Discrete Cosine Transform (DCT), JPEG, K-means clustering, Threshold value

**1. INTRODUCTION**

JPEG is one of the most widely used block-based image compression techniques. Although JPEG was first developed for natural images, in practice it is also used for document images because of its simple structure. However, document images encoded by the JPEG algorithm exhibit undesirable compression artifacts. In particular, ringing artifacts significantly reduce the sharpness and clarity of the text and graphics in the decoded image. Many decoding schemes have been proposed to improve the quality of JPEG-encoded images; such methods require neither modification of current encoders nor an increase in bit rate.

Compression of a compound image is more critical than compression of a natural image. A compound image is basically a combination of text, graphics, and pictures, and most methods use different algorithms for the different content types. Compound image compression is normally based on one of the following categories: object based, layer based, and block based. Most recent research in this field is based on either the layer-based or the block-based approach.

In the object-based method, a page is divided into regions, where each region follows exact object boundaries. An object may be a photograph, a graphical object, a letter, etc. The main drawback of this method is its complexity. In the layer-based method, a page is divided into rectangular layers. Most layer-based methods follow the three-layer mixed raster content (MRC) model, which represents a color image as two color-image layers, a foreground (FG) and a background (BG), plus a binary mask layer. The mask layer describes how to reconstruct the final image from the FG/BG layers.

All these methods first divide the JPEG-encoded image into different classes of regions, and each region is decoded with an algorithm specially designed for that class. This region selection is done on a block basis, i.e., each encoded block is first classified as one of the required region types.

This paper focuses on JPEG-coded document images. In one existing method, a discriminative function based on the DCT coefficients is calculated for every block, and its value is compared with a threshold parameter to classify the block. The discriminative function is defined as

$$D_f(s) = \sum_{u=0}^{7} \sum_{v=0}^{7} g\big[y_s(u,v)\big] \qquad (1)$$

where $y_s(u,v)$ are the DCT coefficients of block $s$ and

$$g(x) = \begin{cases} \log_2 |x|, & x \neq 0 \\ 0, & \text{otherwise.} \end{cases}$$

Each block is then classified as follows:

- if $D_f(s) \approx 0$, $s$ is a background block;
- if $D_f(s) < T$, $s$ is a picture block;
- if $D_f(s) \ge T$, $s$ is a non-picture block.

The threshold value proposed in this segmentation algorithm is not robust to the different quantization tables used in baseline JPEG. It gives good results when the default quantization matrices given in the JPEG standard are used. However, when the user-defined quality factor (QF) changes, the quantization matrices change as well. This leads to …

… $Q_i^*$. Larger quantization step sizes $Q_i$ correspond to larger values of $\lambda$, and vice versa:

$$\lambda = \frac{\sum_i Q_i^* Q_i}{\sum_i Q_i^* Q_i^*} \qquad (3)$$

The first feature component of block $s$ is defined as

$$D_{s,1} = \lambda^{\gamma} \times (\text{encoding length of block } s) \qquad (4)$$

The threshold value used for AC thresholding and the exponent $\gamma$ used in $D_{s,1}$ are taken from prior work, where they were determined by a training process on a set of fifty-four digital and scanned images. Each image was manually segmented and JPEG encoded with nine different quantization matrices, corresponding to $\lambda_j$ with $j = 1, \dots, 9$. For the $i$th image encoded with the $j$th quantization matrix, the average encoding lengths of the text blocks and of the picture blocks were first computed, denoted by $u_{i,j}$ and $v_{i,j}$, respectively. The parameter $\gamma$ is then determined from the following optimization problem:

$$\hat{\gamma} = \arg\min_{\gamma} \min_{\bar{u}, \bar{v}} \sum_{i=1}^{54} \sum_{j=1}^{9} \Big[ \big(\lambda_j^{\gamma} u_{i,j} - \bar{u}\big)^2 + \big(\lambda_j^{\gamma} v_{i,j} - \bar{v}\big)^2 \Big] \qquad (5)$$

where $\bar{u}$ and $\bar{v}$ are the average encoding lengths of the text blocks and the picture blocks when the default quantization step sizes defined in Table K.1 of the JPEG standard (ISO/IEC 10918-1) are used. This yields $\gamma = 0.5$ and a threshold value of 200 ($\varepsilon_{ac} = 200$).

Because the encoding length is multiplied by $\lambda^{\gamma}$, the effect of the quantization step sizes on $D_{s,1}$ is reduced: larger quantization step sizes lead to shorter encoding lengths but larger values of $\lambda$, so the product $D_{s,1}$ stays approximately the same. The same compensation occurs for smaller quantization step sizes. Therefore, the range of $D_{s,1}$ for a particular class of block does not change significantly with the quantization step sizes or the QF. It depends mainly on the class of the block, and it is higher for text blocks than for the remaining blocks.

A text block is generally a combination of two colors: the foreground intensity and the background intensity. To take advantage of this, a second feature component $D_{s,2}$ measures how close a block is to being a two-color block. We take the luminance component decoded by the conventional JPEG decoder and use k-means clustering [6] to separate the pixels in a 16×16 window centered at block $s$ into two groups. Let $C_{1,s}$ and $C_{2,s}$ denote the two cluster means. The second feature component is the difference of the two cluster means:

$$D_{s,2} = \lvert C_{1,s} - C_{2,s} \rvert \qquad (6)$$

If the difference between the two cluster means is large, $D_{s,2}$ is large and the block is close to a two-color block; otherwise, it is closer to a single-color block. Since a text block is a combination of two colors, $D_{s,2}$ is higher for text blocks than for the remaining blocks.

For every non-background block, the feature $D_s$ is defined as the sum of the two feature components:

$$D_s = D_{s,1} + D_{s,2} \qquad (7)$$

Since both $D_{s,1}$ and $D_{s,2}$ are high for text blocks compared with picture blocks, their sum $D_s$ is also higher for text blocks. The values of $D_s$ are therefore divided into two clusters by k-means clustering; the blocks in the cluster with the higher mean are segmented as text blocks, and the remaining blocks are labeled picture blocks.

*Fig. 3: K-means clustering analysis*

K-means clustering is a method of cluster analysis that partitions $n$ given observations into $k$ clusters such that each observation belongs to the cluster with the nearest mean. Given a set of observations $(x_1, x_2, \dots, x_n)$, k-means clustering partitions them into $k$ sets $S = \{S_1, S_2, \dots, S_k\}$ so as to minimize the within-cluster sum of squares:

$$\arg\min_{S} \sum_{i=1}^{k} \sum_{x_j \in S_i} \lVert x_j - \mu_i \rVert^2 \qquad (8)$$

where $\mu_i$ is the mean of the points in $S_i$.
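A minimal Python sketch of Eqs. (3)–(5) follows. It assumes the actual and default quantization step sizes are supplied as flat lists in the same order and that block encoding lengths are plain numbers (for example, bits); `scale_factor`, `feature_1`, and `fit_gamma` are hypothetical names. Solving Eq. (5) by grid search is an assumption, since the text does not say how the minimization was carried out, and $\bar{u}$, $\bar{v}$ are treated as free variables, as the inner min in Eq. (5) indicates.

```python
from typing import Sequence


def scale_factor(q: Sequence[float], q_default: Sequence[float]) -> float:
    """Eq. (3): least-squares scale lambda such that Q_i ~ lambda * Q*_i."""
    num = sum(qs * qi for qs, qi in zip(q_default, q))
    den = sum(qs * qs for qs in q_default)
    return num / den


def feature_1(encoding_length: float, lam: float, gamma: float = 0.5) -> float:
    """Eq. (4): D_{s,1} = lambda**gamma * (encoding length of block s)."""
    return lam ** gamma * encoding_length


def fit_gamma(lams: Sequence[float],
              u: Sequence[Sequence[float]],
              v: Sequence[Sequence[float]],
              grid: Sequence[float]) -> float:
    """Eq. (5) by grid search over candidate gamma values (hypothetical grid).

    u[i][j], v[i][j]: average encoding lengths of the text and picture blocks
    of training image i encoded with quantization matrix j (scale lams[j]).
    For a fixed gamma the inner minimum over u_bar and v_bar is reached at the
    mean of the scaled values, so the cost is a total squared deviation.
    """
    def cost(gamma: float) -> float:
        total = 0.0
        for table in (u, v):
            scaled = [lams[j] ** gamma * row[j]
                      for row in table for j in range(len(lams))]
            mean = sum(scaled) / len(scaled)
            total += sum((x - mean) ** 2 for x in scaled)
        return total

    return min(grid, key=cost)
```

The grid should be bounded: if every $\lambda_j$ were larger than 1, letting $\gamma \to -\infty$ would shrink all scaled lengths toward zero and drive the cost of Eq. (5) toward zero without meaning anything.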
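The second feature and the final text/picture split can be sketched as follows. This assumes the decoded luminance plane is a list of pixel rows, blocks are indexed by `(bx, by)` in units of 8 pixels, and the 16×16 window is clipped at the image border (the text does not say how borders are handled). `two_means_1d`, `feature_2`, and `split_text_picture` are hypothetical names; `two_means_1d` is a plain Lloyd iteration for Eq. (8) with $k = 2$, initialized at the minimum and maximum value, which is an illustrative choice rather than the paper's stated setup.

```python
from typing import List, Sequence, Tuple


def two_means_1d(values: Sequence[float],
                 iters: int = 100) -> Tuple[float, float, List[int]]:
    """Lloyd's k-means with k = 2 on scalars (Eq. 8 with k = 2).

    Returns (low_mean, high_mean, labels); label 1 marks the high-mean cluster.
    """
    lo, hi = float(min(values)), float(max(values))

    def assign(c_lo: float, c_hi: float) -> List[int]:
        # Nearest-centroid assignment; ties go to the low cluster.
        return [1 if abs(x - c_hi) < abs(x - c_lo) else 0 for x in values]

    for _ in range(iters):
        labels = assign(lo, hi)
        low = [x for x, lab in zip(values, labels) if lab == 0]
        high = [x for x, lab in zip(values, labels) if lab == 1]
        new_lo = sum(low) / len(low) if low else lo
        new_hi = sum(high) / len(high) if high else hi
        if new_lo == lo and new_hi == hi:
            break  # centroids stopped moving
        lo, hi = new_lo, new_hi
    return lo, hi, assign(lo, hi)


def feature_2(luma: Sequence[Sequence[float]], bx: int, by: int) -> float:
    """Eq. (6): D_{s,2} = |C_{1,s} - C_{2,s}| for the 8x8 block at (bx, by).

    Window: 16x16 pixels centred on the block (4 extra pixels per side),
    clipped at the image border (assumed border handling).
    """
    h, w = len(luma), len(luma[0])
    r0, c0 = 8 * by - 4, 8 * bx - 4
    window = [luma[r][c]
              for r in range(max(r0, 0), min(r0 + 16, h))
              for c in range(max(c0, 0), min(c0 + 16, w))]
    c1, c2, _ = two_means_1d(window)
    return abs(c2 - c1)


def split_text_picture(d1: Sequence[float], d2: Sequence[float]) -> List[str]:
    """Eq. (7) plus the final step: D_s = D_{s,1} + D_{s,2} per non-background
    block, then 2-means on D_s; the higher-mean cluster is labelled text."""
    ds = [a + b for a, b in zip(d1, d2)]
    _, _, labels = two_means_1d(ds)
    return ["text" if lab == 1 else "picture" for lab in labels]
```

Clustering the $D_s$ values per image, instead of comparing them with a fixed threshold, is what lets the text/picture boundary adapt to whatever quantization table was used.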

**3. SIMULATION RESULTS**

The performance of the proposed algorithm is evaluated on seven different test images, each containing some text and/or graphics. The segmentation algorithm is applied to all test images encoded by baseline JPEG at different compression ratios. Consider test image 1, shown in Fig. 4. The segmentation results for JPEG-encoded test image 1 at QF values of 10, 25, and 60 are shown in Fig. 5, Fig. 6, and Fig. 7, respectively. In the resulting images, red blocks represent text regions, yellow blocks represent picture regions, and white blocks represent background regions. For all QF values, the proposed segmentation gives almost the same classification of regions.

*Fig. 4: Original test image 1*

*Fig. 5: Segmentation results (QF = 10)*

*Fig. 6: Segmentation results (QF = 25)*

*Fig. 7: Segmentation results (QF = 60)*

**CONCLUSIONS**

A block-based segmentation algorithm for JPEG-encoded document images has been successfully implemented in MATLAB. Visual inspection of the simulation results indicates that the proposed algorithm is robust to the different quantization step sizes used in JPEG encoding. This paper considers true-color images only; the block segmentation technique can also be extended to grayscale images with small changes to the algorithm. Future work is the development of a segmentation algorithm for document images in input formats other than JPEG.

**REFERENCES**

[1] E. Y. Lam, "Compound document compression with model-based biased reconstruction," J. Electron. Imag., vol. 13, pp. 191–197, Jan. 2004.

[2] T.-S. Wong, C. A. Bouman, I. Pollak, and Z. Fan, "A document image model and estimation algorithm for optimized JPEG decompression," IEEE Trans. Image Process., vol. 18, no. 11, Nov. 2009.

[3] B. Oztan, A. Malik, Z. Fan, and R. Eschbach, "Removal of artifacts from JPEG compressed document images," presented at SPIE Color Imaging XII: Processing, Hardcopy, and Applications, Jan. 2007.

[4] L. Bottou, P. Haffner, P. G. Howard, P. Simard, Y. Bengio, and Y. LeCun, "High quality document image compression with DjVu," J. Electron. Imag., vol. 7, pp. 410–425, 1998.

[5] C. A. Bouman and M. Shapiro, "A multiscale random field model for Bayesian image segmentation," IEEE Trans. Image Process., vol. 3, no. 2, pp. 162–177, Mar. 1994.

[6] J. MacQueen, "Some methods for classification and analysis of multivariate observations," in Proc. 5th Berkeley Symp. Mathematical Statistics and Probability, pp. 281–297, 1967.

[7] ISO/IEC 10918-1: Digital Compression and Coding of Continuous-Tone Still Images, Part 1: Requirements and Guidelines, International Organization for Standardization, 1994.

[8] G. K. Wallace, "The JPEG still picture compression standard," IEEE Trans. Consumer Electron., vol. 38, no. 1, pp. 18–34, Feb. 1992.

[9] K. Konstantinides and D. Tretter, "A JPEG variable quantization method for compound documents," IEEE Trans. Image Process., vol. 9, no. 7, pp. 1282–1287, Jul. 2000.

[10] A. Averbuch, A. Schclar, and D. Donoho, "Deblocking of block-transform compressed images using weighted sums of symmetrically aligned pixels," IEEE Trans. Image Process., vol. 14, no. 2, pp. 200–212, Feb. 2005.

[11] H. Siddiqui and C. A. Bouman, "Training-based descreening," IEEE Trans. Image Process., vol. 16, no. 3, pp. 789–802, Mar. 2007.

[12] A. Averbuch, A. Schclar, and D. Donoho, "Deblocking of block-transform compressed images using weighted sums of symmetrically aligned pixels," IEEE Trans. Image Process., vol. 14, no. 2, pp. 200–212, Feb. 2005.

[13] C. A. Bouman and I. Pollak, "A document image model and estimation algorithm for optimized JPEG decompression," vol. 18, pp. 2518–2535, Nov. 2009.

[14] X. O. Zhao and Z. H. He, "Lossless image compression using super-spatial structure prediction," IEEE Signal Process. Lett., vol. 17, no. 4, Apr. 2010.

[15] J. Kim, J. Kim, and C.-M. Kyung, "A lossless embedded compression algorithm for high definition video coding," in Proc. IEEE ICME, 2009.

[16] Y. Liu and K. N. Ngan, "Weighted adaptive lifting-based wavelet transform for image coding," IEEE Trans. Image Process., vol. 17, no. 4, Apr. 2008.

[17] R. de Queiroz and R. Eschbach, "Fast segmentation of JPEG-compressed documents," J. Electron. Imag., vol. 7, pp. 367–377, Apr. 1998.

[18] H. T. Fung and K. J. Parker, "Color, complex document segmentation and compression," Proc. SPIE, vol. 3027, San Jose, CA, 1997.

[19] Z.-M. Lu and H. Pei, "Hybrid image compression scheme based on PVQ and DCTVQ," IEICE Trans. Inf. Syst., vol. E88-D, no. 10, Oct. 2006.

**BIOGRAPHIES**

Mr. P. Mahesh received the B.Tech degree in E.I.C.E from N.B.K.R.IST, Vidyanagar, Nellore, affiliated to Sri Venkateswara University (SVU). He is pursuing the M.Tech degree with specialization in ECE (DSCE) at Annamacharya Institute of Technology and Sciences (AITS), Tirupati, AP, India.

Ms. I. Suneetha received the B.Tech and M.Tech degrees in E.C.E from Sri Venkateswara University College of Engineering (SVUCE), Tirupati, India, in 2000 and 2003, respectively. She is pursuing her Ph.D. degree at SVUCE, Tirupati, and is working as Associate Professor & Head, E.C.E Department, Annamacharya Institute of Technology and Sciences (AITS), Tirupati. Her teaching and research interests include 1D and 2D signal processing.