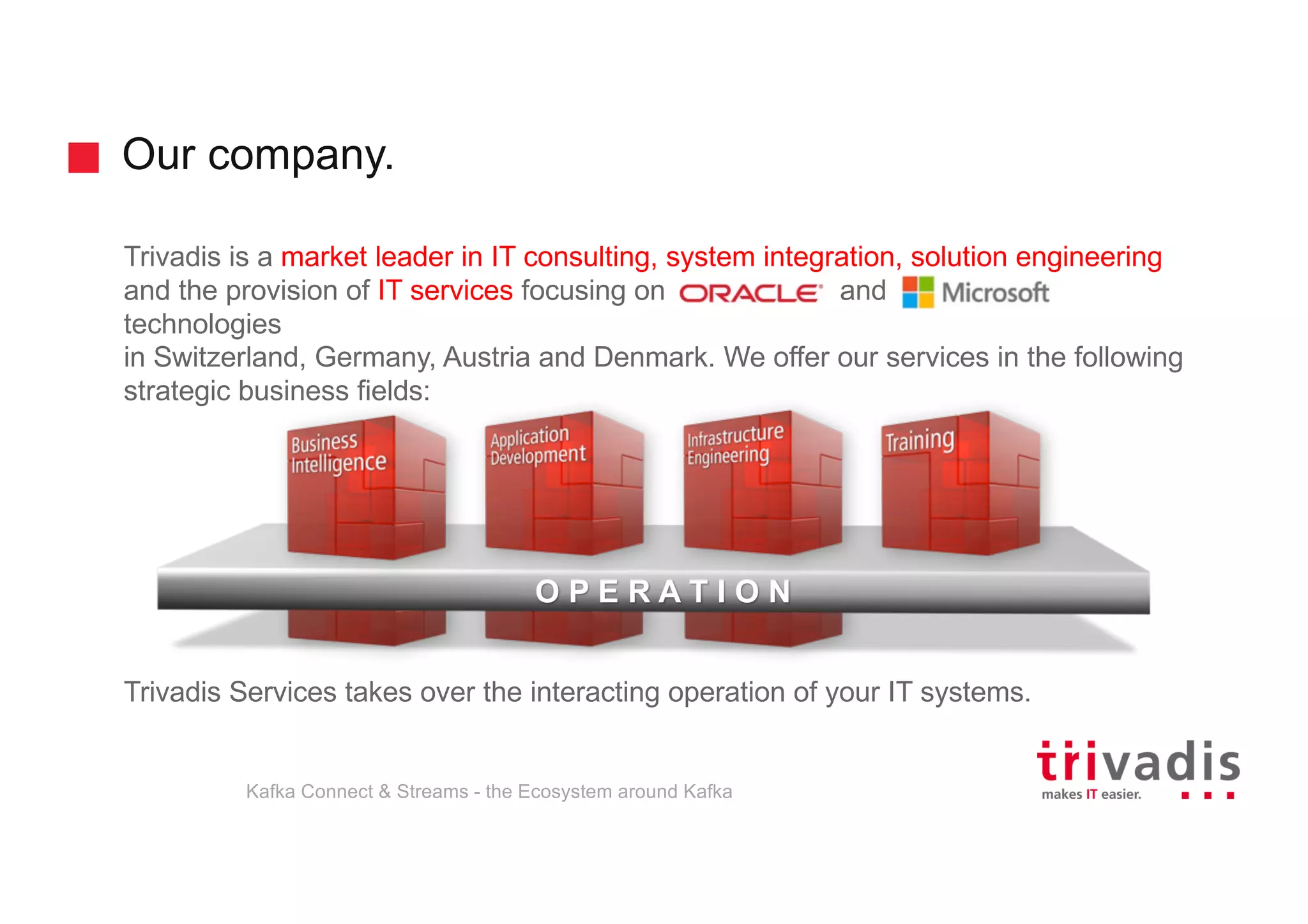

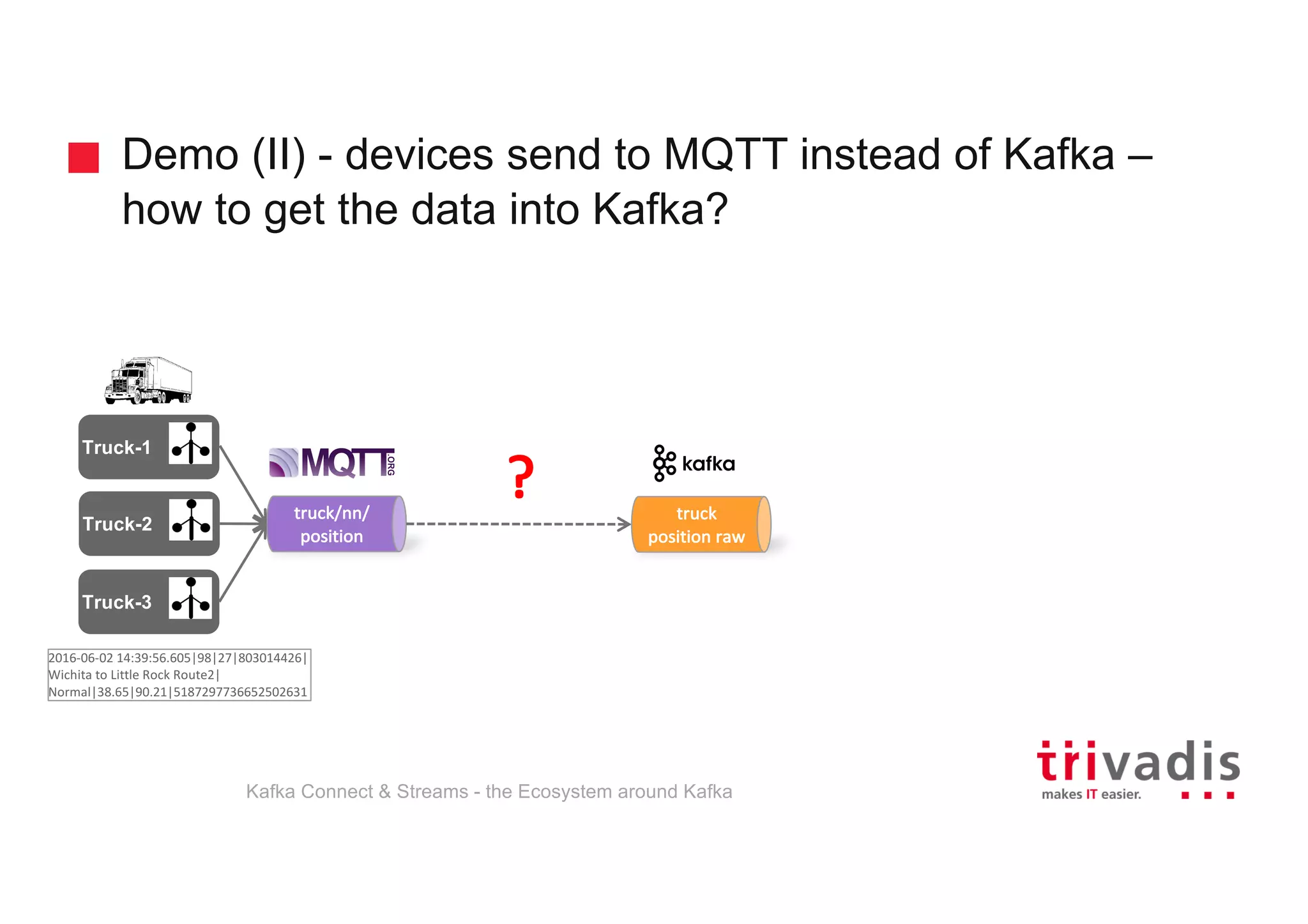

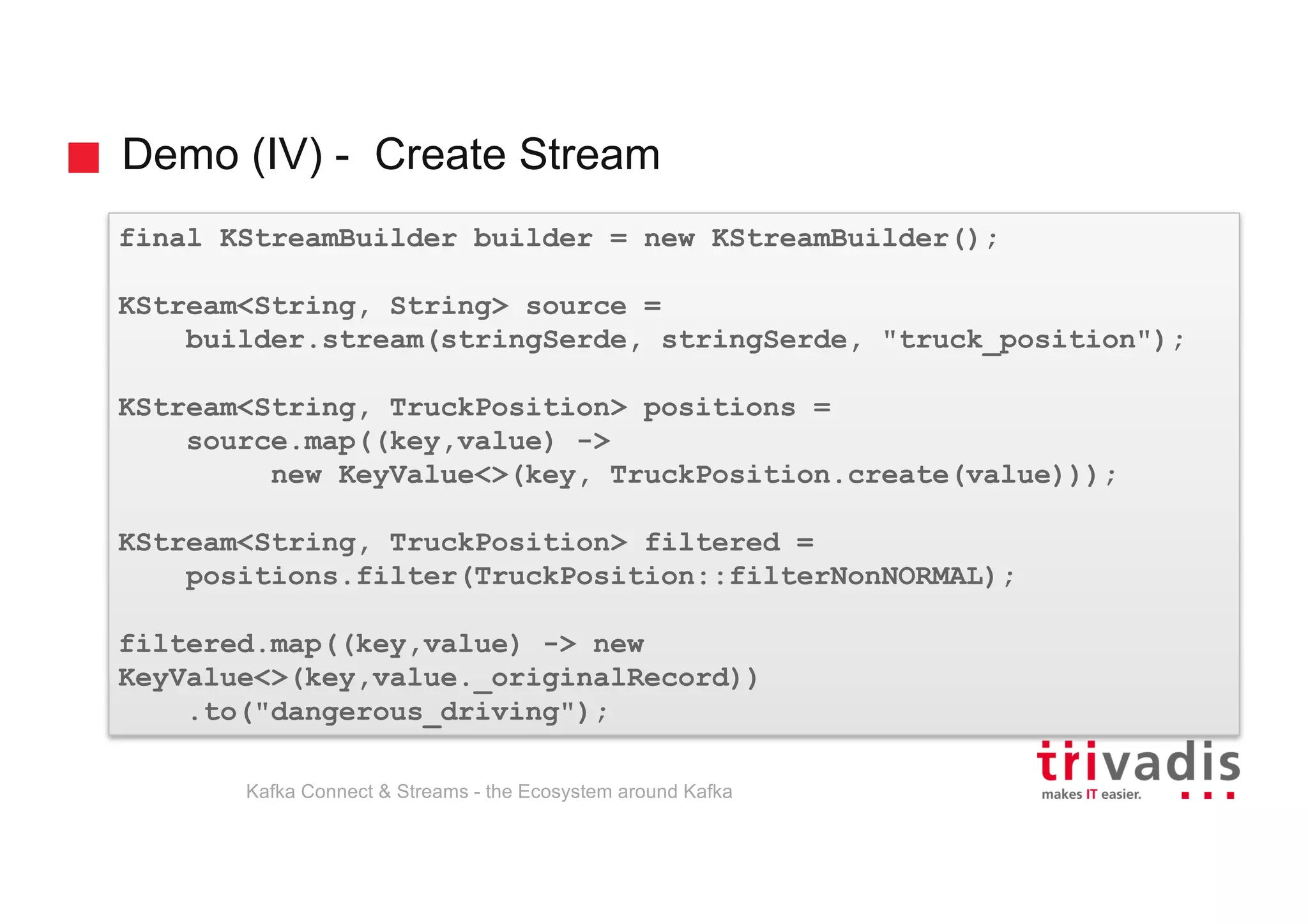

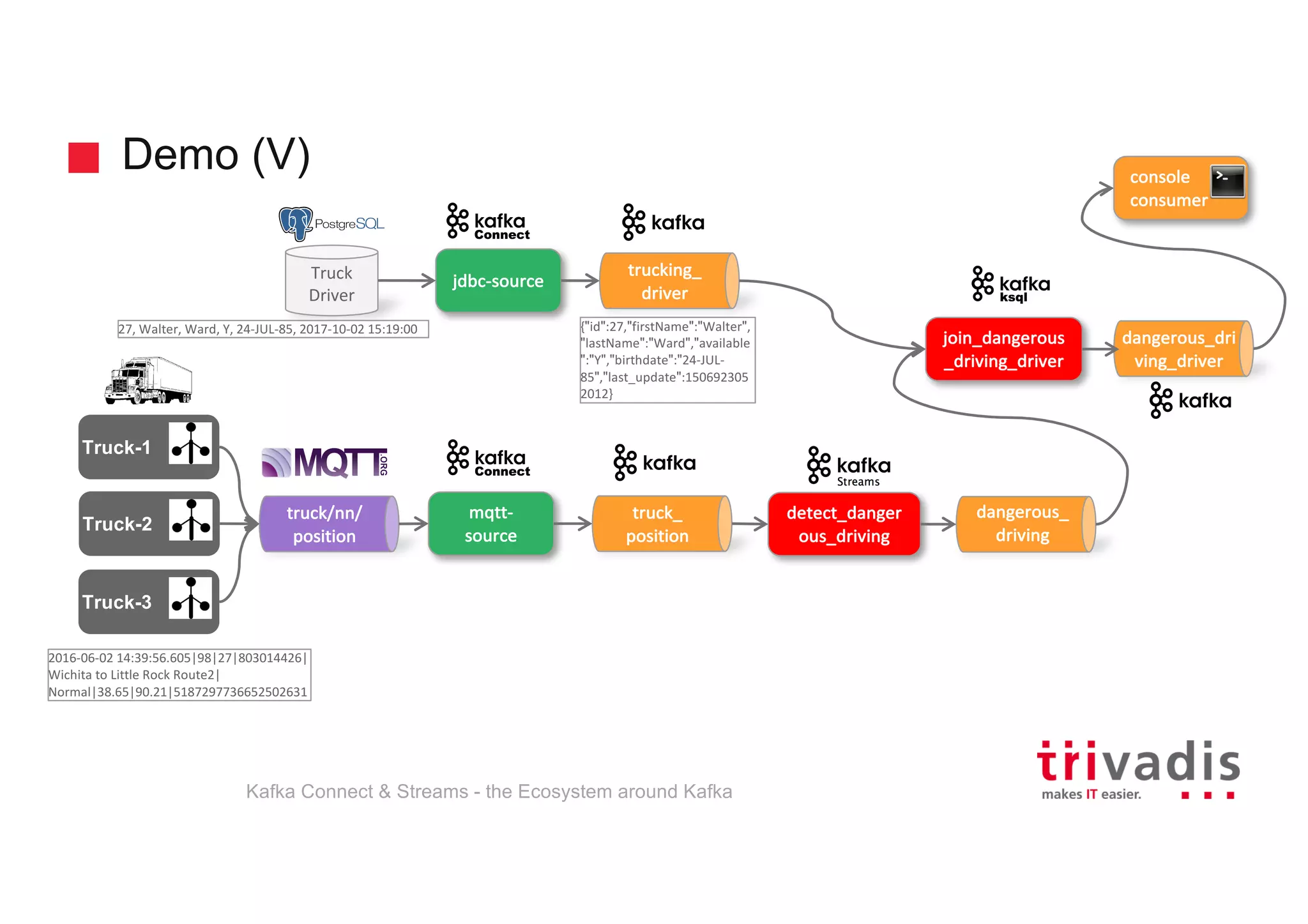

This document gives an overview of Apache Kafka and its surrounding ecosystem, focusing on two components: Kafka Connect and Kafka Streams. It presents the operational capabilities of Trivadis, a major IT consulting firm, and covers several aspects of Kafka, including its architecture, data retention, and transformations within the Kafka Connect framework. It also discusses practical implementation and the advantages of using Kafka for event streaming and event processing in software applications.