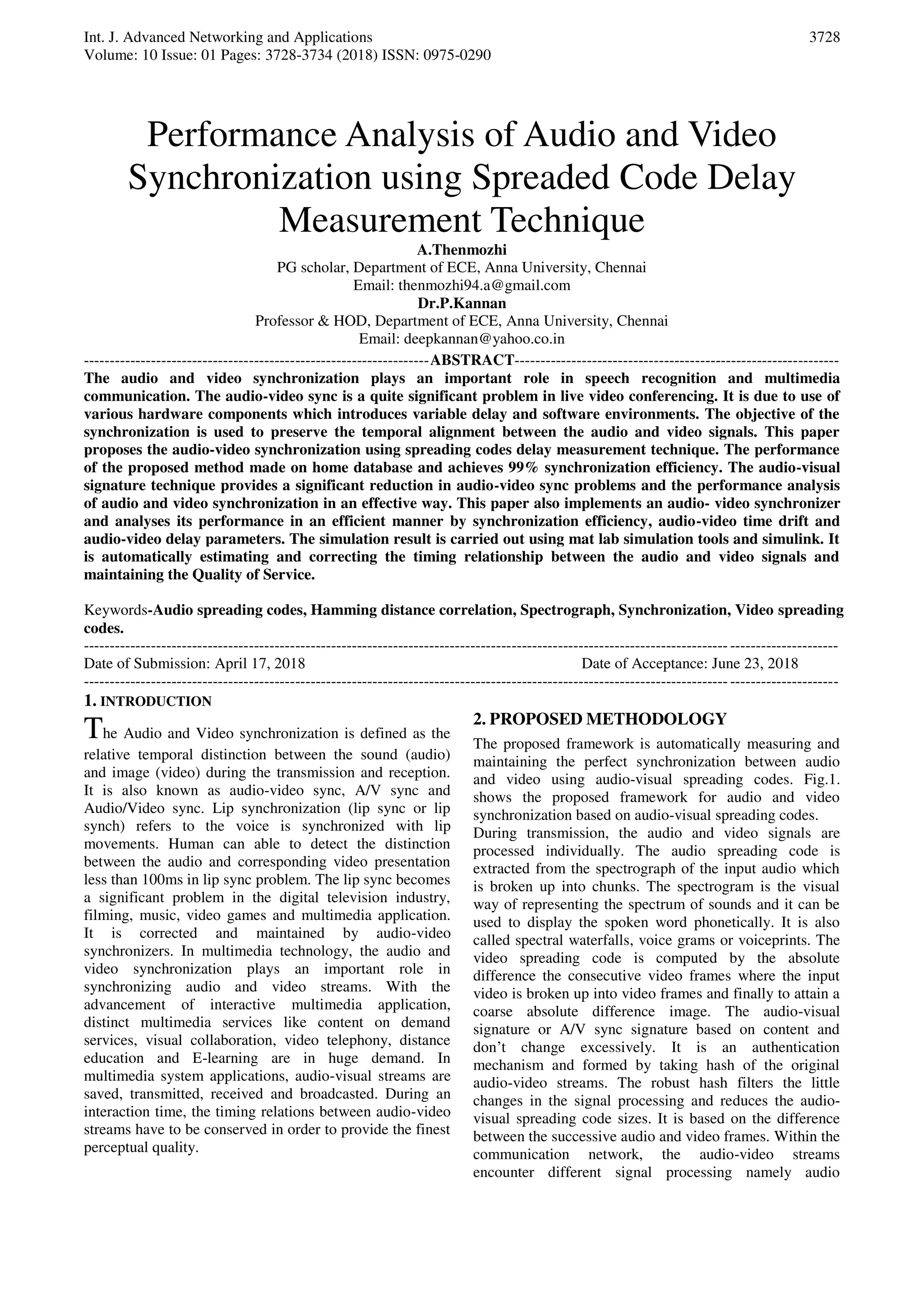

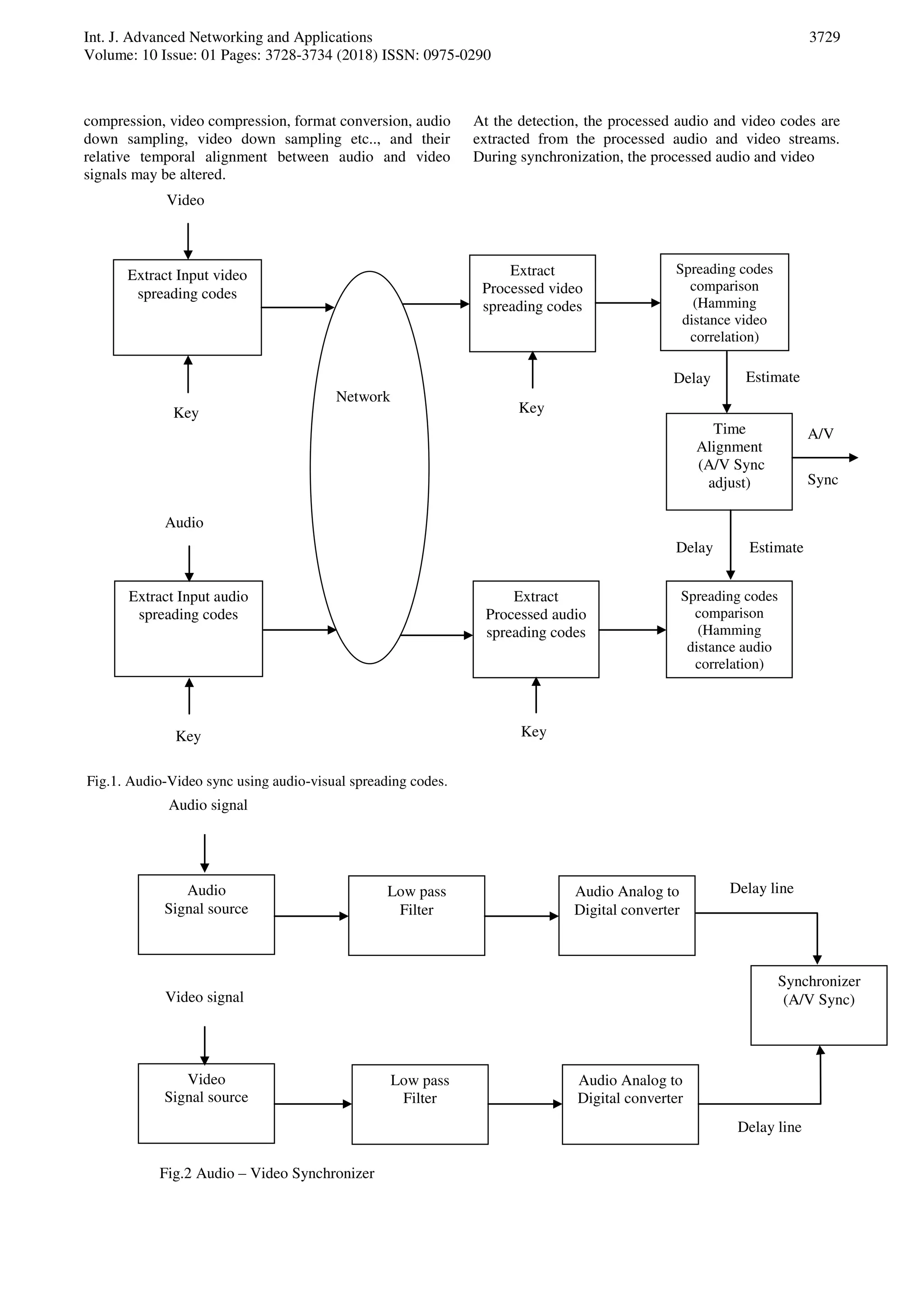

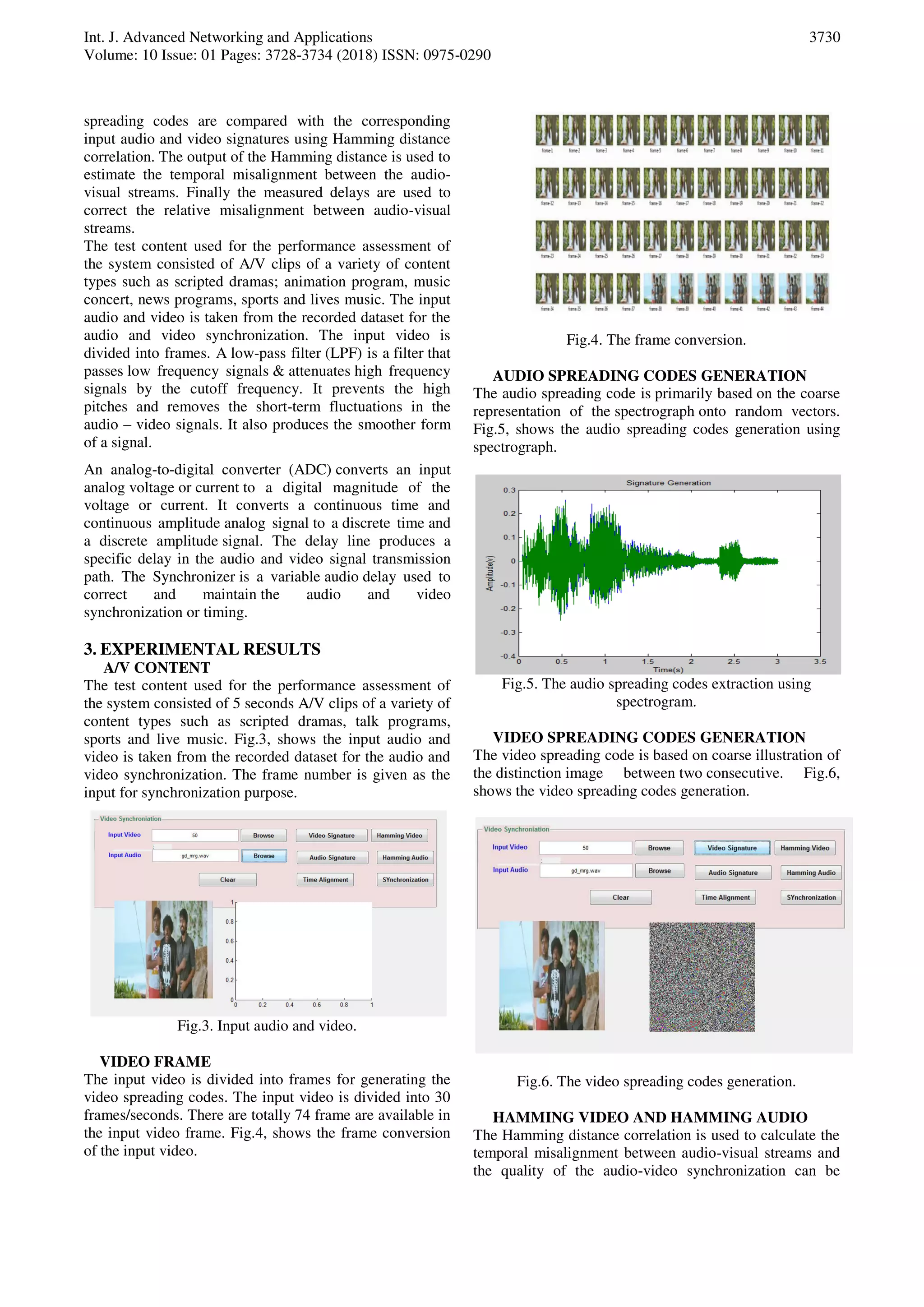

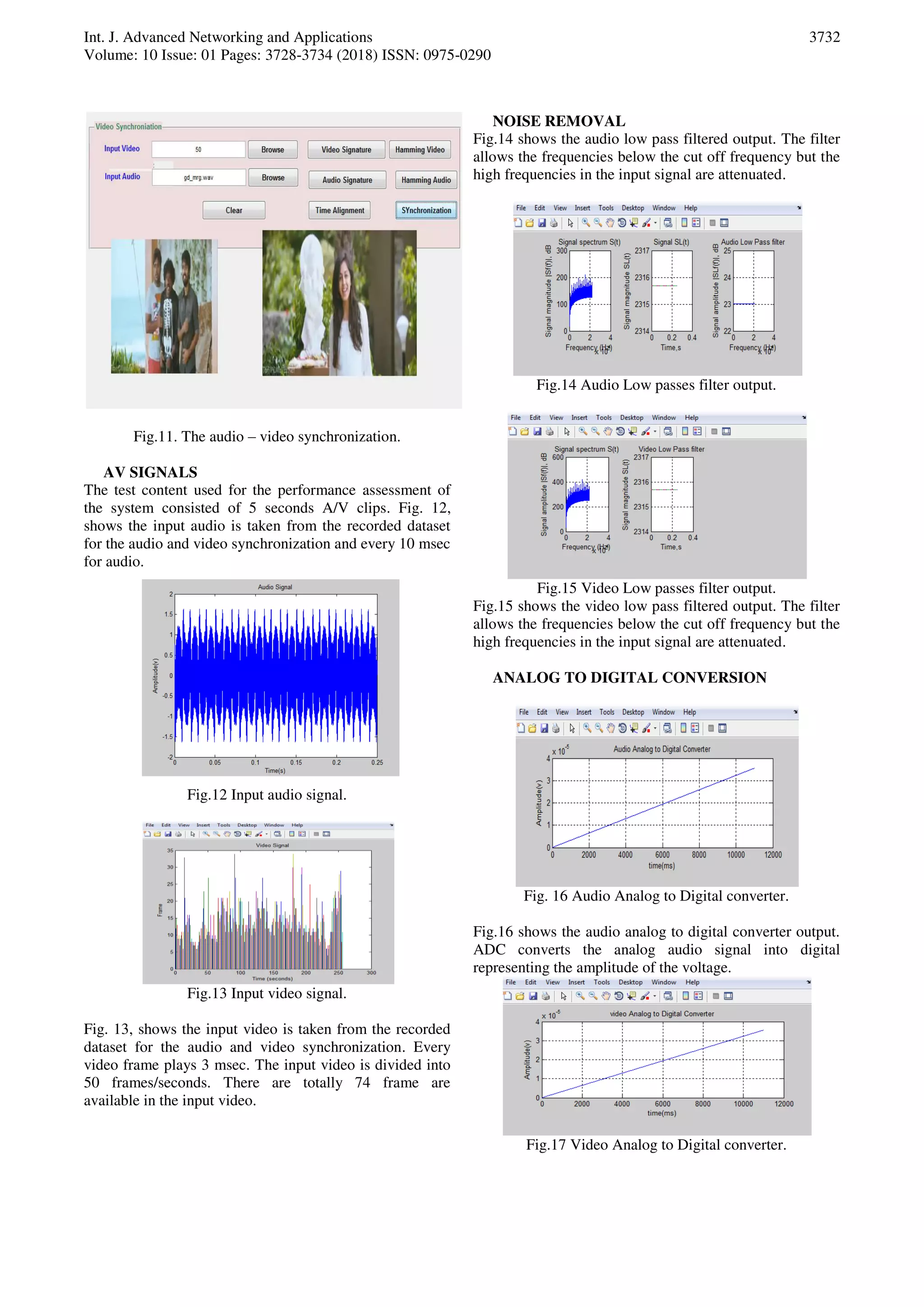

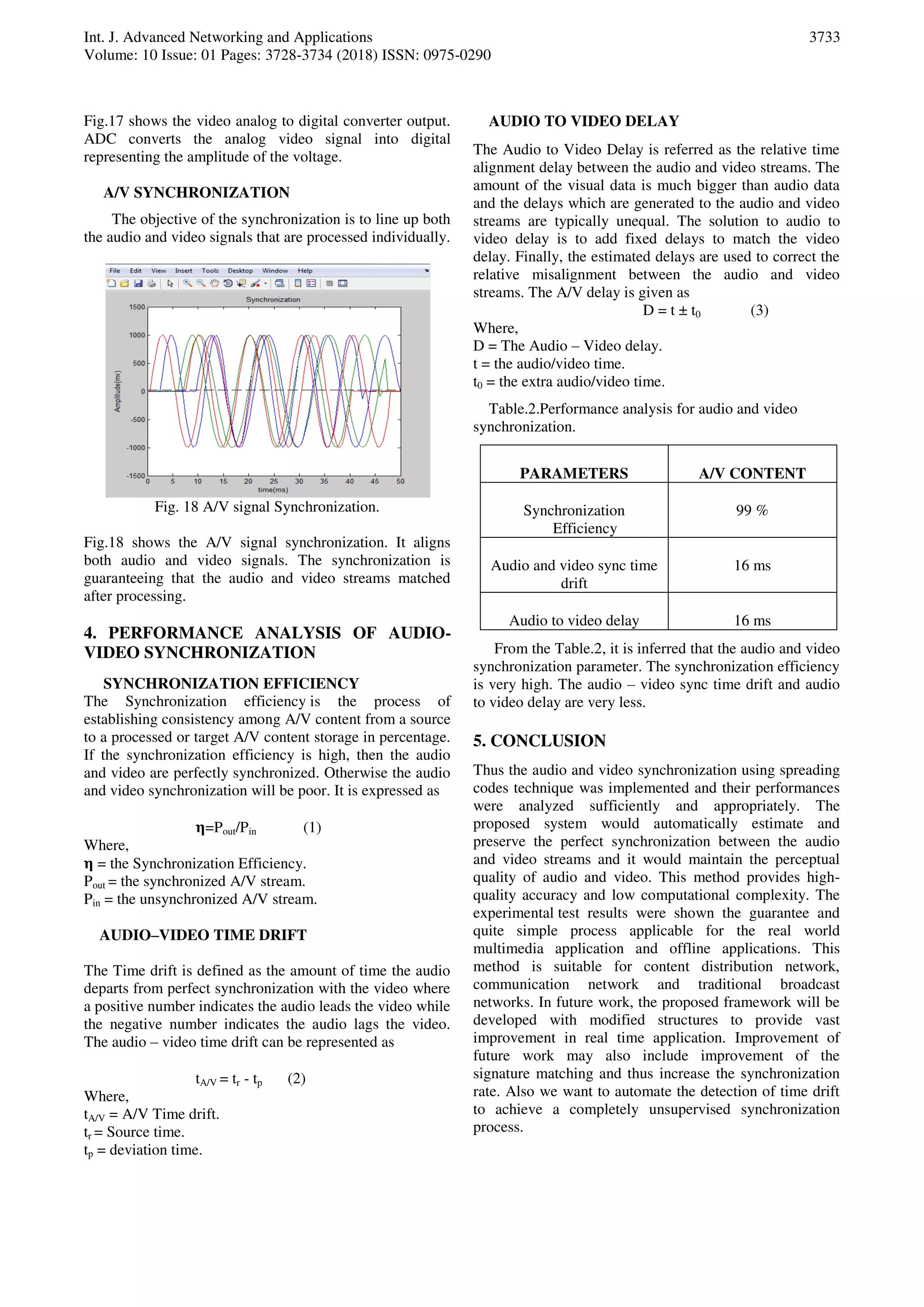

This paper presents an audio-video synchronization technique based on spread code delay measurement. It addresses the loss of synchronization caused by variable delays in multimedia communication, particularly in live video conferencing. In MATLAB simulations, the proposed method achieved a synchronization efficiency of 99%, correcting the temporal misalignment between the audio and video signals. The authors conclude that the approach is suitable for a range of multimedia applications, and they outline future work to further improve the synchronization process.
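
The summary does not spell out how the delay measurement works. As a rough illustration only, the sketch below assumes that the same ±1 pseudo-noise spreading code (here a PRBS7 m-sequence) is embedded in sampled marker tracks of both the audio and the video stream. It then takes the audio/video skew to be the difference between the offsets at which a sliding correlation with the code peaks. The helper names (`prbs7`, `embed`, `locate_code`, `av_skew`, `realign`), the noise model and all parameter values are hypothetical; this is not the authors' MATLAB implementation.

```python
import random


def prbs7(n_chips: int = 127, seed: int = 0x7F) -> list[int]:
    """Hypothetical spreading code: 7-bit maximal-length LFSR (PRBS7, taps 7 and 6) as +/-1 chips."""
    state = seed & 0x7F  # any non-zero 7-bit seed works
    chips = []
    for _ in range(n_chips):
        bit = (state ^ (state >> 1)) & 1   # XOR of the two tapped bits
        state = (state >> 1) | (bit << 6)  # shift right and feed the new bit in at the top
        chips.append(1 if bit else -1)
    return chips


def embed(code: list[int], offset: int, length: int, noise: float, rng: random.Random) -> list[float]:
    """Assumed marker track: Gaussian noise with the code added starting at `offset`."""
    track = [rng.gauss(0.0, noise) for _ in range(length)]
    for i, chip in enumerate(code):
        track[offset + i] += chip
    return track


def locate_code(track: list[float], code: list[int]) -> int:
    """Offset at which the sliding correlation between `track` and `code` peaks."""
    best_offset, best_score = 0, float("-inf")
    for offset in range(len(track) - len(code) + 1):
        score = sum(chip * track[offset + i] for i, chip in enumerate(code))
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset


def av_skew(audio_track: list[float], video_track: list[float], code: list[int]) -> int:
    """Relative delay in samples; a positive value means the video lags the audio."""
    return locate_code(video_track, code) - locate_code(audio_track, code)


def realign(track: list[float], skew: int) -> list[float]:
    """Shift a lagging track earlier by `skew` samples, zero-padding the tail.

    A non-positive skew returns an unchanged copy; in that case realign the
    other track by -skew instead.
    """
    if skew <= 0:
        return list(track)
    return track[skew:] + [0.0] * skew


if __name__ == "__main__":
    rng = random.Random(42)
    code = prbs7()
    audio = embed(code, offset=50, length=400, noise=0.5, rng=rng)
    video = embed(code, offset=83, length=400, noise=0.5, rng=rng)  # video lags by 33 samples
    skew = av_skew(audio, video, code)
    print(f"estimated skew: {skew} samples")
    print("code offset after realignment:", locate_code(realign(video, skew), code))
```

An m-sequence is a natural choice for this kind of sketch because its autocorrelation has a single sharp peak, so the correlation maximum stands well clear of the noise and of the code's own sidelobes. The measured skew can then be used to delay the leading stream (or advance the lagging one) by the same number of samples.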

![Final page of the paper (Int. J. Advanced Networking and Applications, Volume 10, Issue 01, 2018)](https://image.slidesharecdn.com/v10i1-10-180809121253/75/Performance-Analysis-of-Audio-and-Video-Synchronization-using-Spreaded-Code-Delay-Measurement-Technique-7-2048.jpg)

Int. J. Advanced Networking and Applications, Volume 10, Issue 01, pp. 3728-3734 (2018), ISSN: 0975-0290

6. ACKNOWLEDGMENT

First, I thank the Lord Almighty for giving me the knowledge to complete the survey. I would also like to thank my professors, colleagues, family and friends, who encouraged and helped me in preparing this paper.

Author Details

A. Thenmozhi (S. Anbazhagan) completed her B.E. in Electronics and Communication Engineering at Anna University, Chennai, in 2016. She has published two papers in national and international conference proceedings. Her areas of interest include electronic system design, signal processing, image processing and digital communication.

Dr. P. Kannan (Pauliah Nadar Kannan) received the B.E. degree from Manonmaniam Sundaranar University, Tirunelveli, India, in 2000, and the M.E. and Ph.D. degrees from Anna University, Chennai, Tamil Nadu, India, in 2007 and 2015, respectively. He is a Professor in the Department of Electronics and Communication Engineering, PET Engineering College, Vallioor, Tirunelveli District, Tamil Nadu, India. His current research interests include computer vision, biometrics and very-large-scale integration (VLSI) architectures.