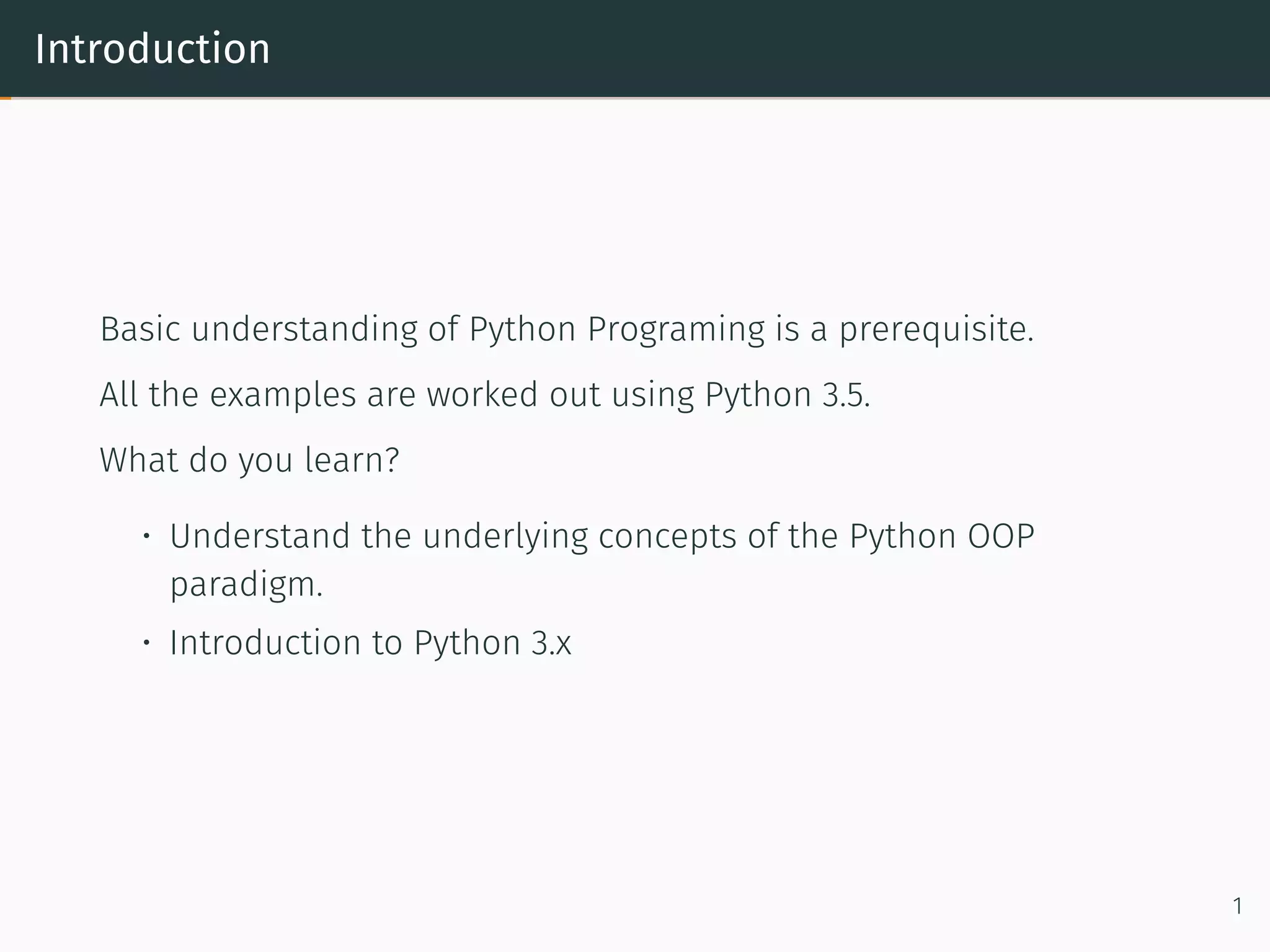

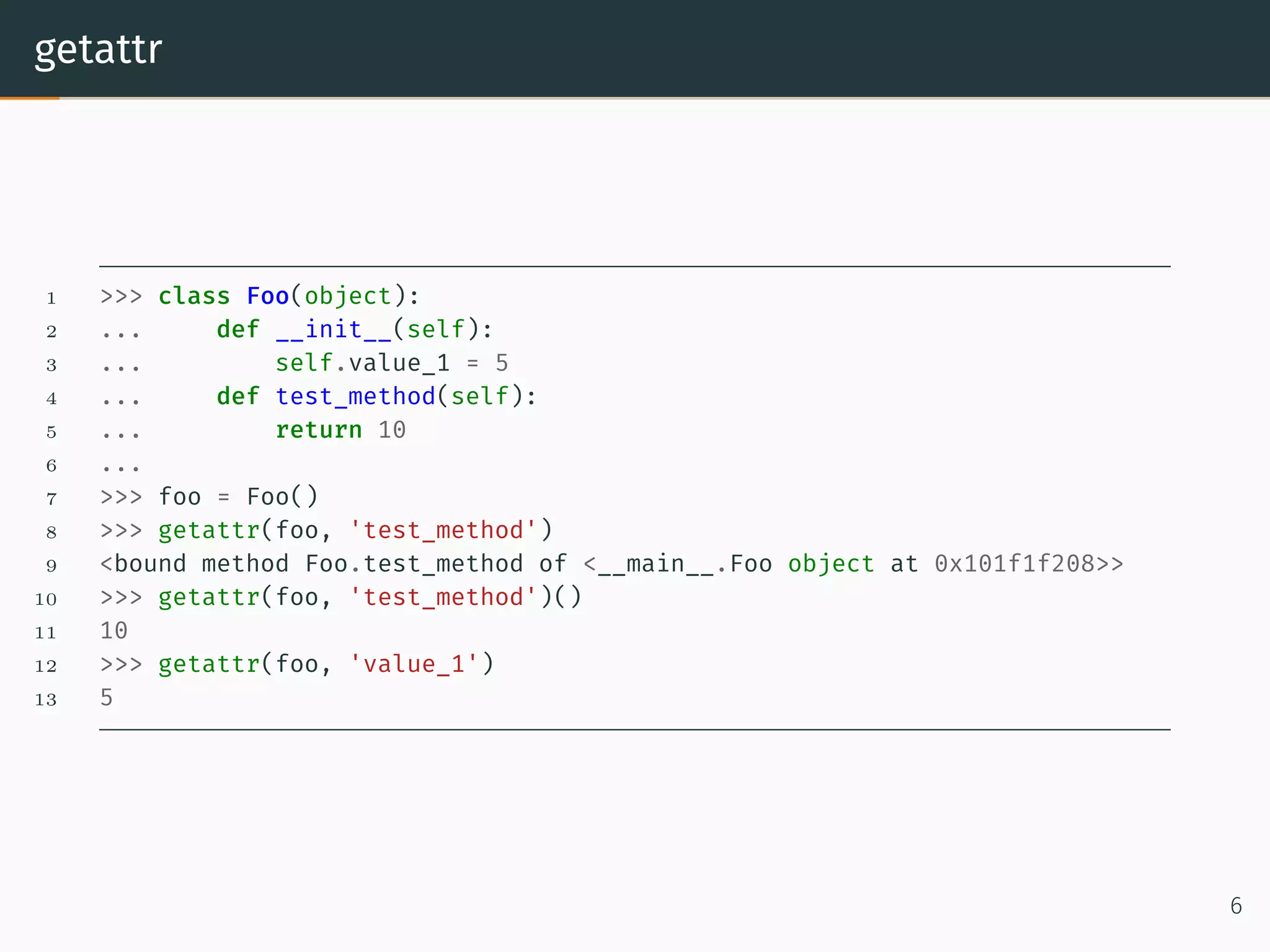

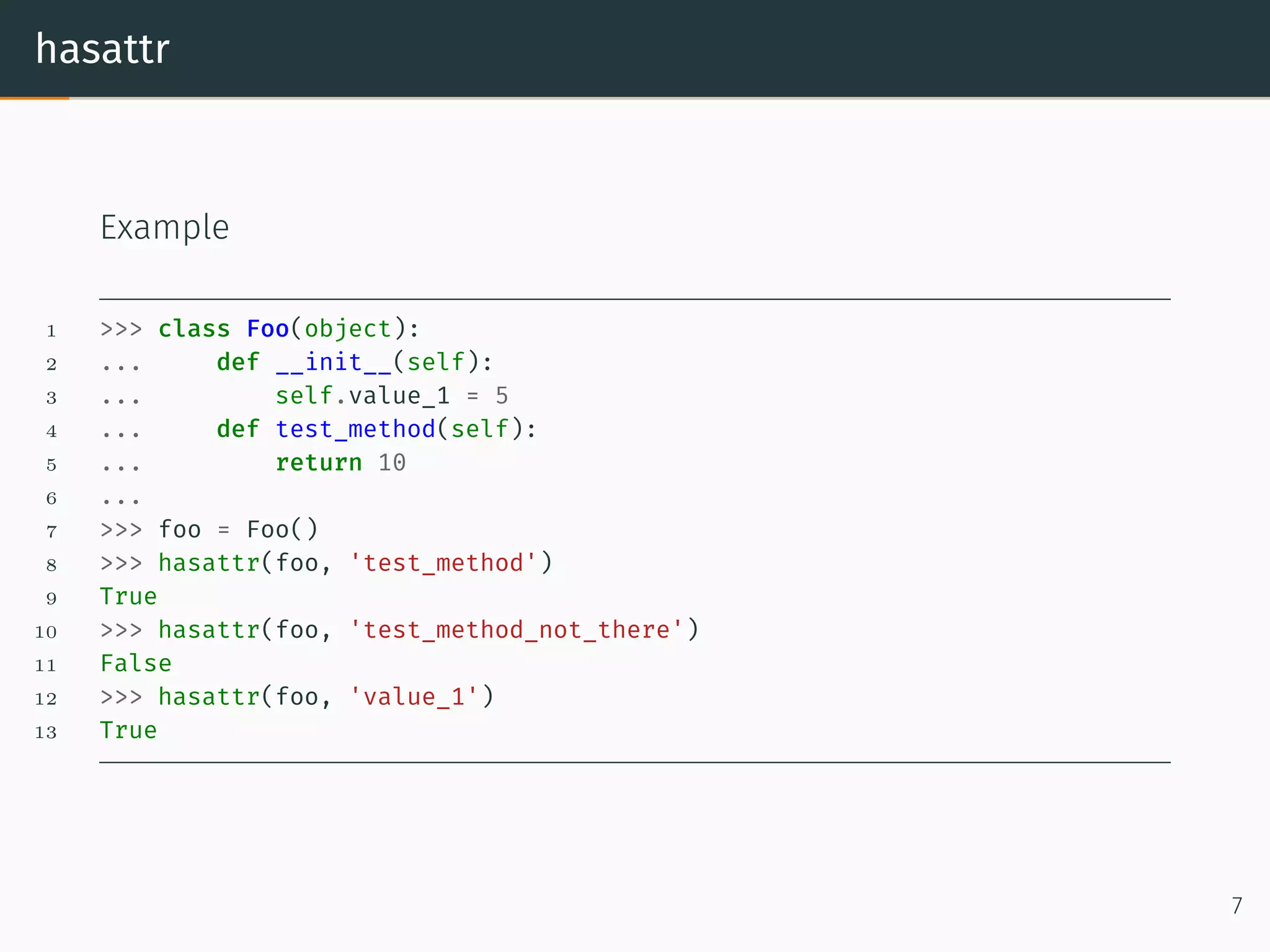

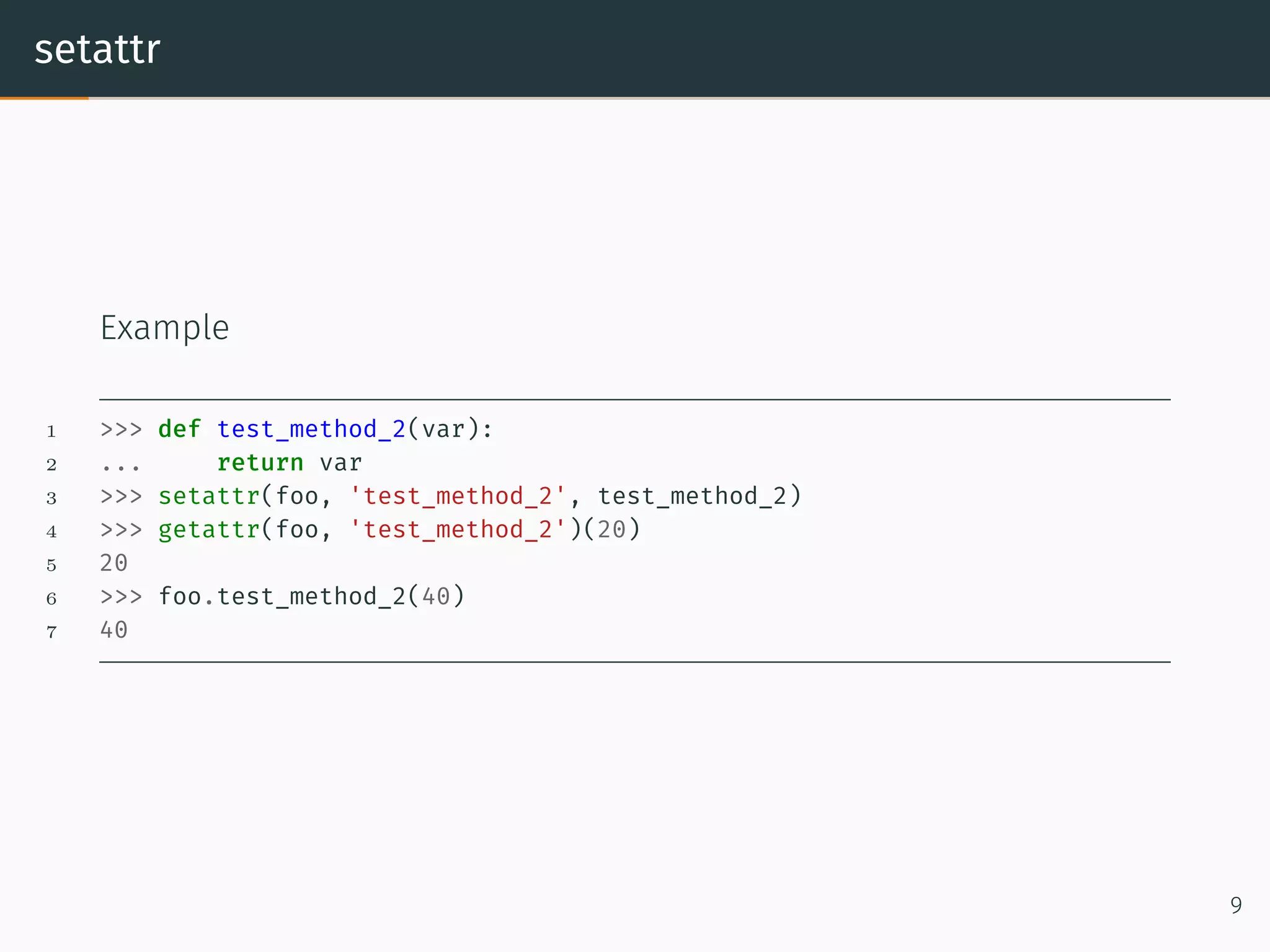

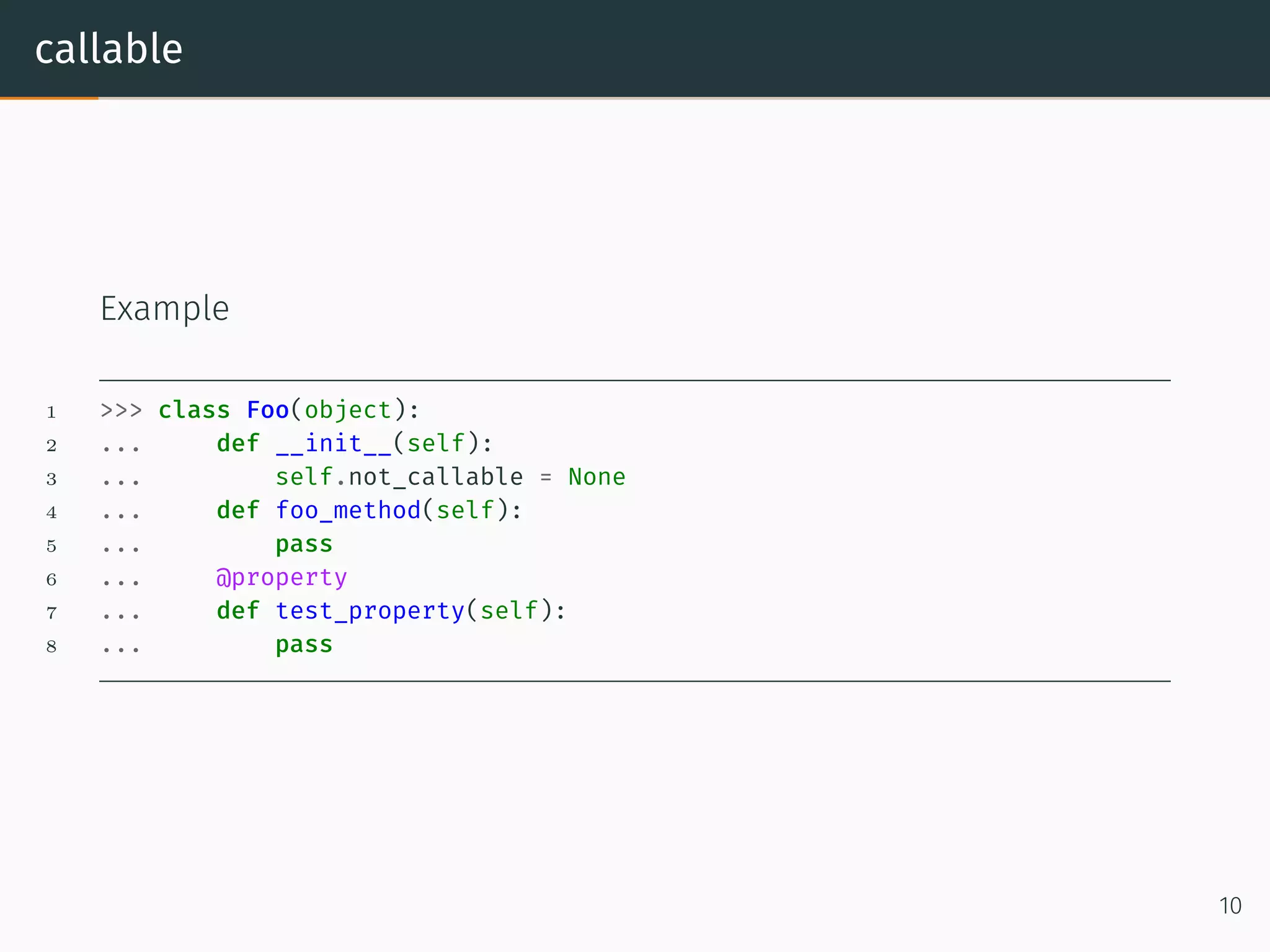

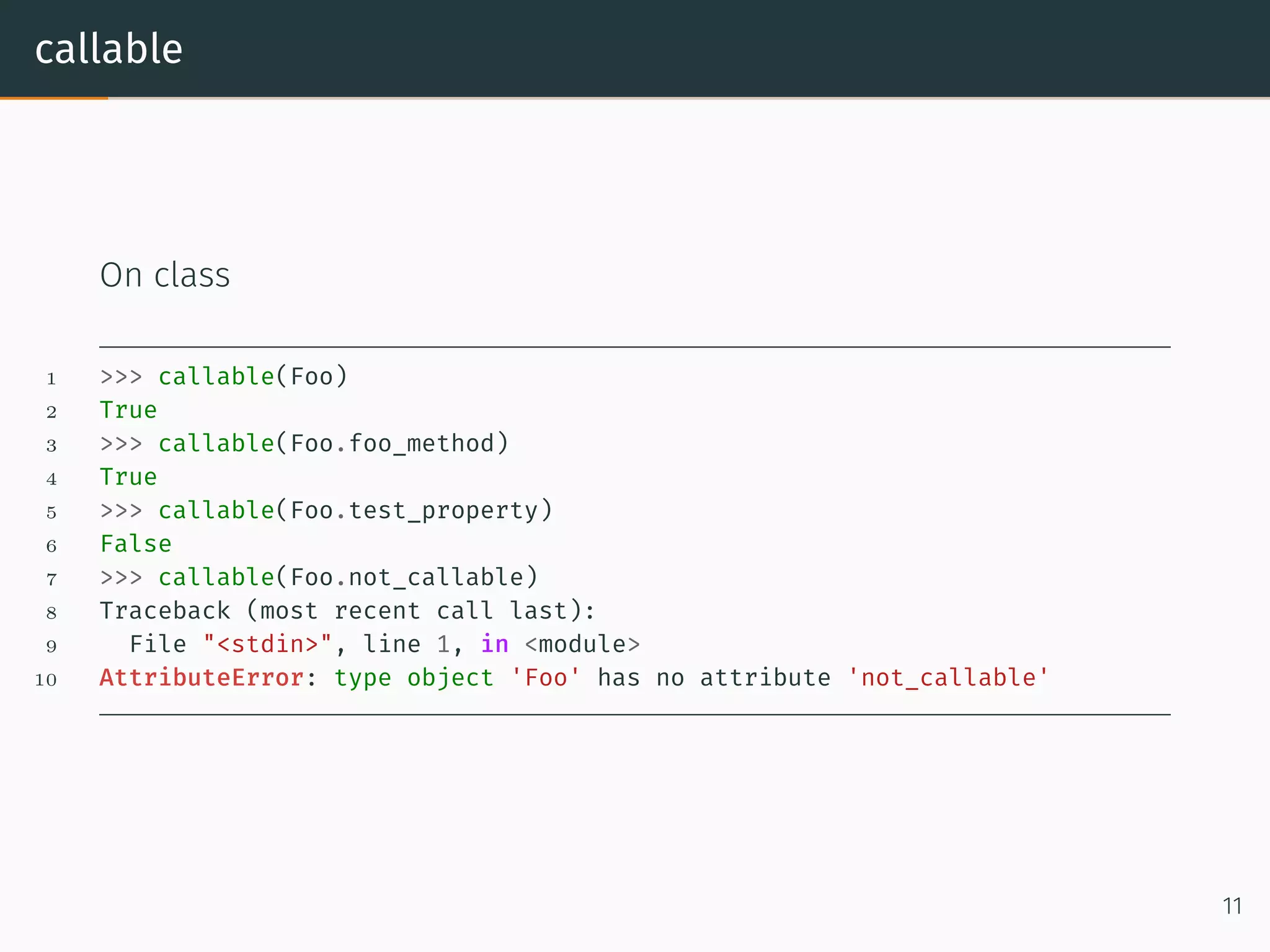

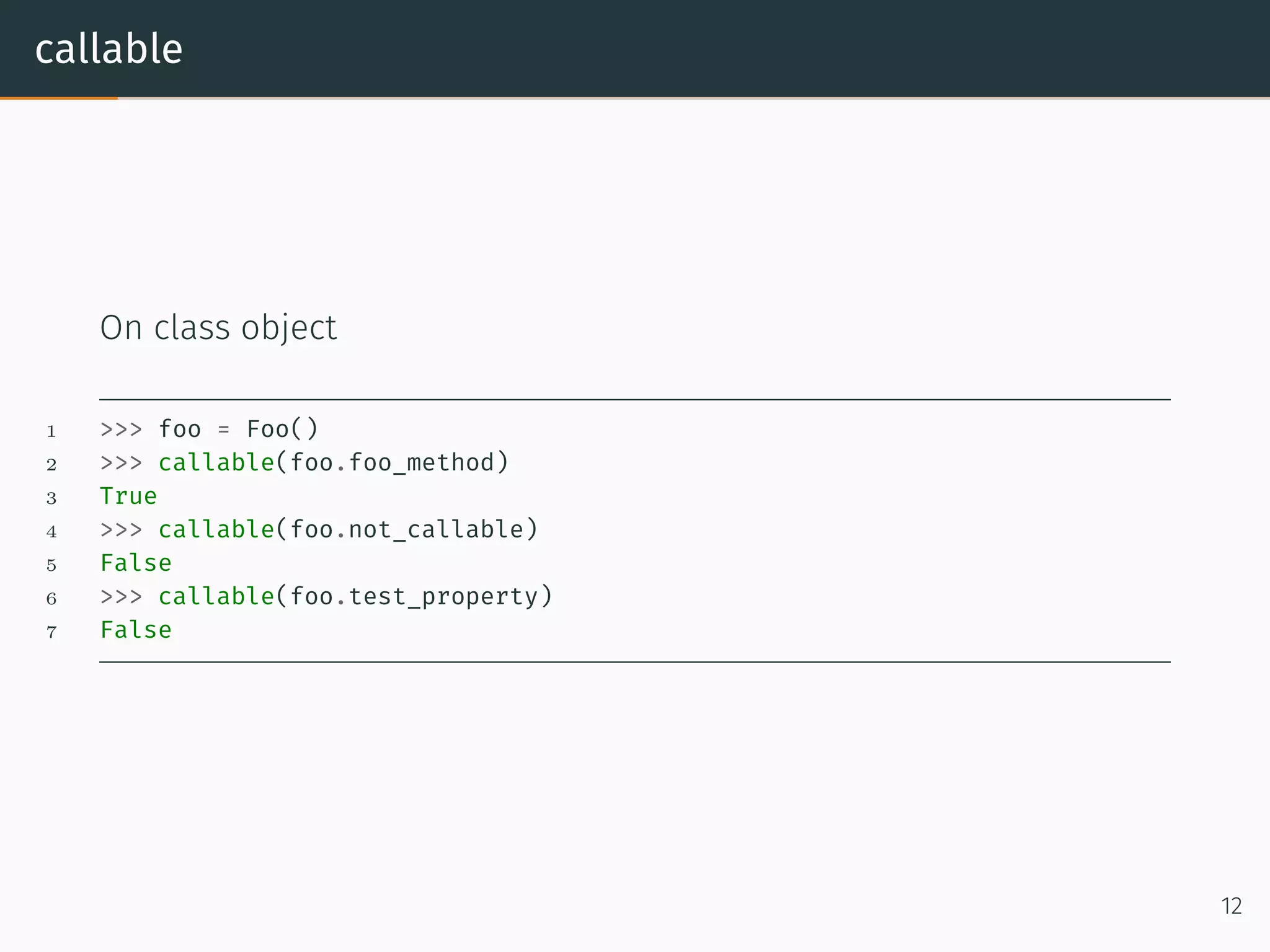

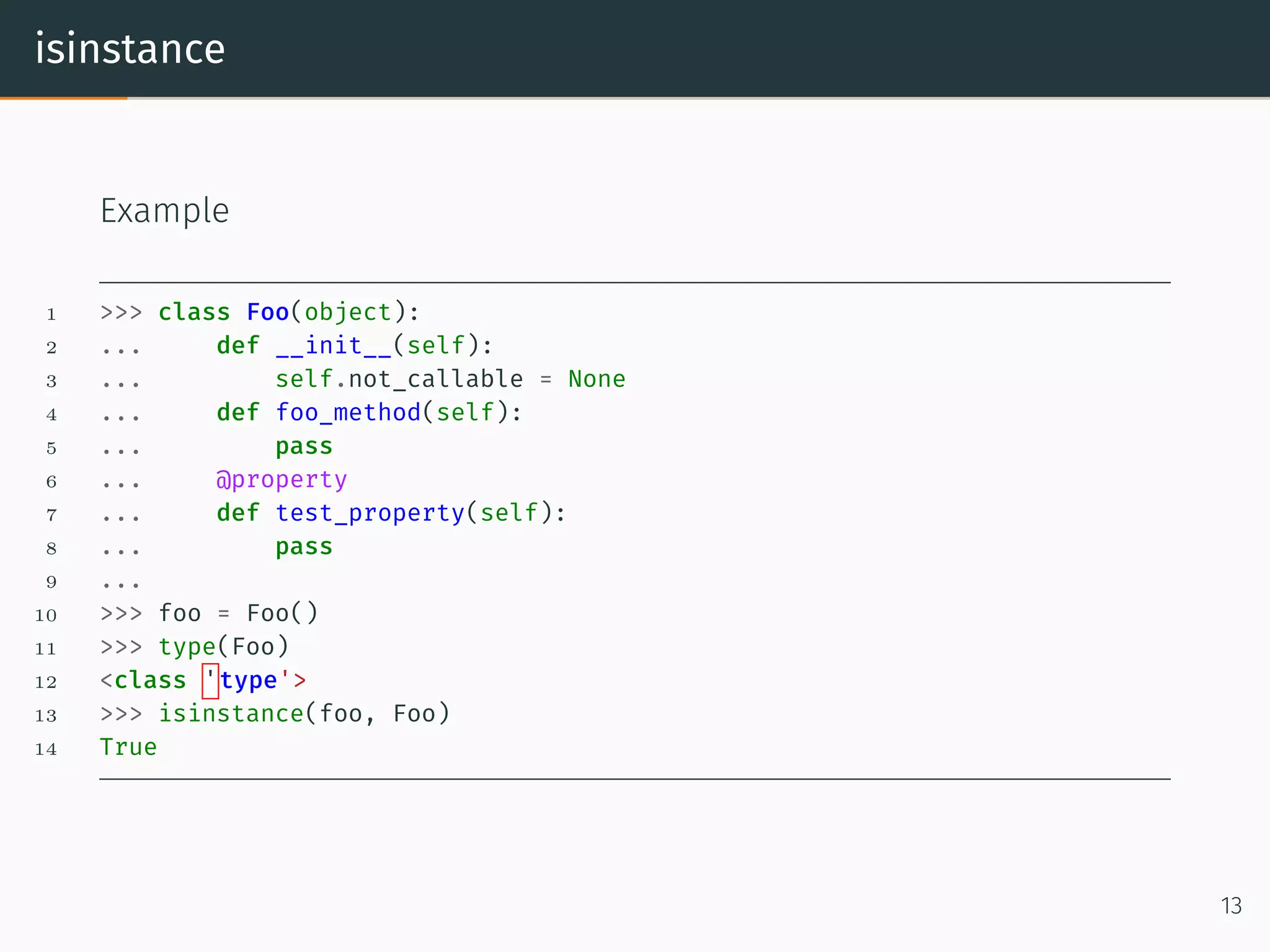

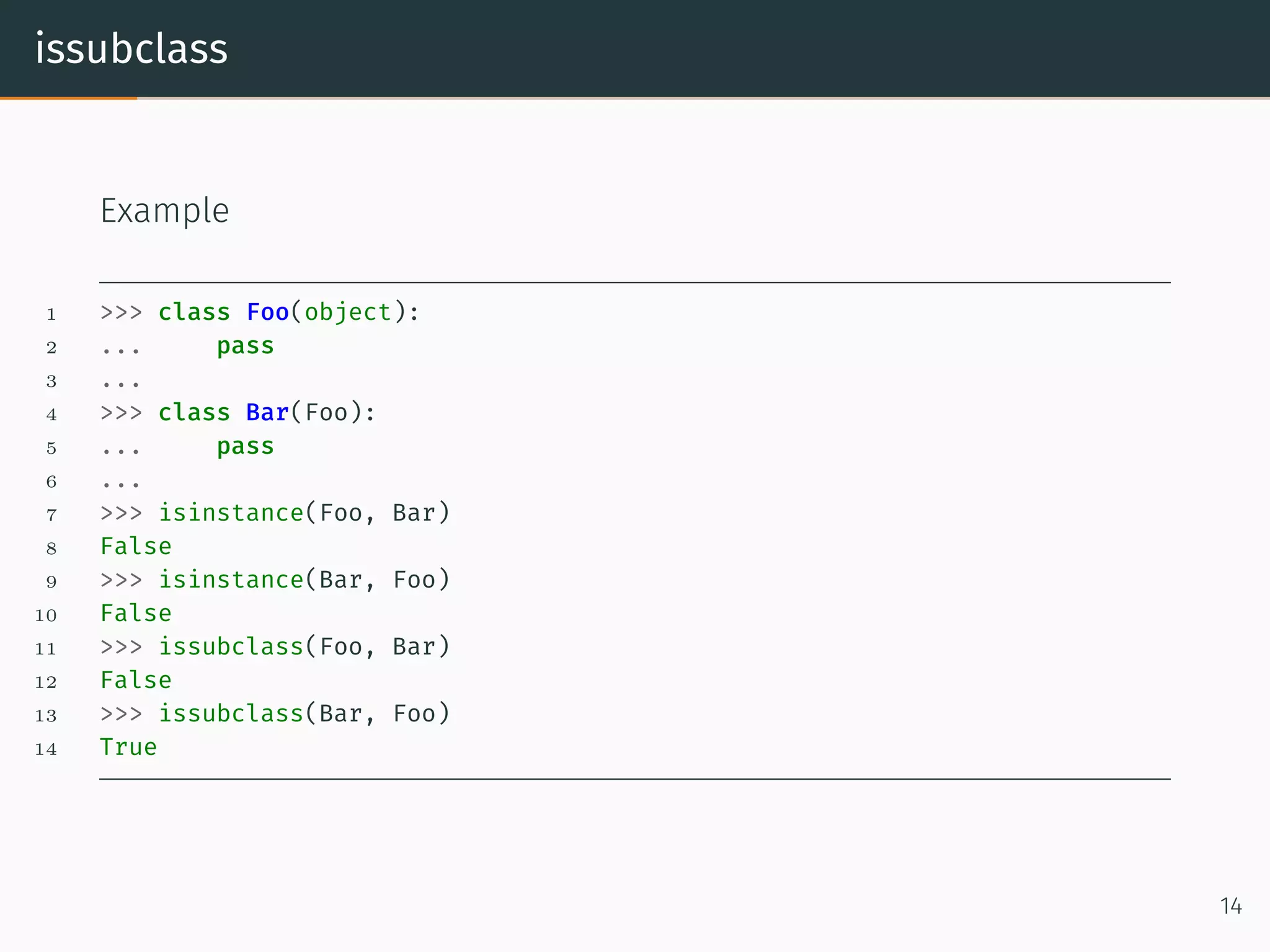

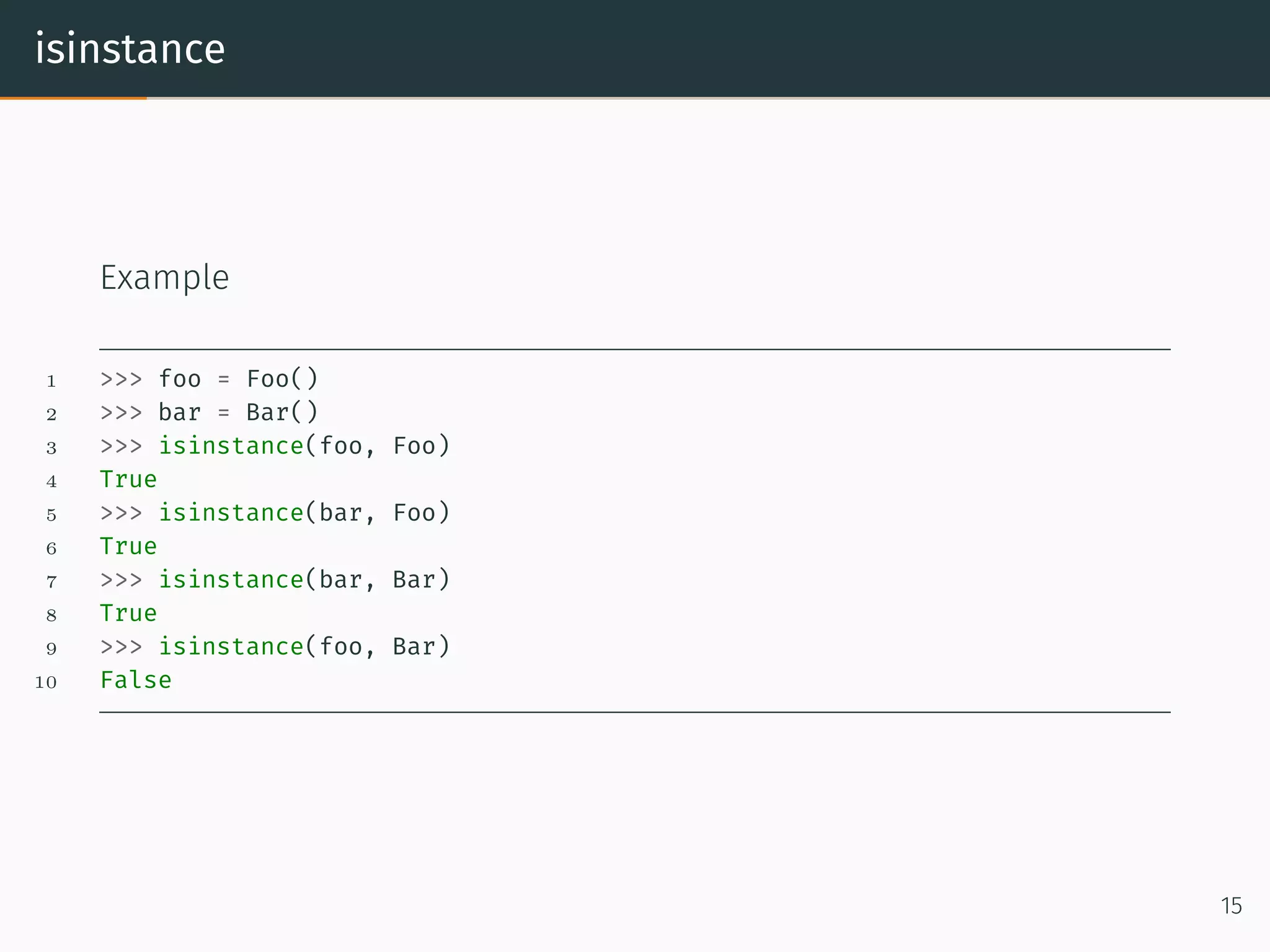

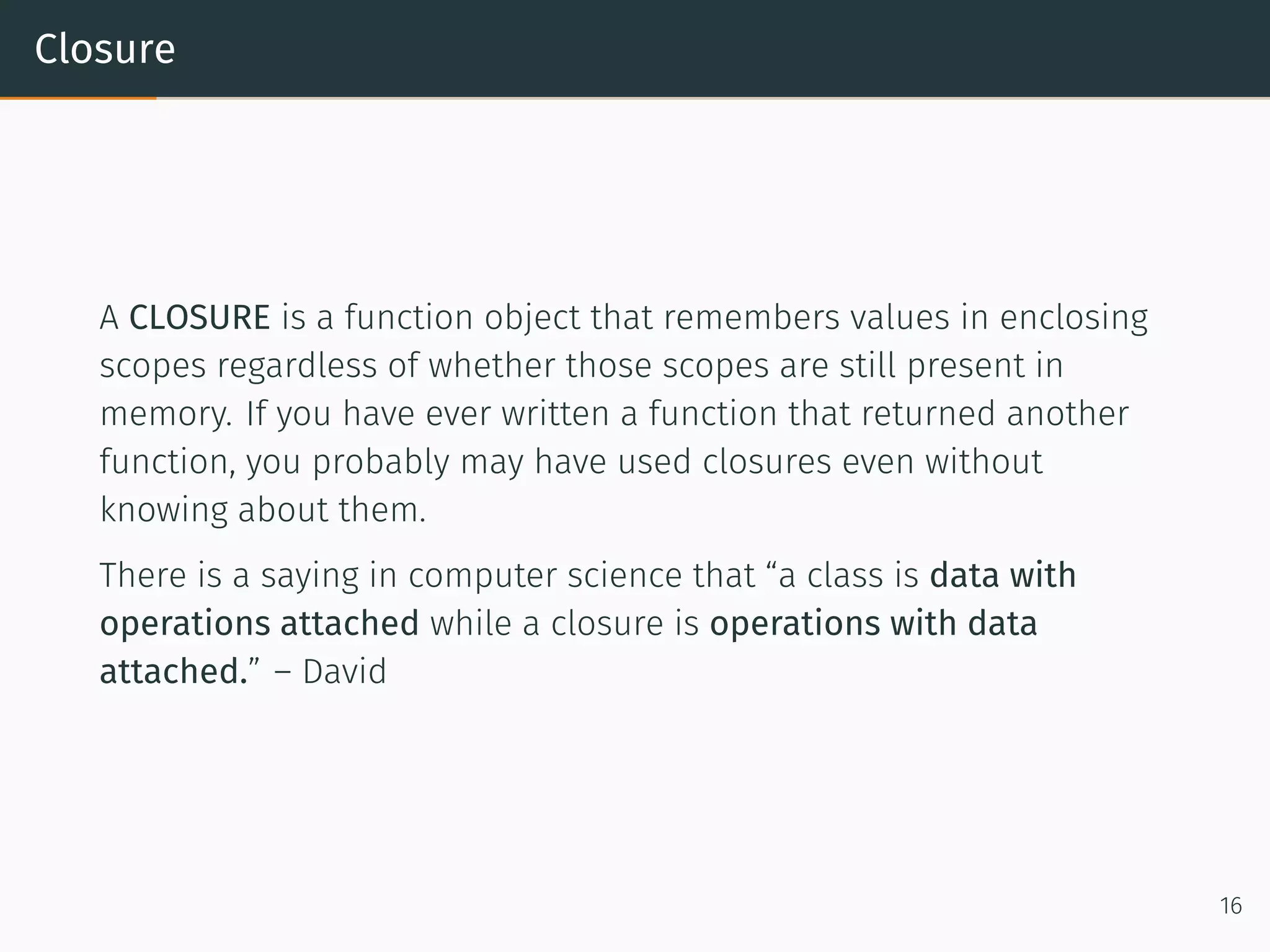

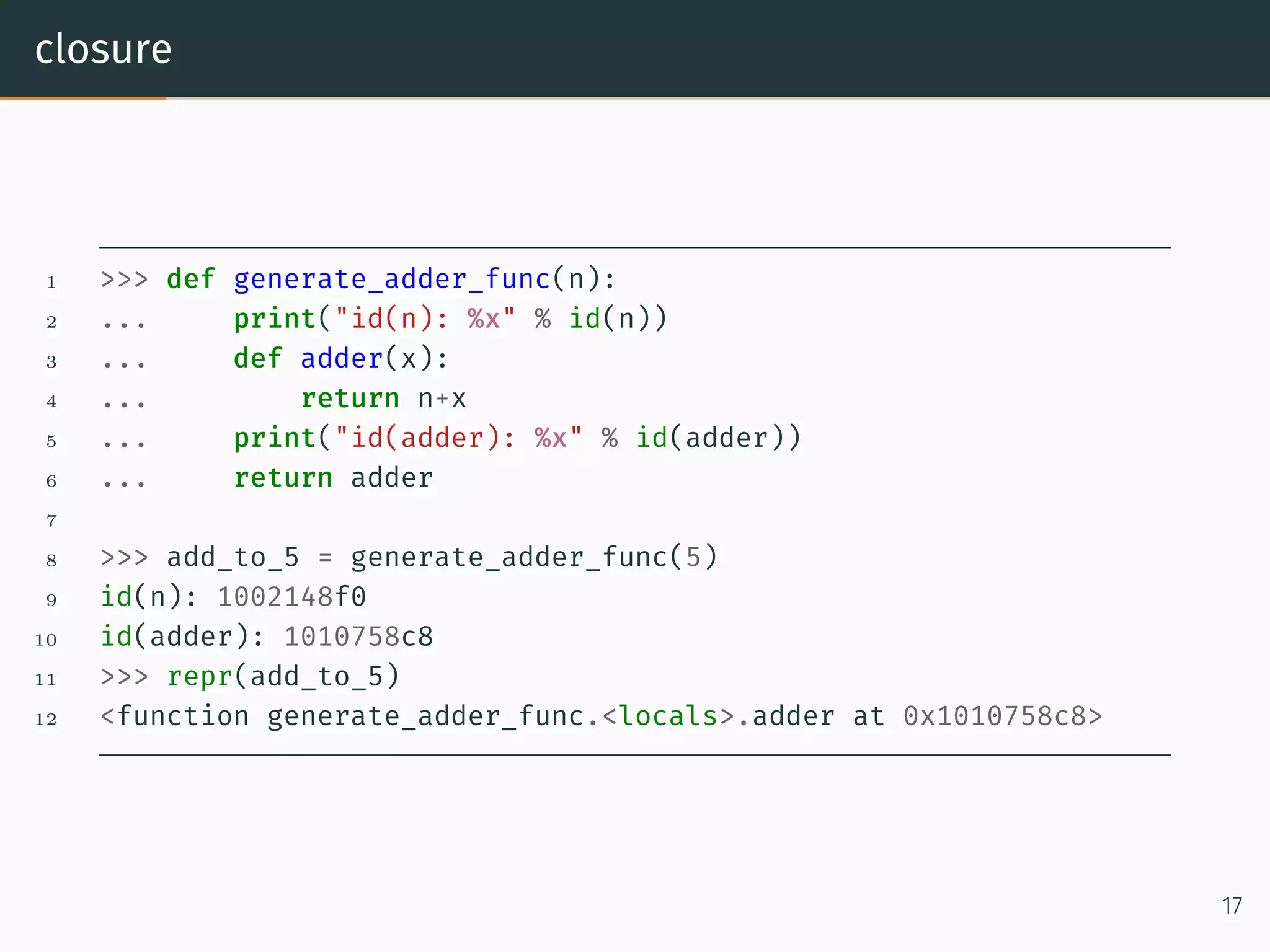

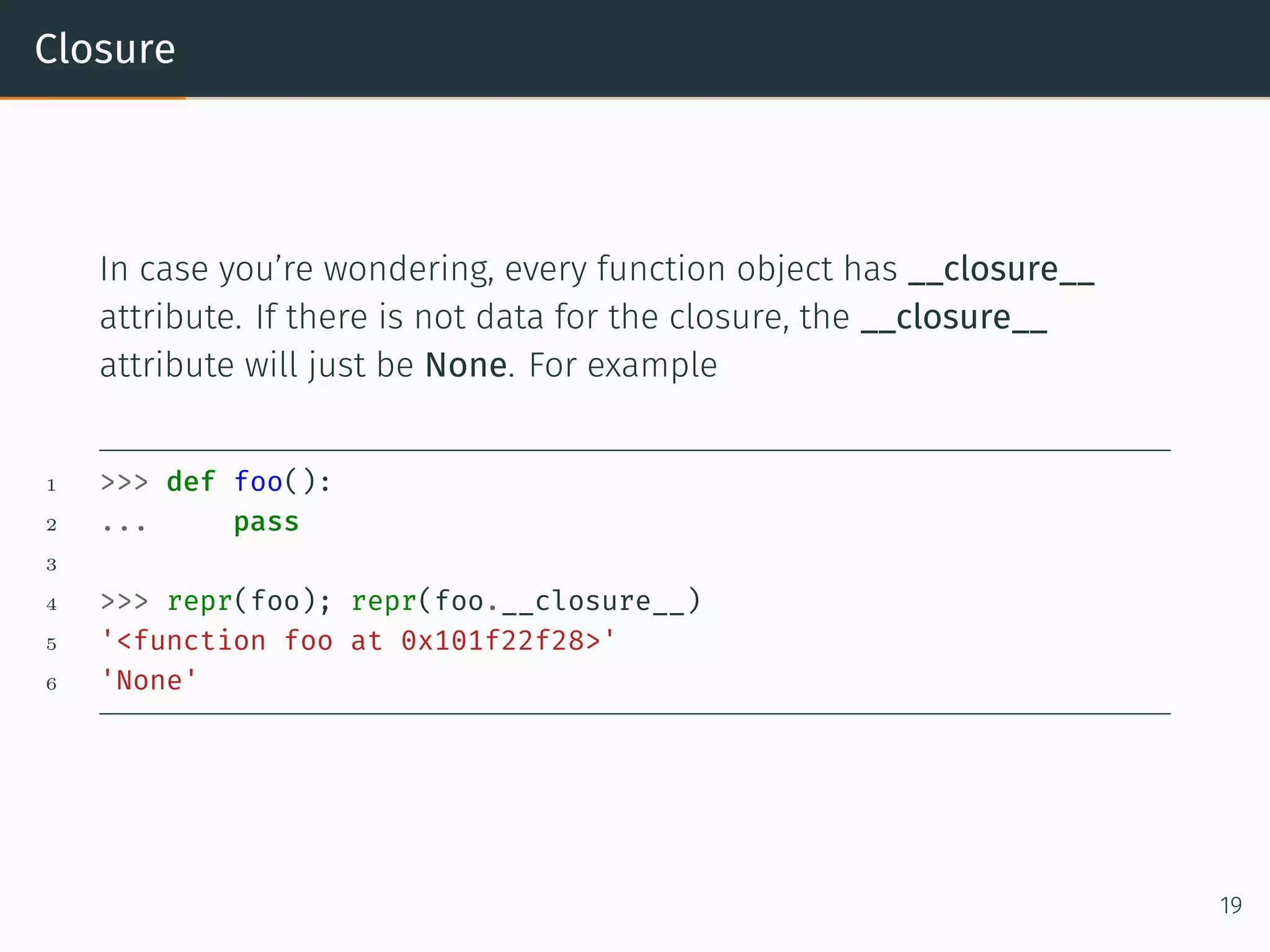

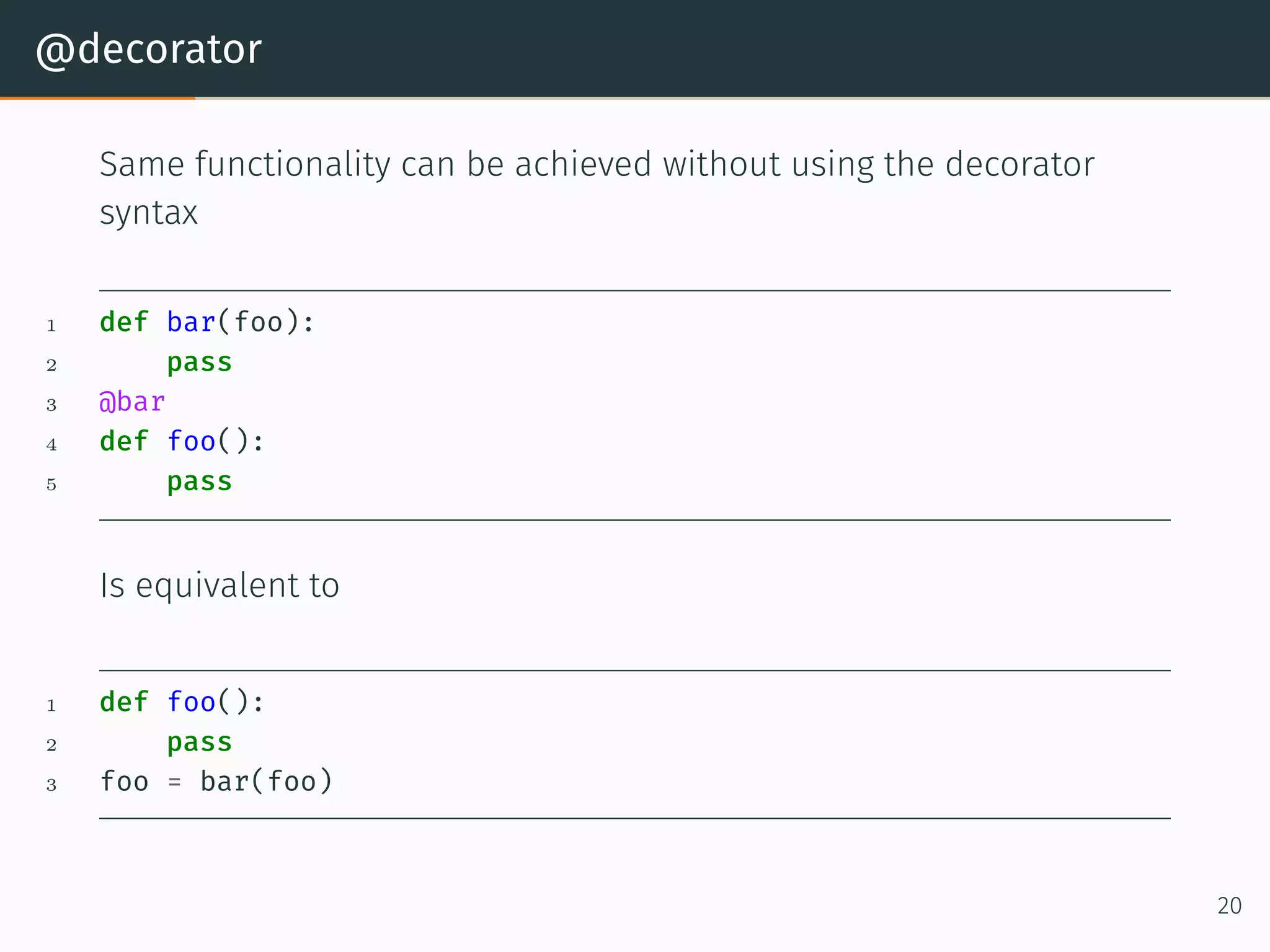

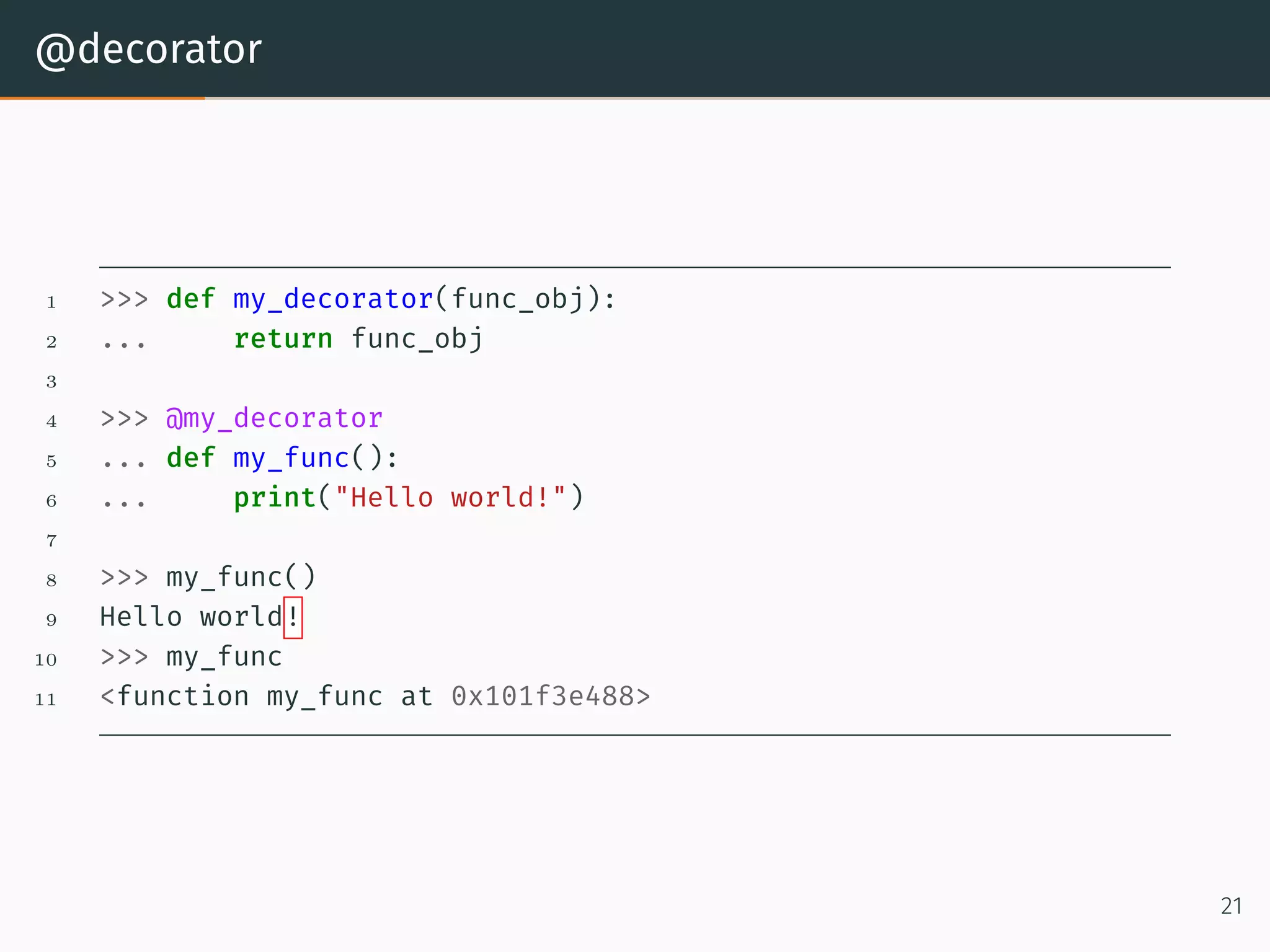

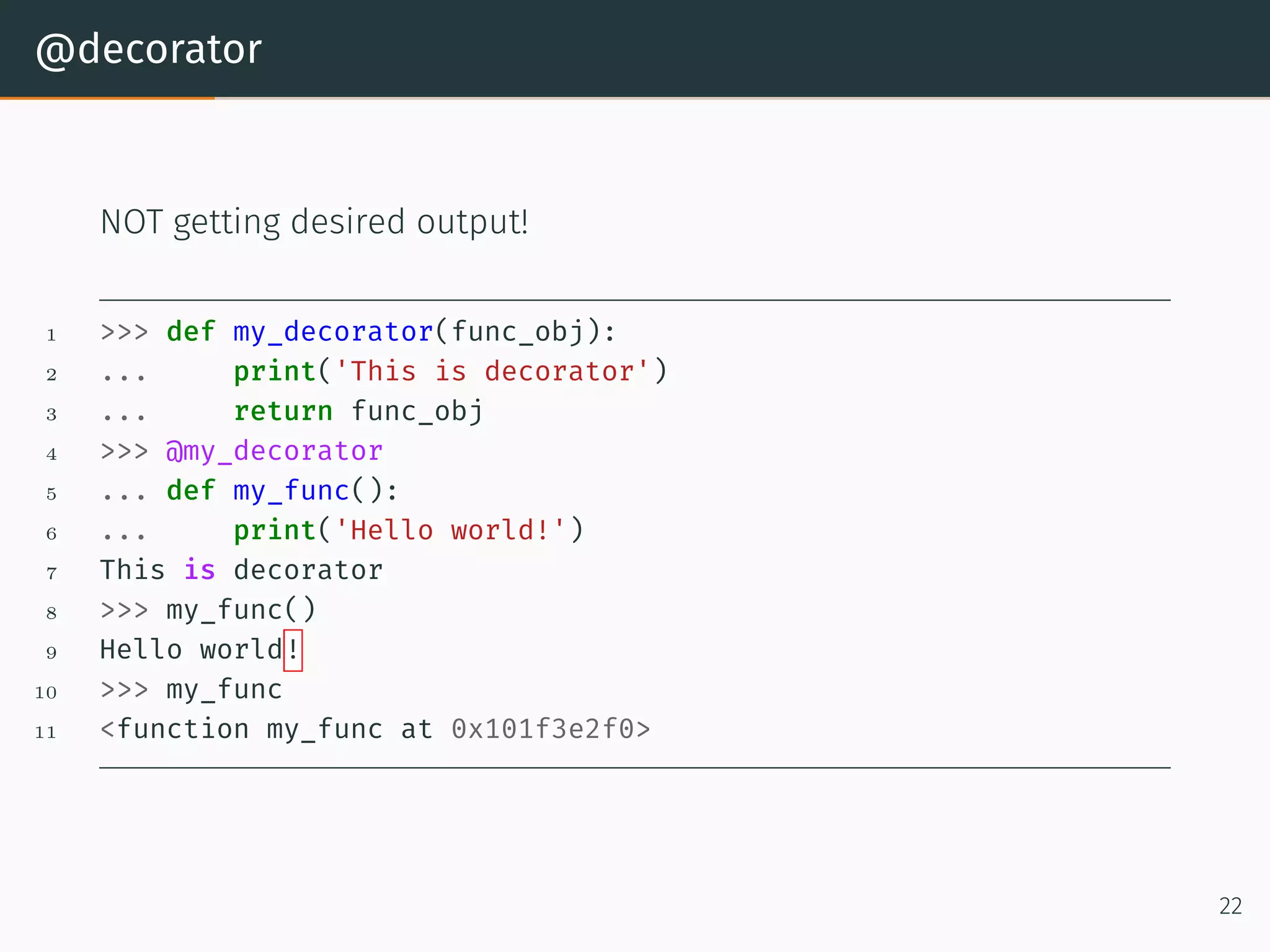

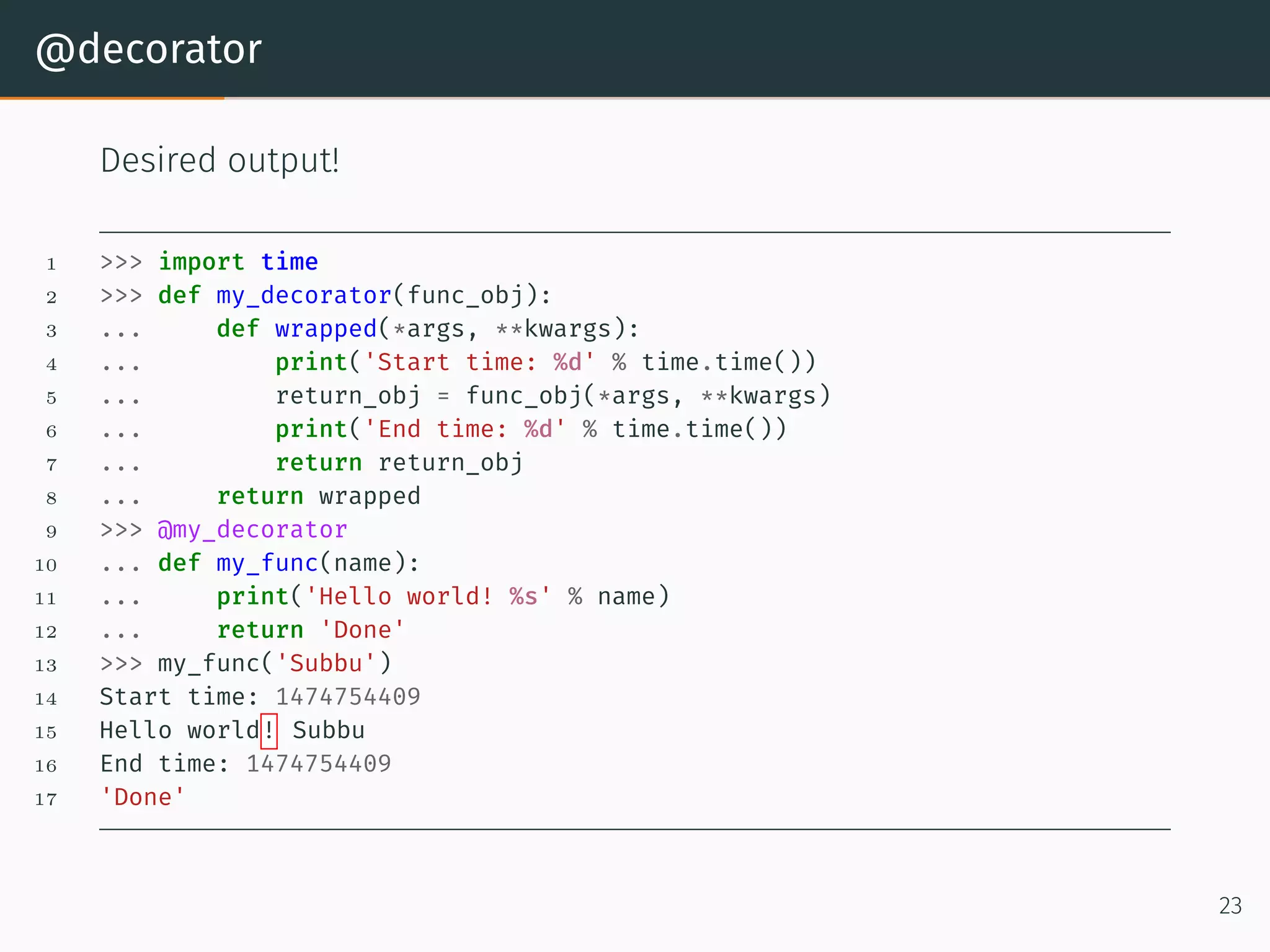

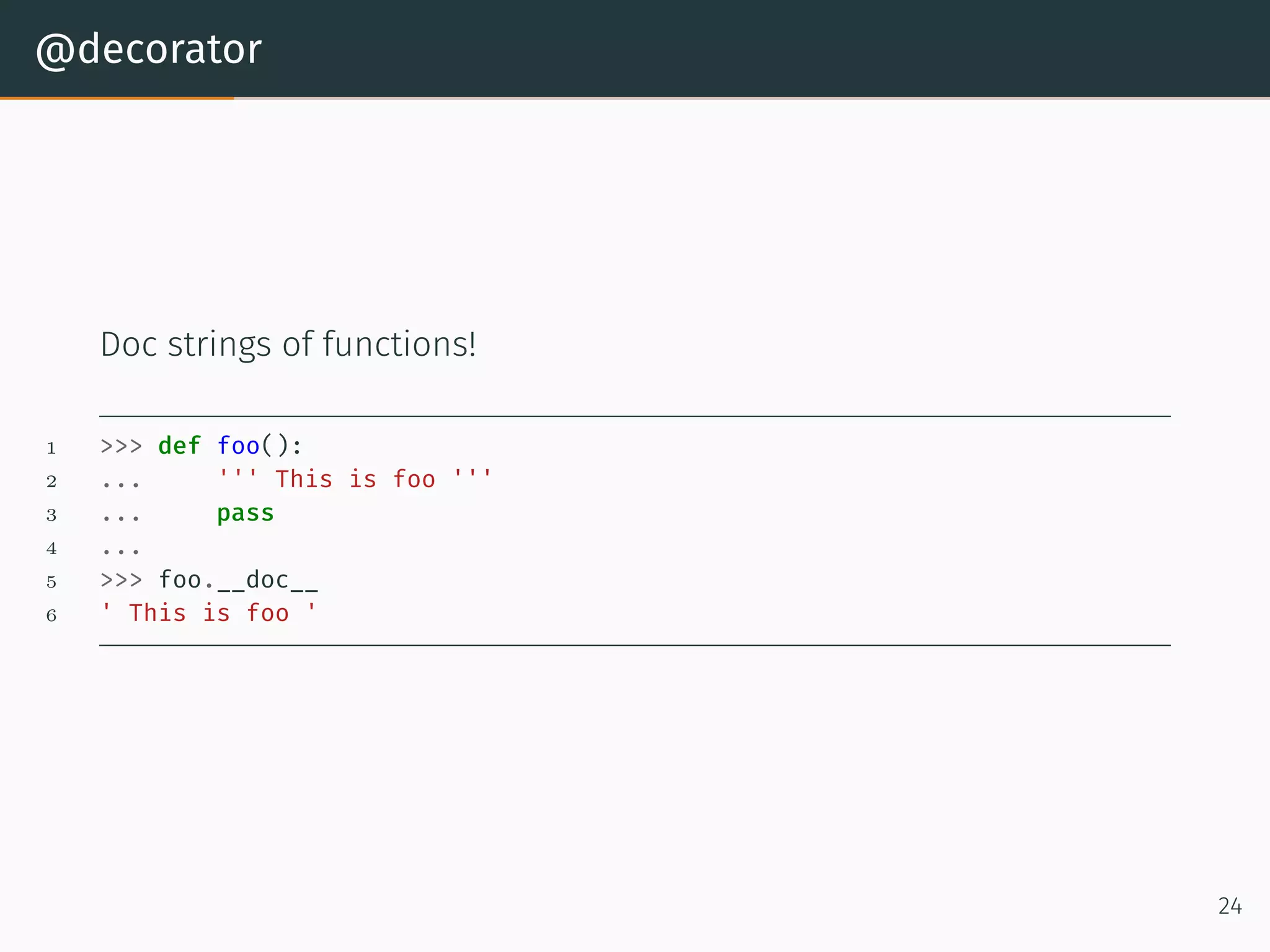

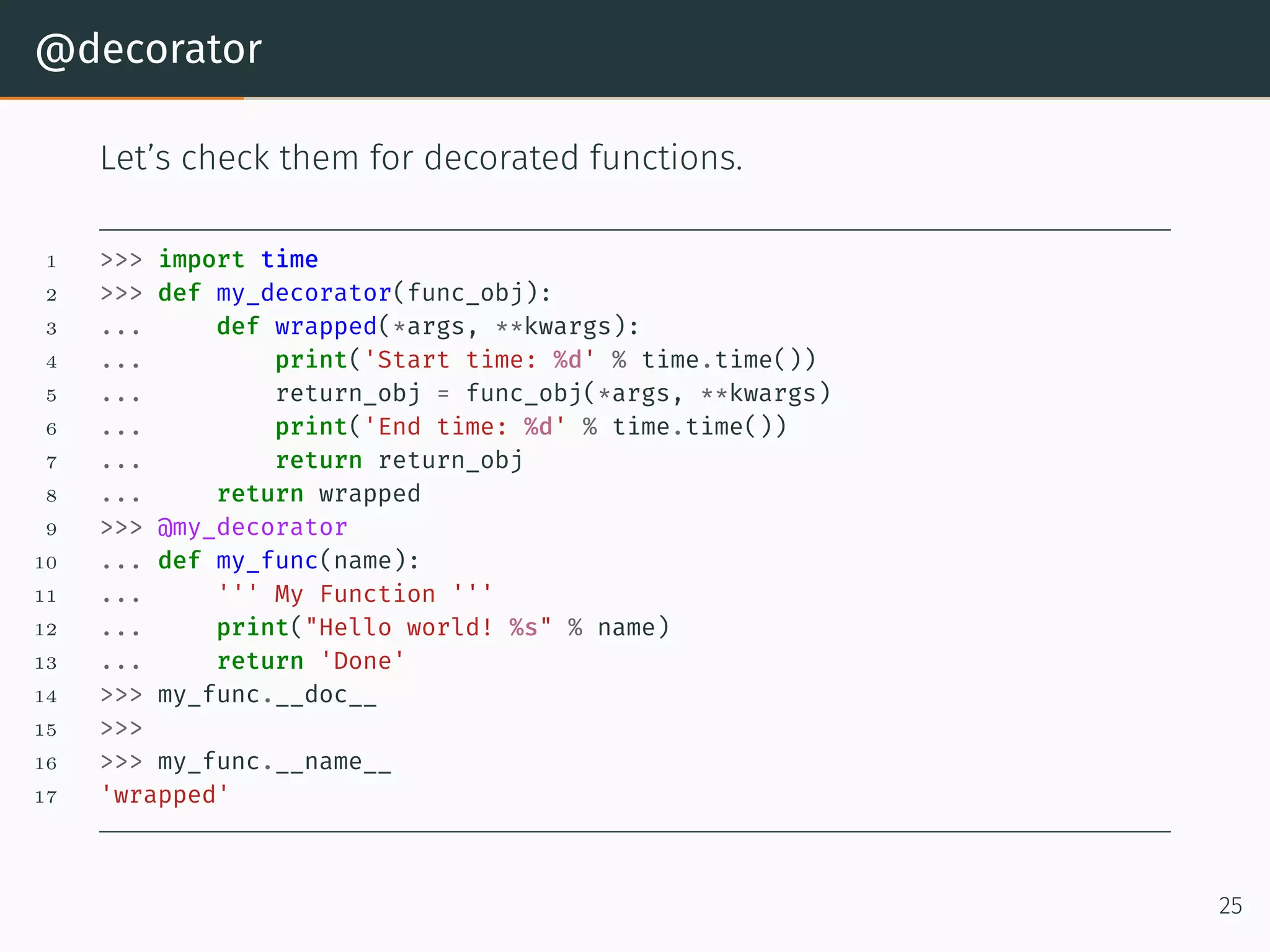

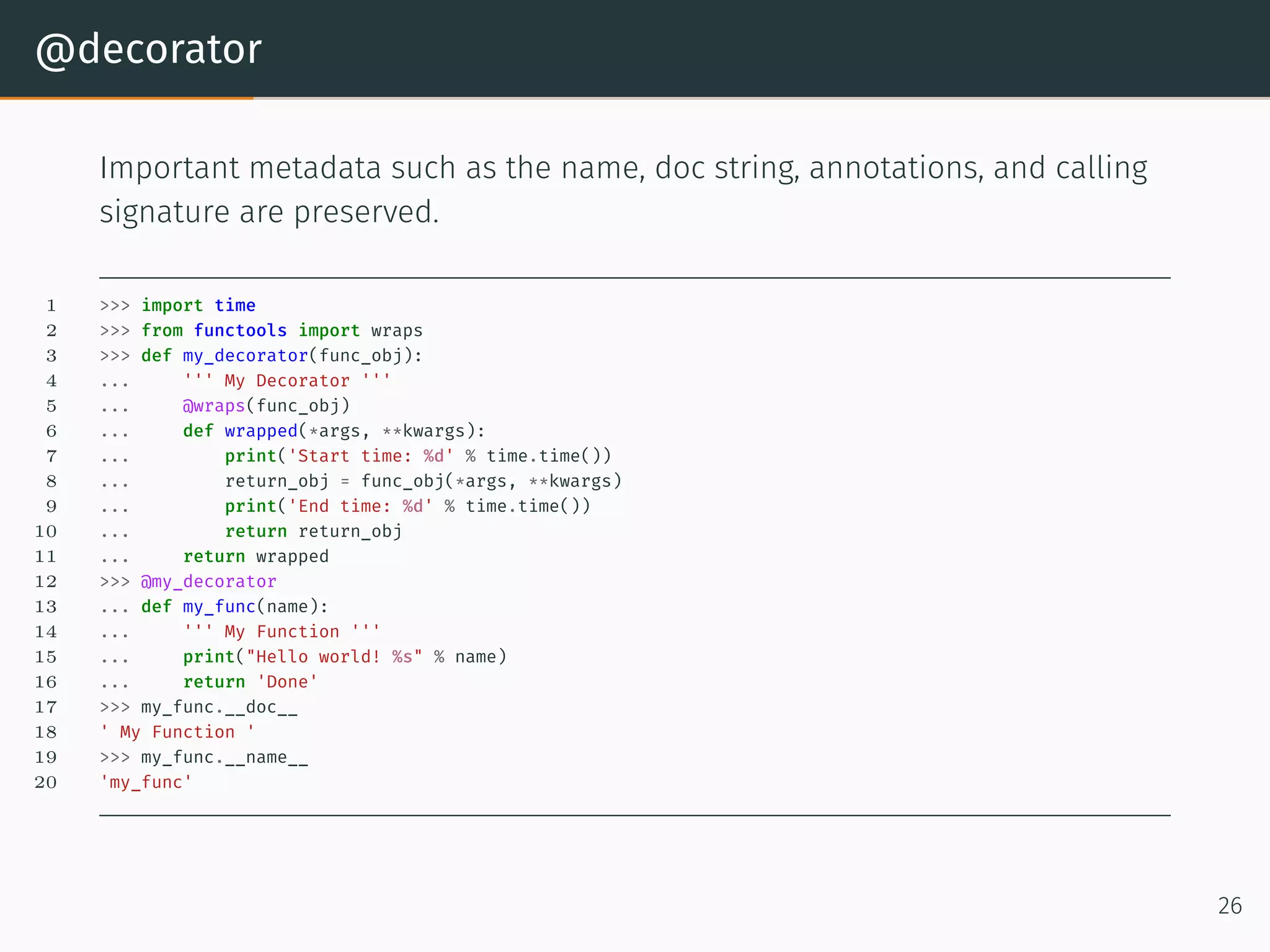

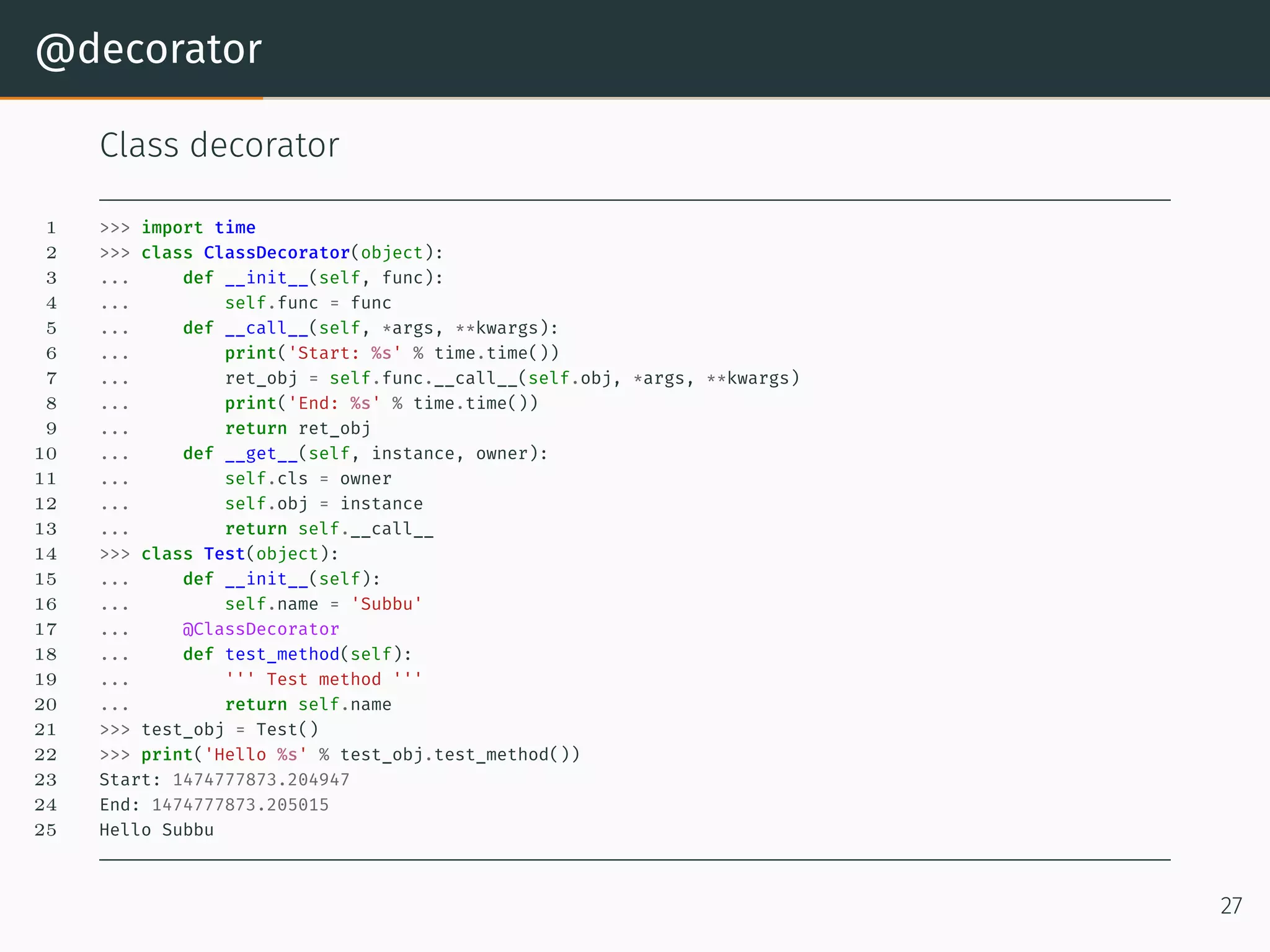

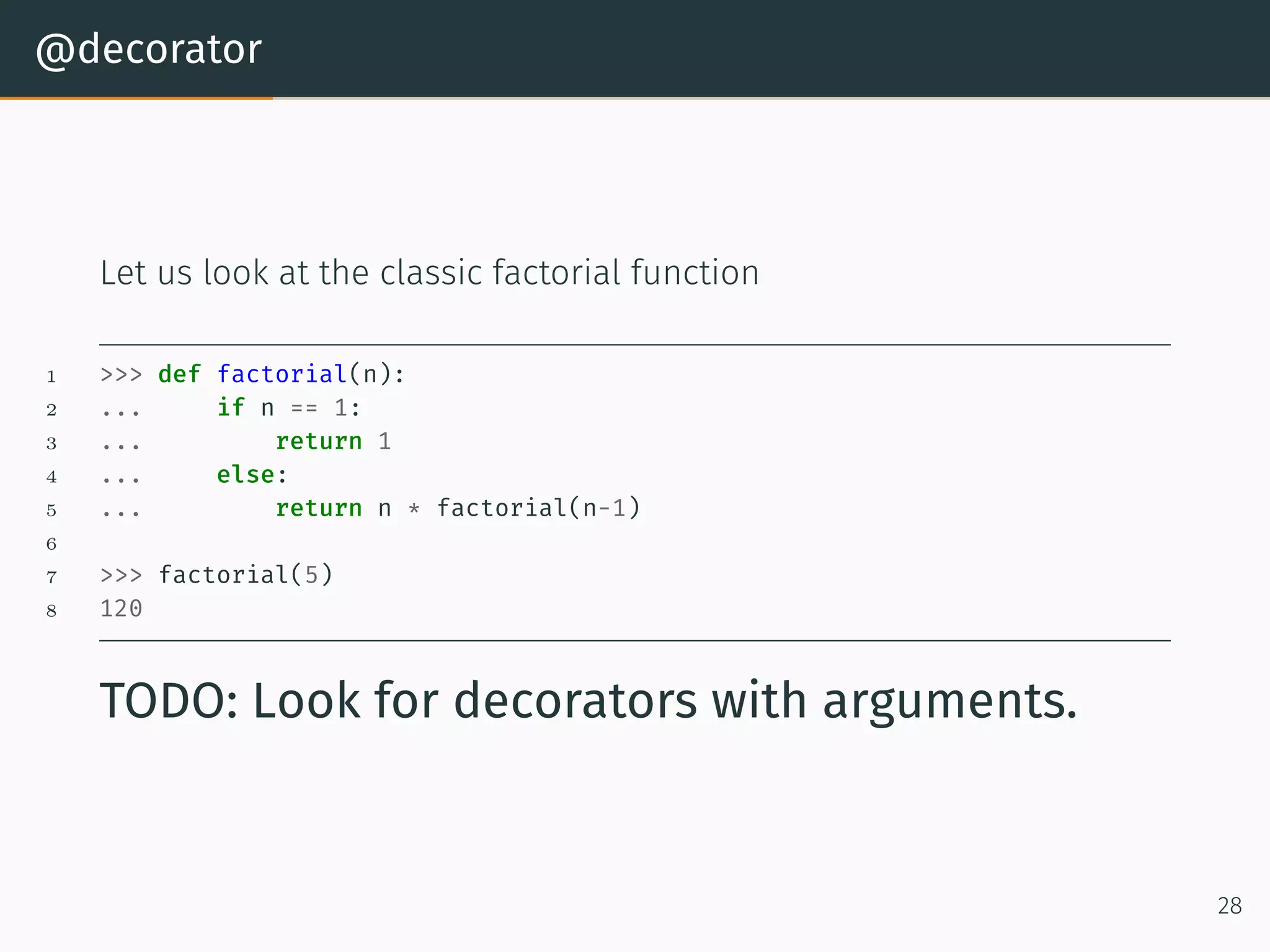

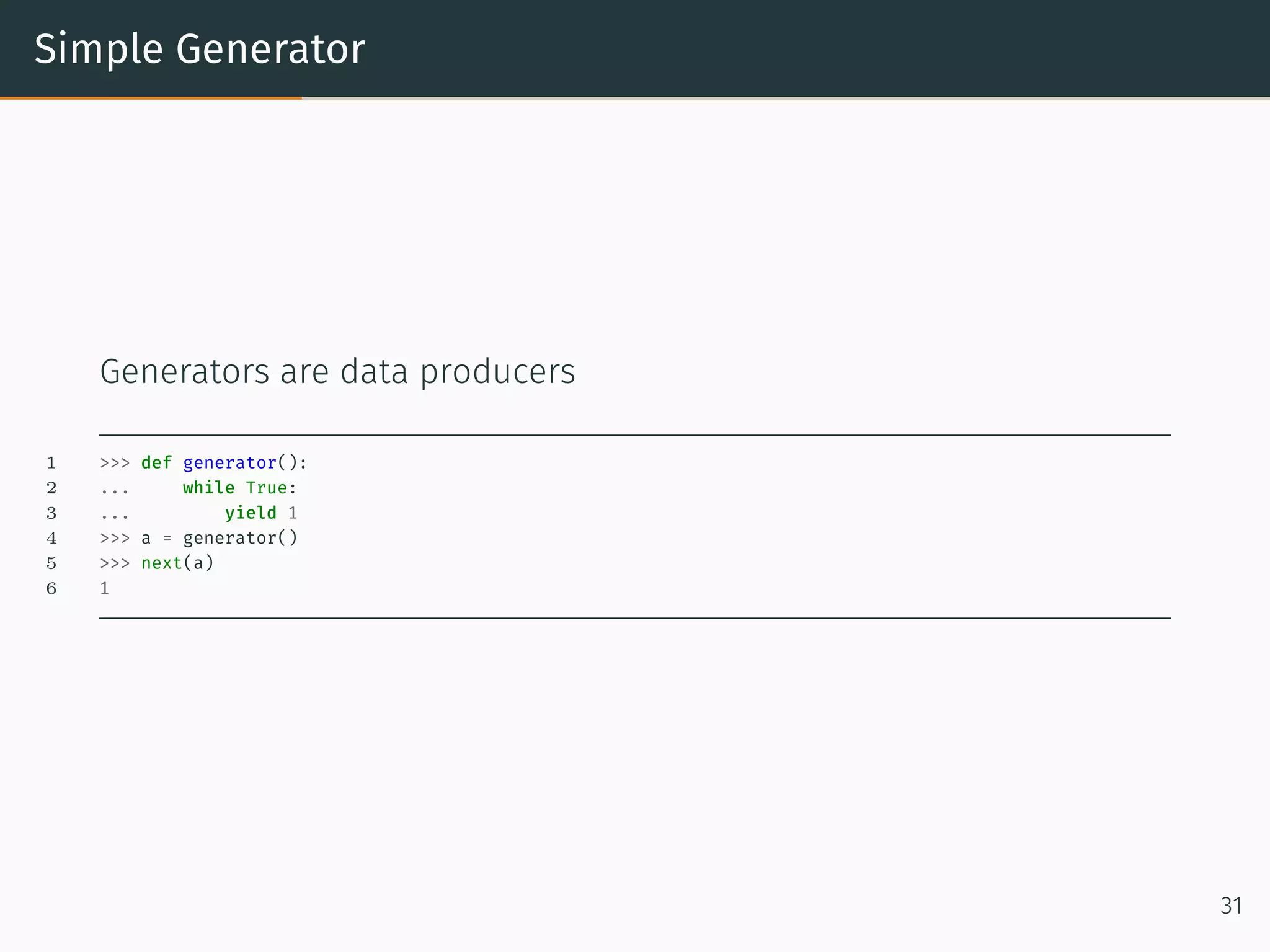

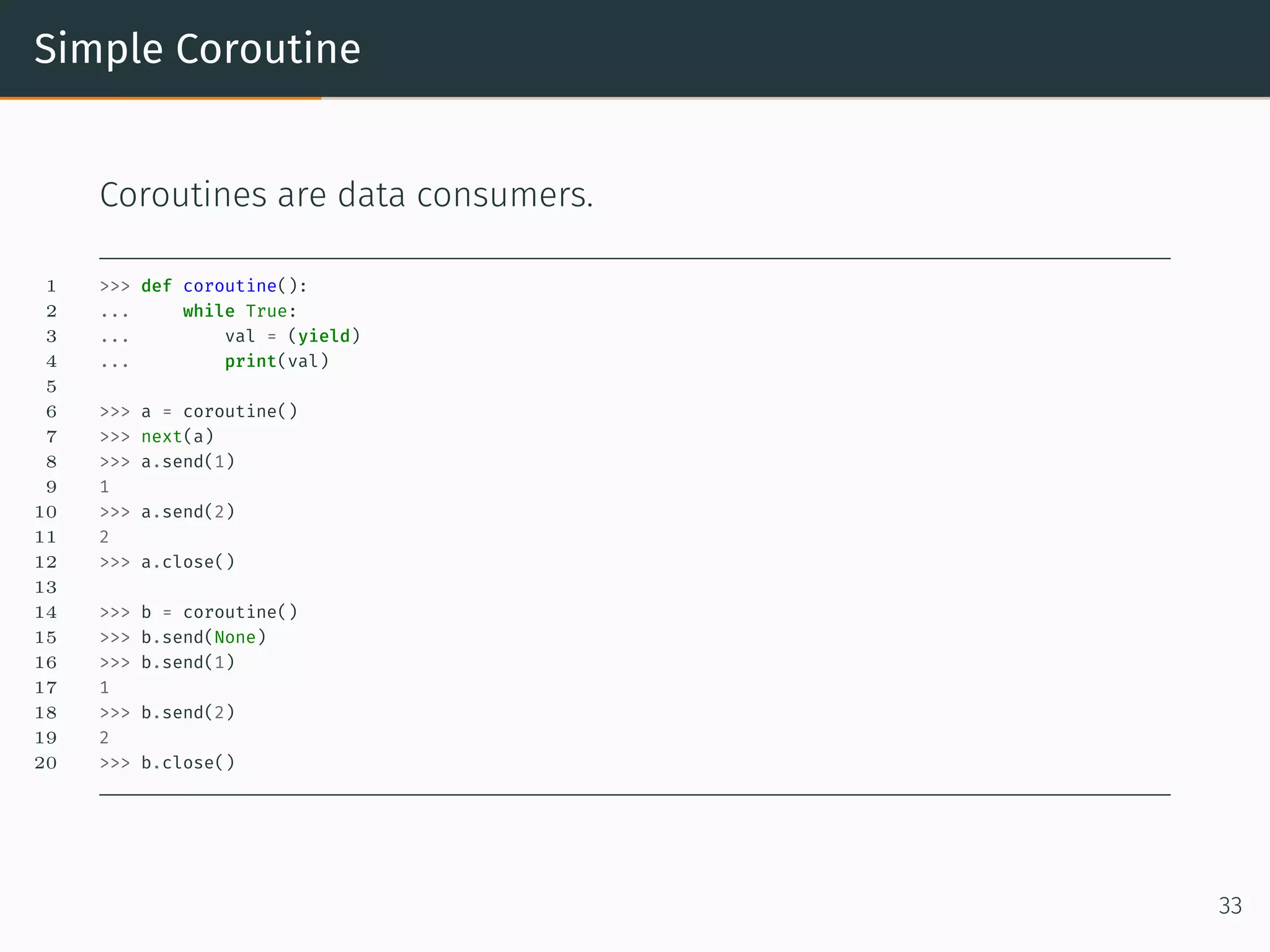

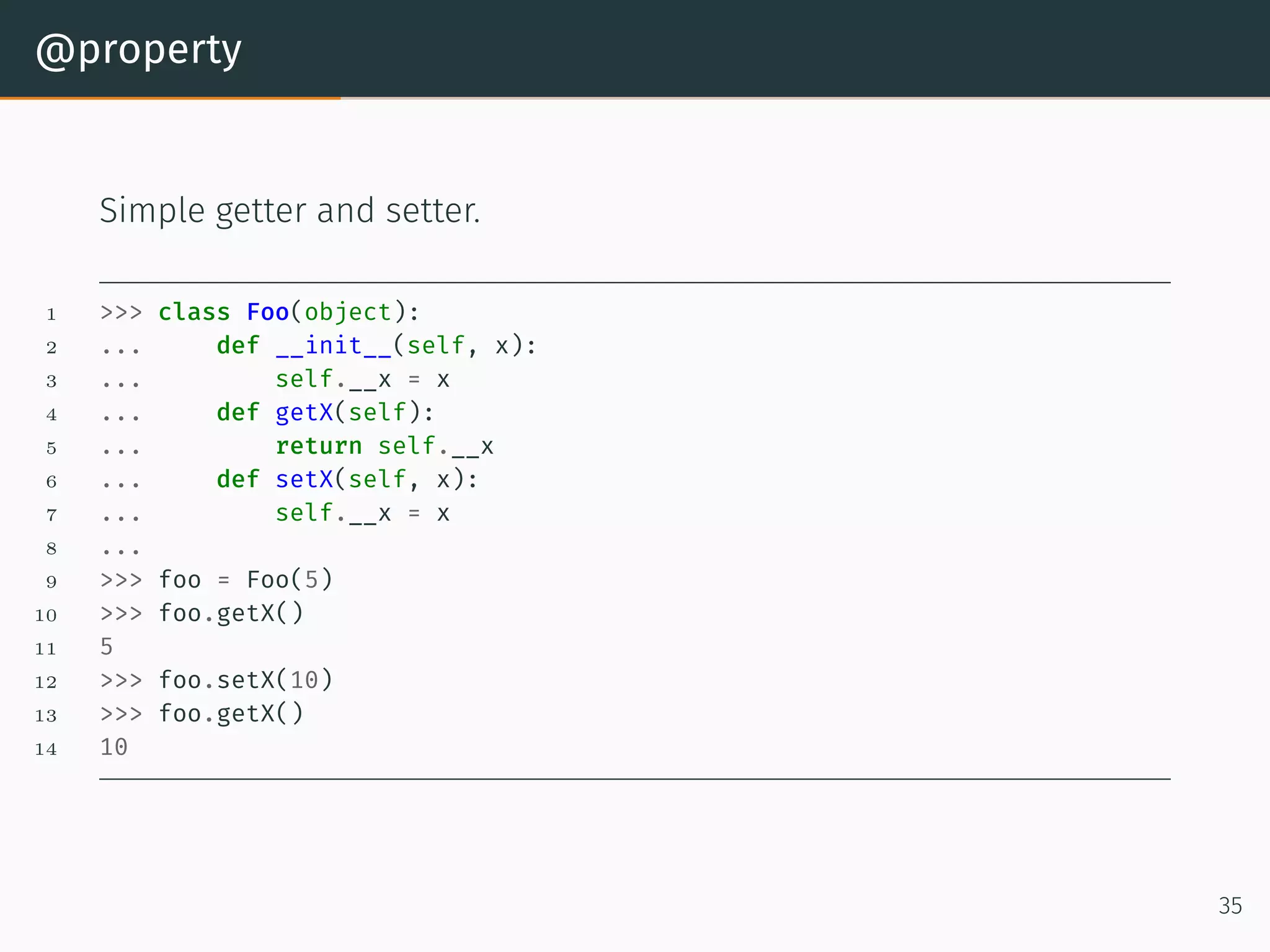

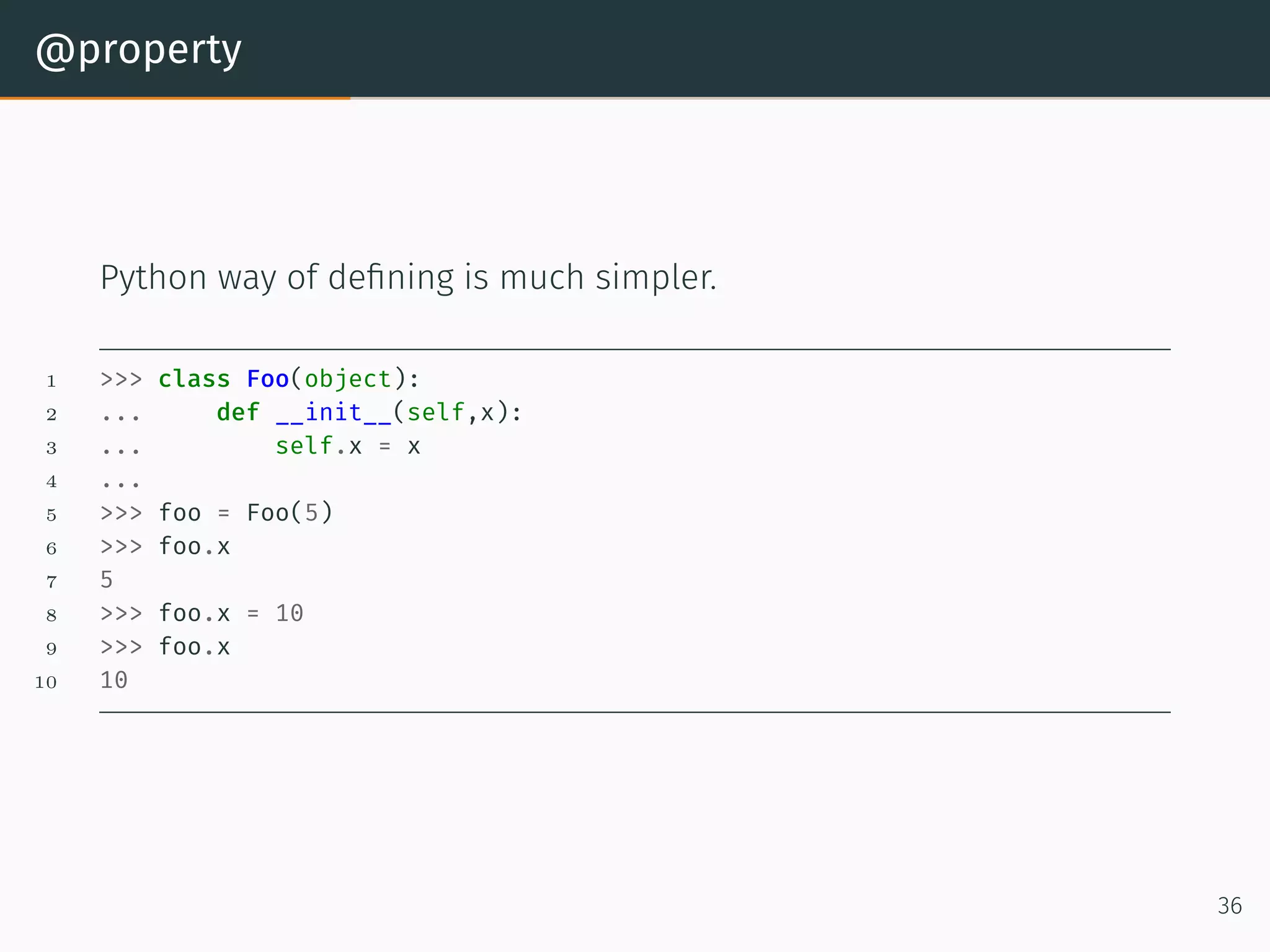

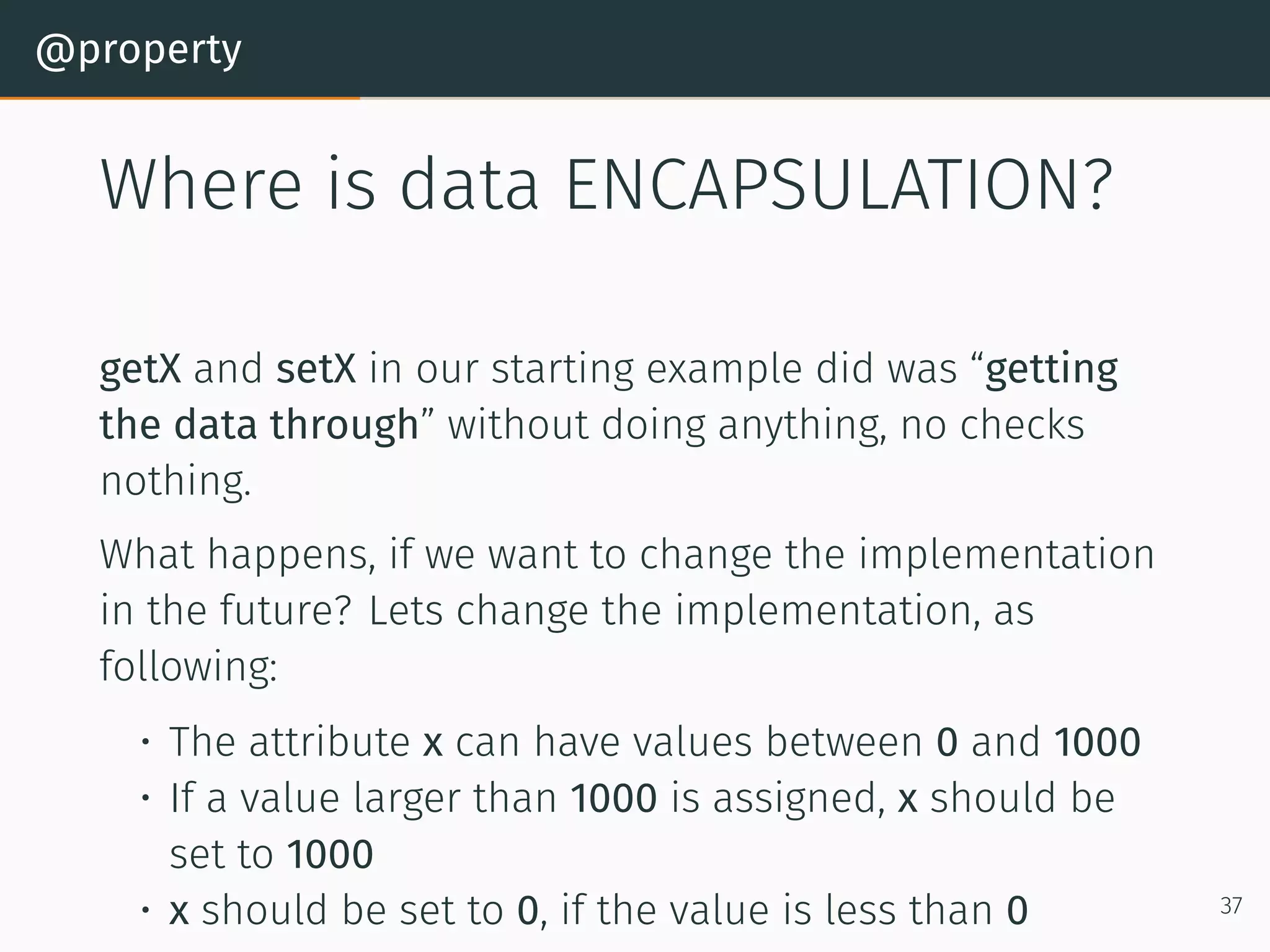

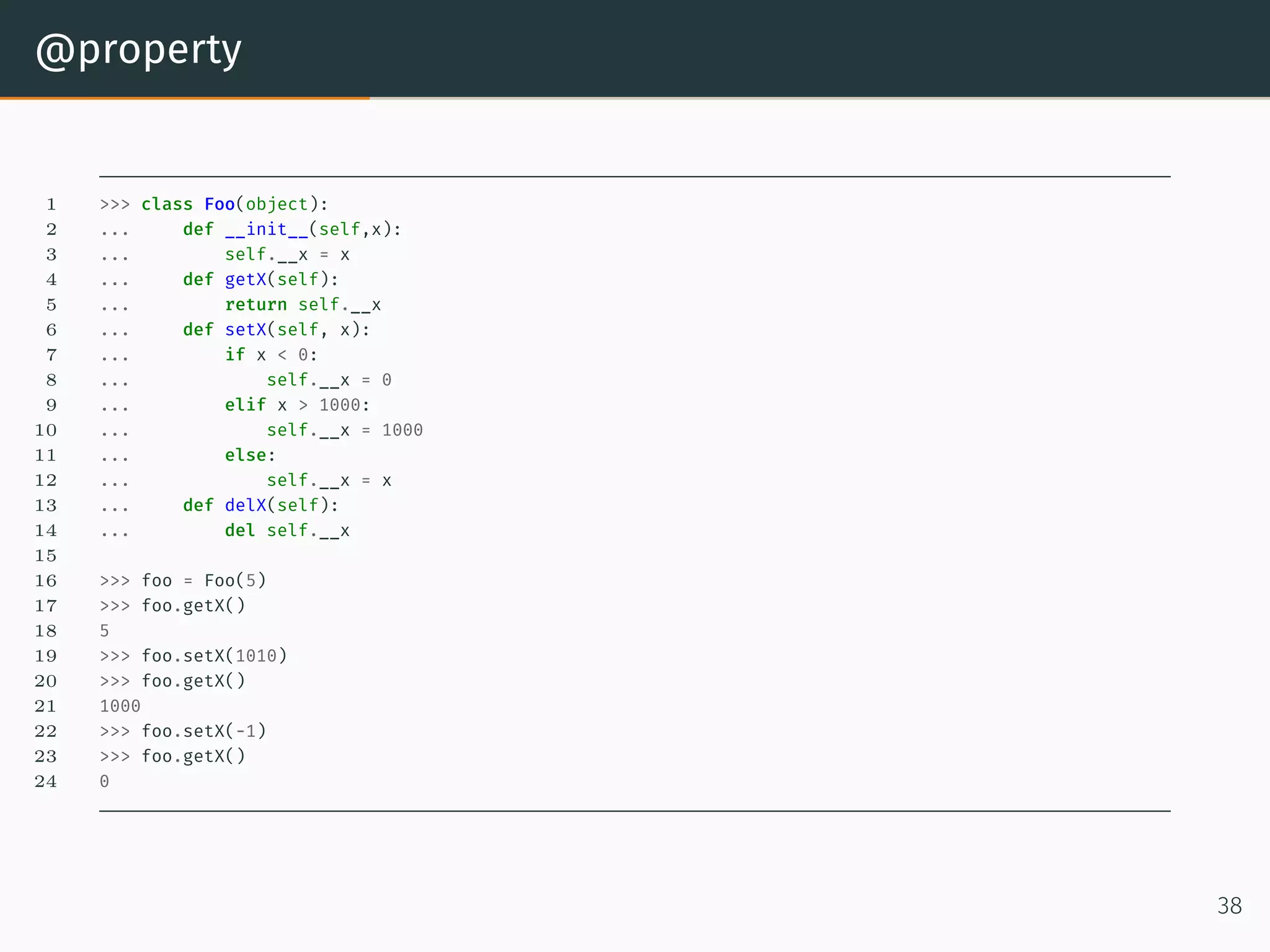

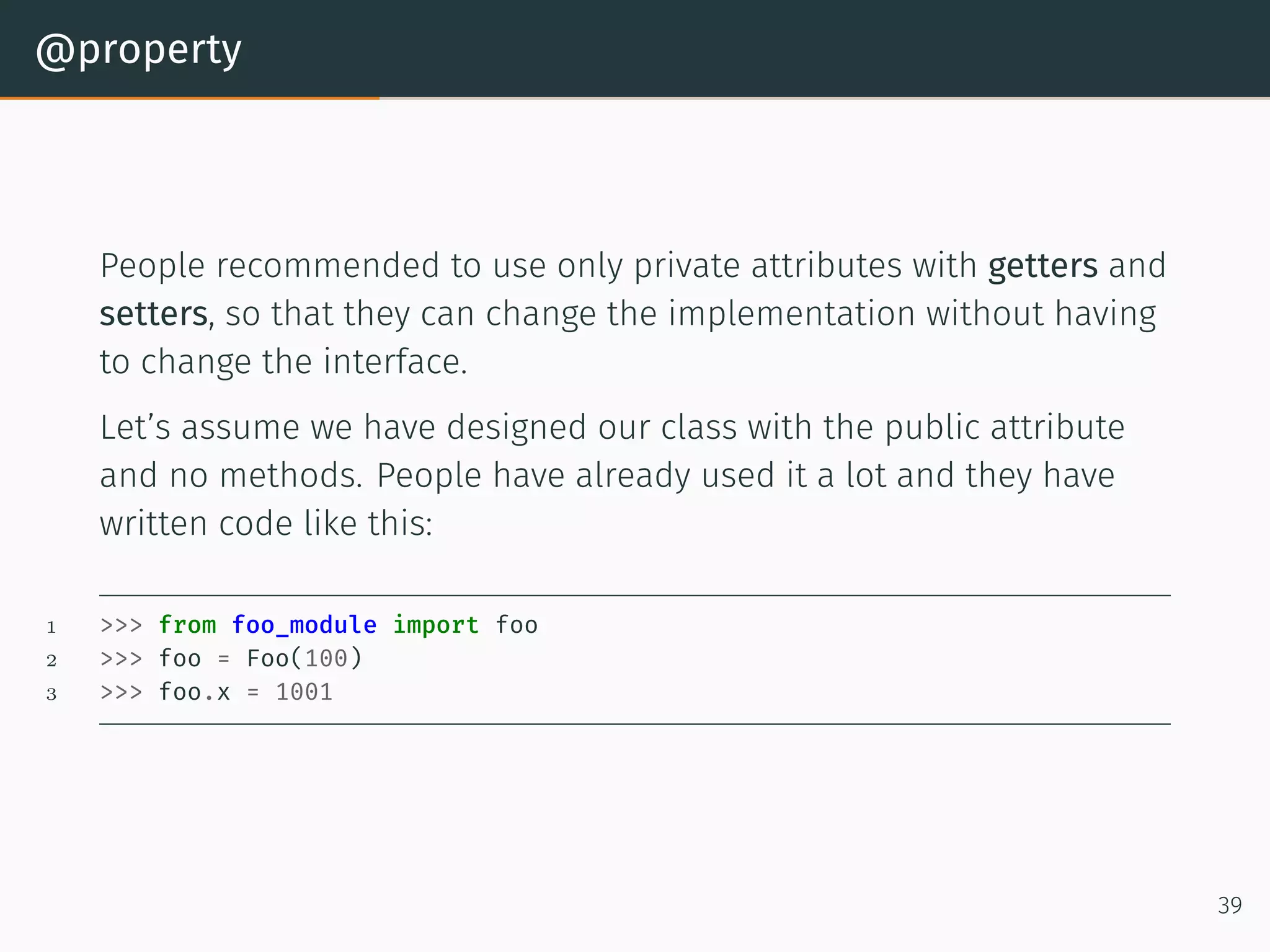

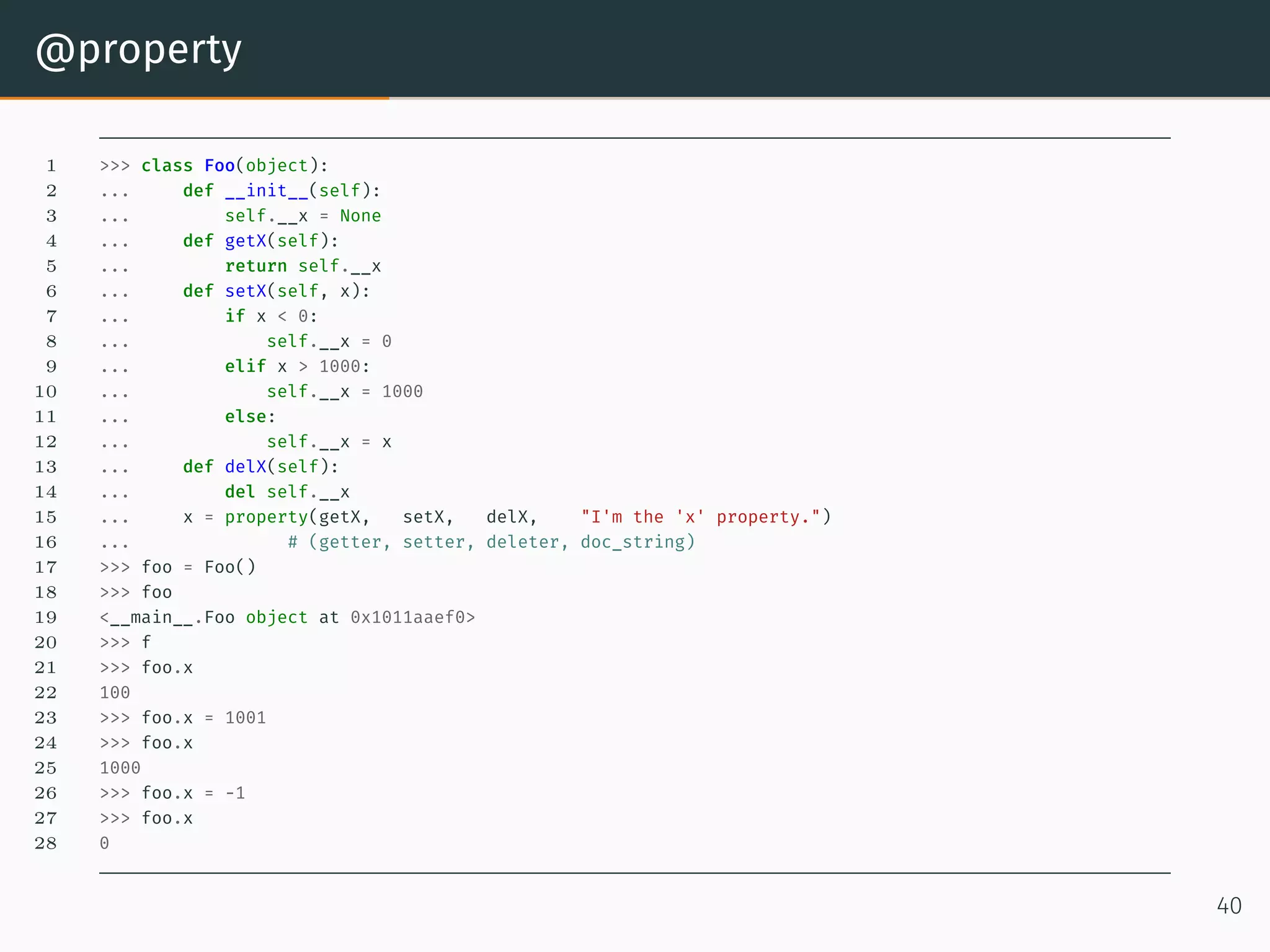

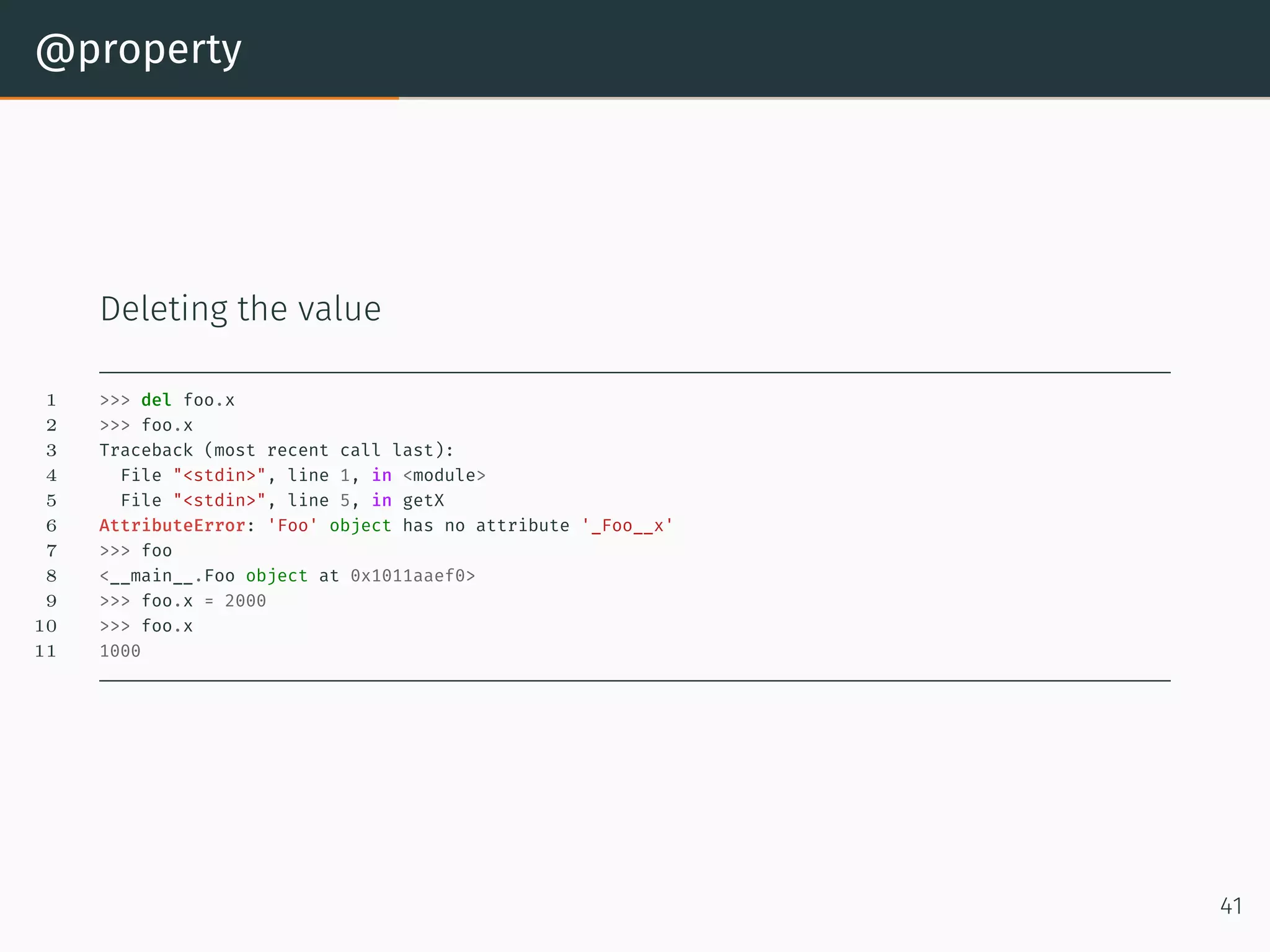

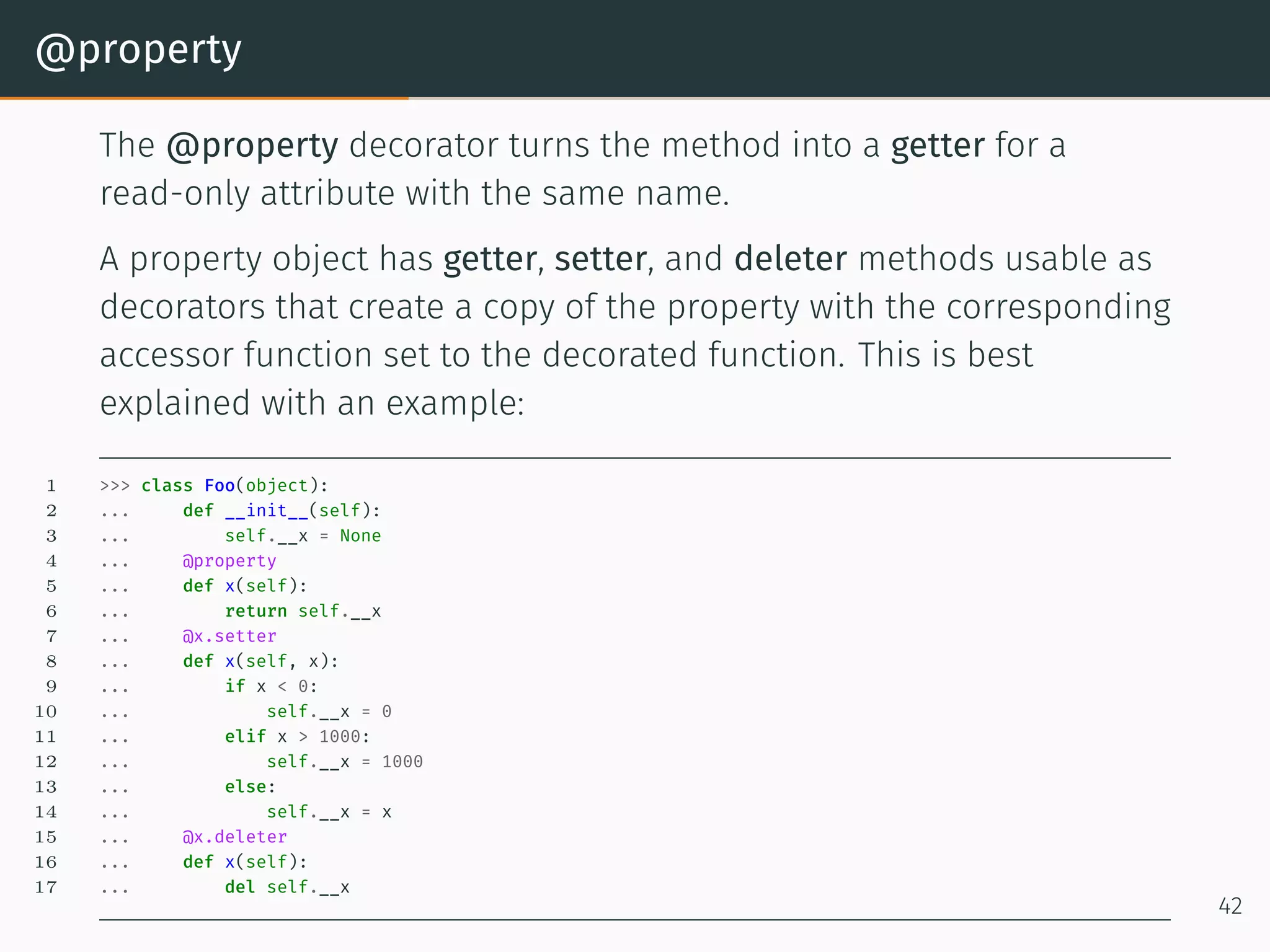

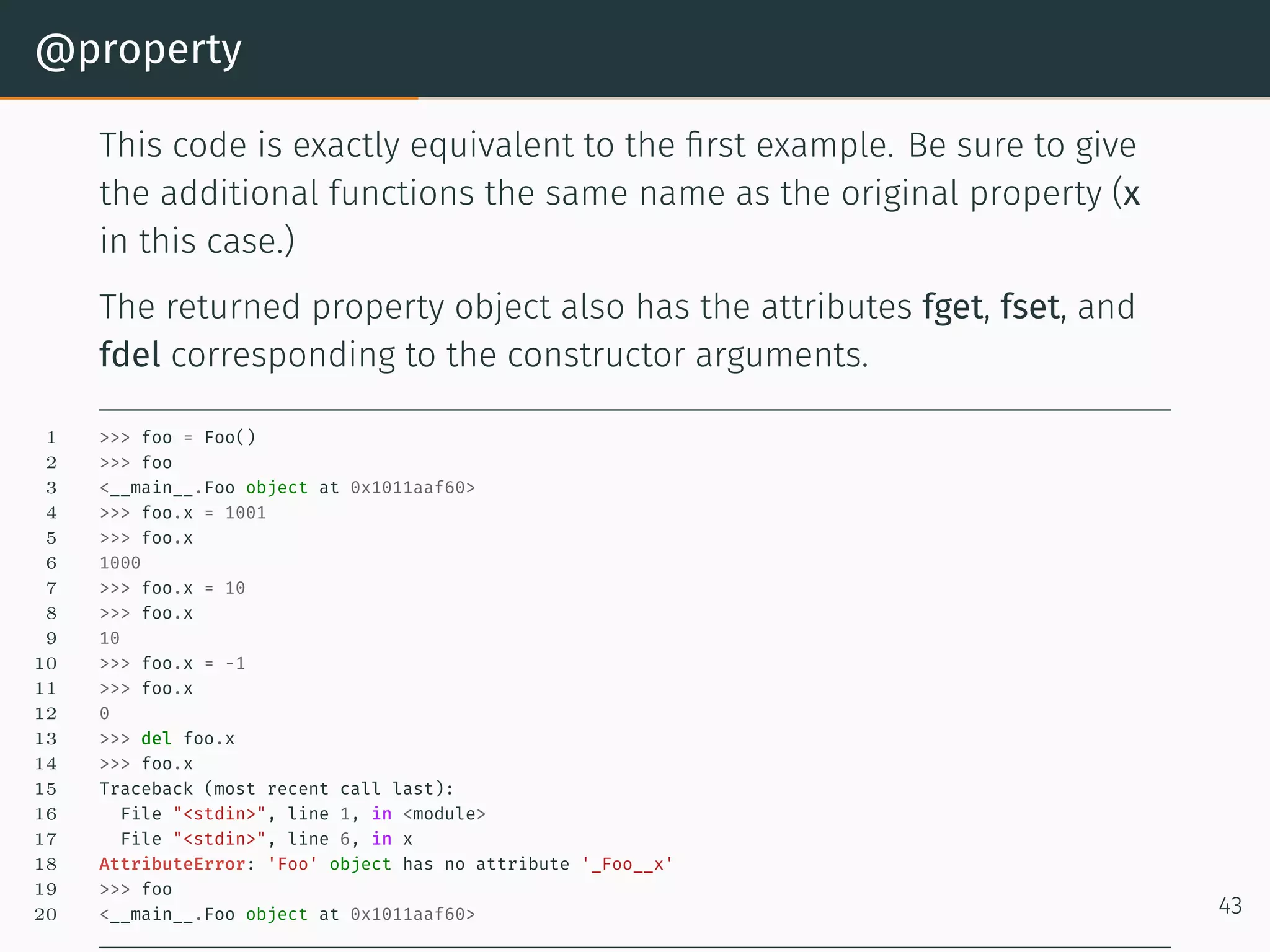

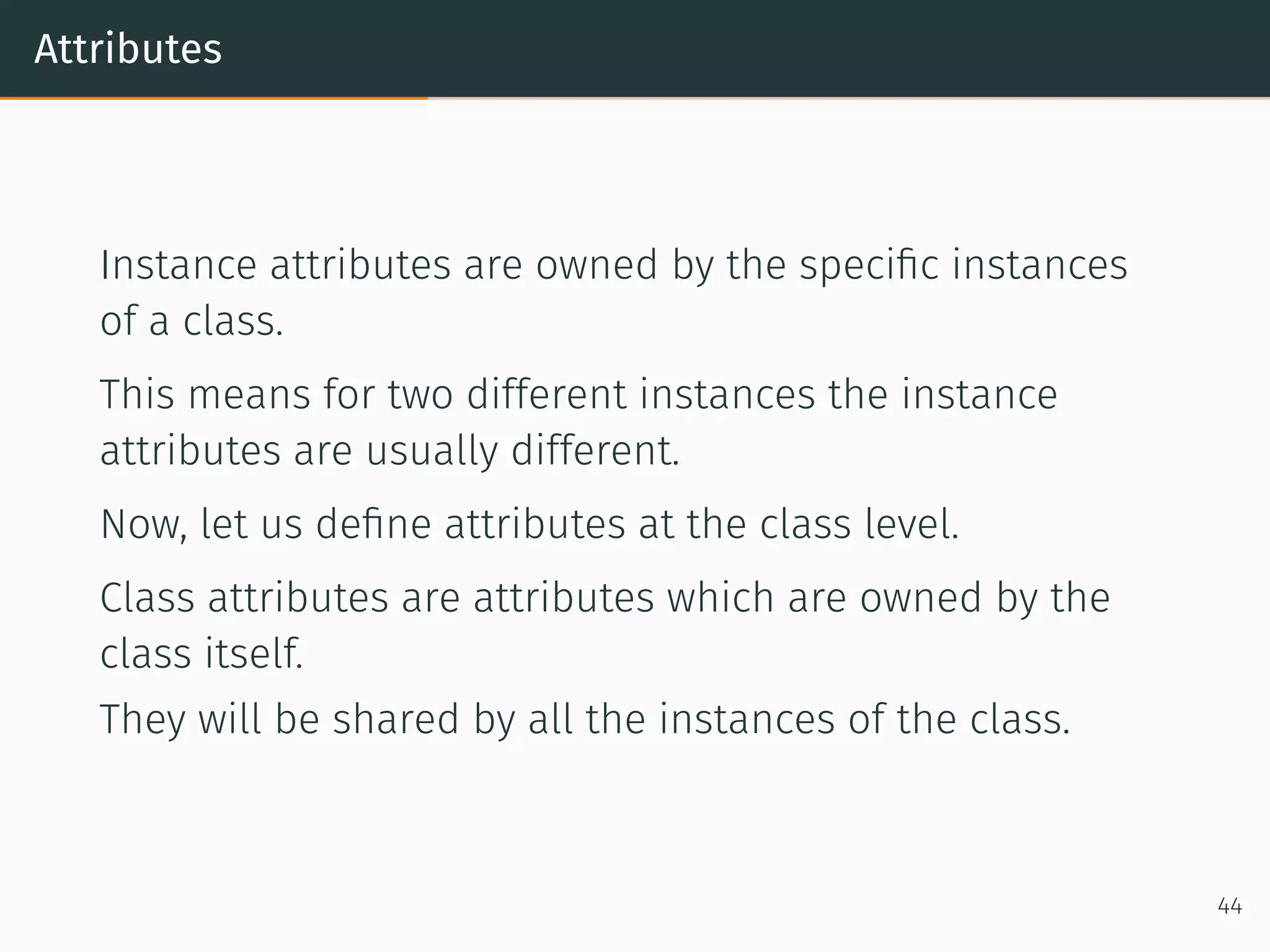

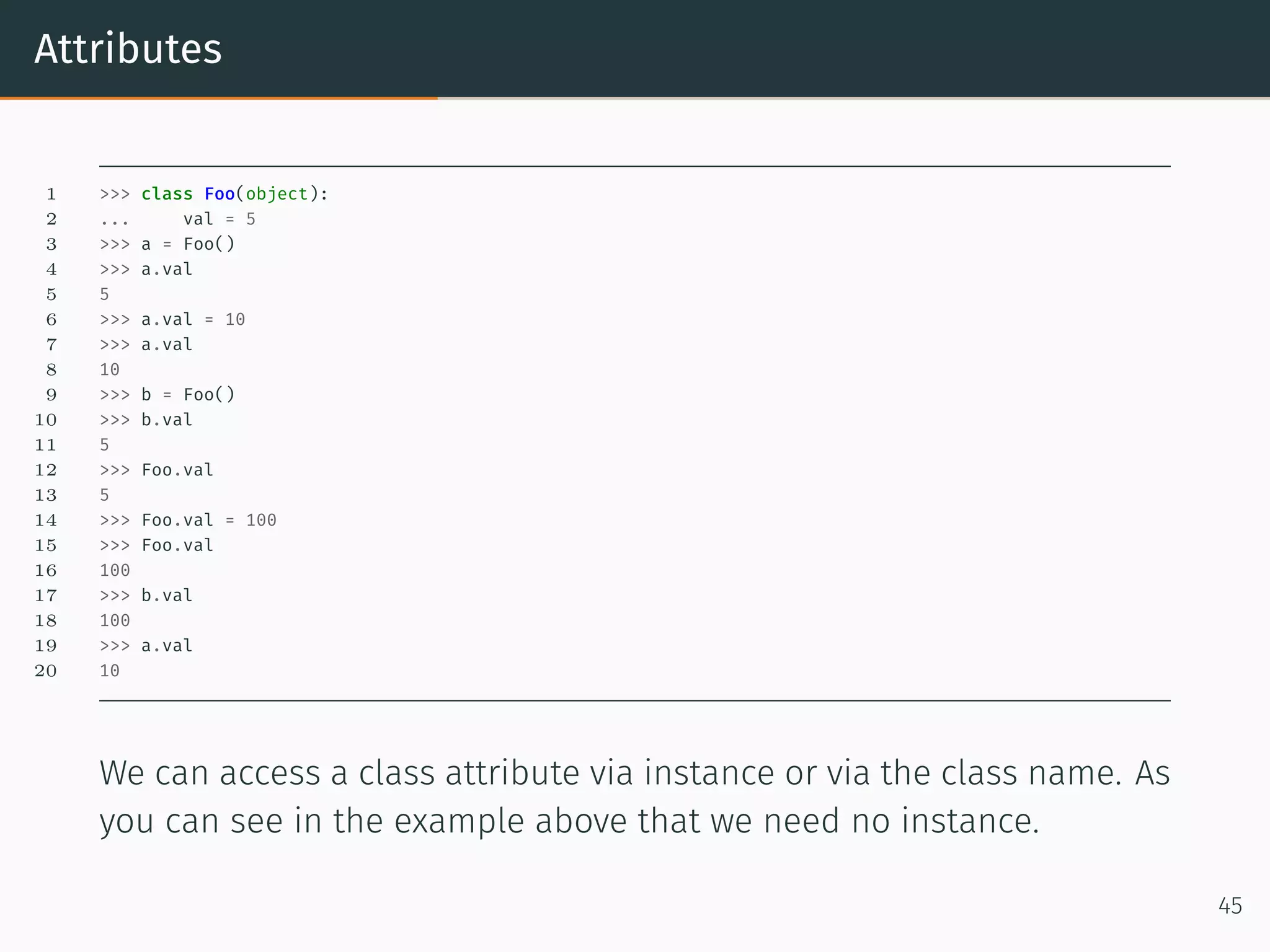

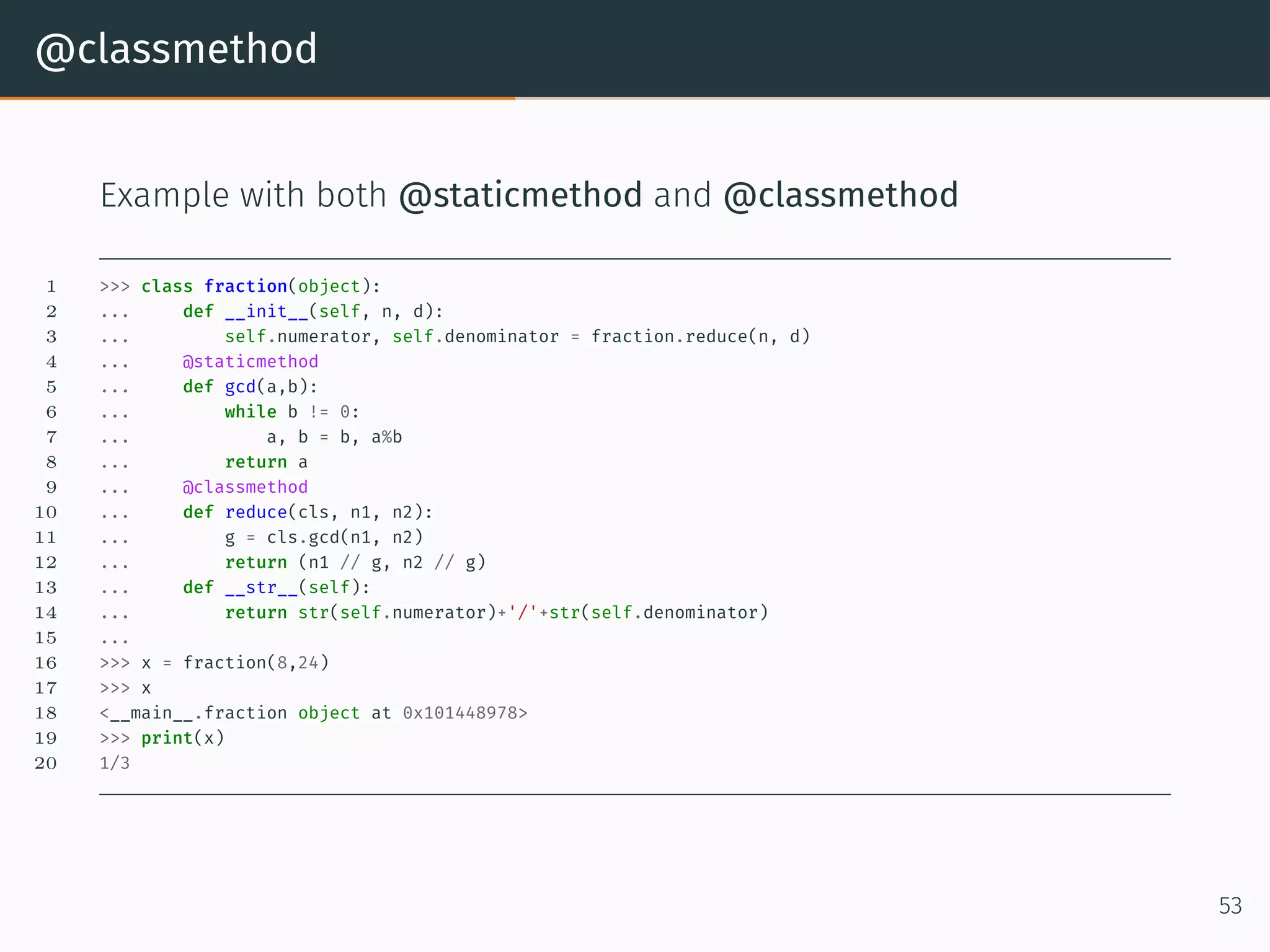

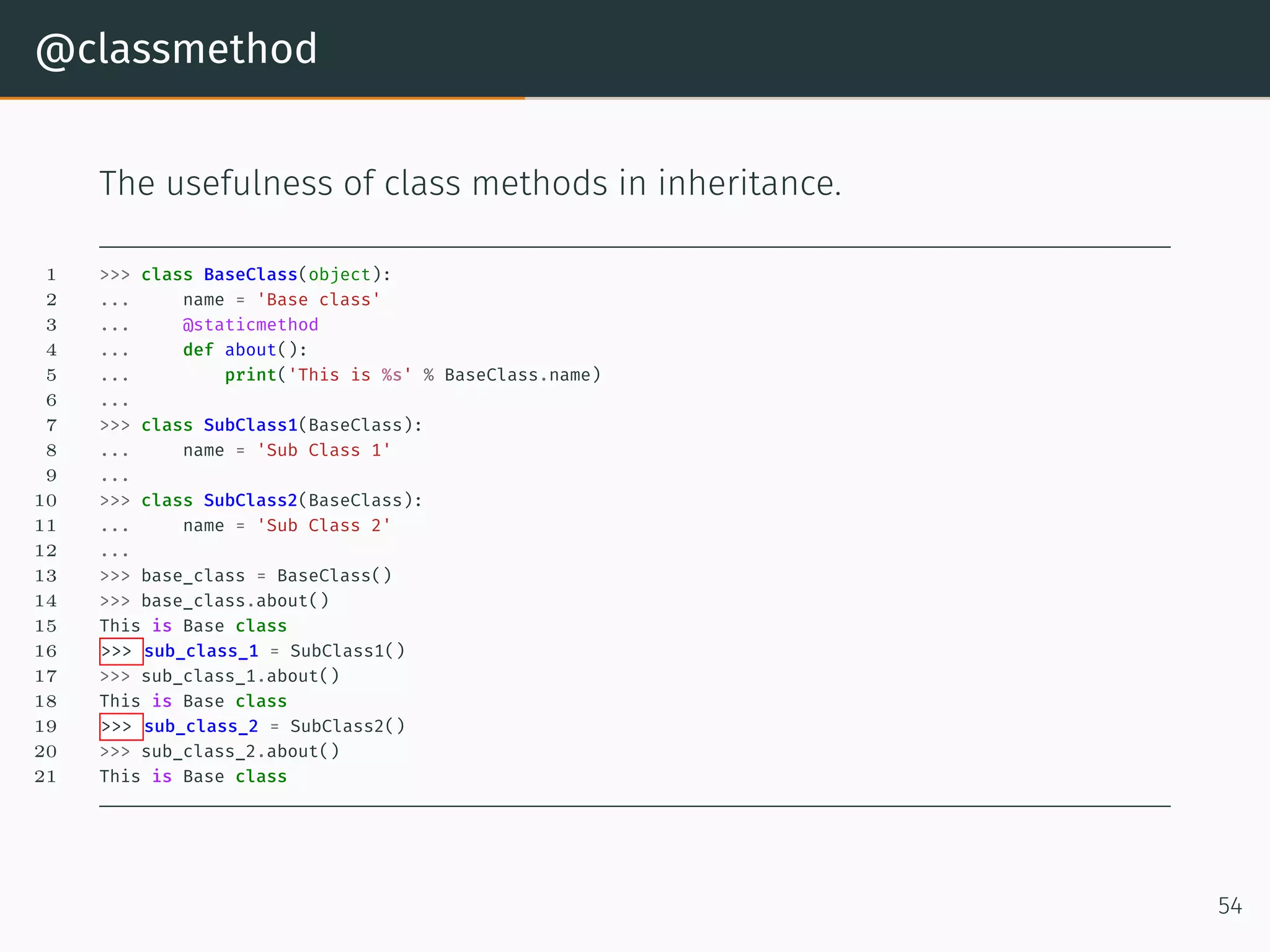

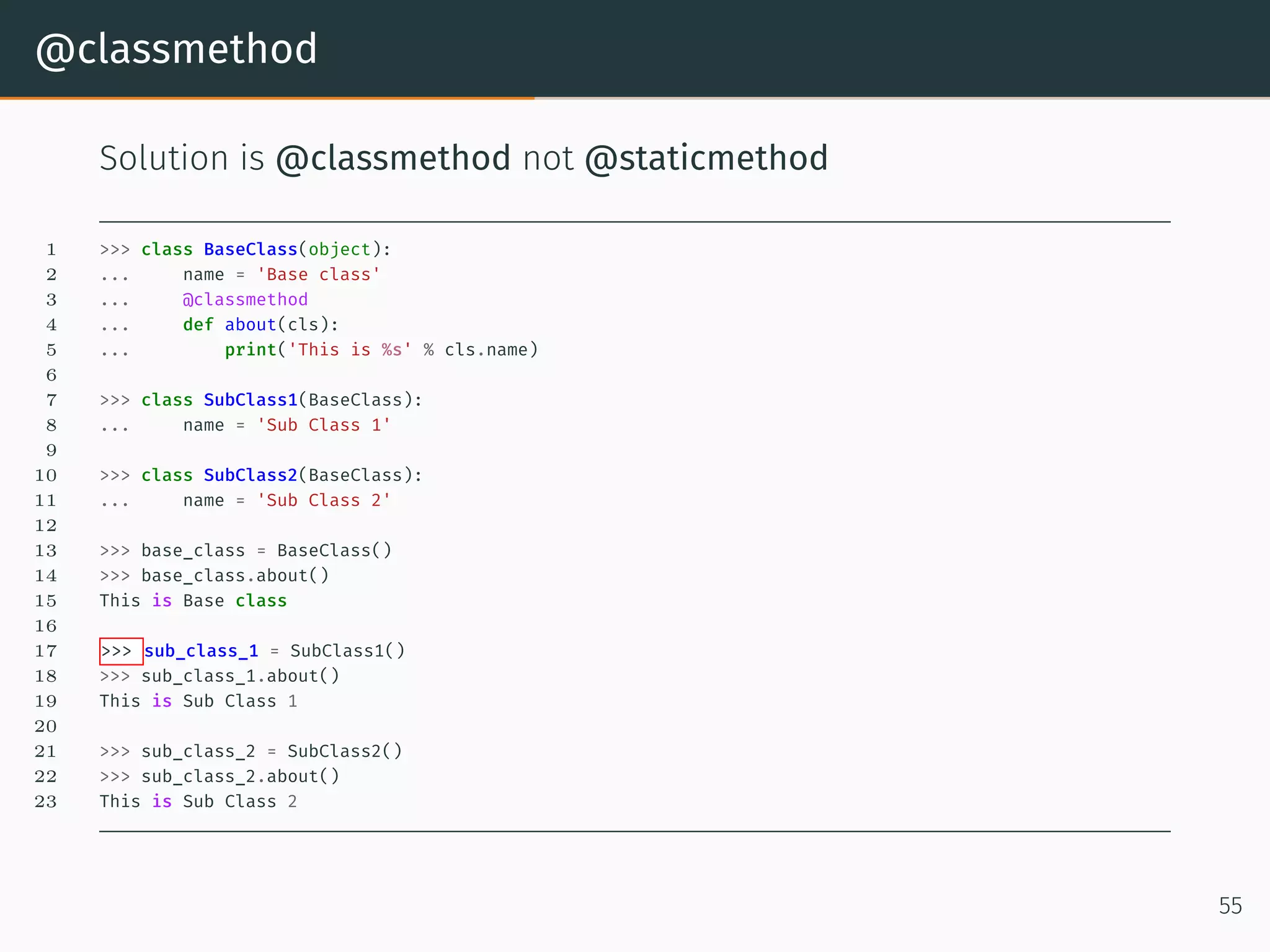

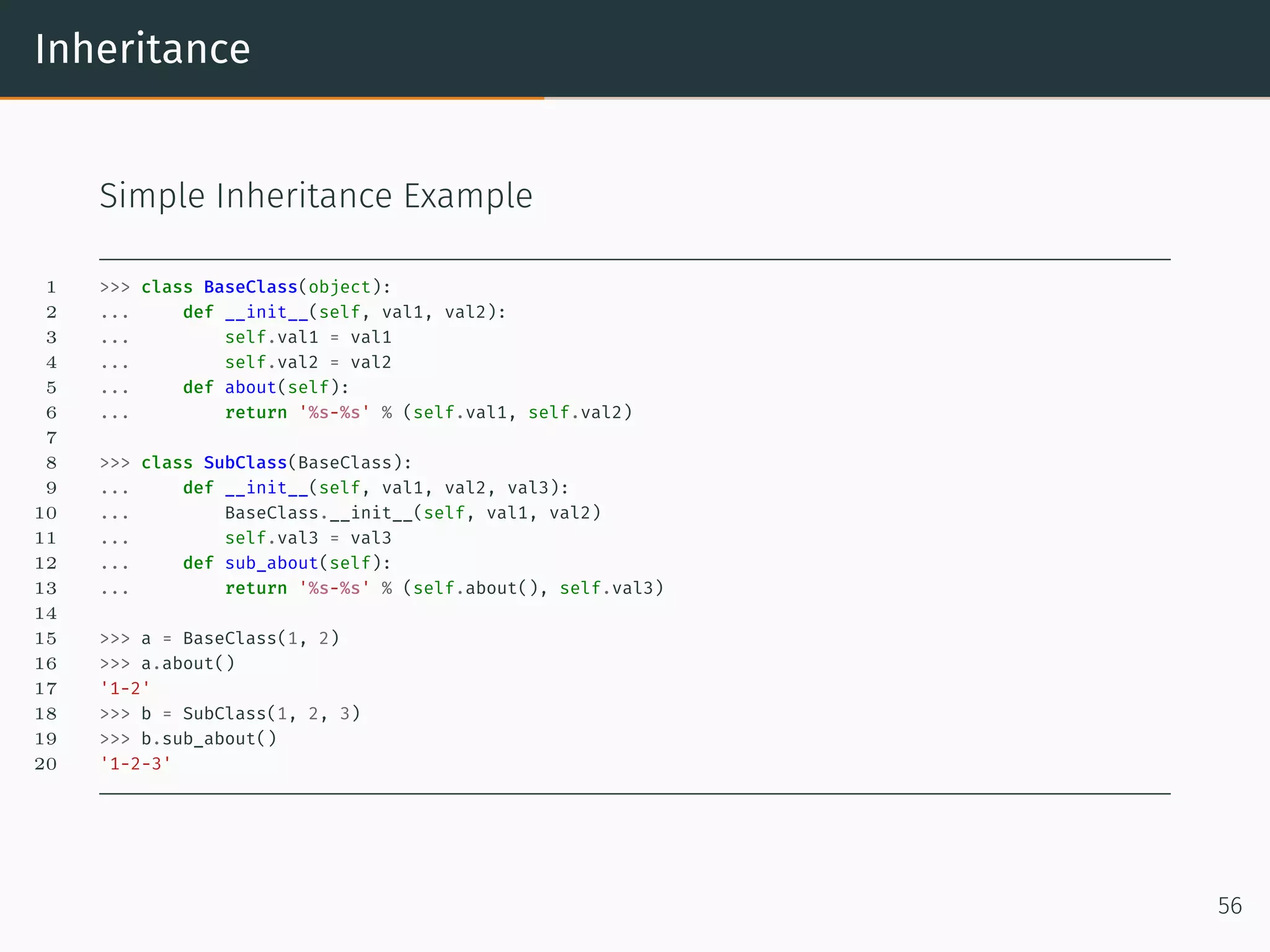

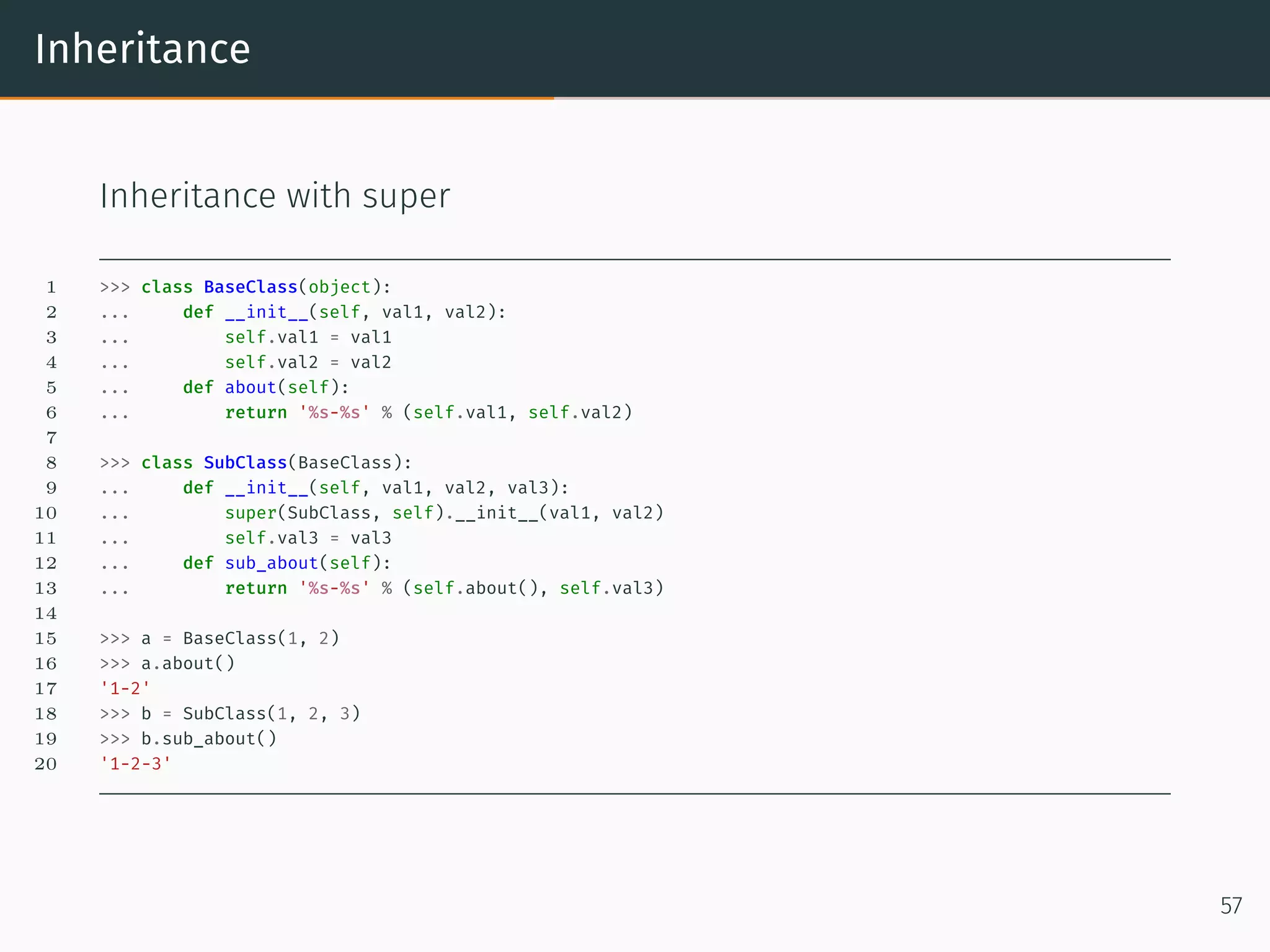

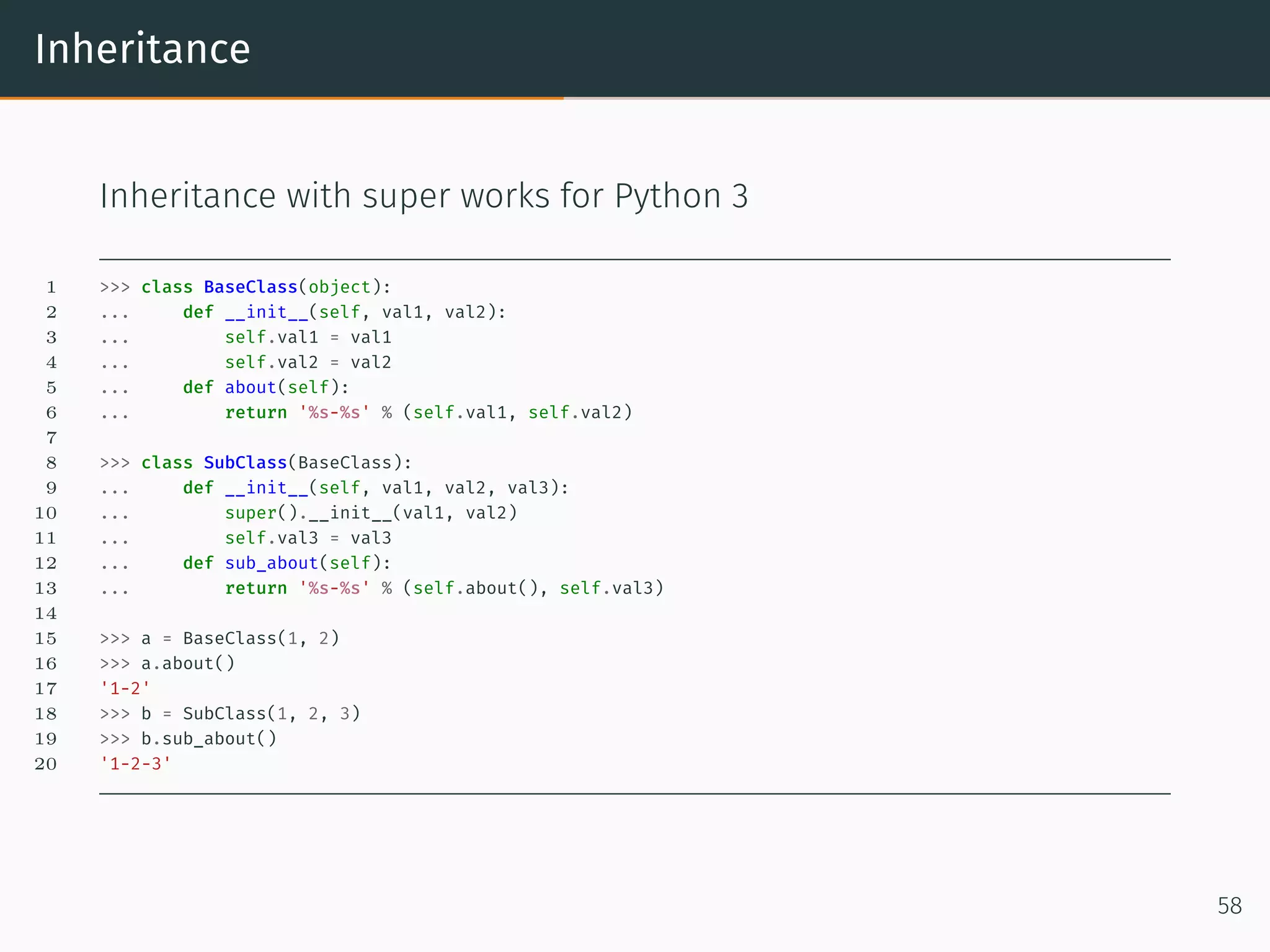

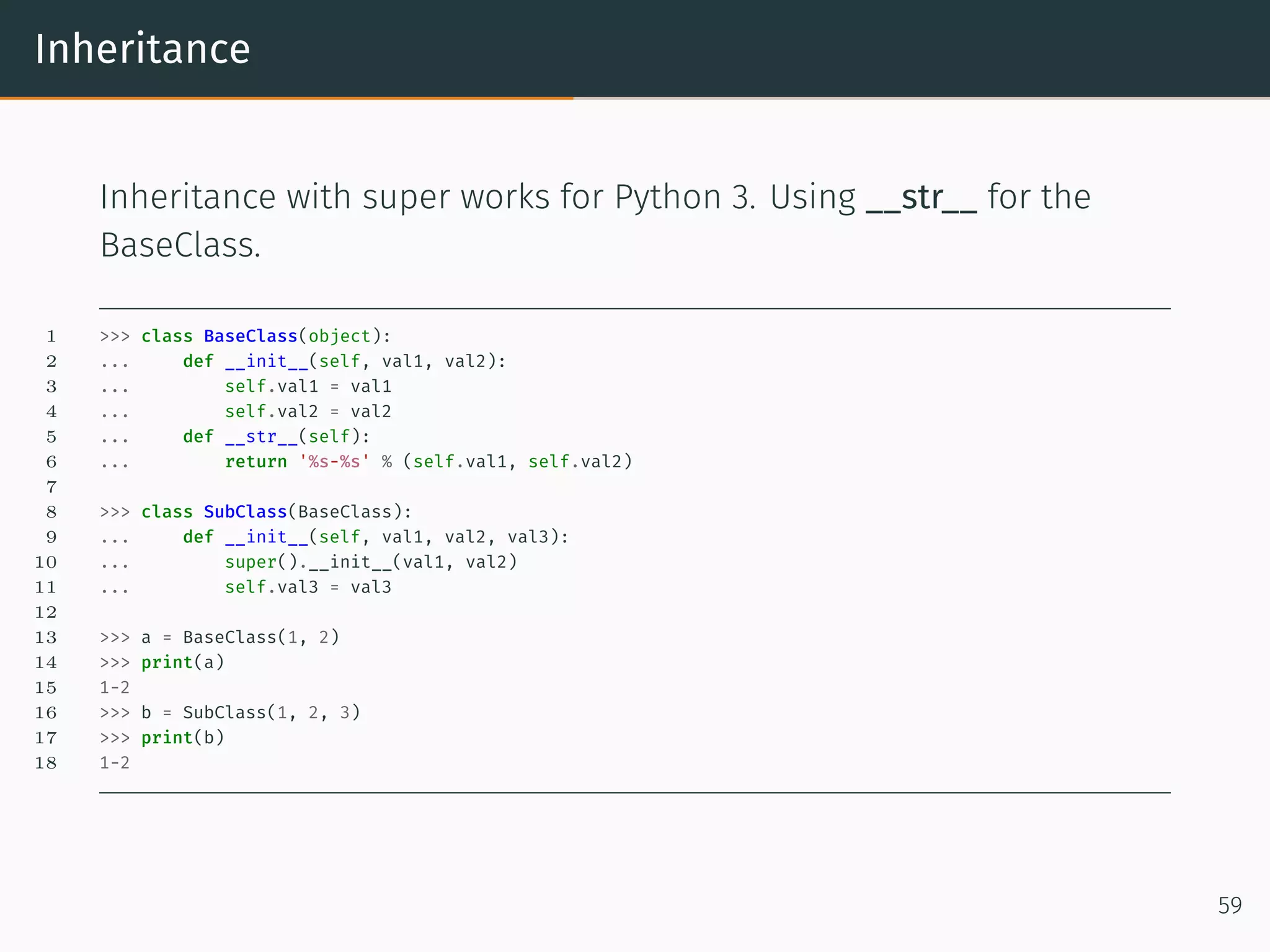

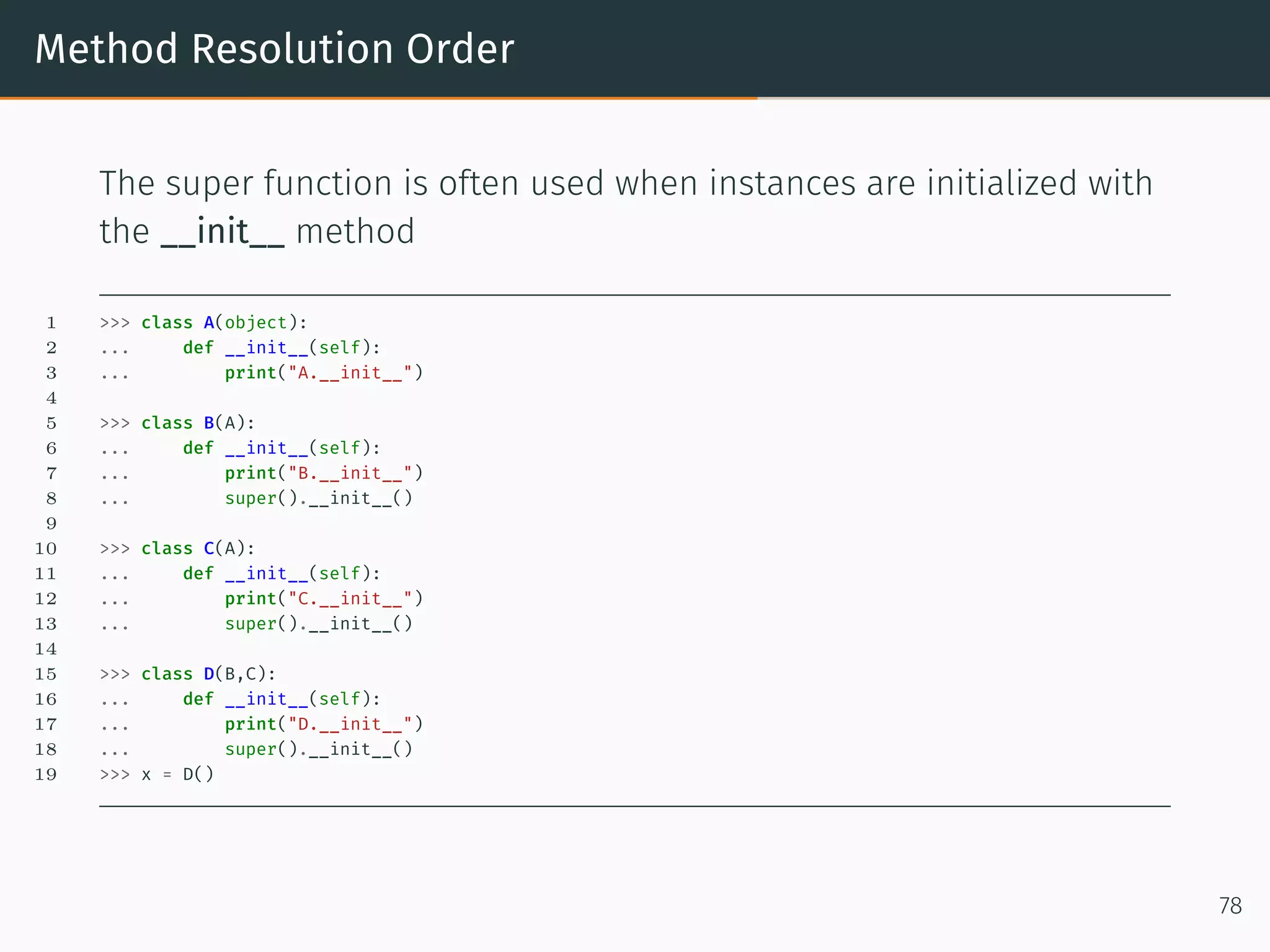

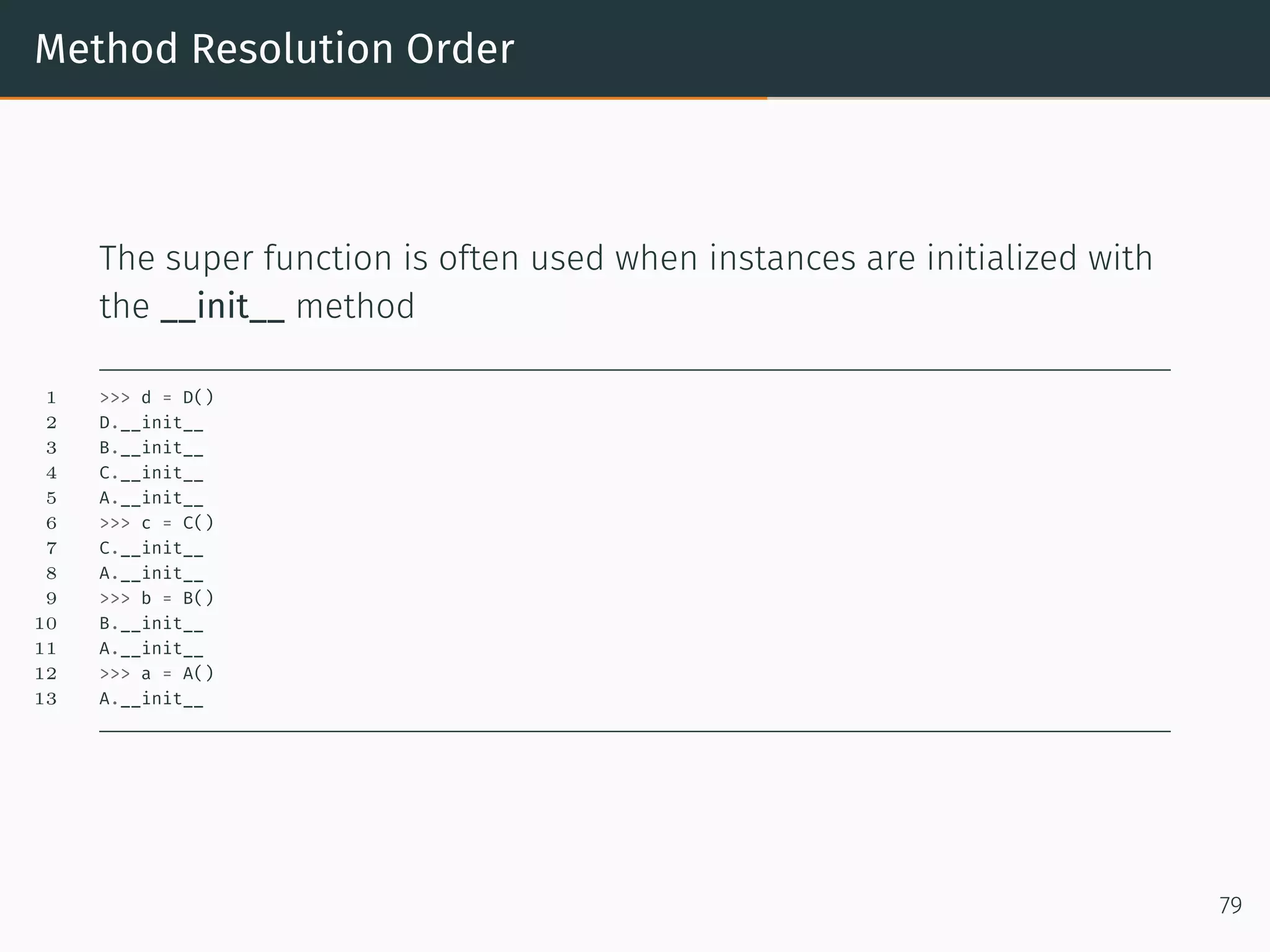

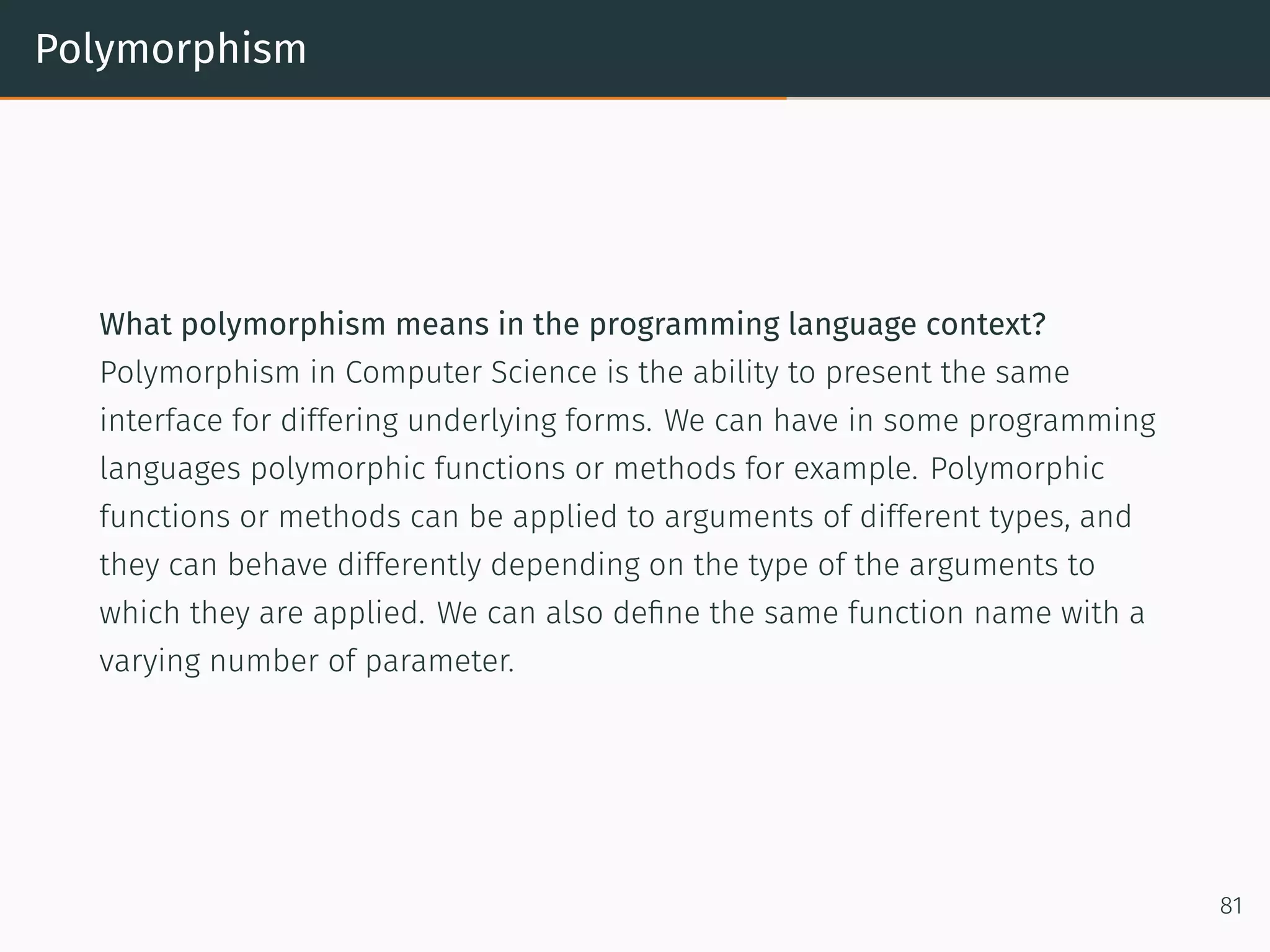

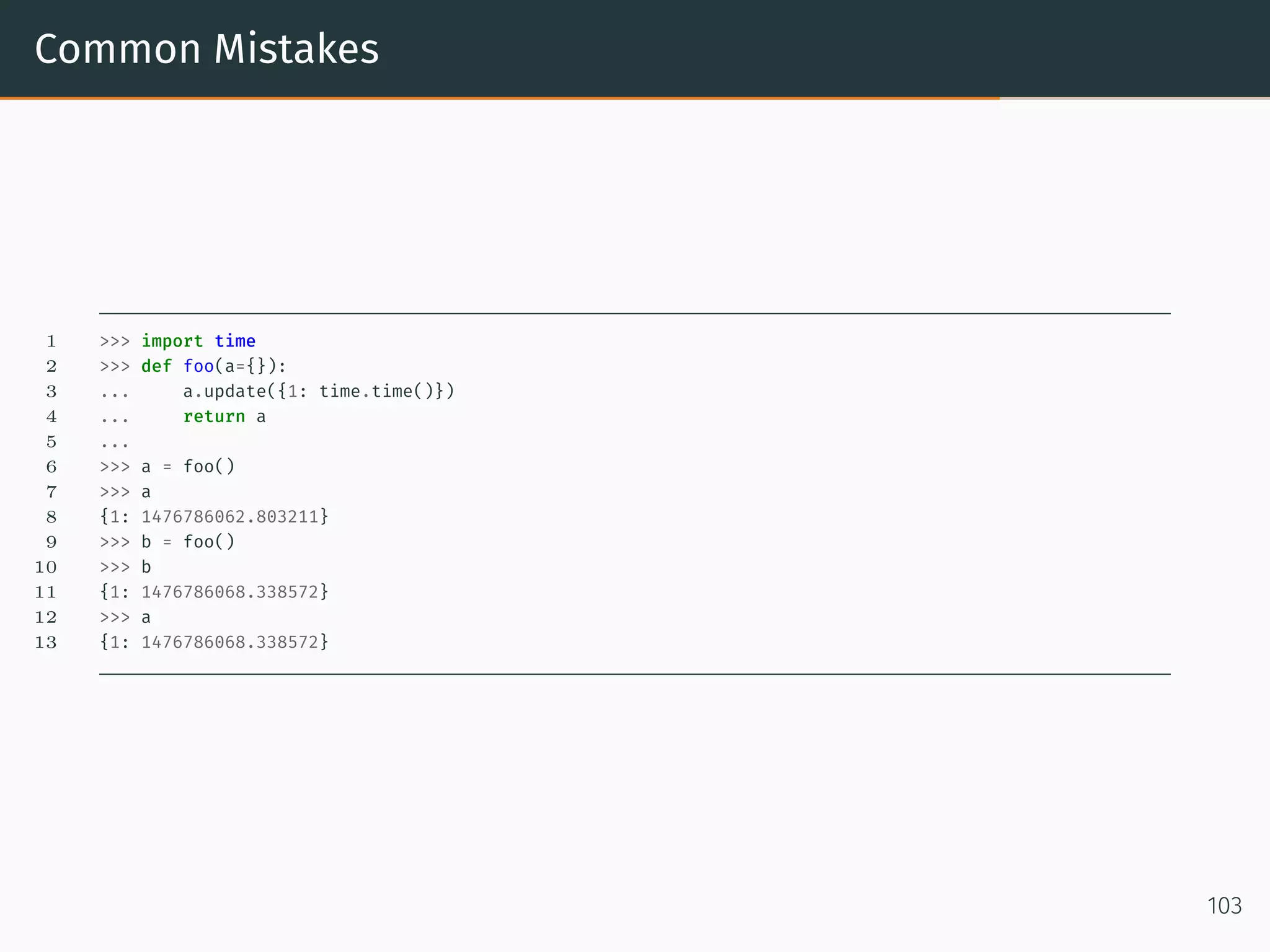

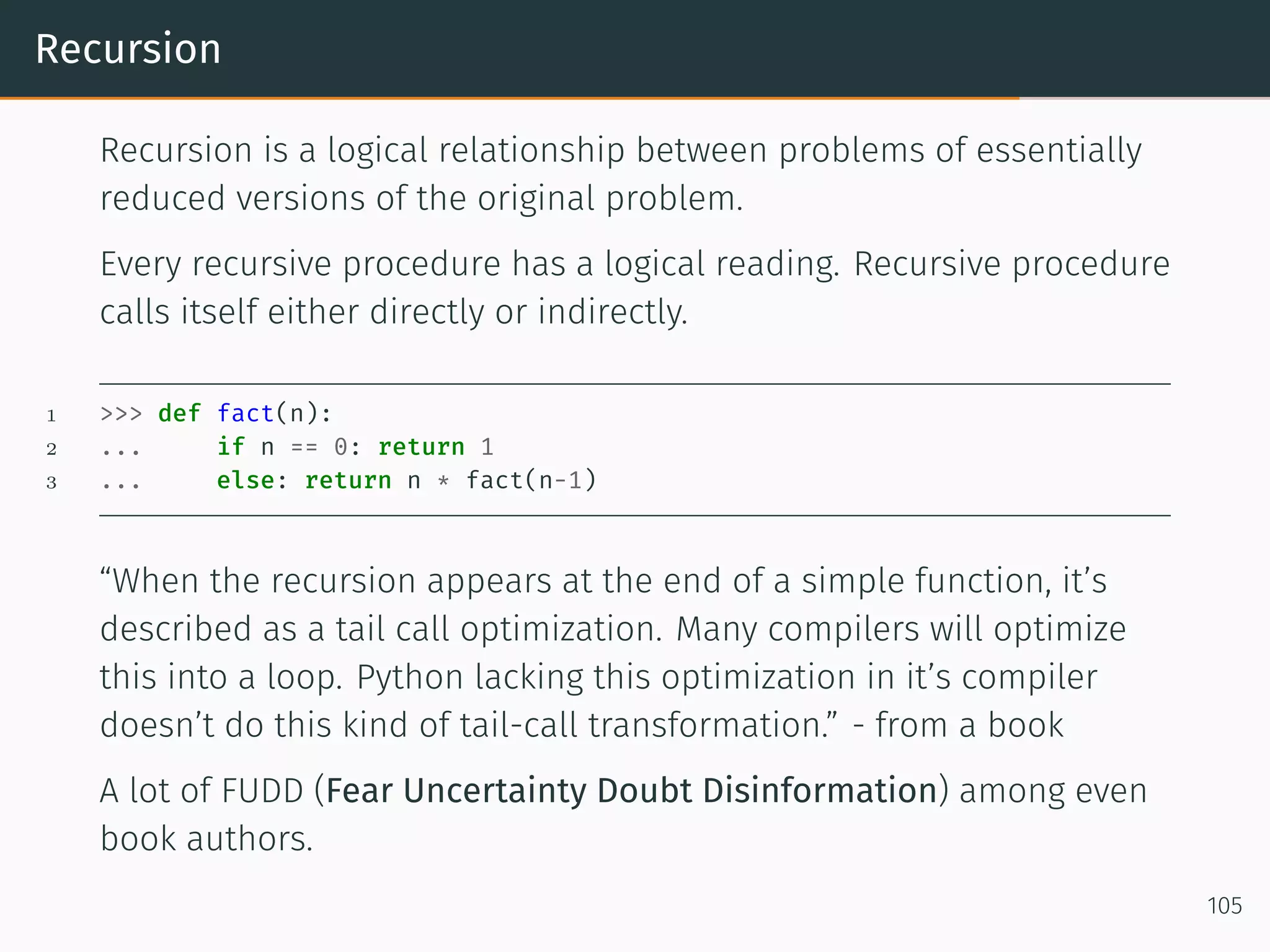

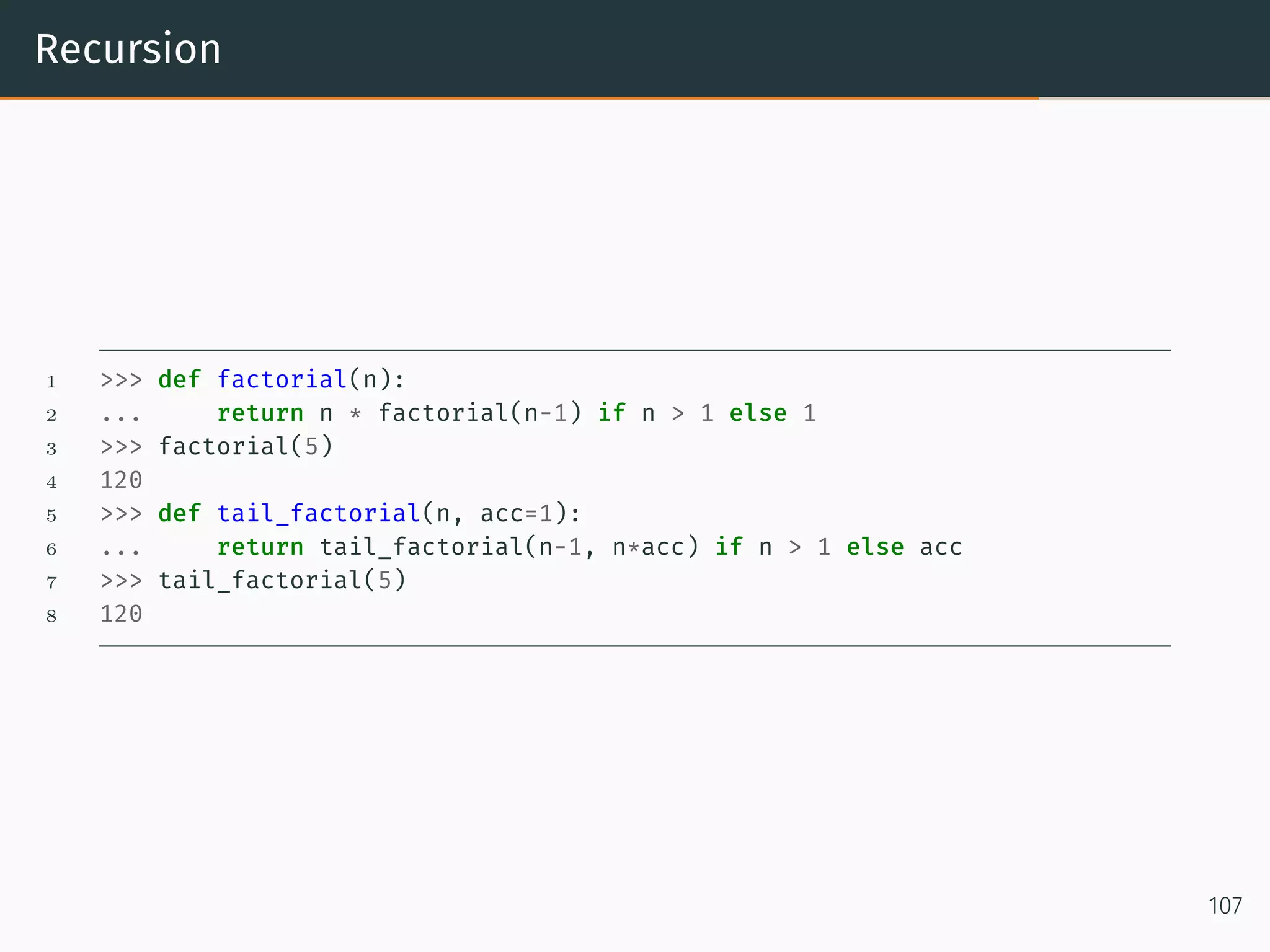

This document provides examples of Python built-in functions such as map, filter, all, any, getattr, hasattr, setattr, callable, isinstance and issubclass, together with closures and memoization decorators. Built-ins such as map and filter apply a function across an iterable, while decorators extend the behavior of an existing function without changing its code. Closures let a function remember values from an enclosing scope even after that scope has finished executing. The @decorator syntax is shown to be equivalent to passing a function to another function and rebinding the name to the result.
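The introspection built-ins named above (getattr, hasattr, setattr, callable, isinstance, issubclass) are not demonstrated in the transcribed slides below. A minimal sketch of each, assuming a hypothetical `Robot` class and `CleaningRobot` subclass invented purely for illustration:

```python
class Robot:
    """Hypothetical class used only to illustrate the built-ins."""
    def __init__(self, name):
        self.name = name

    def greet(self):
        return "Hi, I am " + self.name


class CleaningRobot(Robot):     # hypothetical subclass
    pass


r = CleaningRobot("Marvin")

print(getattr(r, "name"))                     # Marvin
print(getattr(r, "age", 0))                   # 0: default returned because r has no 'age'
print(hasattr(r, "greet"))                    # True
setattr(r, "age", 3)                          # same effect as r.age = 3
print(r.age)                                  # 3
print(callable(r.greet), callable(r.name))    # True False
print(isinstance(r, Robot))                   # True: instances of subclasses count
print(issubclass(CleaningRobot, Robot))       # True
```

getattr and setattr are mainly useful when the attribute name is only known at run time, for example when it comes from a string.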

![map](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-5-2048.jpg)

```python
>>> def square(number):
...     return number * number
...
>>> list(map(square, [1, 2, 3, 4, 5, 6]))
[1, 4, 9, 16, 25, 36]

>>> # Can also be written as a list comprehension.
>>> [square(x) for x in [1, 2, 3, 4, 5, 6]]
[1, 4, 9, 16, 25, 36]

>>> # Another example of map
>>> list(map(pow, [2, 3, 4], [10, 11, 12]))
[1024, 177147, 16777216]
```
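In Python 3, map returns a lazy iterator rather than a list, which is why the slide wraps it in list(). A short sketch of what that means in practice, reusing the slide's `square` function:

```python
def square(number):
    return number * number

squares = map(square, [1, 2, 3])
print(next(squares))    # 1: values are computed on demand
print(list(squares))    # [4, 9]: the first value has already been consumed
print(list(squares))    # []: a map object can only be iterated once

# With several iterables, map stops at the shortest one.
print(list(map(pow, [2, 3, 4], [10, 11])))   # [1024, 177147]
```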

![filter](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-6-2048.jpg)

filter keeps the items for which the function returns a truthy value; it returns the original items, not the function's results. Since square(0) is 0 (falsy), only the zeros are dropped:

```python
>>> def square(number):
...     return number * number
...
>>> list(map(square, [0, 1, 2, 3, 4, 5, 6, 0]))
[0, 1, 4, 9, 16, 25, 36, 0]

>>> list(filter(square, [0, 1, 2, 3, 4, 5, 6, 0]))
[1, 2, 3, 4, 5, 6]

>>> # Can also be written as a list comprehension.
>>> [x for x in [0, 1, 2, 3, 4, 5, 6, 0] if square(x)]
[1, 2, 3, 4, 5, 6]
```
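A minimal sketch of two other common filter patterns: a predicate that actually tests a condition, and None as the function, which keeps the truthy items themselves. The even-number predicate is just an illustrative choice:

```python
values = [0, 1, 2, 3, 4, 5, 6, 0]

# filter keeps the original items for which the function returns a truthy value.
print(list(filter(lambda x: x % 2 == 0, values)))   # [0, 2, 4, 6, 0]

# Passing None as the function keeps the truthy items themselves.
print(list(filter(None, values)))                    # [1, 2, 3, 4, 5, 6]

# To filter and transform in one step, a comprehension combines both ideas.
print([x * x for x in values if x % 2 == 0])         # [0, 4, 16, 36, 0]
```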

![all](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-7-2048.jpg)

```python
>>> all([True, False])
False
>>> all([True, True])
True

>>> def is_positive(number):
...     return number > 0
...
>>> all([is_positive(x) for x in [1, 2]])
True
>>> all([is_positive(x) for x in [1, -1]])
False
>>> all([is_positive(x) for x in [-1, -2]])
False
```
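Two details worth knowing about all, sketched below with the same `is_positive` helper: passing a generator expression instead of a list lets all stop at the first falsy result without building the whole list, and all of an empty iterable is True because there is no counterexample.

```python
def is_positive(number):
    return number > 0

numbers = [3, 8, -1, 5]
# The generator expression is consumed lazily; all() stops at -1.
print(all(is_positive(x) for x in numbers))   # False
# Vacuous truth: nothing in the iterable is falsy.
print(all([]))                                 # True
```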

![any](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-8-2048.jpg)

```python
>>> any([True, False])
True
>>> any([False, False])
False

>>> def is_positive(number):
...     return number > 0
...
>>> any([is_positive(x) for x in [-1, 1]])
True
>>> any([is_positive(x) for x in [-1, -2]])
False
```
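any short-circuits as soon as it sees a truthy value. A small sketch that makes this visible by recording every argument passed to a modified `is_positive` (the `checked` list is added only for illustration):

```python
checked = []

def is_positive(number):
    checked.append(number)     # record each call so the short-circuit is visible
    return number > 0

print(any(is_positive(x) for x in [-1, 2, -3, 4]))   # True
print(checked)                                        # [-1, 2]: stopped at the first True
print(any([]))                                        # False: no truthy item exists
```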

![Closure](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-22-2048.jpg)

```python
>>> del generate_adder_func
>>> 'Value is: %d' % add_to_5(5)
'Value is: 10'
```

```python
>>> add_to_5.__closure__
(<cell at 0x1019382e8: int object at 0x1002148f0>,)
>>> type(add_to_5.__closure__[0])
<class 'cell'>
>>> add_to_5.__closure__[0].cell_contents
5
```

As you can see, the `__closure__` attribute of the function `add_to_5` holds a cell that references the int object at 0x1002148f0, which is none other than `n`, the variable defined in `generate_adder_func`. The closure keeps that value alive even after `generate_adder_func` itself has been deleted.
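The session relies on `generate_adder_func`, whose definition is not part of this excerpt. A definition consistent with the output above might look like this; the body is an assumption, and the inner name `adder` is hypothetical:

```python
def generate_adder_func(n):
    # n is a local variable of the enclosing function ...
    def adder(number):
        # ... that the inner function keeps using after generate_adder_func returns.
        return number + n
    return adder

add_to_5 = generate_adder_func(5)
del generate_adder_func                          # the factory is gone, the closure survives
print('Value is: %d' % add_to_5(5))              # Value is: 10
print(add_to_5.__closure__[0].cell_contents)     # 5
```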

![Solution for factorial](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-35-2048.jpg)

```python
>>> def memoize(f):
...     memo = {}
...     def helper(x):
...         if x not in memo:
...             print('Finding the value for %d' % x)
...             memo[x] = f(x)
...             print('Factorial for %d is %d' % (x, memo[x]))
...         return memo[x]
...     return helper
...
>>> @memoize
... def factorial(n):
...     if n == 1:
...         return 1
...     else:
...         return n * factorial(n-1)
...
>>> factorial(5)
Finding the value for 5
Finding the value for 4
Finding the value for 3
Finding the value for 2
Finding the value for 1
Factorial for 1 is 1
Factorial for 2 is 2
Factorial for 3 is 6
Factorial for 4 is 24
Factorial for 5 is 120
120
```
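The `@memoize` line is shorthand for calling `memoize` on the function and rebinding the name. A minimal sketch of the explicit form; it also adds `functools.wraps` (not used on the slide) so the wrapper keeps the original function's name and docstring:

```python
import functools

def memoize(f):
    memo = {}
    @functools.wraps(f)          # copy __name__, __doc__ etc. from f onto helper
    def helper(x):
        if x not in memo:
            memo[x] = f(x)
        return memo[x]
    return helper

def factorial(n):
    """Return n! for n >= 1."""
    return 1 if n == 1 else n * factorial(n - 1)

# Equivalent to writing @memoize above the def statement.
# The recursive call inside factorial now also goes through the cache.
factorial = memoize(factorial)

print(factorial(5))          # 120
print(factorial.__name__)    # factorial (would be 'helper' without functools.wraps)
```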

![Solution for fibonacci](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-36-2048.jpg)

```python
>>> def memoize(f):
...     memo = {}
...     def helper(x):
...         if x not in memo:
...             memo[x] = f(x)
...         return memo[x]
...     return helper
...
>>> @memoize
... def fib(n):
...     if n == 0:
...         return 0
...     elif n == 1:
...         return 1
...     else:
...         return fib(n-1) + fib(n-2)
...
>>> fib(10)
55
```
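The standard library ships a ready-made memoizing decorator, `functools.lru_cache` (and `functools.cache` from Python 3.9, which is an unbounded `lru_cache`). A sketch of the same Fibonacci function using it instead of the hand-written `memoize`:

```python
from functools import lru_cache

@lru_cache(maxsize=None)      # unbounded cache, like the hand-written memo dict
def fib(n):
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)

print(fib(10))                      # 55
print(fib(100))                     # 354224848179261915075
print(fib.cache_info().hits > 0)    # True: repeated sub-calls came from the cache
```

Unlike the slide's `memoize`, `lru_cache` handles any number of hashable arguments and can bound its size.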

![Round robin Generator](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-39-2048.jpg)

Generators are data producers:

```python
>>> def round_robin():
...     while True:
...         yield from [1, 2, 3, 4]
...
>>> a = round_robin()
>>> a
<generator object round_robin at 0x101a89678>
>>> next(a)
1
>>> next(a)
2
>>> next(a)
3
>>> next(a)
4
>>> next(a)
1
>>> next(a)
2
>>> next(a)
3
>>> next(a)
4
```
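The same endless repetition is available as `itertools.cycle`. A sketch, using `itertools.islice` to take a finite number of values from the infinite iterator:

```python
from itertools import cycle, islice

a = cycle([1, 2, 3, 4])        # repeats the sequence forever, like round_robin()
print(list(islice(a, 10)))     # [1, 2, 3, 4, 1, 2, 3, 4, 1, 2]
```

Calling `list()` directly on `a` (or on `round_robin()`) would never return, which is why `islice` is used here.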

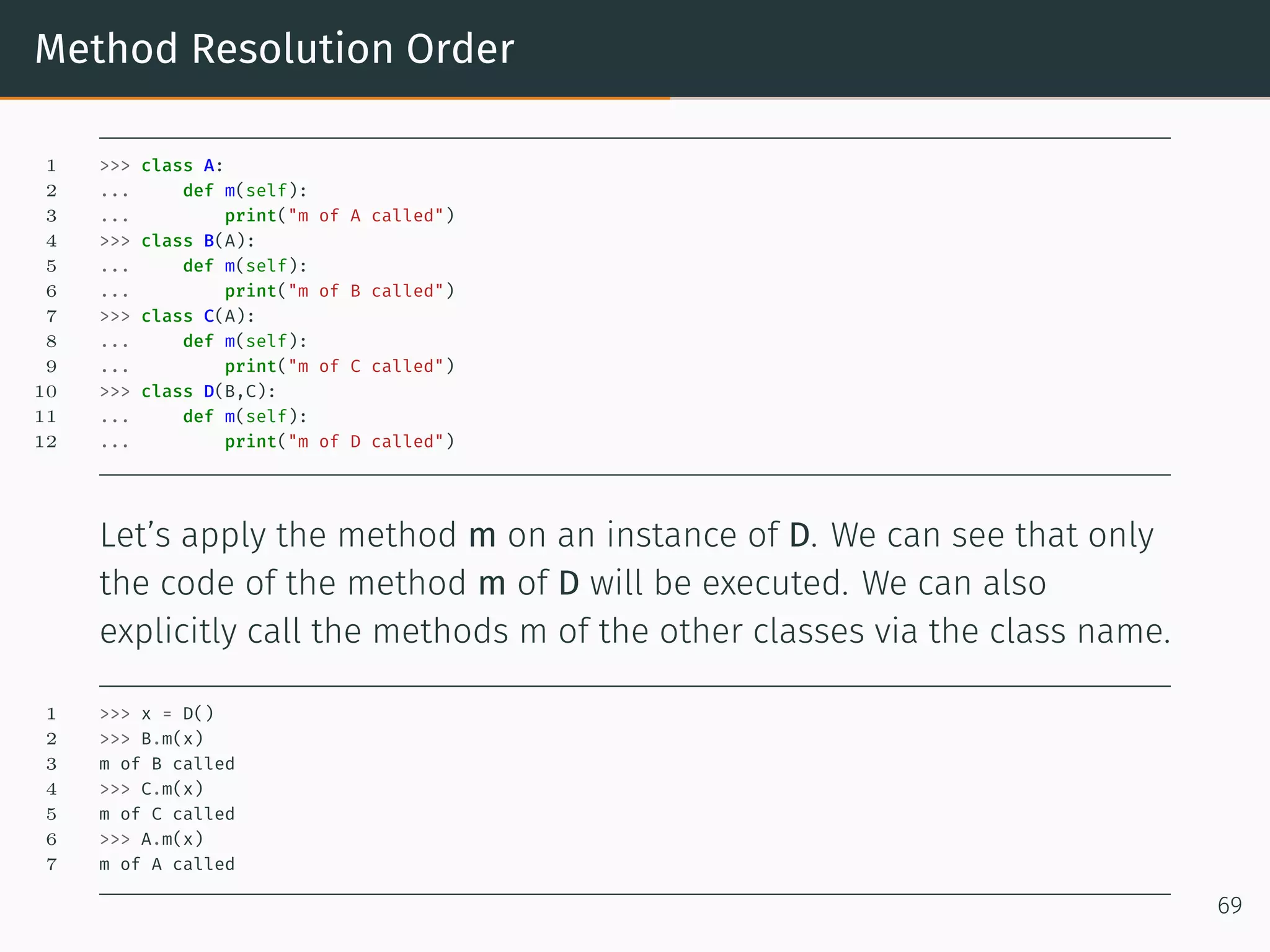

![Method Resolution Order](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-94-2048.jpg)

The question arises how the super function makes its decision: how does it decide which class has to be used? As already mentioned, it uses the so-called method resolution order (MRO), which is based on the "C3 superclass linearization" algorithm. It is called a linearization because the tree structure of the class hierarchy is broken down into a linear order. The mro() method returns this list:

```python
>>> D.mro()
[<class '__main__.D'>, <class '__main__.B'>, <class '__main__.C'>, <class '__main__.A'>, <class 'object'>]
>>> C.mro()
[<class '__main__.C'>, <class '__main__.A'>, <class 'object'>]
>>> B.mro()
[<class '__main__.B'>, <class '__main__.A'>, <class 'object'>]
>>> A.mro()
[<class '__main__.A'>, <class 'object'>]
```
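The definitions of A, B, C and D are not part of this excerpt. A diamond consistent with the printed MROs is assumed here: `B(A)`, `C(A)`, `D(B, C)`, with a hypothetical `who` method. The sketch shows that each `super()` call moves to the next class in the MRO of the instance, not necessarily to the parent named in the class statement:

```python
class A:
    def who(self):
        return ["A"]

class B(A):
    def who(self):
        return ["B"] + super().who()

class C(A):
    def who(self):
        return ["C"] + super().who()

class D(B, C):
    def who(self):
        return ["D"] + super().who()

print([cls.__name__ for cls in D.mro()])   # ['D', 'B', 'C', 'A', 'object']
print(D().who())    # ['D', 'B', 'C', 'A']: for a D instance, super() in B reaches C
print(B().who())    # ['B', 'A']: for a B instance, super() in B reaches A
```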

![Polymorphism](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-97-2048.jpg)

```python
>>> def f(x, y):
...     print("values:", x, y)
...
>>> f(42, 43)
values: 42 43
>>> f(42, 43.7)
values: 42 43.7
>>> f(42.3, 43)
values: 42.3 43
>>> f(42.0, 43.9)
values: 42.0 43.9
>>> f([3, 5, 6], (3, 5))
values: [3, 5, 6] (3, 5)
```
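The same idea applies to methods: Python does not check an argument's type, only whether it supports the operations used on it ("duck typing"). A sketch with two unrelated hypothetical classes, `Dog` and `Robot`, that share no base class:

```python
class Dog:
    def speak(self):
        return "Woof"

class Robot:
    def speak(self):
        return "Beep"

def introduce(thing):
    # Works with any object that has a speak() method; no common base class needed.
    return thing.speak()

print([introduce(x) for x in (Dog(), Robot())])   # ['Woof', 'Beep']
```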

![Slots](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-102-2048.jpg)

Because `__slots__` lists only `val`, we fail to create an attribute `new`:

```python
>>> class Foo:
...     __slots__ = ['val']
...     def __init__(self, v):
...         self.val = v
...
>>> x = Foo(42)
>>> x.val
42
>>> x.new = "not possible"
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
AttributeError: 'Foo' object has no attribute 'new'
```
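The reason the assignment fails is that instances of a class with `__slots__` get no per-instance `__dict__`, which is also what saves memory. A sketch comparing `Foo` with a hypothetical `Bar` that is identical except for the missing `__slots__`:

```python
class Foo:
    __slots__ = ['val']
    def __init__(self, v):
        self.val = v

class Bar:                      # same class without __slots__, for comparison
    def __init__(self, v):
        self.val = v

x, y = Foo(42), Bar(42)
print(hasattr(x, '__dict__'))   # False: no per-instance dict is allocated
print(hasattr(y, '__dict__'))   # True
y.new = "possible"              # ordinary instances accept new attributes
print(y.__dict__)               # {'val': 42, 'new': 'possible'}
```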

![Classes and Class Creation](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-105-2048.jpg)

The relationship between type and class. When you defined classes so far, you may have asked yourself what is happening behind the scenes. We have already seen that applying type to an object returns the class of which the object is an instance:

```python
>>> x = [4, 5, 9]
>>> y = "Hello"
>>> print(type(x), type(y))
<class 'list'> <class 'str'>
```

If you apply type to a class itself, you get the class type returned:

```python
>>> print(type(list), type(str))
<class 'type'> <class 'type'>
```
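Since classes are themselves instances of `type`, `type` can also be called with three arguments (class name, tuple of base classes, namespace dict) to build a class at run time. A minimal sketch; `Robot`, `counter` and `greet` are hypothetical names chosen for illustration:

```python
def greet(self):
    return "Hi, I am " + self.name

# Roughly equivalent to:
#   class Robot:
#       counter = 0
#       def greet(self): ...
Robot = type("Robot", (), {"counter": 0, "greet": greet})

r = Robot()
r.name = "Marvin"
print(type(r).__name__)   # Robot
print(r.greet())          # Hi, I am Marvin
print(type(Robot))        # <class 'type'>
```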

![Classes and Class Creation](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-106-2048.jpg)

This is the same as applying type to type(x) and type(y):

```python
>>> x = [4, 5, 9]
>>> y = "Hello"
>>> print(type(x), type(y))
<class 'list'> <class 'str'>
>>> print(type(type(x)), type(type(y)))
<class 'type'> <class 'type'>
```

![Singleton](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-118-2048.jpg)

```python
>>> class Singleton(type):
...     _instances = {}
...     def __call__(cls, *args, **kwargs):
...         if cls not in cls._instances:
...             cls._instances[cls] = super().__call__(*args, **kwargs)
...         return cls._instances[cls]
...
>>> class SingletonClass(metaclass=Singleton):
...     pass
...
>>> class RegularClass:
...     pass
...
>>> x = SingletonClass()
>>> y = SingletonClass()
>>> print(x is y)
True
>>> x = RegularClass()
>>> y = RegularClass()
>>> print(x is y)
False
```
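One consequence of this metaclass is easy to miss: after the first instantiation, `__call__` returns the cached object without running `__init__` again, so later constructor arguments are silently ignored. A sketch with a hypothetical `Config` class:

```python
class Singleton(type):
    _instances = {}
    def __call__(cls, *args, **kwargs):
        if cls not in cls._instances:
            cls._instances[cls] = super().__call__(*args, **kwargs)
        return cls._instances[cls]

class Config(metaclass=Singleton):      # hypothetical class for illustration
    def __init__(self, value):
        self.value = value

first = Config(1)
second = Config(2)        # __init__ is not run again; the cached instance is returned
print(first is second)    # True
print(second.value)       # 1
```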

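![Common Mistakes](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-120-2048.jpg)

Assignment does not copy a list: `b = a` binds a second name to the same list object, so changes made through `b` are visible through `a`:

```python
>>> a = [1, 2, 3]
>>> b = a
>>> b.append(4)
>>> b
[1, 2, 3, 4]
>>> a
[1, 2, 3, 4]
```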
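When an independent list is wanted, make a copy explicitly. A sketch of a shallow copy (slicing; `list(a)` or `a.copy()` work the same way) and of `copy.deepcopy`, which is needed once the list contains other mutable objects:

```python
import copy

a = [1, 2, 3]
b = a[:]              # shallow copy
b.append(4)
print(a, b)           # [1, 2, 3] [1, 2, 3, 4]

nested = [[1, 2], [3]]
shallow = list(nested)
deep = copy.deepcopy(nested)
nested[0].append(99)
print(shallow[0])     # [1, 2, 99]: the inner list is still shared
print(deep[0])        # [1, 2]: deepcopy copied the inner lists as well
```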

![Common Mistakes](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-121-2048.jpg)

Default argument values are evaluated once, when the def statement runs, so every call that relies on the default shares the same list:

```python
>>> def foo(a=[]):
...     a.append(1)
...     return a
...
>>> foo()
[1]
>>> foo()
[1, 1]
>>> foo()
[1, 1, 1]
```
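The usual fix is to use `None` as a sentinel default and create a fresh list inside the function. A minimal sketch:

```python
def foo(a=None):
    # Create a new list on every call instead of sharing one default object.
    if a is None:
        a = []
    a.append(1)
    return a

print(foo())              # [1]
print(foo())              # [1]
print(foo.__defaults__)   # (None,): the stored default is never mutated
```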

![Python 3.x](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-138-2048.jpg)

```python
>>> a, b = range(2)
>>> a, b
(0, 1)
>>> a, b, *rest = range(10)
>>> a, b, rest
(0, 1, [2, 3, 4, 5, 6, 7, 8, 9])
```

![Python 3.x](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-139-2048.jpg)

*rest can go anywhere:

```python
>>> a, *rest, b = range(10)
>>> a, rest, b
(0, [1, 2, 3, 4, 5, 6, 7, 8], 9)
```
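A few edge cases of starred assignment, sketched below: the starred name always receives a list (possibly empty, and a list even when unpacking a tuple), and the same syntax works in a for loop target.

```python
first, *rest = [1]
print(first, rest)        # 1 []: the starred name gets an empty list

*head, last = (1, 2, 3)
print(head, last)         # [1, 2] 3: a list even though the source is a tuple

for name, *scores in [("ann", 1, 2), ("bob", 3)]:
    print(name, scores)   # prints "ann [1, 2]", then "bob [3]"
```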

![Python 3.x](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-145-2048.jpg)

I reordered the keyword arguments of a function, but some code was passing those arguments positionally, relying on the old order.

```python
>>> def maxall(iterable, key=None):
...     """
...     A list of all max items from the iterable
...     """
...     key = key or (lambda x: x)
...     m = max(iterable, key=key)
...     return [i for i in iterable if key(i) == key(m)]
...
>>> maxall(['a', 'ab', 'bc'], len)
['ab', 'bc']
```

![Python 3.x](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-146-2048.jpg)

The max builtin supports max(a, b, c). We should allow that too:

```python
>>> def maxall(*args, key=None):
...     """
...     A list of all max items from the iterable
...     """
...     if len(args) == 1:
...         iterable = args[0]
...     else:
...         iterable = args
...     key = key or (lambda x: x)
...     m = max(iterable, key=key)
...     return [i for i in iterable if key(i) == key(m)]
...
```

We just broke any code that passed key as a second positional argument instead of using the keyword:

```python
>>> maxall(['a', 'ab', 'ac'], len)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "<stdin>", line 10, in maxall
TypeError: unorderable types: builtin_function_or_method() > list()
```

(Python 3.6 and later word this error as `'>' not supported between instances of 'builtin_function_or_method' and 'list'`.)

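![Python 3.x](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-147-2048.jpg)

Actually, in Python 2, which orders objects of unrelated types arbitrarily instead of raising an error, the call would not fail; it would silently return [['a', 'ab', 'ac']]. By the way, max shows that this calling convention is already possible in Python 2, but only if you write your function in C. In hindsight, we should have used `maxall(iterable, *, key=None)` from the start, so that key could only ever be passed by keyword.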
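A sketch of the keyword-only version the slide recommends. The bare `*` makes `key` keyword-only, so the positional call that caused the problem is rejected immediately, and the signature could later be widened to `maxall(*args, key=None)` without breaking any existing caller:

```python
def maxall(iterable, *, key=None):
    """A list of all max items from the iterable."""
    key = key or (lambda x: x)
    m = max(iterable, key=key)
    return [i for i in iterable if key(i) == key(m)]

print(maxall(['a', 'ab', 'bc'], key=len))   # ['ab', 'bc']

try:
    maxall(['a', 'ab', 'bc'], len)          # key passed positionally
except TypeError as exc:
    print("TypeError:", exc)                # too many positional arguments
```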

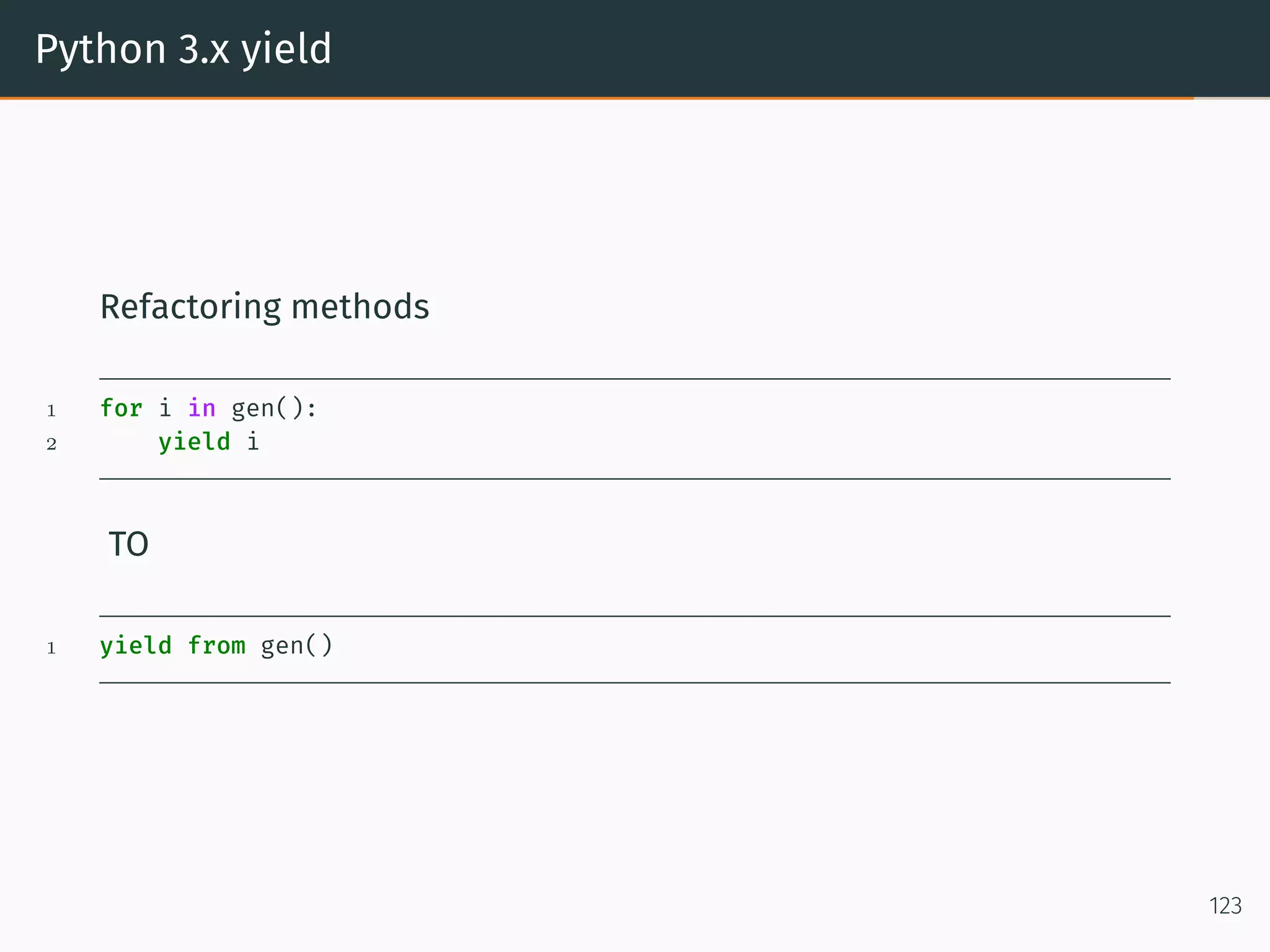

![Python 3.x: yield](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-148-2048.jpg)

Bad:

```python
def dup(n):
    A = []
    for i in range(n):
        A.extend([i, i])
    return A
```

Good:

```python
def dup(n):
    for i in range(n):
        yield i
        yield i
```

Better:

```python
def dup(n):
    for i in range(n):
        yield from [i, i]
```
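The generator versions only produce values as they are requested, whereas the list version builds all 2n items up front. A sketch using the "Better" `dup` with `itertools.islice` to take just the first few values of a very large sequence:

```python
from itertools import islice

def dup(n):
    for i in range(n):
        yield from [i, i]

print(list(dup(3)))                    # [0, 0, 1, 1, 2, 2]
# Only the four requested values are generated, so a huge n costs nothing extra here.
print(list(islice(dup(10**12), 4)))    # [0, 0, 1, 1]
```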

![namedtuple](https://image.slidesharecdn.com/ef751a30-71a3-4b9f-acf6-c520062b4417-161026041224/75/PythonOOP-150-2048.jpg)

namedtuple: factory function for tuples with named fields.

```python
>>> from collections import namedtuple
>>> import csv
>>> Point = namedtuple('Point', 'x y')
>>> with open("points.csv", newline="") as f:
...     for point in map(Point._make, csv.reader(f)):
...         print(point.x, point.y)
...
1 2
2 3
3.4 5.6
10 10
>>> p = Point(x=11, y=22)
>>> p._asdict()
{'x': 11, 'y': 22}
>>> Color = namedtuple('Color', 'red green blue')
>>> Pixel = namedtuple('Pixel', Point._fields + Color._fields)
>>> Pixel(11, 22, 128, 255, 0)
Pixel(x=11, y=22, red=128, green=255, blue=0)
```

In Python 3.1 to 3.7, `_asdict()` returns `OrderedDict([('x', 11), ('y', 22)])` instead; since 3.8 it returns a regular dict.
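Two related features, sketched below: since named tuples are immutable, `_replace` returns a modified copy, and `typing.NamedTuple` offers a class-based way to declare the same kind of type with annotations and defaults. `TypedPoint` is a hypothetical name:

```python
from collections import namedtuple
from typing import NamedTuple

Point = namedtuple('Point', 'x y')
p = Point(x=11, y=22)
q = p._replace(x=33)     # returns a new Point; p itself is unchanged
print(p, q)              # Point(x=11, y=22) Point(x=33, y=22)

class TypedPoint(NamedTuple):   # class-based alternative with type annotations
    x: float
    y: float = 0.0              # fields may have default values

print(TypedPoint(1.5))          # TypedPoint(x=1.5, y=0.0)
```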