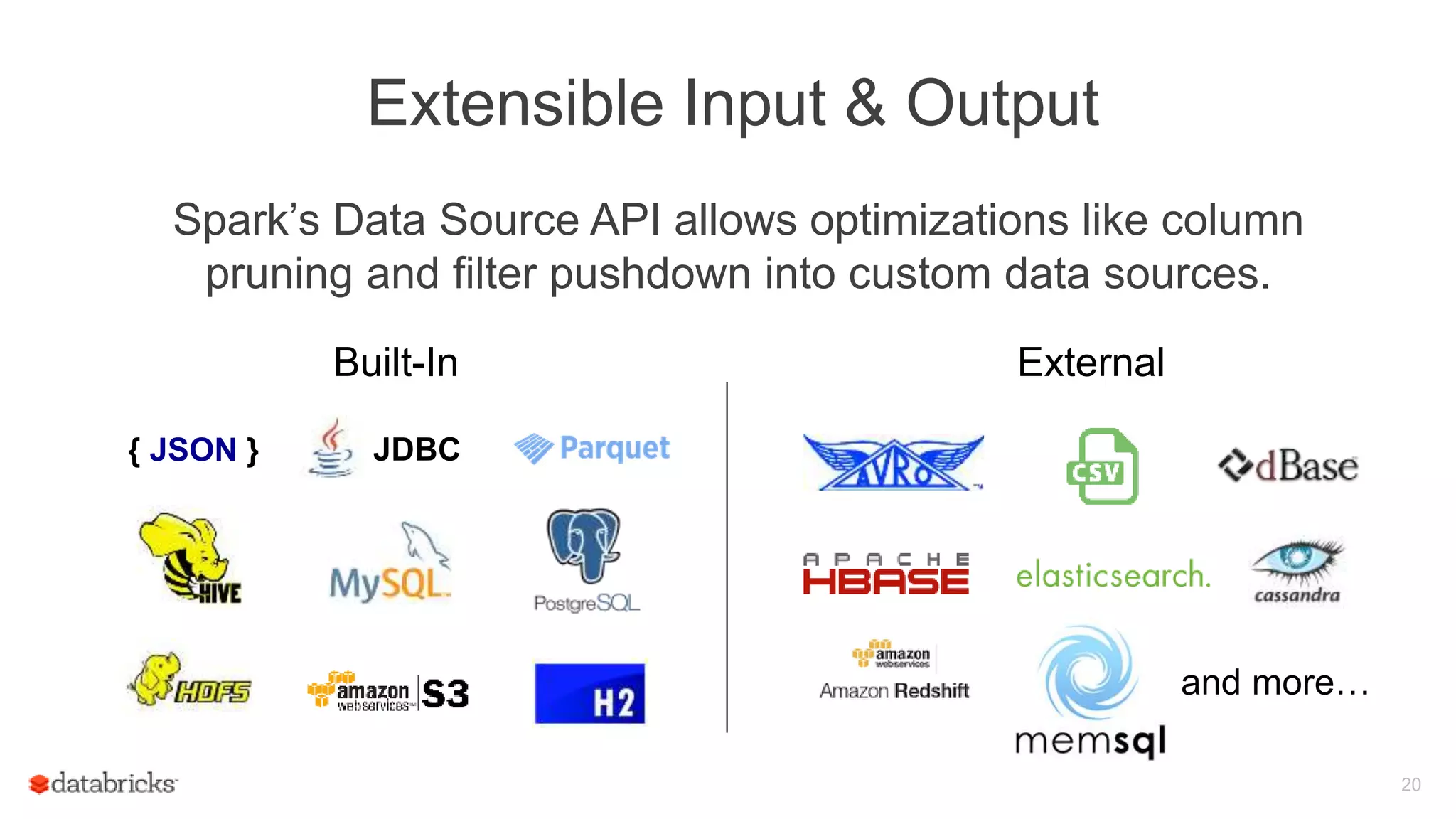

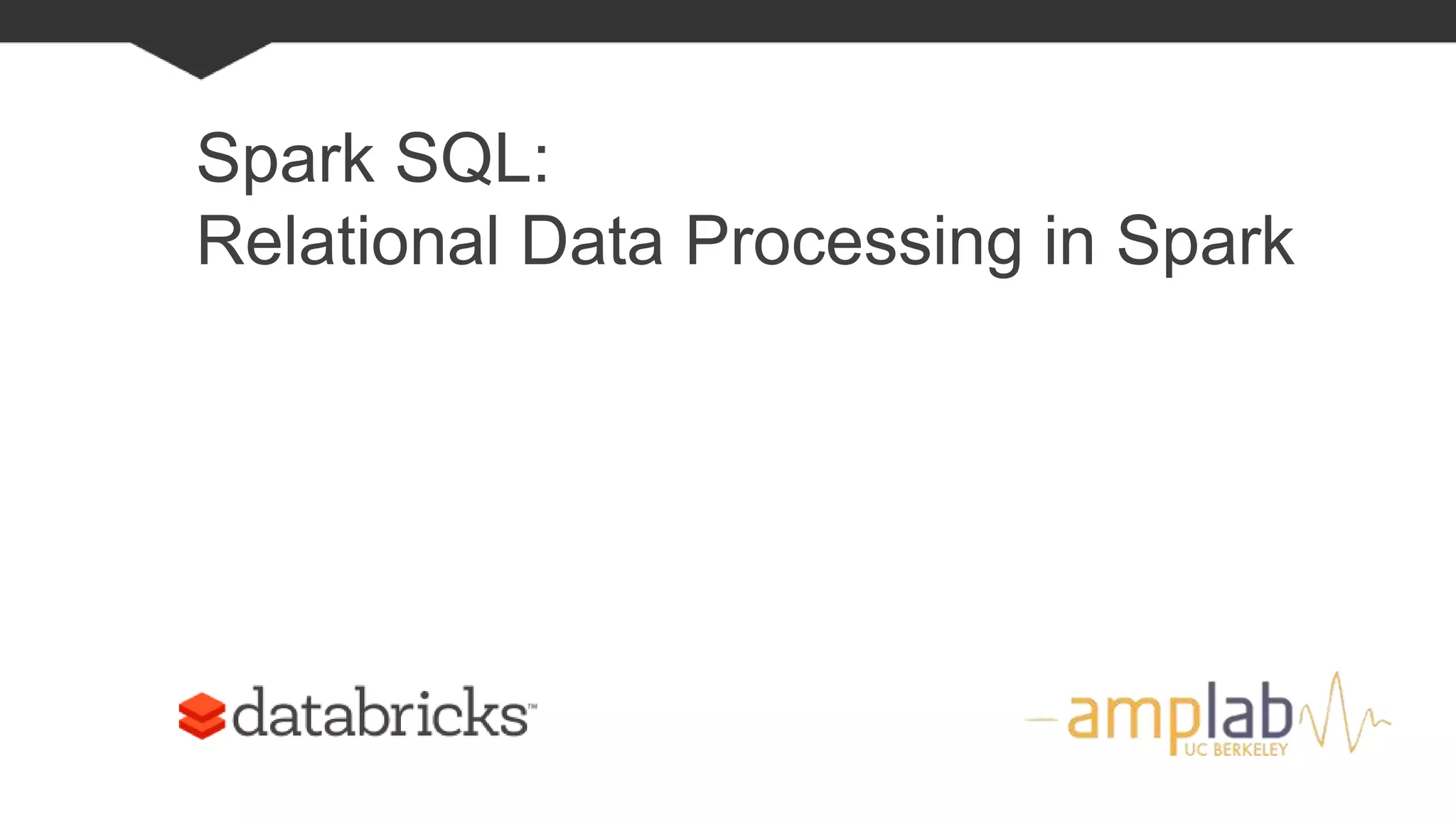

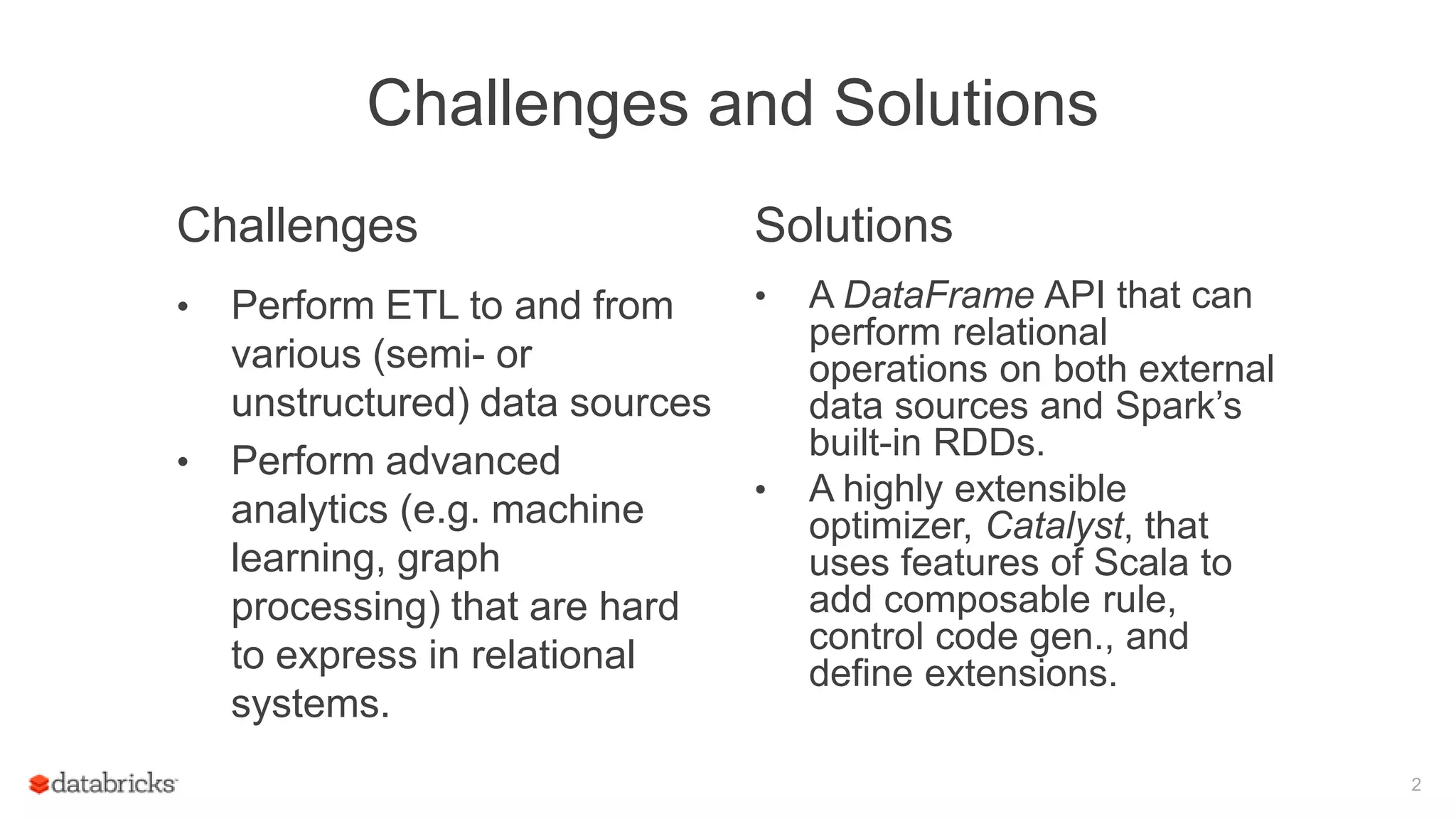

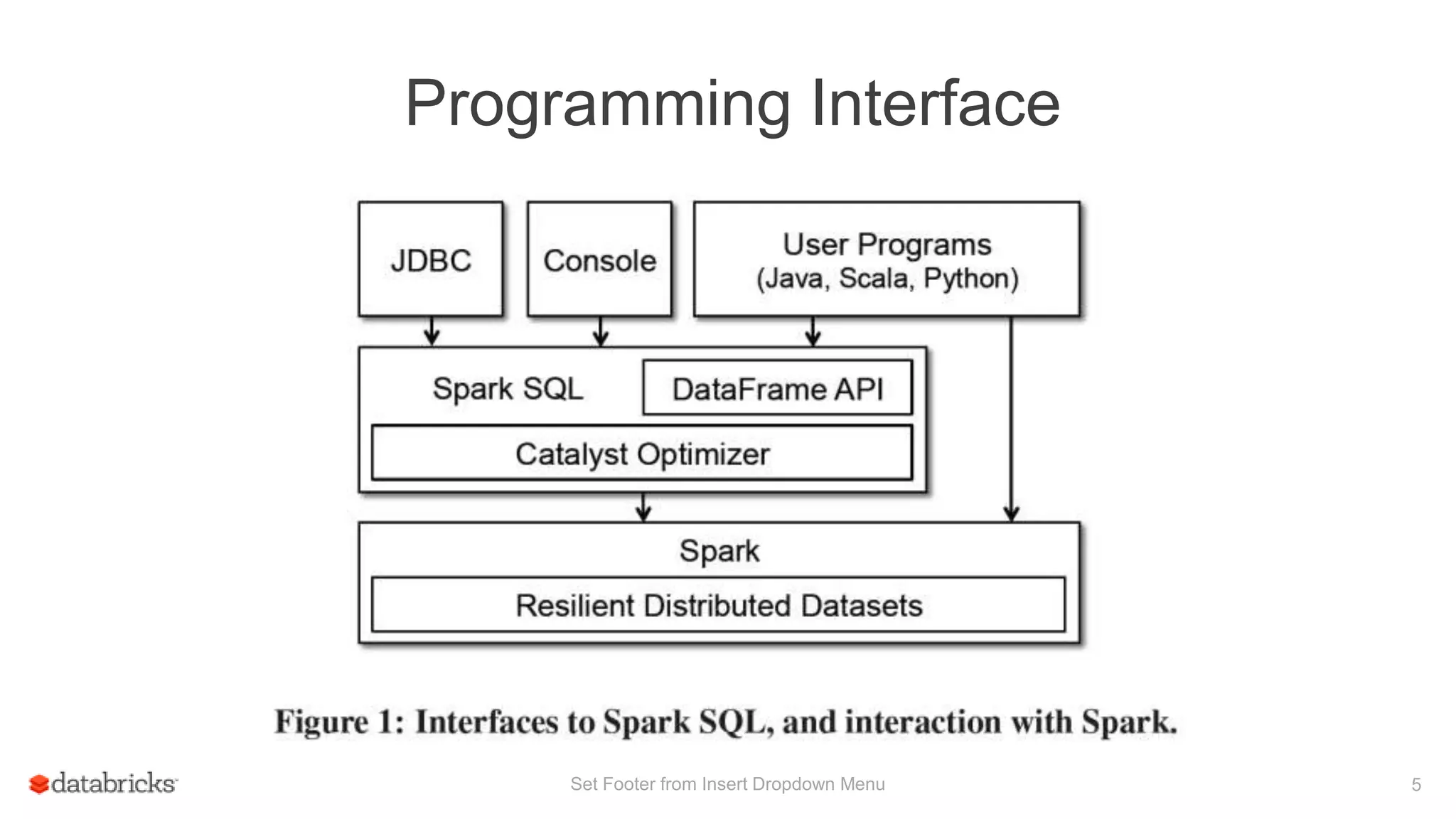

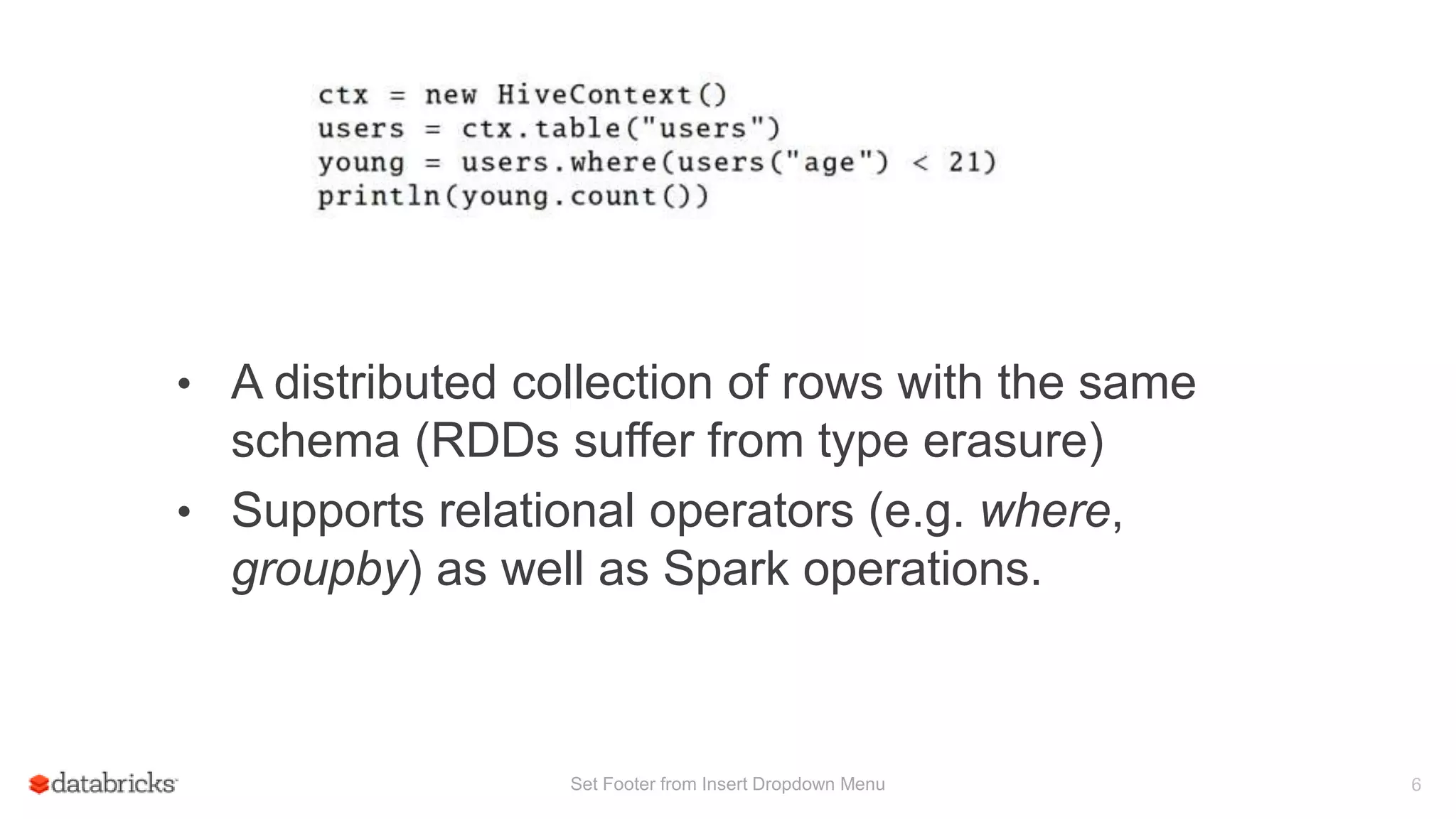

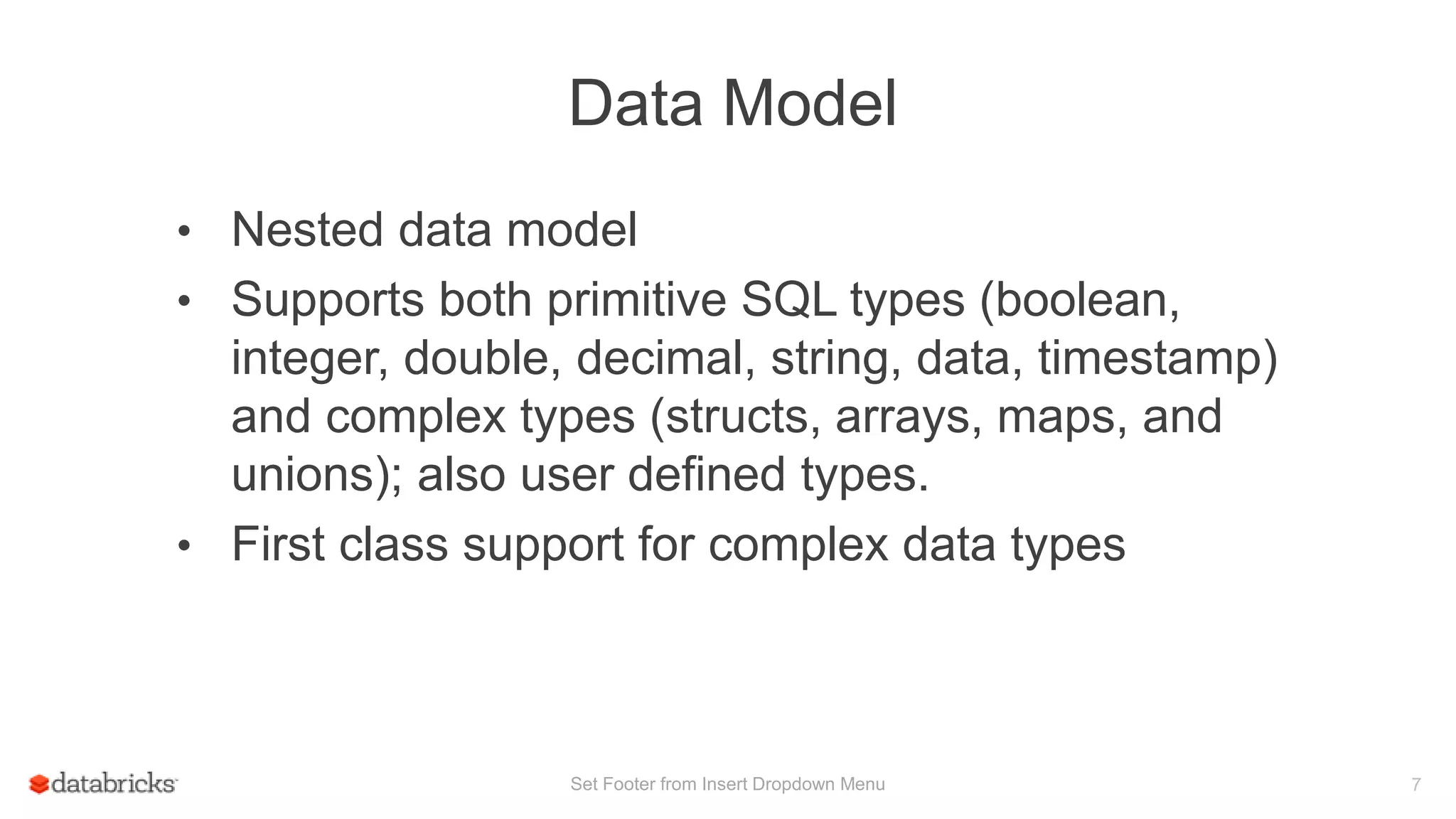

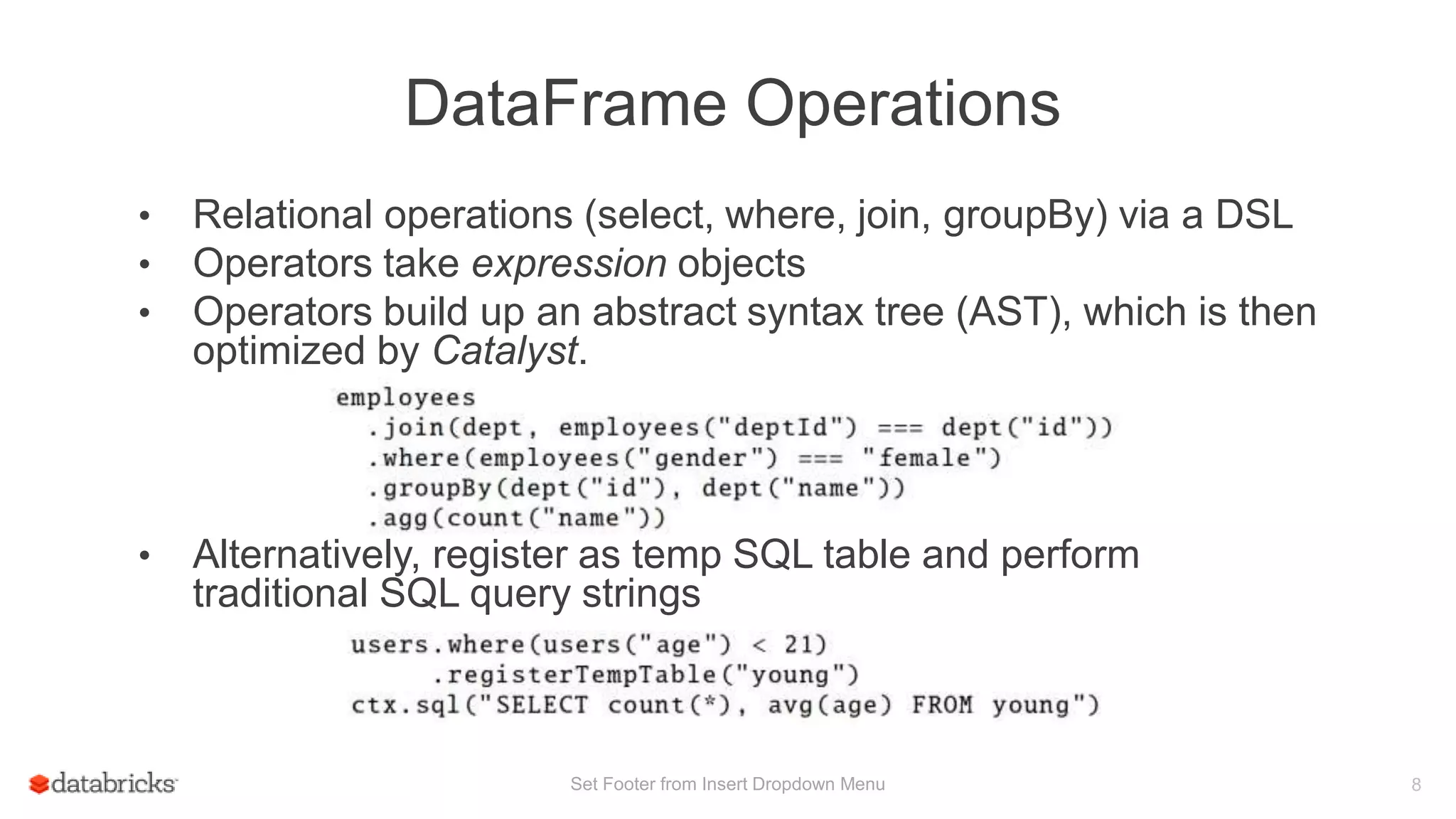

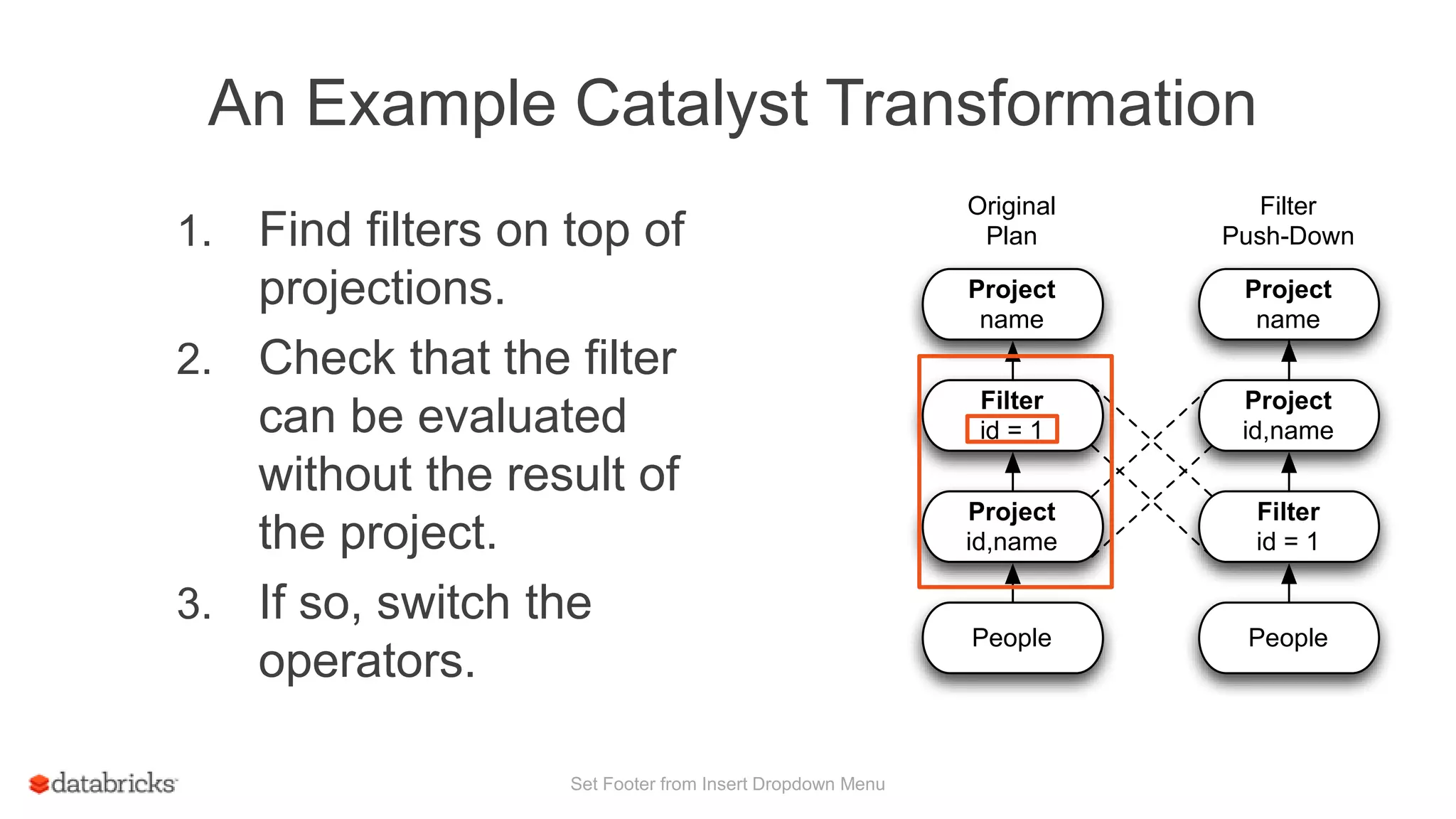

Spark SQL is the Spark module for relational processing of structured data from a variety of sources, and it integrates with Spark's advanced analytics libraries. It provides a DataFrame API for relational operations and uses an extensible optimizer, Catalyst, to plan queries. SQL-like queries can be mixed with native Spark operations in the same program, so relational and procedural code share one execution engine.

![DataFrame noun – [dey-tuh-freym] 1. A distributed collection of rows organized into named columns. 2. An abstraction for selecting, filtering, aggregating and plotting structured data (cf. R, Pandas).](https://image.slidesharecdn.com/sparksql-171008043944/75/Spark-Sql-and-DataFrame-16-2048.jpg)

![Write Less Code: Compute an Average private IntWritable one = new IntWritable(1) private IntWritable output = new IntWritable() protected void map( LongWritable key, Text value, Context context) { String[] fields = value.toString().split("\t") output.set(Integer.parseInt(fields[1])) context.write(one, output) } IntWritable one = new IntWritable(1) DoubleWritable average = new DoubleWritable() protected void reduce( IntWritable key, Iterable<IntWritable> values, Context context) { int sum = 0 int count = 0 for(IntWritable value : values) { sum += value.get() count++ } average.set(sum / (double) count) context.write(key, average) } data = sc.textFile(...).map(lambda line: line.split("\t")) data.map(lambda x: (x[0], [int(x[1]), 1])) .reduceByKey(lambda x, y: [x[0] + y[0], x[1] + y[1]]) .map(lambda x: [x[0], x[1][0] / x[1][1]]) .collect()](https://image.slidesharecdn.com/sparksql-171008043944/75/Spark-Sql-and-DataFrame-17-2048.jpg)

![Write Less Code: Compute an Average Using RDDs data = sc.textFile(...).map(lambda line: line.split("\t")) data.map(lambda x: (x[0], [int(x[1]), 1])) .reduceByKey(lambda x, y: [x[0] + y[0], x[1] + y[1]]) .map(lambda x: [x[0], x[1][0] / x[1][1]]) .collect() Using DataFrames sqlCtx.table("people") .groupBy("name") .agg("name", avg("age")) .collect() Using SQL SELECT name, avg(age) FROM people GROUP BY name Using Pig P = load '/people' as (name, age); G = group P by name; R = foreach G generate … AVG(G.age);](https://image.slidesharecdn.com/sparksql-171008043944/75/Spark-Sql-and-DataFrame-18-2048.jpg)
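
To make the RDD version of the average concrete, here is a minimal sketch in plain Python that mirrors its three steps (pair each age with a count of 1, sum per key, divide) without requiring a Spark installation. The `reduce_by_key` helper and the sample lines are hypothetical stand-ins, not Spark APIs; in Spark the same role is played by `RDD.reduceByKey`.

```python
def reduce_by_key(pairs, combine):
    """Hypothetical local stand-in for RDD.reduceByKey: fold values that share a key."""
    acc = {}
    for key, value in pairs:
        acc[key] = combine(acc[key], value) if key in acc else value
    return acc

# Made-up tab-separated input: name <TAB> age
lines = ["alice\t30", "bob\t20", "alice\t40"]

# Step 1: split each line, then emit (name, (age, 1))
rows = [line.split("\t") for line in lines]
pairs = [(name, (int(age), 1)) for name, age in rows]

# Step 2: per key, add up the ages and the counts
totals = reduce_by_key(pairs, lambda x, y: (x[0] + y[0], x[1] + y[1]))

# Step 3: divide each sum by its count to get the average
averages = {name: total / count for name, (total, count) in totals.items()}
print(averages)
```

The DataFrame and SQL versions on the slide express the same result declaratively (`groupBy("name")` plus `avg("age")`), which leaves the choice of how to carry sums and counts to the engine rather than to user code.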

![Seamlessly Integrated: RDDs Internally, DataFrame execution is done with Spark RDDs, making interoperation with outside sources and custom algorithms easy. External Input def buildScan( requiredColumns: Array[String], filters: Array[Filter]): RDD[Row] Custom Processing queryResult.rdd.mapPartitions { iter => … Your code here … }](https://image.slidesharecdn.com/sparksql-171008043944/75/Spark-Sql-and-DataFrame-19-2048.jpg)
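
The custom-processing hook on the slide hands user code an iterator over each partition's rows. As a rough illustration of that contract only, the sketch below emulates it in plain Python; `map_partitions`, `partition_sum`, and the sample partitions are hypothetical and stand in for `queryResult.rdd.mapPartitions` running on a cluster.

```python
from typing import Callable, Iterable, Iterator, List

def map_partitions(
    partitions: List[List[int]],
    func: Callable[[Iterator[int]], Iterable[int]],
) -> List[List[int]]:
    """Hypothetical local stand-in: call func once per partition, passing an iterator."""
    return [list(func(iter(part))) for part in partitions]

def partition_sum(rows: Iterator[int]) -> Iterator[int]:
    """Consume one partition's rows and emit a single partial sum."""
    yield sum(rows)

# Made-up data already split into two partitions
partitions = [[1, 2, 3], [4, 5]]

# Each partition is processed independently, producing one value apiece
partial_sums = map_partitions(partitions, partition_sum)

# Combine the per-partition results into a final answer
total = sum(value for part in partial_sums for value in part)
print(partial_sums, total)
```

Because the function runs once per partition rather than once per row, any per-call setup cost is paid a handful of times instead of once for every record.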