Portal:Computer programming

Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

- Image 1

Node.js is a cross-platform, open-source JavaScript runtime environment that can run on Windows, Linux, Unix, macOS, and more. Node.js runs on the V8 JavaScript engine, and executes JavaScript code outside a web browser. According to the Stack Overflow Developer Survey, Node.js is one of the most commonly used web technologies.

Node.js lets developers use JavaScript to write command line tools and server-side scripting. The ability to run JavaScript code on the server is often used to generate dynamic web page content before the page is sent to the user's web browser. Consequently, Node.js represents a "JavaScript everywhere" paradigm, unifying web-application development around a single programming language, as opposed to using different languages for the server- versus client-side programming.

Node.js has an event-driven architecture capable of asynchronous I/O. These design choices aim to optimize throughput and scalability in web applications with many input/output operations, as well as for real-time Web applications (e.g., real-time communication programs and browser games). (Full article...) - Image 2

Stephen Gary Wozniak (/ˈwɒzniæk/; born August 11, 1950), also known by his nickname Woz, is an American technology entrepreneur, electrical engineer, computer programmer, and inventor. In 1976, he co-founded Apple Computer with his early business partner Steve Jobs. Through his work at Apple in the 1970s and 1980s, he is widely recognized as one of the most prominent pioneers of the personal computer revolution.

In 1975, Wozniak started developing the Apple I into the computer that launched Apple when he and Jobs first began marketing it the following year. He was the primary designer of the Apple II, introduced in 1977, known as one of the first highly successful mass-produced microcomputers, while Jobs oversaw the development of its foam-molded plastic case and early Apple employee Rod Holt developed its switching power supply.

With human–computer interface expert Jef Raskin, Wozniak had a major influence over the initial development of the original Macintosh concepts from 1979 to 1981, when Jobs took over the project following Wozniak's brief departure from the company due to a traumatic airplane accident. After permanently leaving Apple in 1985, Wozniak founded CL 9 and created the first programmable universal remote, released in 1987. He then pursued several other business and philanthropic ventures throughout his career, focusing largely on technology in K–12 schools, which involved a 1990 initiative to place computers in schools in the former Soviet Union. (Full article...) - Image 3

Yoshua Bengio OC OQ FRS FRSC (born March 5, 1964) is a Canadian computer scientist, and a pioneer of artificial neural networks and deep learning. He is a professor at the Université de Montréal and co-president and scientific director of the nonprofit LawZero. He founded Mila, the Quebec Artificial Intelligence (AI) Institute, and was its scientific director until 2025.

Bengio received the 2018 ACM A.M. Turing Award, often referred to as the "Nobel Prize of Computing", together with Geoffrey Hinton and Yann LeCun, for their foundational work on deep learning. Bengio, Hinton, and LeCun are sometimes referred to as the "Godfathers of AI". Bengio is the most-cited computer scientist globally (by both total citations and by h-index), and the most-cited living scientist across all fields (by total citations). In 2024, TIME Magazine included Bengio in its yearly list of the world's 100 most influential people. (Full article...) - Image 4The Antikythera mechanism (/ˌæntɪkɪˈθɪərə/ AN-tik-ih-THEER-ə, US also /ˌæntaɪkɪˈ-/ AN-ty-kih-) is an ancient Greek hand-powered orrery (model of the Solar System). It is the oldest known example of an analogue computer. It could be used to predict astronomical positions and eclipses decades in advance. It could also be used to track the four-year cycle of athletic games similar to an olympiad, the cycle of the ancient Olympic Games.

The artefact was among wreckage retrieved from a shipwreck off the coast of the Greek island Antikythera in 1901. In 1902, during a visit to the National Archaeological Museum in Athens, it was noticed by Greek politician Spyridon Stais as containing a gear, prompting the first study of the fragment by his cousin, Valerios Stais, the museum director. The device, housed in the remains of a wooden-framed case of (uncertain) overall size 34 cm × 18 cm × 9 cm (13.4 in × 7.1 in × 3.5 in), was found as one lump, later separated into three main fragments which are now divided into 82 separate fragments after conservation efforts. Four of these fragments contain gears, while inscriptions are found on many others. The largest gear is about 13 cm (5 in) in diameter and originally had 223 teeth. All these fragments of the mechanism are kept at the National Archaeological Museum, along with reconstructions and replicas, to demonstrate how it may have looked and worked.

In 2005, a team from Cardiff University led by Mike Edmunds used computer X-ray tomography and high resolution scanning to image inside fragments of the crust-encased mechanism and read the faintest inscriptions that once covered the outer casing. These scans suggest that the mechanism had 37 meshing bronze gears enabling it to follow the movements of the Moon and the Sun through the zodiac, to predict eclipses and to model the irregular orbit of the Moon, where the Moon's velocity is higher in its perigee than in its apogee. This motion was studied in the 2nd century BC by astronomer Hipparchus of Rhodes, and he may have been consulted in the machine's construction. There is speculation that a portion of the mechanism is missing and it calculated the positions of the five classical planets. The inscriptions were further deciphered in 2016, revealing numbers connected with the synodic cycles of Venus and Saturn. (Full article...) - Image 5

Eiffel is an object-oriented programming language designed by Bertrand Meyer (an object-orientation proponent and author of Object-Oriented Software Construction) and Eiffel Software. Meyer conceived the language in 1985 with the goal of increasing the reliability of commercial software development. The first version was released in 1986. In 2005, the International Organization for Standardization (ISO) released a technical standard for Eiffel.

The design of the language is closely connected with the Eiffel programming method. Both are based on a set of principles, including design by contract, command–query separation, the uniform-access principle, the single-choice principle, the open–closed principle, and option–operand separation.

Many concepts initially introduced by Eiffel were later added into Java, C#, and other languages. New language design ideas, particularly through the Ecma/ISO standardization process, continue to be incorporated into the Eiffel language. (Full article...) - Image 6Programming languages are used for controlling the behavior of a machine (often a computer). Like natural languages, programming languages follow rules for syntax and semantics.

There are thousands of programming languages and new ones are created every year. Few languages ever become sufficiently popular that they are used by more than a few people, but professional programmers may use dozens of languages in a career.

Most programming languages are not standardized by an international (or national) standard, even widely used ones, such as Perl or Standard ML (despite the name). Notable standardized programming languages include ALGOL, C, C++, JavaScript (under the name ECMAScript), Smalltalk, Prolog, Common Lisp, Scheme (IEEE standard), ISLISP, Ada, Fortran, COBOL, SQL, and XQuery. (Full article...) - Image 7Logotype used on the cover of the first edition of The C Programming Language

C is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains widely used and influential. By design, C gives the programmer relatively direct access to the features of the typical CPU architecture, customized for the target instruction set. It has been and continues to be used to implement operating systems (especially kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is used on computers that range from the largest supercomputers to the smallest microcontrollers and embedded systems.

A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers available for practically all modern computer architectures and operating systems. The book The C Programming Language, co-authored by the original language designer, served for many years as the de facto standard for the language. C has been standardized since 1989 by the American National Standards Institute (ANSI) and, subsequently, jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

C is an imperative procedural language, supporting structured programming, lexical variable scope, and recursion, with a static type system. It was designed to be compiled to provide low-level access to memory and language constructs that map efficiently to machine instructions, all with minimal runtime support. Despite its low-level capabilities, the language was designed to encourage cross-platform programming. A standards-compliant C program written with portability in mind can be compiled for a wide variety of computer platforms and operating systems with few changes to its source code. (Full article...) - Image 8

.jpg/330px-Used_Punchcard_(5151286161).jpg)

A 12-row/80-column IBM punched card from the mid-twentieth century

A punched card (also punch card) is a stiff paper-based medium used to store digital information via the presence or absence of holes in predefined positions. Developed over the 18th to 20th centuries, punched cards were widely used for data processing, the control of automated machines, and computing. Early applications included controlling weaving looms and recording census data.

Punched cards were widely used in the 20th century, where unit record machines, organized into data processing systems, used punched cards for data input, data output, and data storage. The IBM 12-row/80-column punched card format came to dominate the industry. Many early digital computers used punched cards as the primary medium for input of both computer programs and data. Punched cards were used for decades before being replaced by magnetic tape data storage. While punched cards are now obsolete as a storage medium, as of 2012, some voting machines still used punched cards to record votes.

Punched cards had a significant cultural impact in the 20th century. Their legacy persists in modern computing, influencing the 80-character line standard still present in some command-line interfaces and programming environments. (Full article...) - Image 9The history of artificial intelligence (AI) began in antiquity, with myths, stories, and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen. The study of logic and formal reasoning from antiquity to the present led directly to the invention of the programmable digital computer in the 1940s, a machine based on abstract mathematical reasoning. This device and the ideas behind it inspired scientists to begin discussing the possibility of building an electronic brain.

The field of AI research was founded at a workshop held on the campus of Dartmouth College in 1956. Attendees of the workshop became the leaders of AI research for decades. Many of them predicted that machines as intelligent as humans would exist within a generation. The U.S. government provided millions of dollars with the hope of making this vision come true.

Eventually, it became obvious that researchers had grossly underestimated the difficulty of this feat. In 1974, criticism from James Lighthill and pressure from the U.S. Congress led the U.S. and British Governments to stop funding undirected research into artificial intelligence. Seven years later, a visionary initiative by the Japanese Government and the success of expert systems reinvigorated investment in AI, and by the late 1980s, the industry had grown into a billion-dollar enterprise. However, investors' enthusiasm waned in the 1990s, and the field was criticized in the press and avoided by industry (a period known as an "AI winter"). Nevertheless, research and funding continued to grow under other names. (Full article...) - Image 10In computer systems a loader is the part of an operating system that is responsible for loading programs and libraries. It is one of the essential stages in the process of starting a program, as it places programs into memory and prepares them for execution. Loading a program involves either memory-mapping or copying the contents of the executable file containing the program instructions into memory, and then carrying out other required preparatory tasks to prepare the executable for running. Once loading is complete, the operating system starts the program by passing control to the loaded program code.

All operating systems that support program loading have loaders, apart from highly specialized computer systems that only have a fixed set of specialized programs. Embedded systems typically do not have loaders, and instead, the code executes directly from ROM or similar. In order to load the operating system itself, as part of booting, a specialized boot loader is used. In many operating systems, the loader resides permanently in memory, though some operating systems that support virtual memory may allow the loader to be located in a region of memory that is pageable.

In the case of operating systems that support virtual memory, the loader may not actually copy the contents of executable files into memory, but rather may simply declare to the virtual memory subsystem that there is a mapping between a region of memory allocated to contain the running program's code and the contents of the associated executable file. (See memory-mapped file.) The virtual memory subsystem is then made aware that pages with that region of memory need to be filled on demand if and when program execution actually hits those areas of unfilled memory. This may mean parts of a program's code are not actually copied into memory until they are actually used, and unused code may never be loaded into memory at all. (Full article...) - Image 11

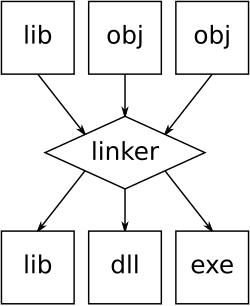

An illustration of the linking process. Object files and static libraries are assembled into a new library or executable

A linker or link editor is a computer program that combines intermediate software build files such as object and library files into a single executable file such as a program or library. A linker is often part of a toolchain that includes a compiler and/or assembler that generates intermediate files that the linker processes. The linker may be integrated with other toolchain tools such that the user does not interact with the linker directly.

A simpler version that writes its output directly to memory is called the loader, though loading is typically considered a separate process. (Full article...) - Image 12Lisp (historically LISP, an abbreviation of "list processing") is a family of programming languages with a long history and a distinctive, fully parenthesized prefix notation.

Originally specified in the late 1950s, it is the second-oldest high-level programming language still in common use, after Fortran. Lisp has changed since its early days, and many dialects have existed over its history. Today, the best-known general-purpose Lisp dialects are Common Lisp, Scheme, Racket, and Clojure.

Lisp was originally created as a practical mathematical notation for computer programs, influenced by (though not originally derived from) the notation of Alonzo Church's lambda calculus. It quickly became a favored programming language for artificial intelligence (AI) research. As one of the earliest programming languages, Lisp pioneered many ideas in computer science, including tree data structures, automatic storage management, dynamic typing, conditionals, higher-order functions, recursion, the self-hosting compiler, and the read–eval–print loop.

The name LISP derives from "List Processor". Linked lists are one of Lisp's major data structures, and Lisp source code is made of lists. Thus, Lisp programs can manipulate source code as a data structure, giving rise to the macro systems that allow programmers to create new syntax or new domain-specific languages embedded in Lisp. (Full article...) - Image 13

PDP-11 CPU board

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random-access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

By contrast, software is a set of written instructions that can be stored and run by hardware. Hardware derived its name from the fact it is hard or rigid with respect to changes, whereas software is soft because it is easy to change.

Hardware is typically directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system, although other systems exist with only hardware. (Full article...) - Image 14In computing, a compiler is software that translates computer code written in one programming language (the source language) into another language (the target language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program.

There are many different types of compilers which produce output in different useful forms. A cross-compiler produces code for a different CPU or operating system than the one on which the cross-compiler itself runs. A bootstrap compiler is often a temporary compiler, used for compiling a more permanent or better optimized compiler for a language.

Related software include decompilers, programs that translate from low-level languages to higher level ones; programs that translate between high-level languages, usually called source-to-source compilers or transpilers; language rewriters, usually programs that translate the form of expressions without a change of language; and compiler-compilers, compilers that produce compilers (or parts of them), often in a generic and reusable way so as to be able to produce many differing compilers. (Full article...) - Image 15

Scala (/ˈskɑːlɑː/ SKAH-lah) is a strongly statically typed high-level general-purpose programming language that supports both object-oriented programming and functional programming. Designed to be concise, many of Scala's design decisions are intended to address criticisms of Java.

Scala source code can be compiled to Java bytecode and run on a Java virtual machine (JVM). Scala can also be transpiled to JavaScript to run in a browser, or compiled directly to a native executable. When running on the JVM, Scala provides language interoperability with Java so that libraries written in either language may be referenced directly in Scala or Java code. Like Java, Scala is object-oriented, and uses a syntax termed curly-brace which is similar to the language C. Since Scala 3, there is also an option to use the off-side rule (indenting) to structure blocks, and its use is advised. Martin Odersky has said that this turned out to be the most productive change introduced in Scala 3.

Unlike Java, Scala has many features of functional programming languages (like Scheme, Standard ML, and Haskell), including currying, immutability, lazy evaluation, and pattern matching. It also has an advanced type system supporting algebraic data types, covariance and contravariance, higher-order types (but not higher-rank types), anonymous types, operator overloading, optional parameters, named parameters, raw strings, and an experimental exception-only version of algebraic effects that can be seen as a more powerful version of Java's checked exceptions. (Full article...)

Selected images

- Image 1GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

- Image 2Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

- Image 4An IBM Port-A-Punch punched card

- Image 5Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

- Image 7A lone house. An image made using Blender 3D.

- Image 9Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

- Image 10Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

- Image 11Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

- Image 12A view of the GNU nano Text editor version 6.0

- Image 13A head crash on a modern hard disk drive

- Image 15Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

- Image 16Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

- Image 17This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

- Image 18Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

Did you know? - load more entries

- ... that NATO was once targeted by a group of "gay furry hackers"?

- ... that a "hacker" with blog posts written by ChatGPT was at the center of an online scavenger hunt promoting Avenged Sevenfold's album Life Is but a Dream...?

- ... that the study of selection algorithms has been traced to an 1883 work of Lewis Carroll on how to award second place in single-elimination tournaments?

- ... that Phil Fletcher as Hacker T. Dog caused Lauren Layfield to make the "most famous snort" in the United Kingdom in 2016?

- ... that it took a particle accelerator and machine-learning algorithms to extract the charred text of PHerc. Paris. 4 without unrolling it?

- ... that both Thackeray and Longfellow bought paintings by Fanny Steers?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

- 28 October 2025 – AI boom, Workplace impact of artificial intelligence

- Multinational technology and e-commerce company Amazon announces it will lay off 14,000 corporate positions as it invests more in building AI and cloud computing infrastructure. (DW) (CNBC)

- 5 September 2025 – AI boom

- European High-Performance Computing Joint Undertaking

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

- Commons

Free media repository - Wikibooks

Free textbooks and manuals - Wikidata

Free knowledge base - Wikinews

Free-content news - Wikiquote

Collection of quotations - Wikisource

Free-content library - Wikiversity

Free learning tools - Wiktionary

Dictionary and thesaurus

.jpg/250px-Steve_Wozniak_by_Gage_Skidmore_3_(cropped).jpg)

_in_Dark_theme.png/120px-GNOME_Shell,_GNOME_Clocks,_Evince,_gThumb,_GNOME_Files_at_version_3.30_(2018-09)_in_Dark_theme.png)