Does anyone know a free method for importing large datasets (multiple GB) into Google Colab? GitHub is severely limited for files of this size, and uploading a folder to Google Drive takes a long time.

1 Answer


One option is to download the dataset to your own machine and save it in an easily accessible directory. Then run the following code:

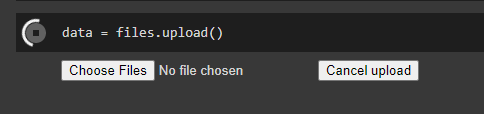

```python
from google.colab import files

data = files.upload()
```

After running the above lines, you will get a Choose File button where you can browse your system directly and choose your file.
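As a rough illustration of what to do with the result: `files.upload()` returns a dictionary that maps each uploaded file name to its contents as bytes. The sketch below assumes the upload was a UTF-8 CSV file; the helper names `summarize_uploads`, `read_csv_rows` and `upload_and_summarize` are hypothetical and not part of the Colab API.

```python
import csv
import io


def summarize_uploads(uploaded):
    """Return {file name: size in bytes} for the dict returned by files.upload()."""
    return {name: len(content) for name, content in uploaded.items()}


def read_csv_rows(content):
    """Parse uploaded CSV bytes into a list of rows (assumes UTF-8 text)."""
    return list(csv.reader(io.StringIO(content.decode("utf-8"))))


def upload_and_summarize():
    """Open the upload widget and report file sizes (only works inside Colab)."""
    from google.colab import files  # available only in a Colab runtime

    uploaded = files.upload()  # blocks until the user has picked the files
    return summarize_uploads(uploaded)


# In a Colab cell you would call:
# print(upload_and_summarize())
```

Because the whole file travels through the browser and ends up in memory as bytes, this route is only practical for small files.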

I have added a screenshot above for reference.

- Unfortunately this method also takes a lot of time to upload. I have a 25 MB file that takes about 10 minutes to upload, never mind anything in the GB range. – spectre, Dec 12, 2021 at 15:03

wget command. I have discussed various approaches here.
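If the dataset is hosted at a direct download link, fetching it from inside the Colab runtime avoids uploading from your own machine at all, which is what the wget suggestion amounts to. The following is a minimal Python sketch of the same idea using only the standard library; the function name `download_file` and the example URL are hypothetical, and it assumes the link needs no login or cookies.

```python
import shutil
import urllib.request
from pathlib import Path


def download_file(url, dest, chunk_size=1024 * 1024):
    """Stream url to dest in chunks so the whole file is never held in memory.

    Returns the number of bytes written to disk.
    """
    dest = Path(dest)
    dest.parent.mkdir(parents=True, exist_ok=True)  # create target folder if needed
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out, chunk_size)
    return dest.stat().st_size


# Hypothetical usage in a Colab cell (/content is the runtime's working directory):
# download_file("https://example.com/dataset.zip", "/content/data/dataset.zip")
```

The transfer then runs between the hosting server and the Colab machine, so your home upload speed no longer matters.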