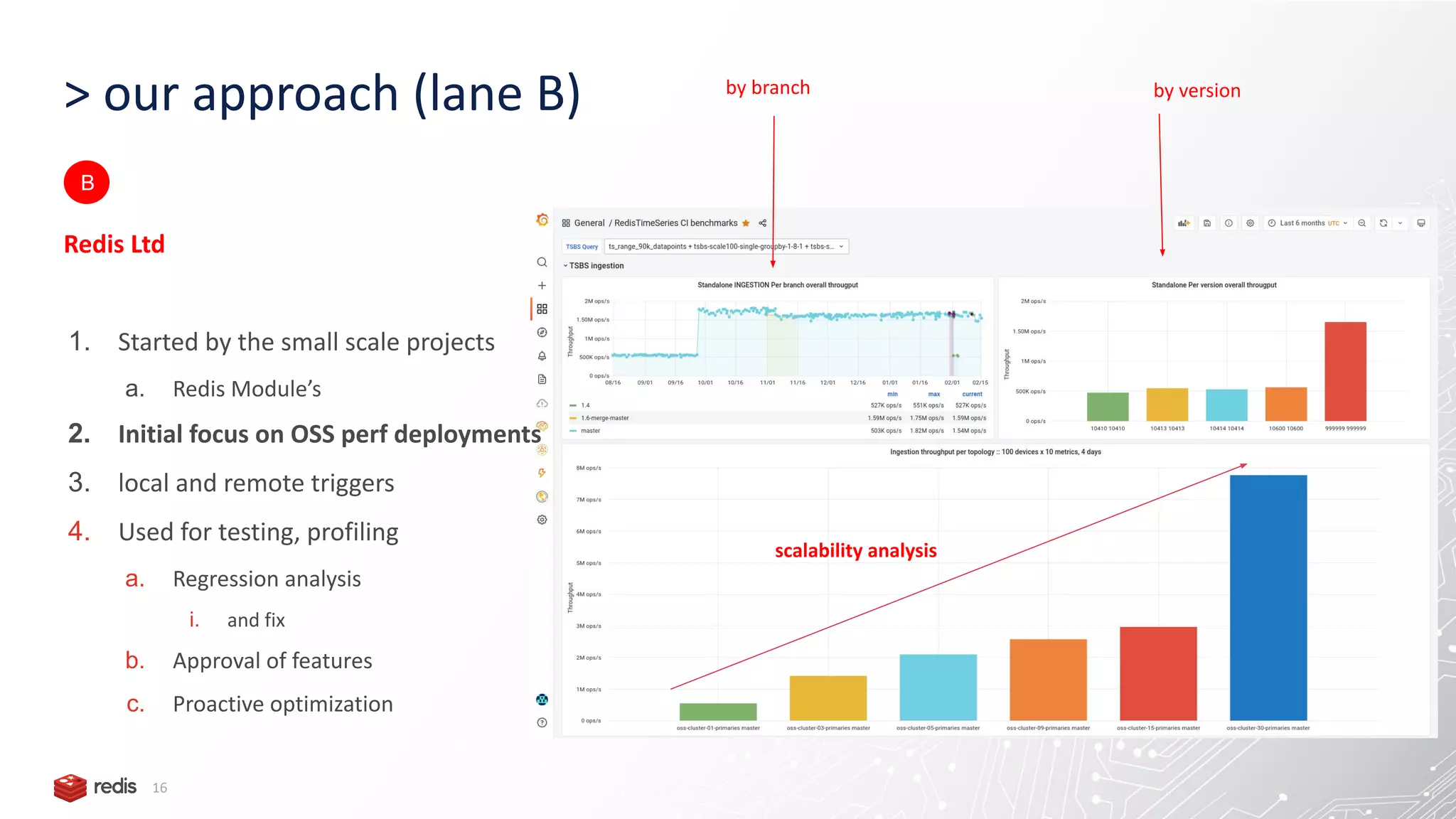

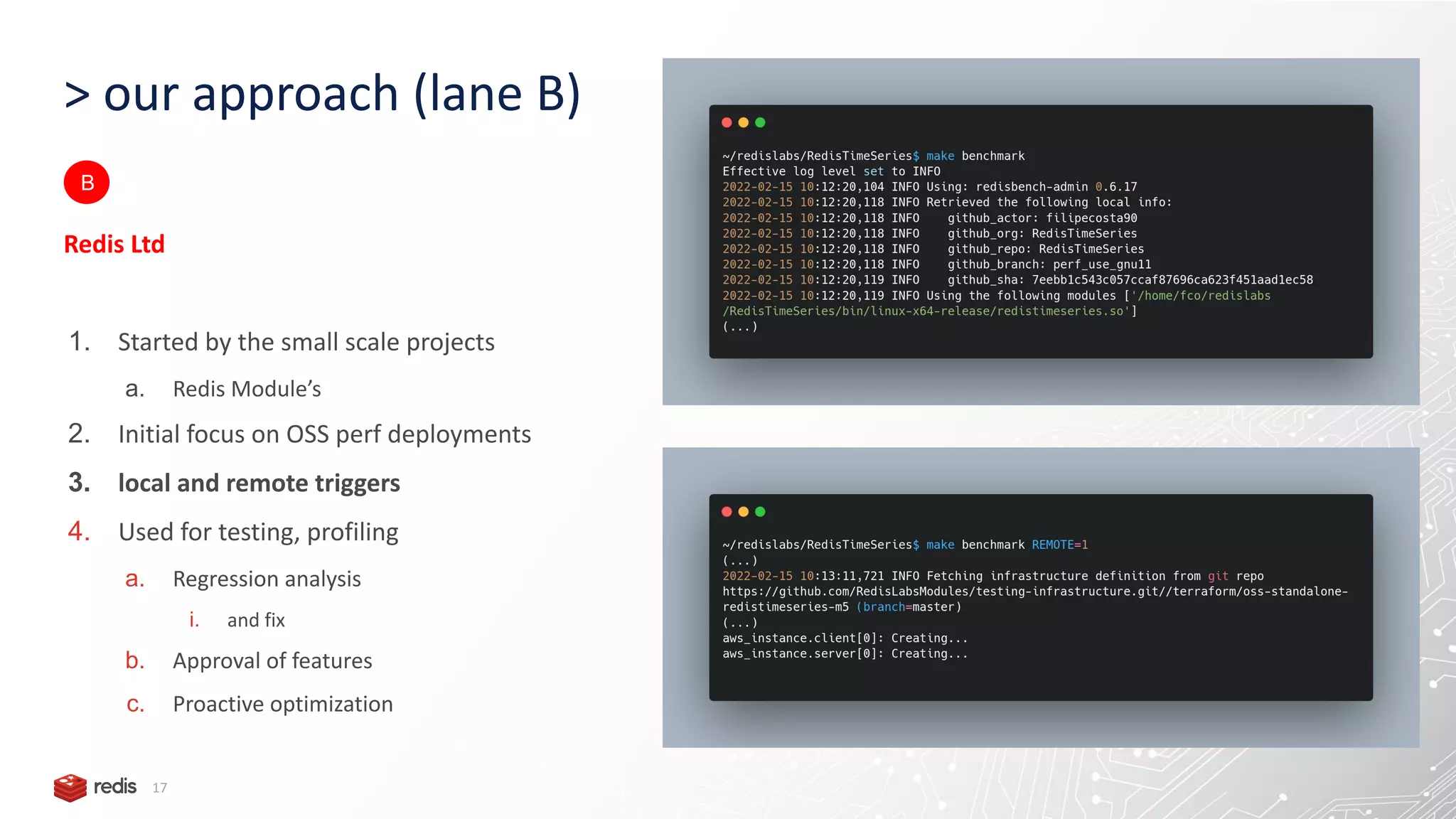

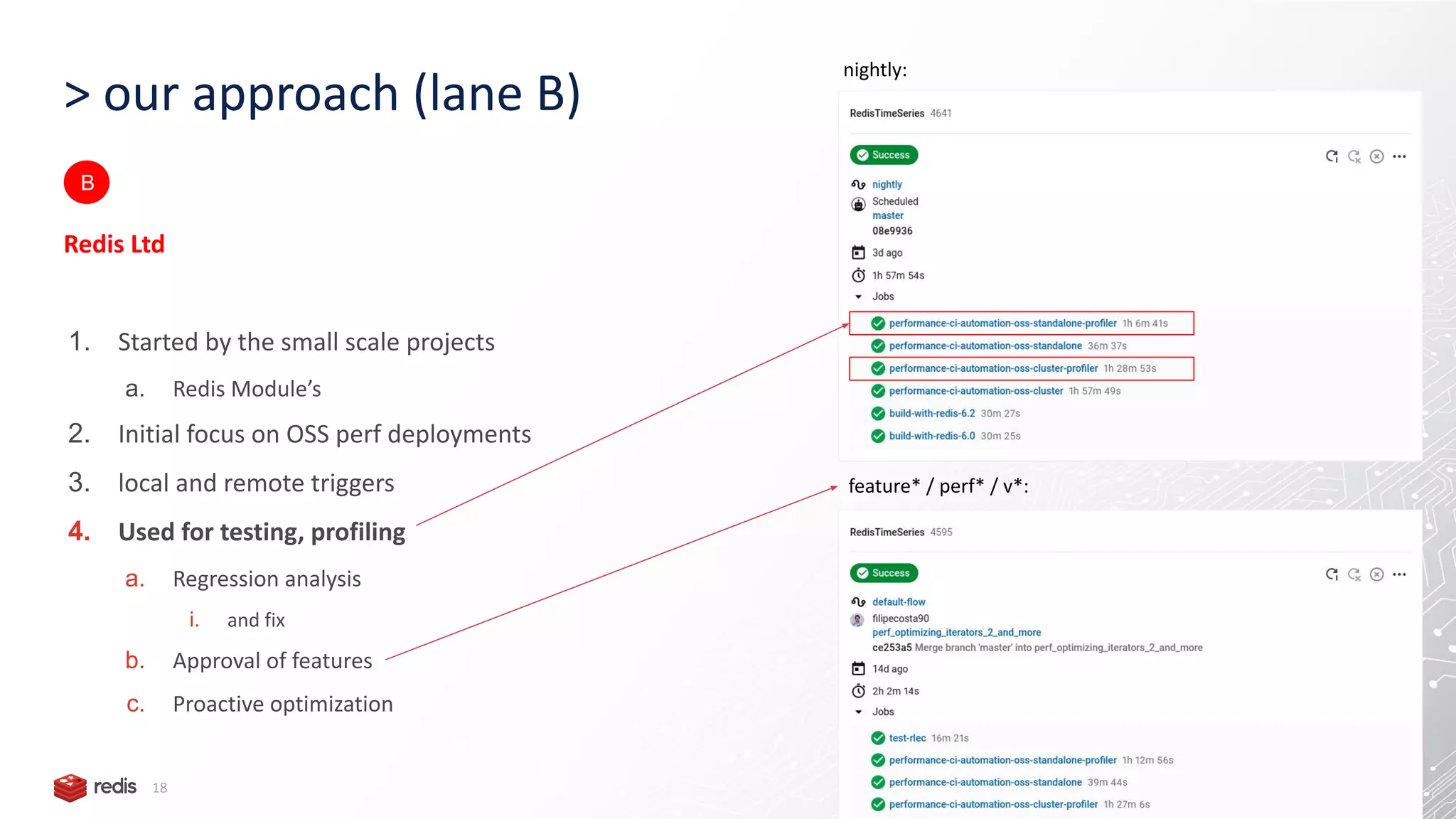

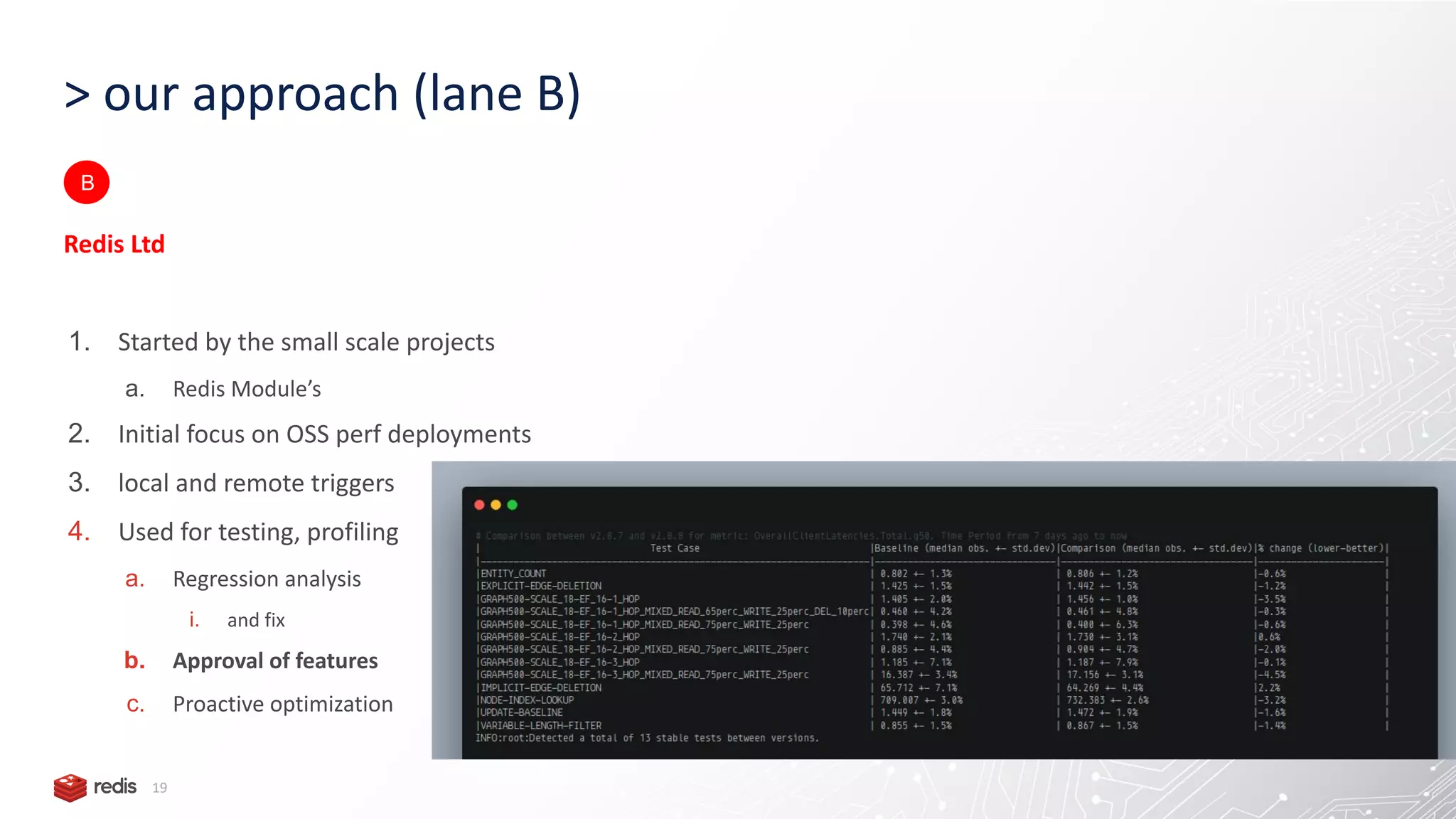

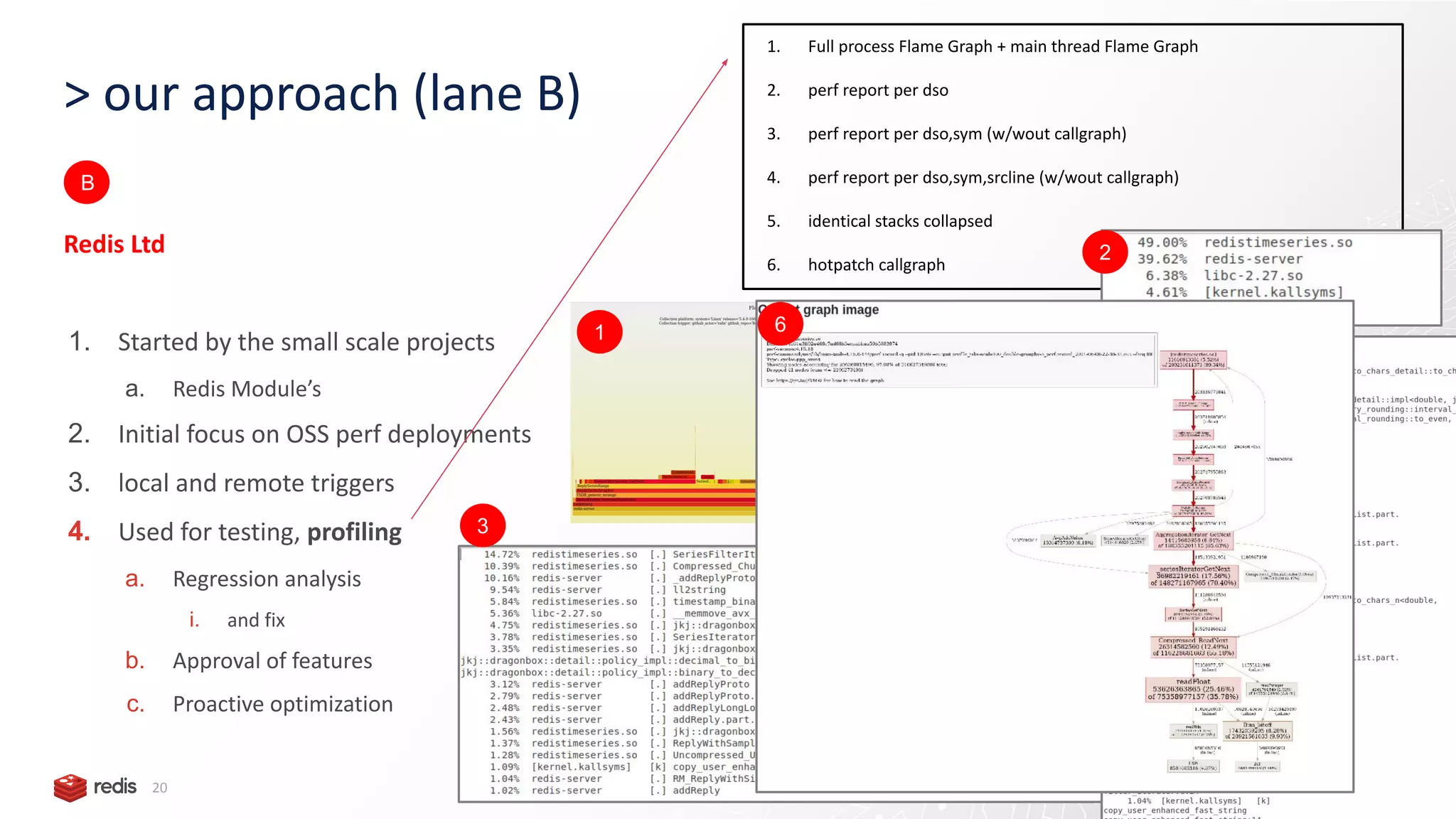

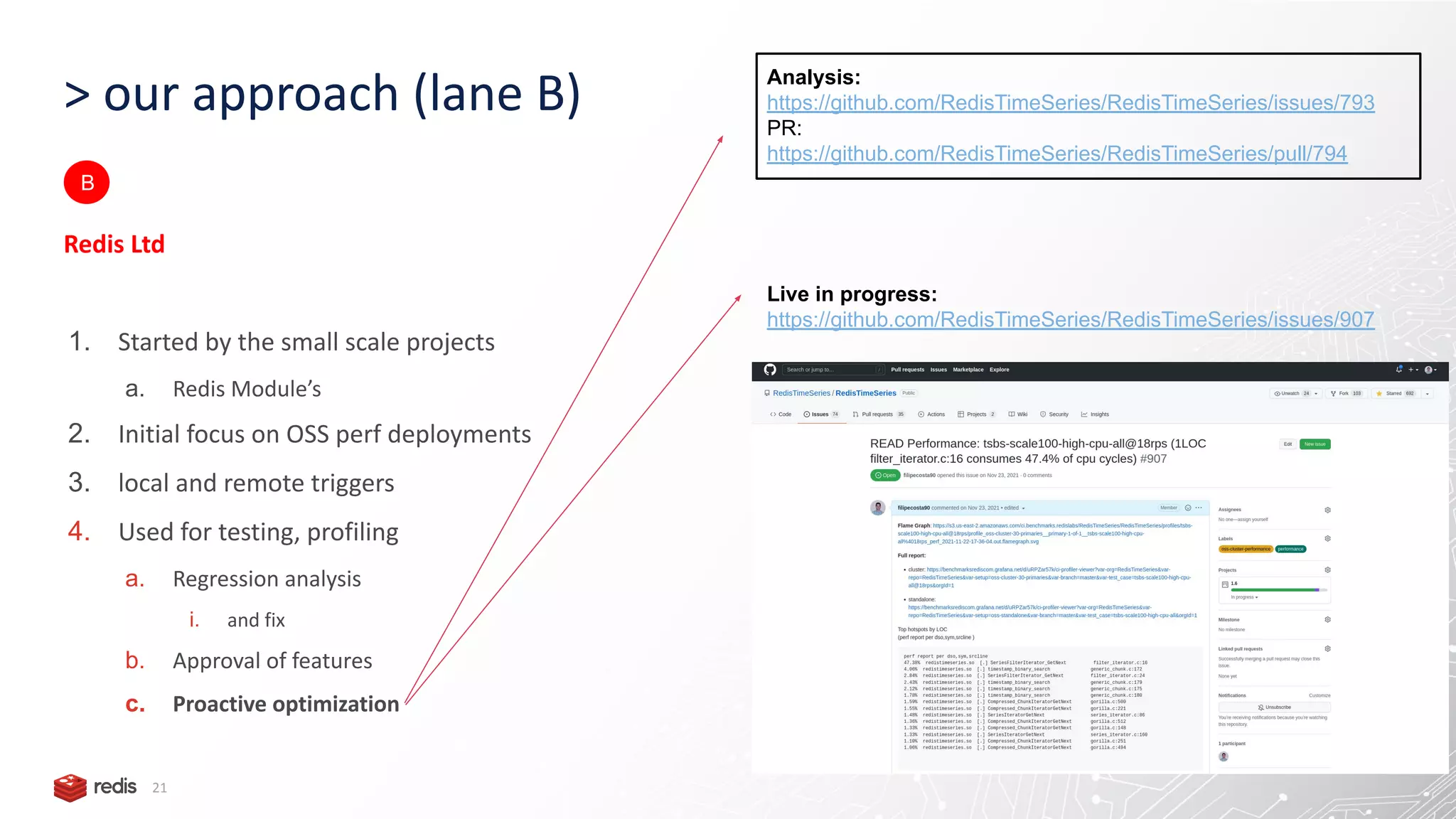

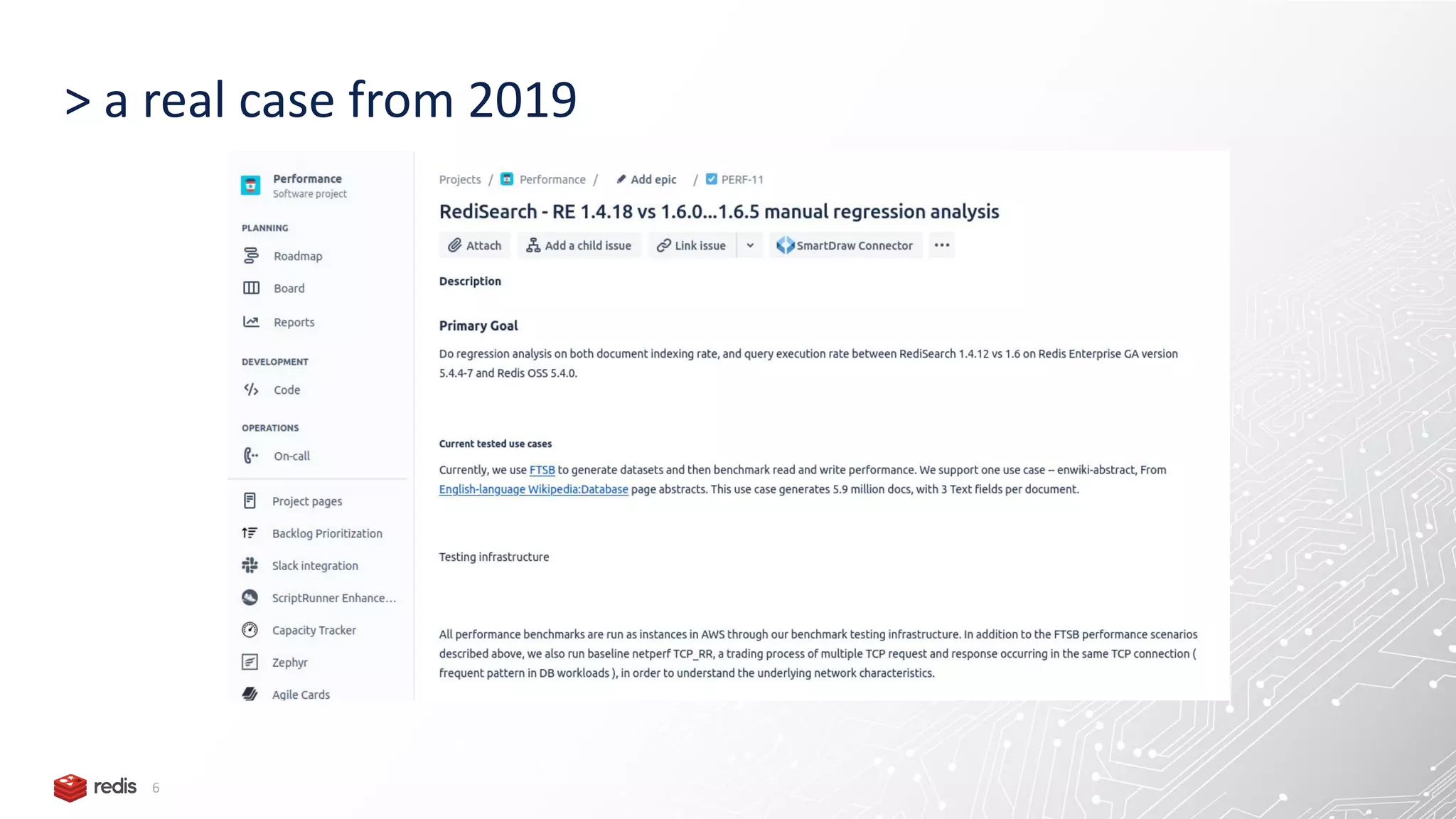

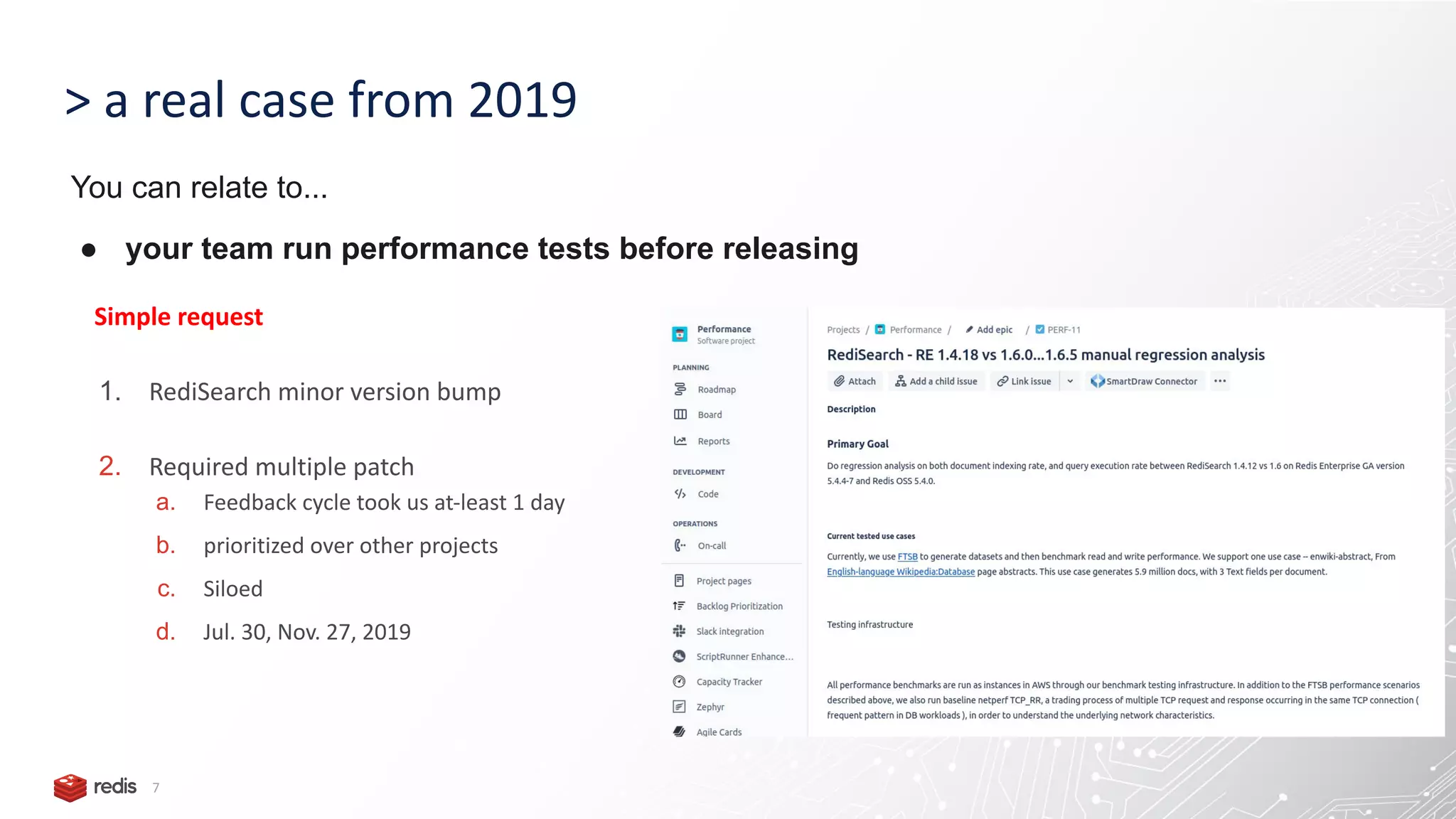

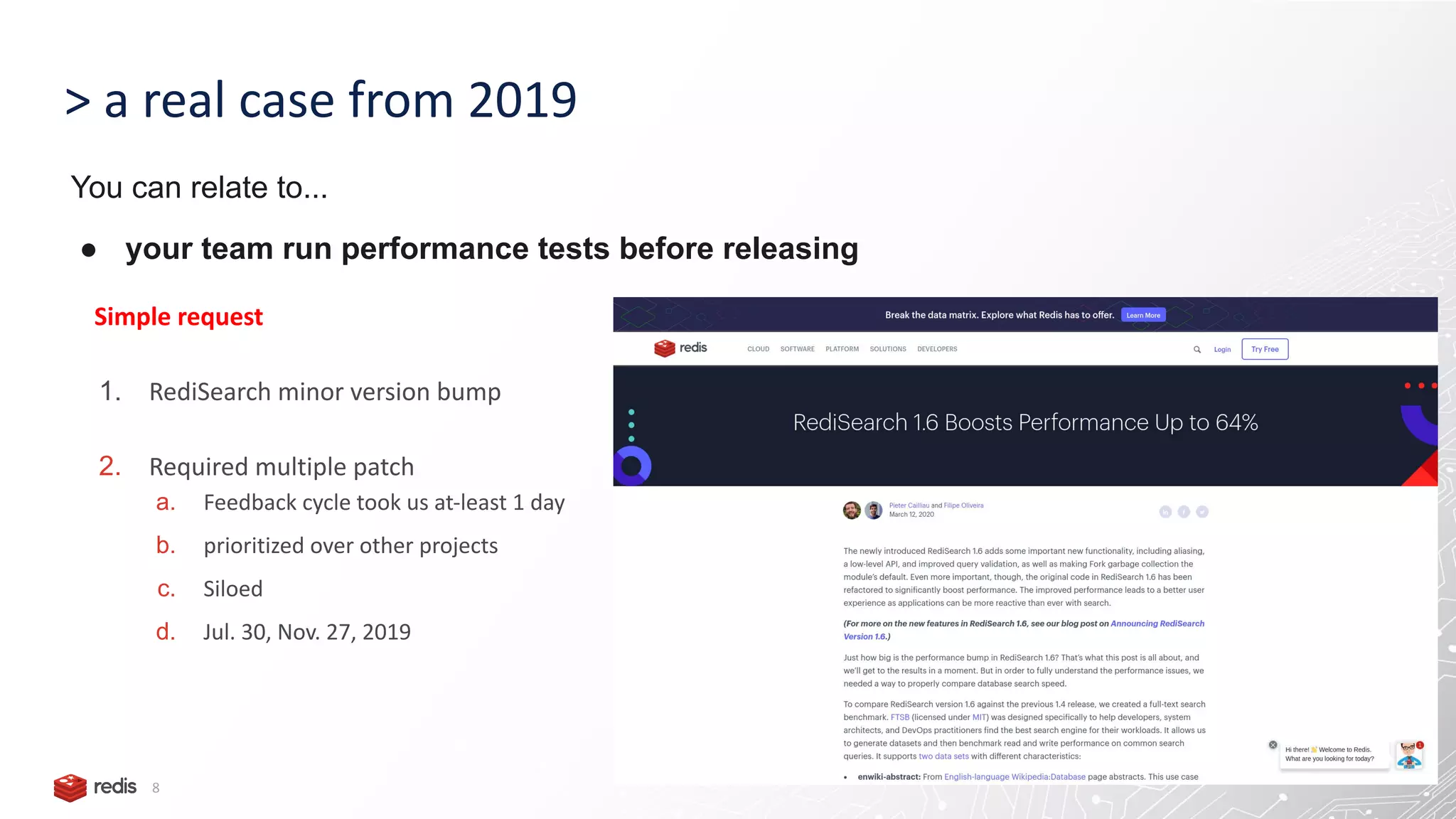

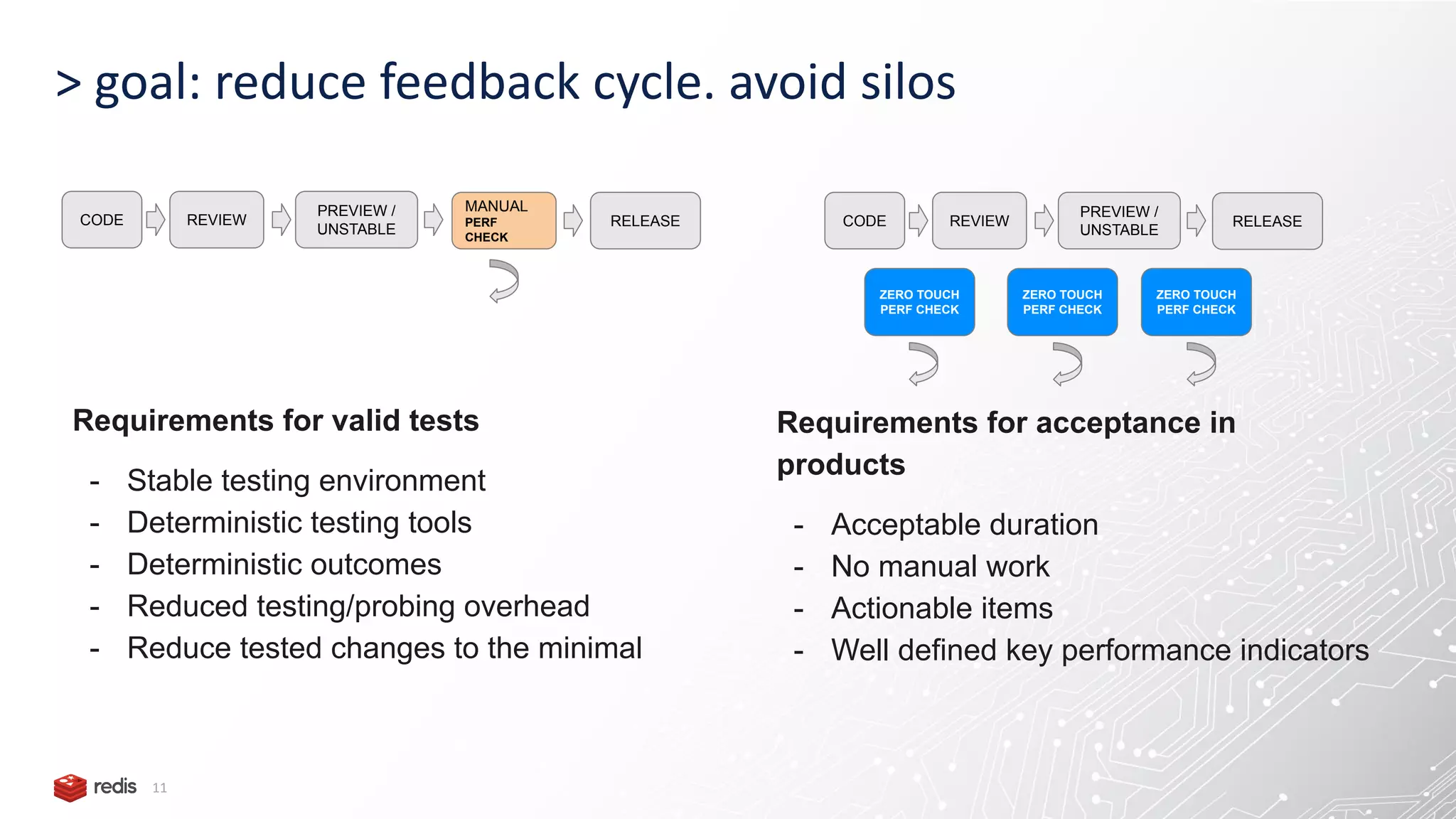

The document outlines the performance testing and analysis initiatives at Redis Ltd, managed by performance engineer Filipe Oliveira. It discusses the importance of fostering a proactive performance culture, automating extensive tests, and reducing feedback cycles to catch performance regressions early. Upcoming goals include extending profiling tools and improving anomaly detection to enhance both open-source and company platforms.

![Our approach (lane A): vanilla Redis (purely OSS project). 1. Created an OSS spec: https://github.com/redis/redis-benchmarks-specification/ 2. Extend the spec and use it for (a) historical data, (b) regression analysis, (c) docs. [1] Join: https://github.com/redis/redis-benchmarks-specification/#joining-the-performance-initiative Redis Developers Group + (Redis Ltd, AWS, Ericsson, Alibaba, …)](https://image.slidesharecdn.com/e2eperformancetestingatredisltd1-220221113646/75/End-to-end-performance-testing-profiling-and-analysis-at-Redis-14-2048.jpg)

![Our approach (lane B): Redis Ltd. 1. Started with small-scale projects (Redis modules). 2. Initial focus on OSS perf deployments. 3. Local and remote triggers. 4. Used for testing and profiling: (a) regression analysis and fixes, (b) approval of features, (c) proactive optimization. [1] https://github.com/RedisTimeSeries/RedisTimeSeries/tree/master/tests/benchmarks [2] https://github.com/RedisLabsModules/redisbench-admin](https://image.slidesharecdn.com/e2eperformancetestingatredisltd1-220221113646/75/End-to-end-performance-testing-profiling-and-analysis-at-Redis-15-2048.jpg)
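
Both lanes list regression analysis as a use of the benchmark data. As a rough illustration of the idea only, the sketch below compares the median throughput of a candidate build against a baseline and flags a drop beyond a tolerance. It is not the logic used by redis-benchmarks-specification or redisbench-admin: the function name `detect_regression`, the 5% threshold, and the sample numbers are all assumptions made up for this example.

```python
from statistics import median


def detect_regression(baseline, candidate, threshold_pct=5.0):
    """Compare two sets of throughput samples (ops/sec, higher is better).

    Returns (change_pct, regressed), where change_pct is the relative change
    of the candidate median versus the baseline median, and regressed is True
    when throughput dropped by more than threshold_pct.
    Hypothetical helper; the threshold is an arbitrary illustrative choice.
    """
    base = median(baseline)
    cand = median(candidate)
    change_pct = (cand - base) / base * 100.0
    return change_pct, change_pct < -threshold_pct


# Made-up sample data: three benchmark runs per build.
baseline_ops = [101_000, 99_500, 100_200]
candidate_ops = [92_000, 93_100, 91_800]

change, regressed = detect_regression(baseline_ops, candidate_ops)
print(f"{change:+.1f}% {'REGRESSION' if regressed else 'ok'}")
```

Using the median rather than the mean keeps a single noisy run from dominating the comparison; a real pipeline would likely also account for run-to-run variance before flagging a change.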