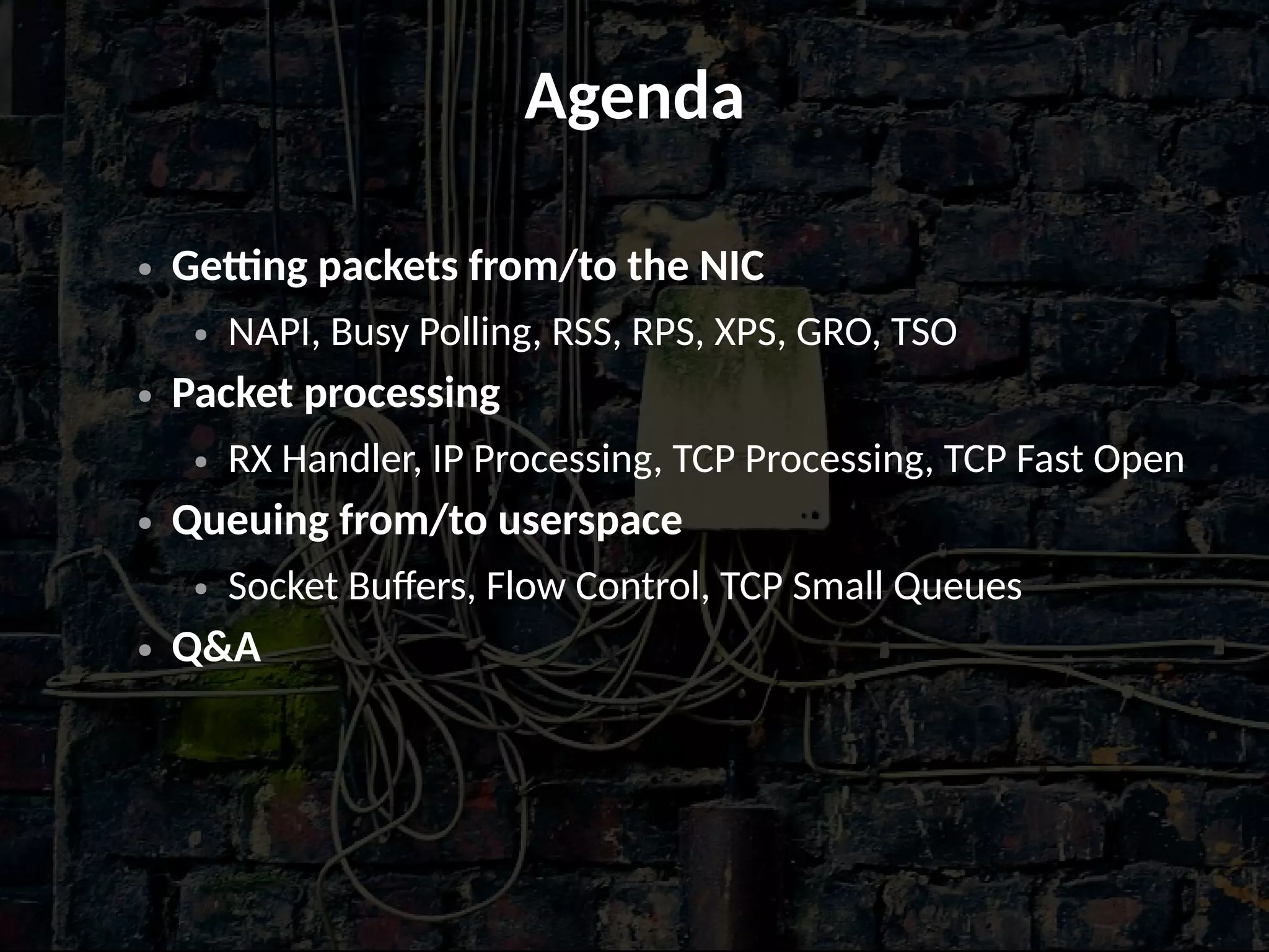

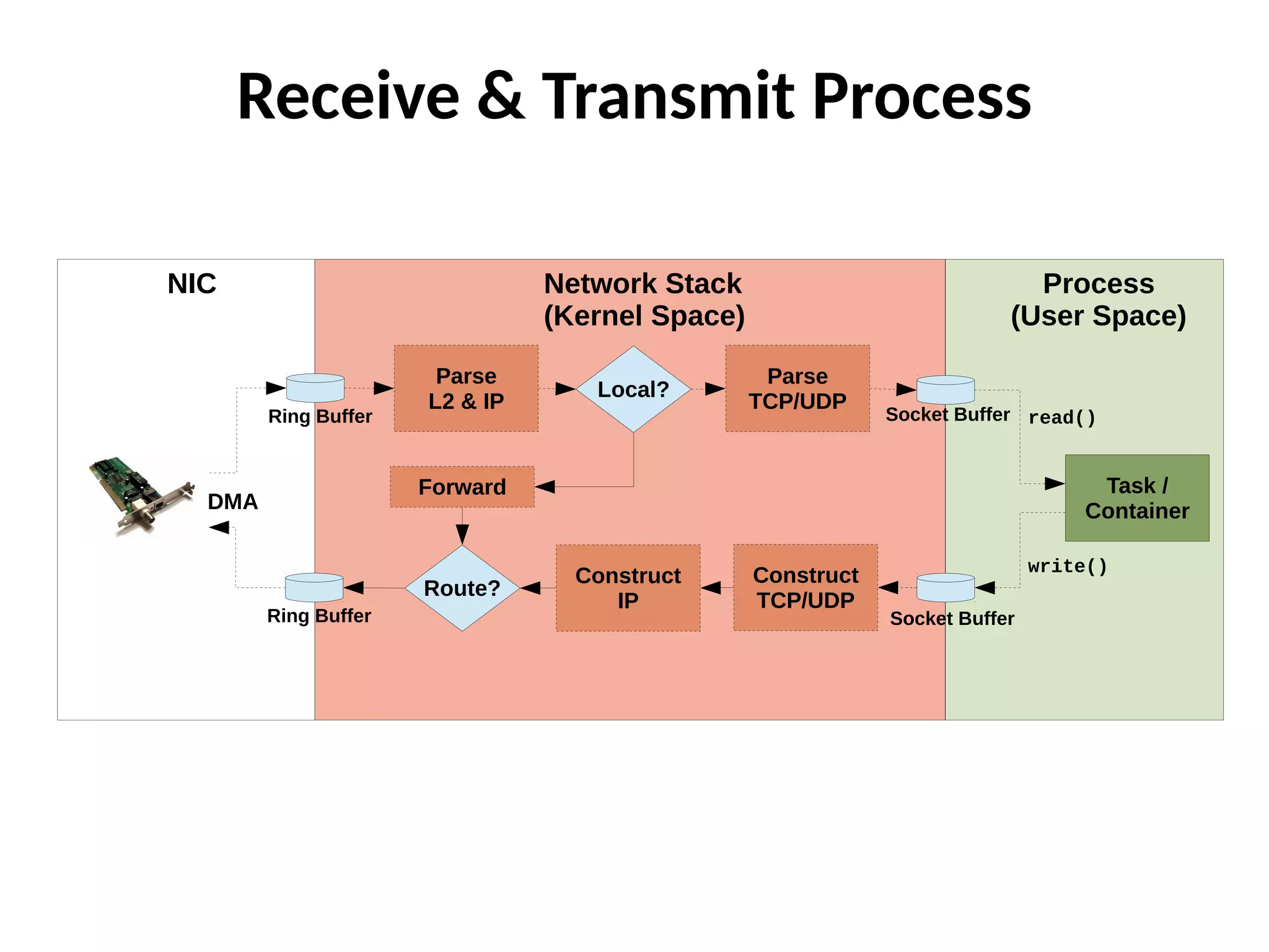

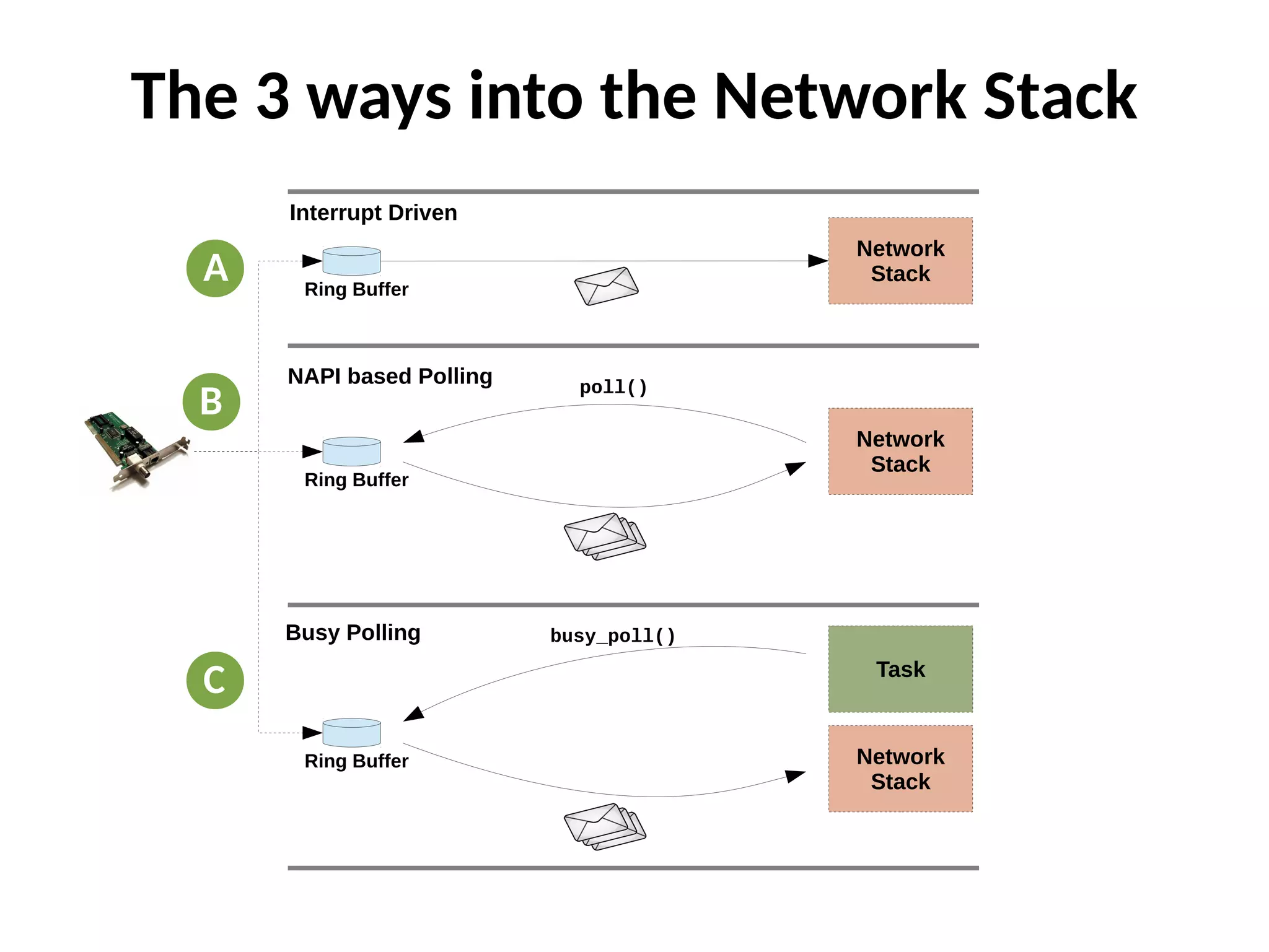

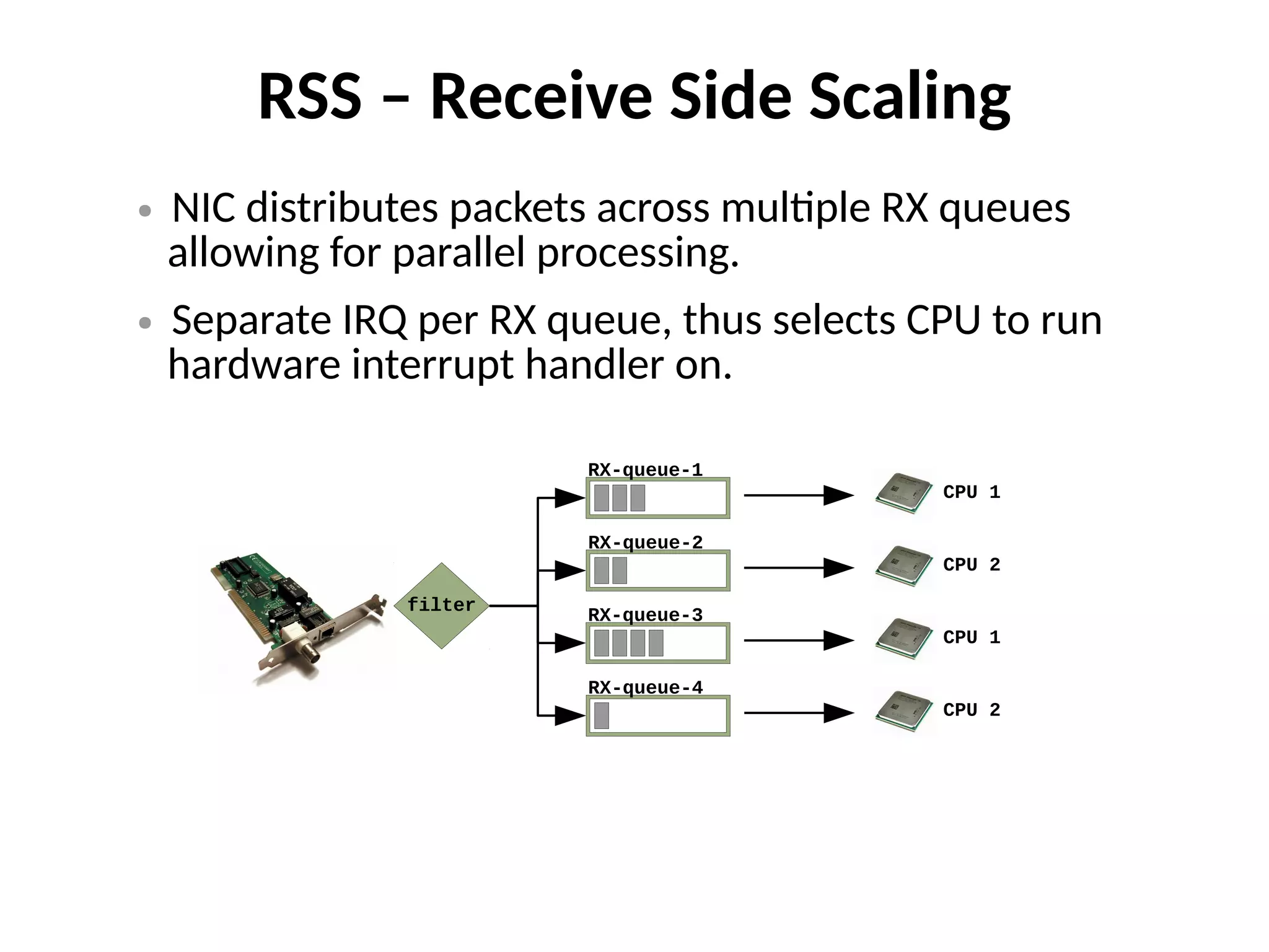

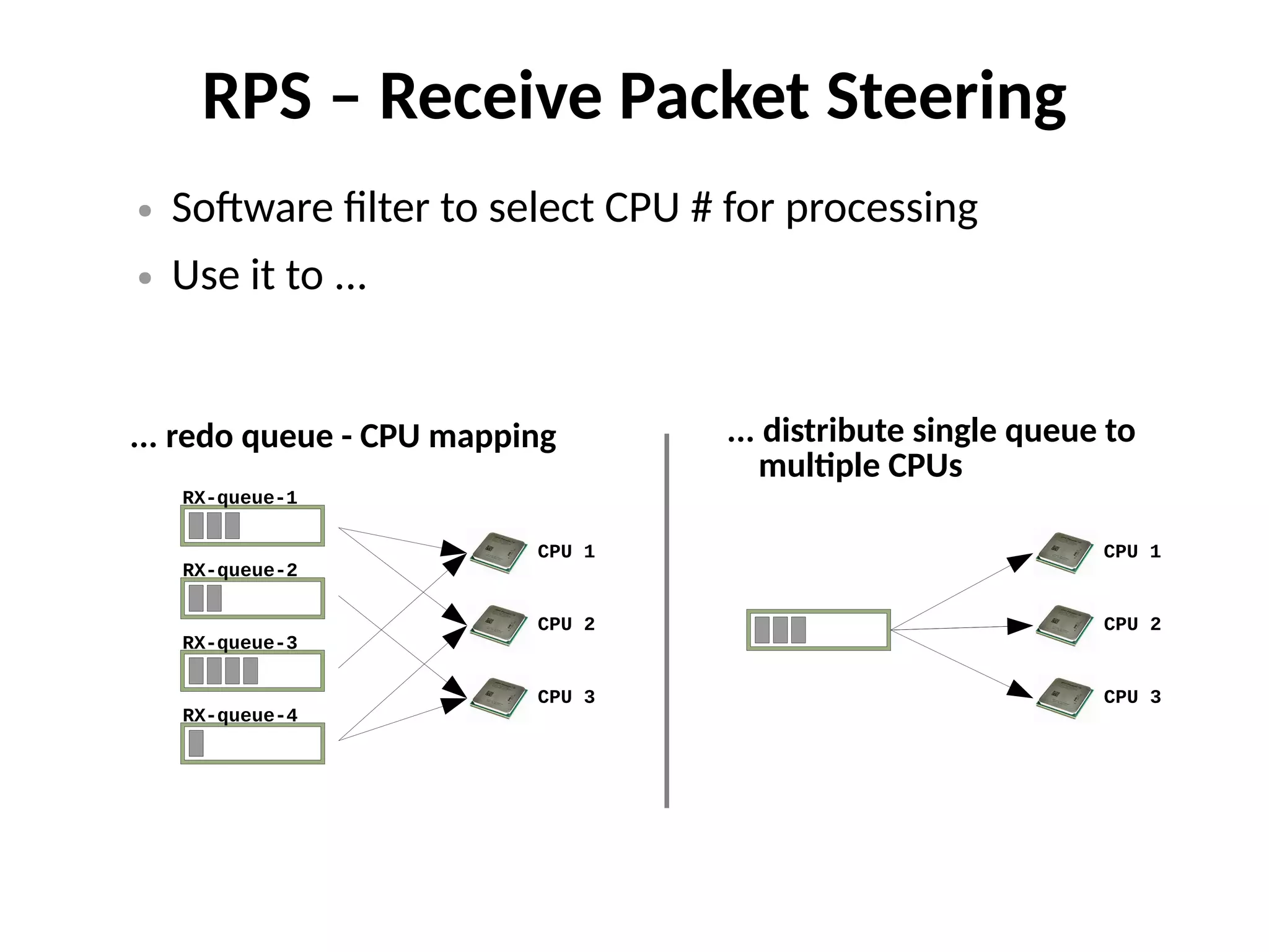

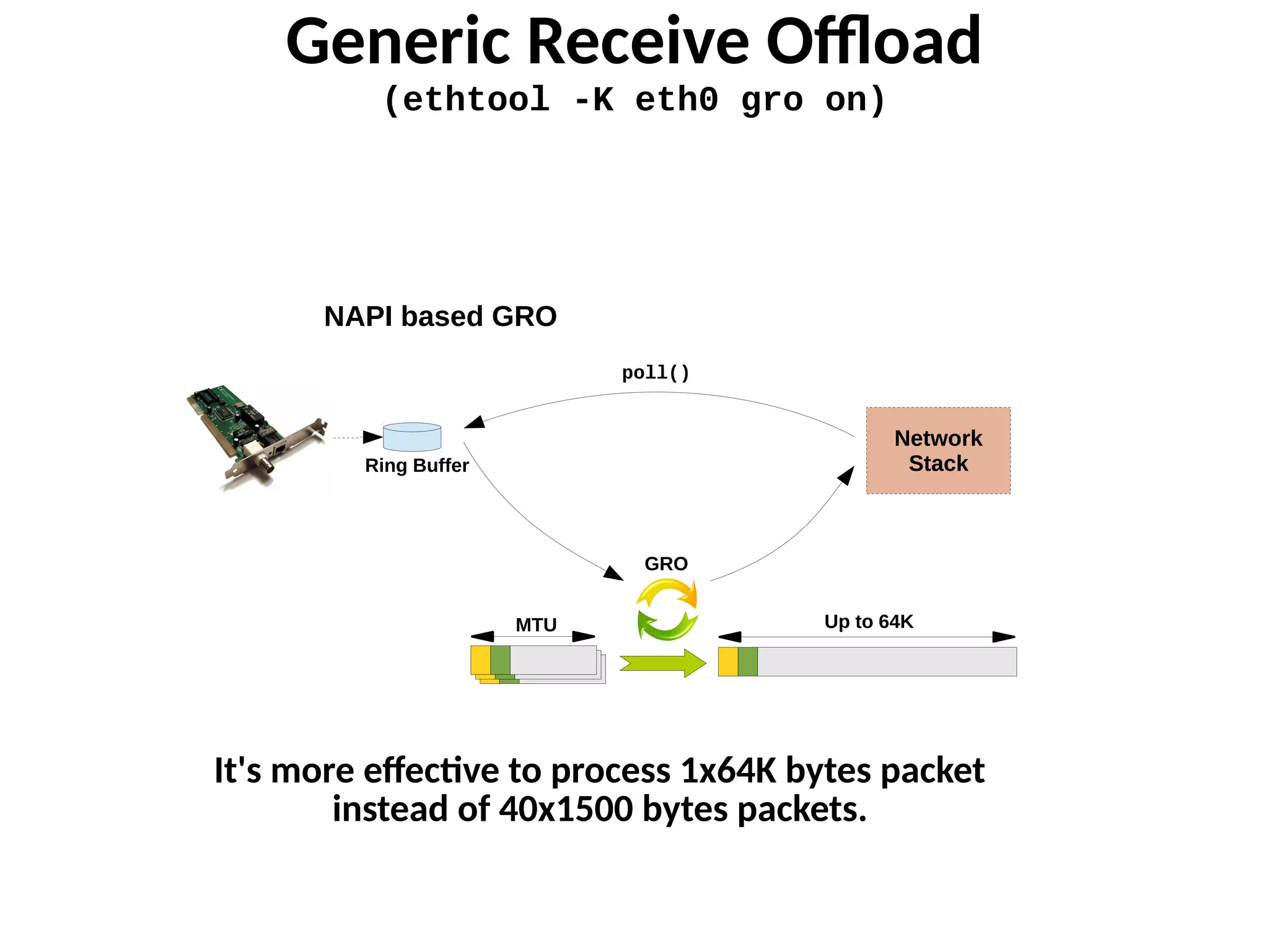

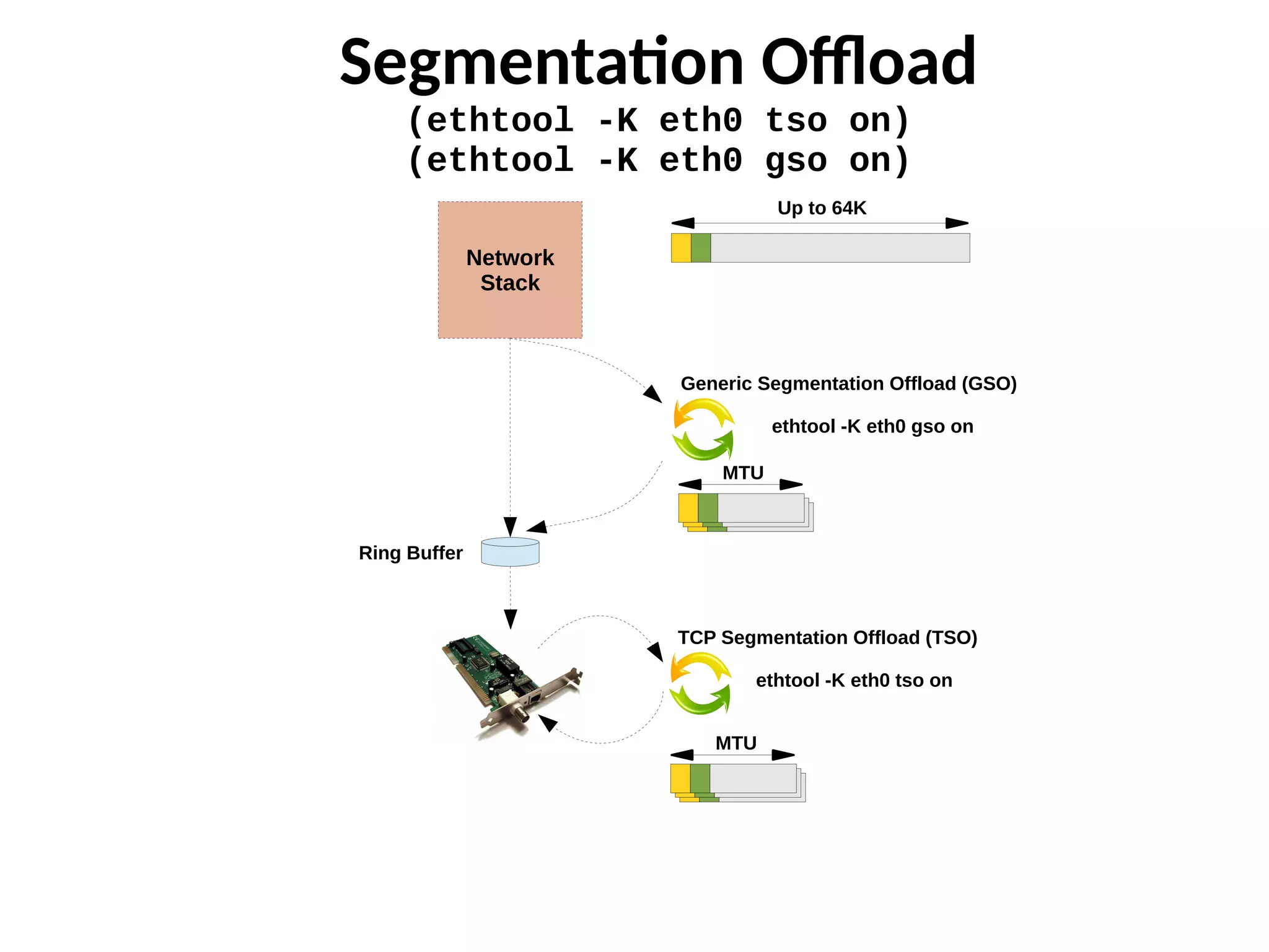

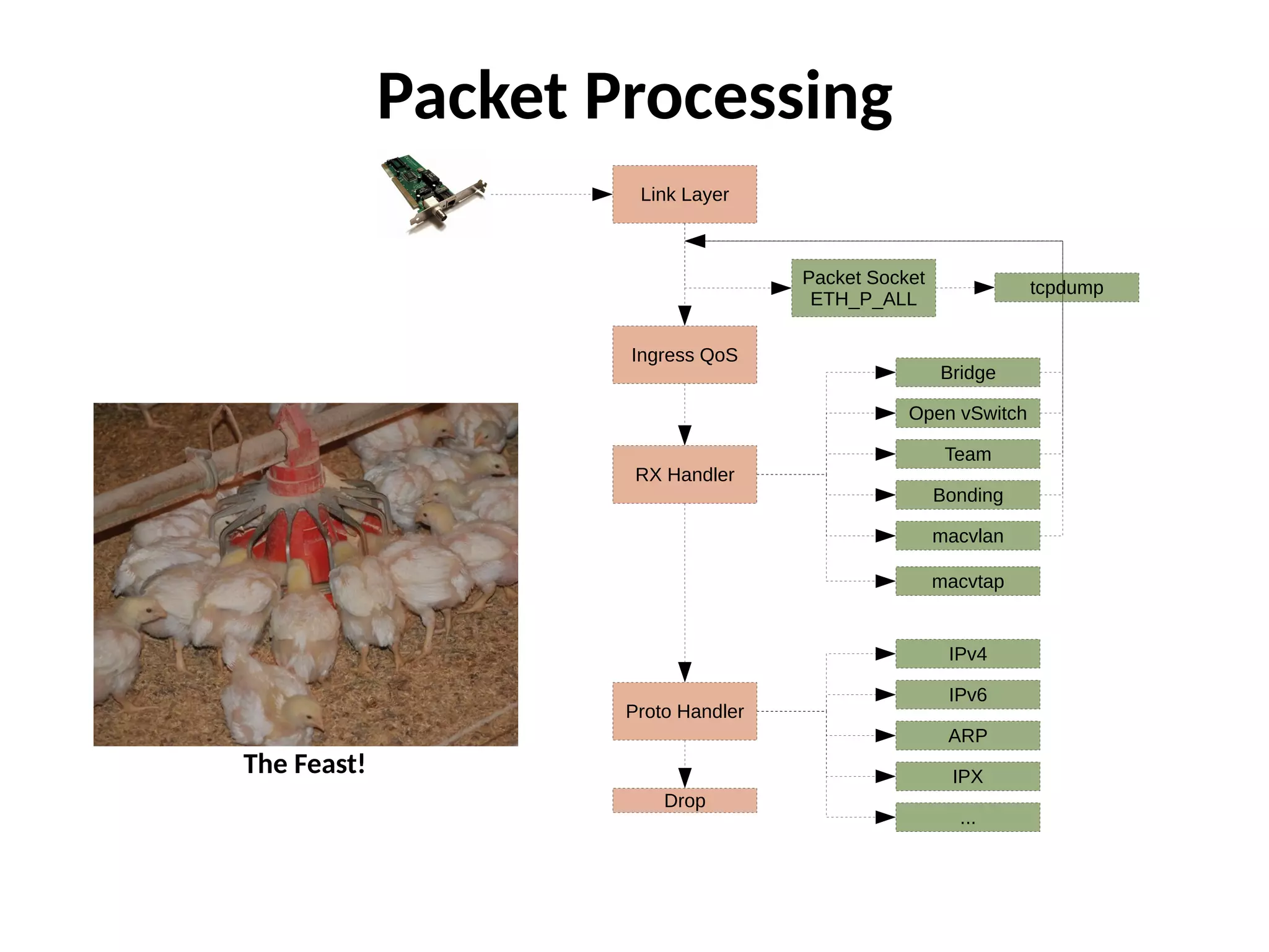

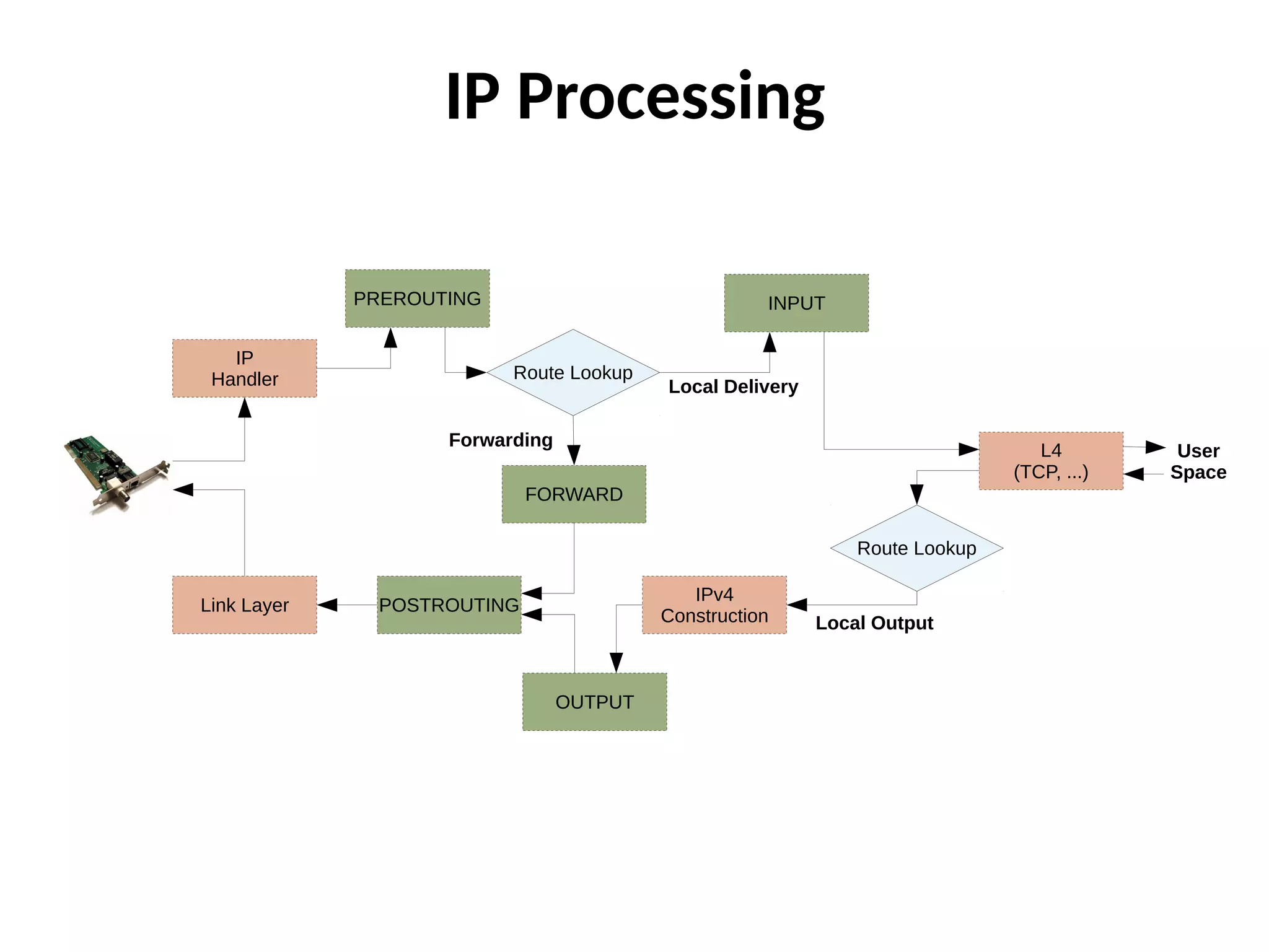

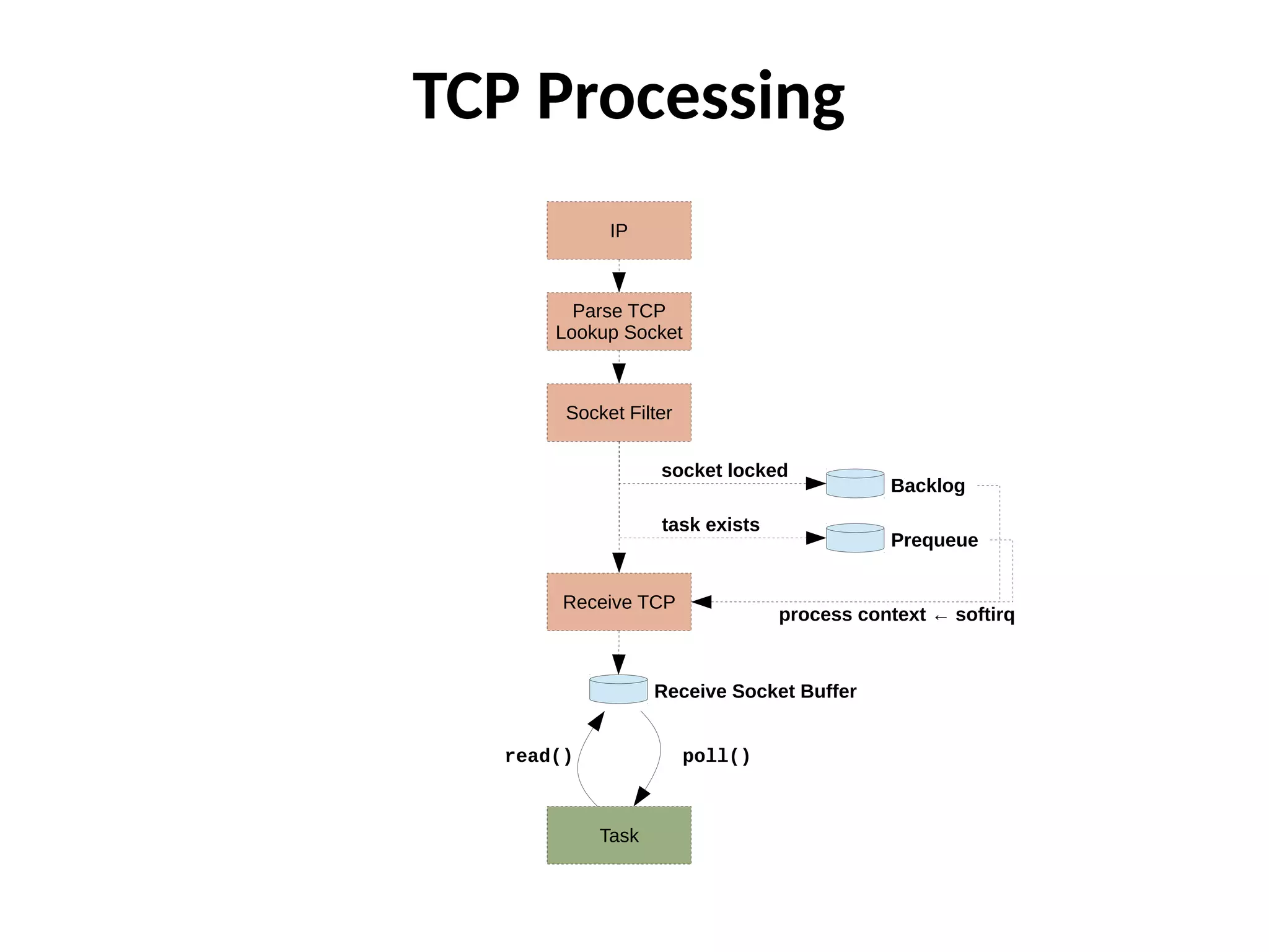

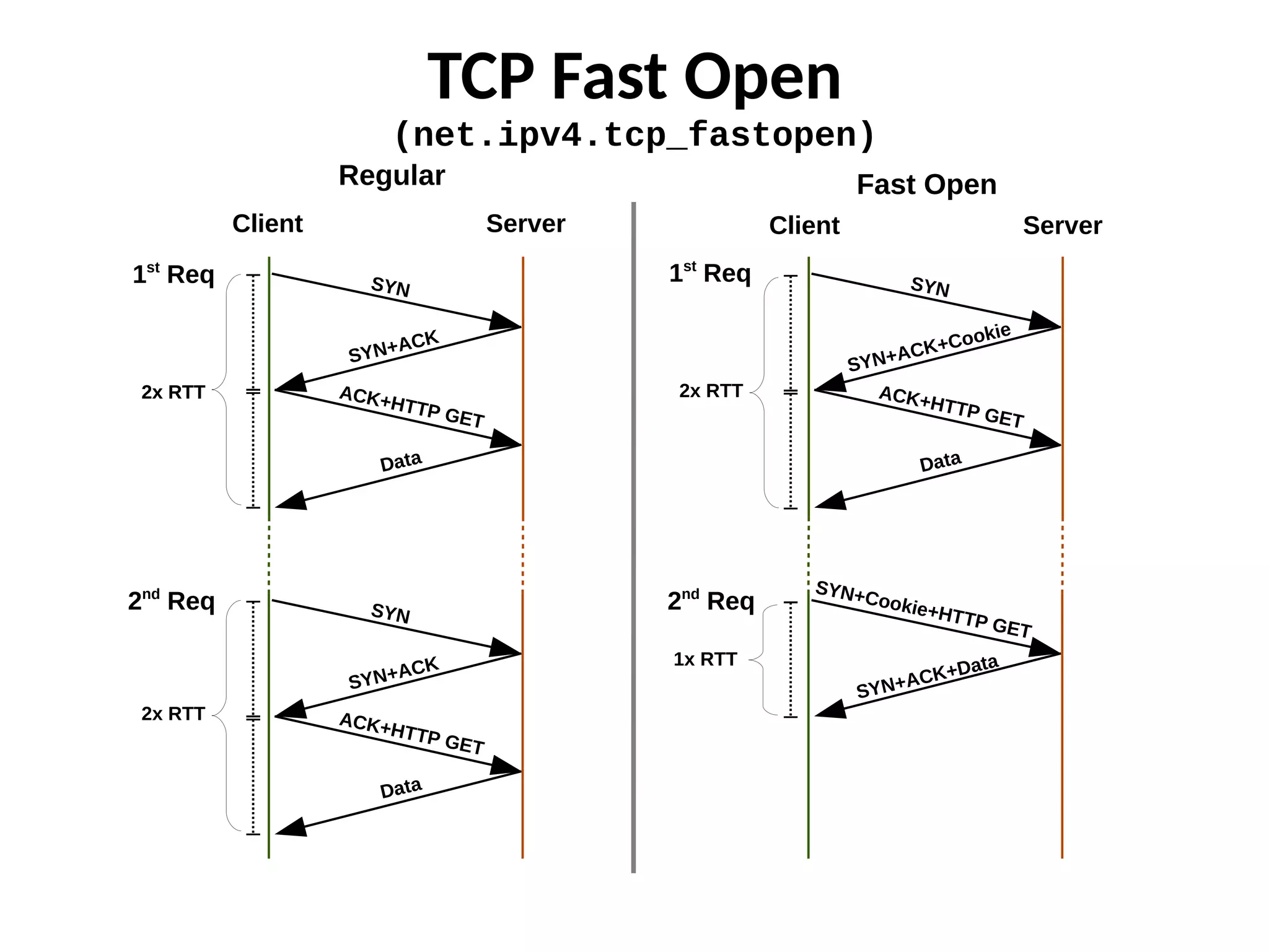

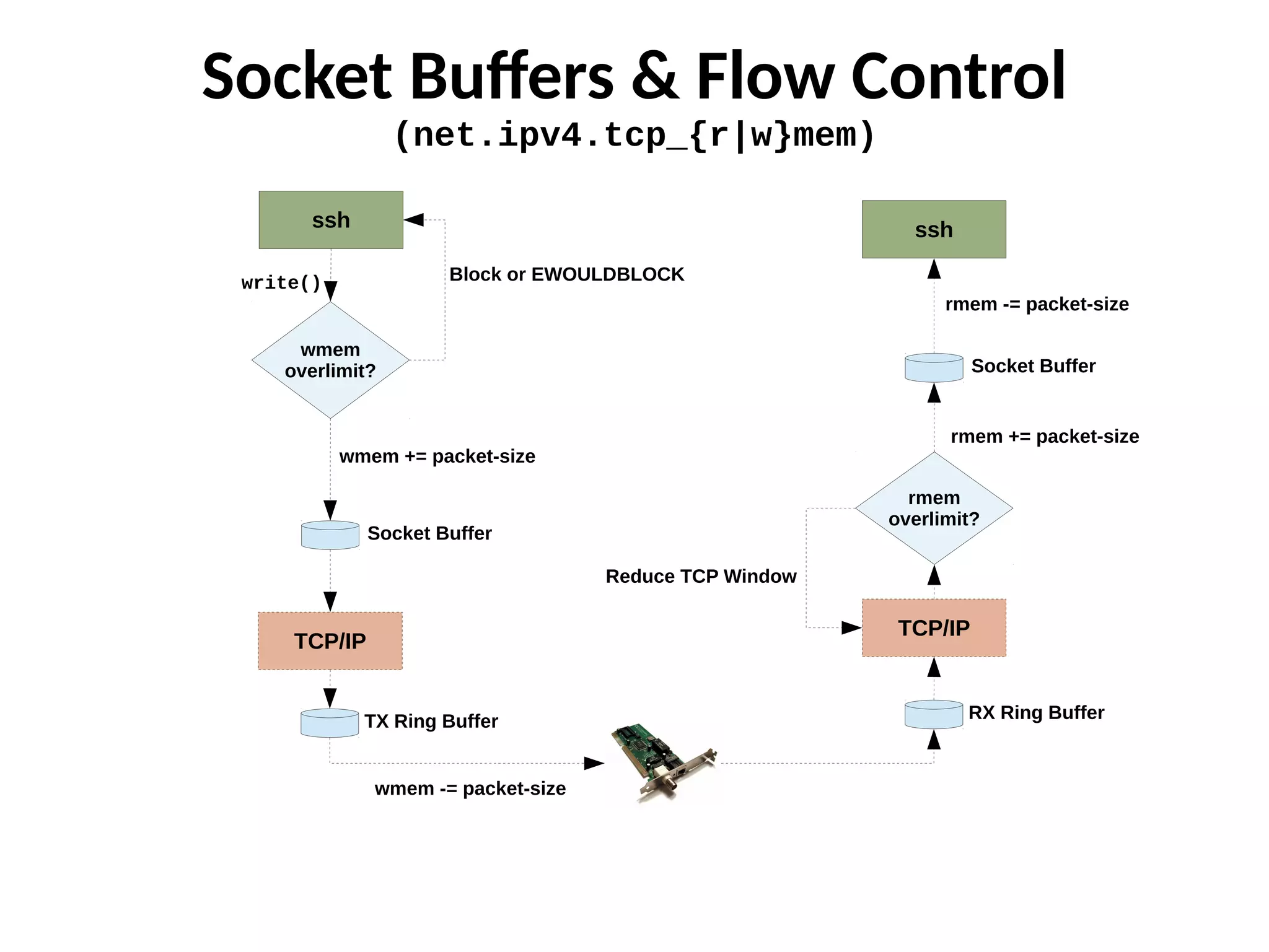

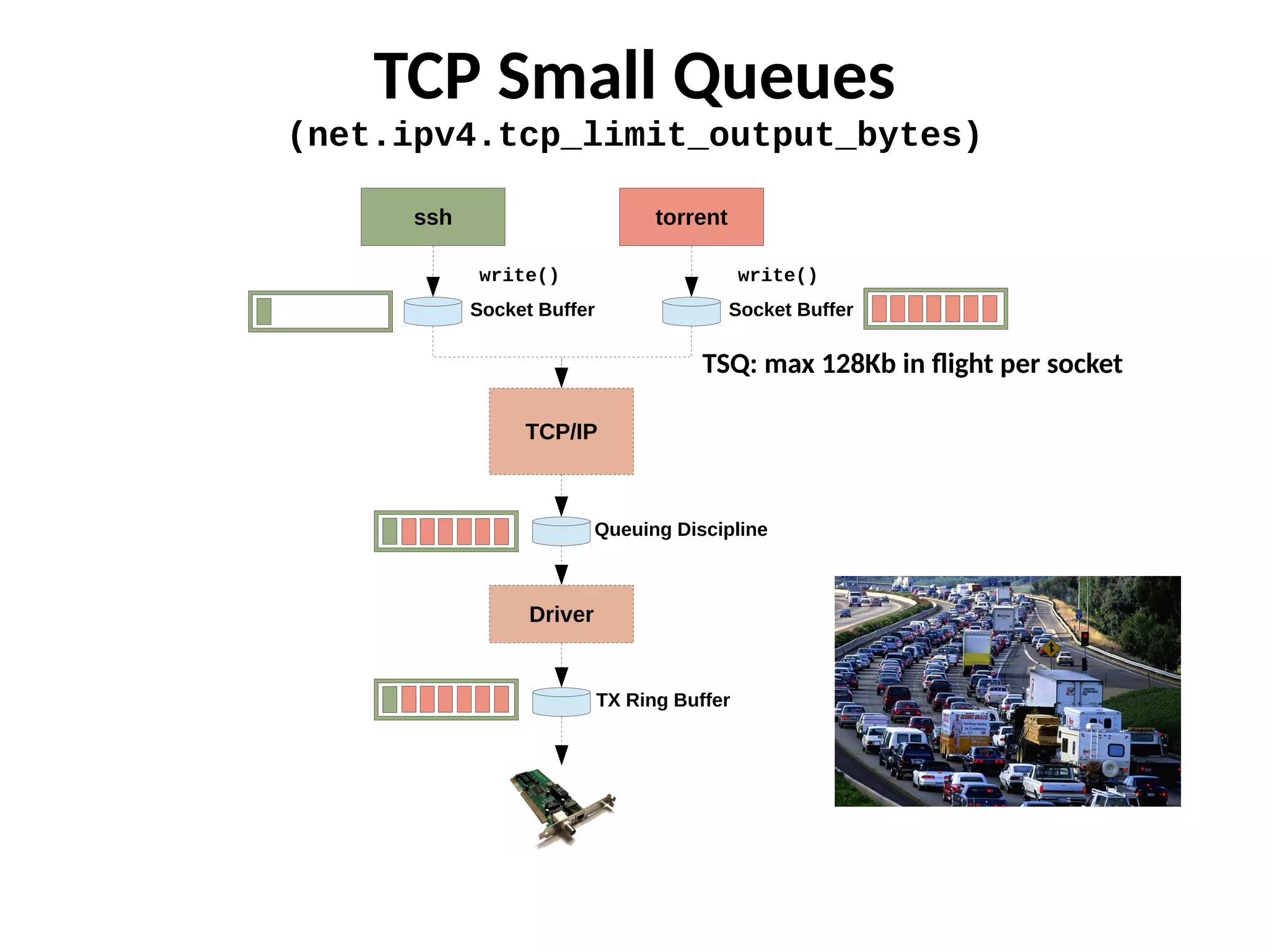

These slides, from Thomas Graf's kernel networking talk at LinuxCon 2015, walk through how the Linux network stack processes packets. They cover the receive and transmit paths and stack components such as NAPI and Receive Side Scaling (RSS). They also explain hardware offloads, including segmentation and checksum offloading, which improve performance by moving per-packet work from the CPU to the NIC, and they cover memory management in socket buffers and flow control. The deck closes with a Q&A section and contact information for further inquiries.