In my Unity project I have an image file that encodes information, and I would like to read this .bmp file pixel by pixel. I tried simply loading it as a sprite and then reading its texture. However, when I do that, the loaded texture appears to differ from the source image, as if it had been compressed. I tried modifying the asset's import settings (making it an Advanced texture with Point filter mode and setting the format to ARGB 32 bit, uncompressed), but it doesn't help. Any ideas?

EDIT: Some additional information.

The code with which I load the image into a pixel array:

    // sprites from which paths will be loaded
    public Sprite[] path_maps;
    // Color32 array which contains the texture bitmap
    private Color32[] pixels;
    // graph with paths
    public Graph[] graphs;

    void Start () {
        // I skipped some code that doesn't matter to the problem
        // go through all path maps
        for (int i = 0; i < path_maps.Length; i++) {
            // load sprite into pixels array
            pixels = path_maps[i].texture.GetPixels32 ();
            // create new graph
            graphs [i] = new Graph ();
            // get graph from path map
            graphs[i].pixels_to_graph(pixels, path_maps[i].texture.width, path_maps[i].texture.height, camera_width, camera_height);
        }
    }

The function which reads the pixels and creates a node for each colored pixel (only the relevant part of the function):

    public void pixels_to_graph (Color32[] pixels, int width, int height, double camera_width, double camera_height) {
        // assign pixels width and height
        pixels_width = width;
        pixels_height = height;
        // assign camera dimensions
        this.camera_width = camera_width;
        this.camera_height = camera_height;

        // First create as many nodes as there are colored pixels (simply read the pixel map)
        // Note that pixel map contains the bitmap from bottom to the top (left bottom to top right)
        // create nodes array
        pixel_nodes = new Node[width*height];
        // current position in pixel array. Made for easier calculations
        int carriage = 0;
        // go through all rows
        for (int i = 0; i < height; i++) {
            // go column by column
            for (int j = 0; j < width; j++) {
                // if it's transparent or white - ignore
                if (pixels [carriage].a == 0 || pixels [carriage].Equals (col_white))
                    continue;
                // else add node to the node array
                // note that i is the y position while j is the x position
                pixel_nodes [carriage] = new Node (j, i, pixels_width, pixels_height, camera_width, camera_height);
                // add node to nodes list
                nodes.Add (pixel_nodes [carriage]);
                carriage++;
            }
        }

I see the problem in the pixels_to_graph function above. When I debug it with a 1680x1050 image that has pixels in its bottom-left corner (three black squares of size 3x3 px, 2x2 px and 1x1 px, spaced 1 pixel apart), it only detects the 3x3 one; the colour value at the positions of the other two squares is white. When I tried other shapes I found further distortions: some 1 px wide lines are missing, as are some single pixels.
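To make the expected indexing explicit, here is a minimal sketch of a row-major scan in plain C#, outside Unity. It assumes the pixel array is laid out left to right, bottom to top, as the comment in pixels_to_graph states. The names `Rgba`, `PixelScan` and `FindColoredPixels` are hypothetical stand-ins (for Color32 and for the node-creation step) and are not part of the project. The index is derived from the row and column on every iteration, so a skipped pixel cannot shift it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for UnityEngine.Color32 so the sketch runs outside Unity.
public struct Rgba
{
    public byte r, g, b, a;
    public Rgba(byte r, byte g, byte b, byte a) { this.r = r; this.g = g; this.b = b; this.a = a; }
    public bool IsWhite => r == 255 && g == 255 && b == 255;
}

public static class PixelScan
{
    // Returns the (x, y) position of every pixel that is neither transparent nor white.
    // Assumes a row-major array: index = y * width + x, with row 0 at the bottom.
    public static List<(int x, int y)> FindColoredPixels(Rgba[] pixels, int width, int height)
    {
        if (pixels.Length != width * height)
            throw new ArgumentException("pixel count does not match width * height");

        var found = new List<(int x, int y)>();
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                Rgba p = pixels[y * width + x];
                if (p.a == 0 || p.IsWhite)
                    continue; // skipping is safe: the index is recomputed each iteration
                found.Add((x, y));
            }
        }
        return found;
    }

    public static void Main()
    {
        // 4x2 white image with black pixels at (0,0) and (2,1).
        const int width = 4, height = 2;
        var white = new Rgba(255, 255, 255, 255);
        var black = new Rgba(0, 0, 0, 255);
        var pixels = new Rgba[width * height];
        for (int i = 0; i < pixels.Length; i++) pixels[i] = white;
        pixels[0 * width + 0] = black;
        pixels[1 * width + 2] = black;

        foreach (var (x, y) in FindColoredPixels(pixels, width, height))
            Console.WriteLine($"colored pixel at x={x}, y={y}");
    }
}
```

Comparing the positions such a scan reports against the known positions of the squares in the source image, and texture.width and texture.height against 1680x1050, would be one way to tell whether the data was already altered at import time or is being misread afterwards.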

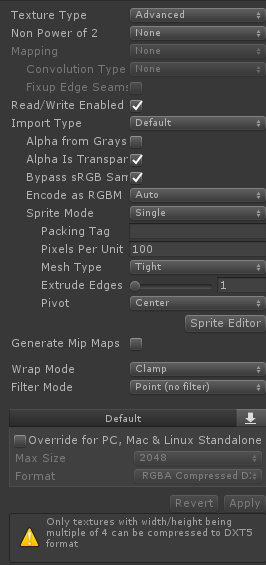

My current texture settings (note that I tried other options, but some of them don't display anything; this one at least displays something):