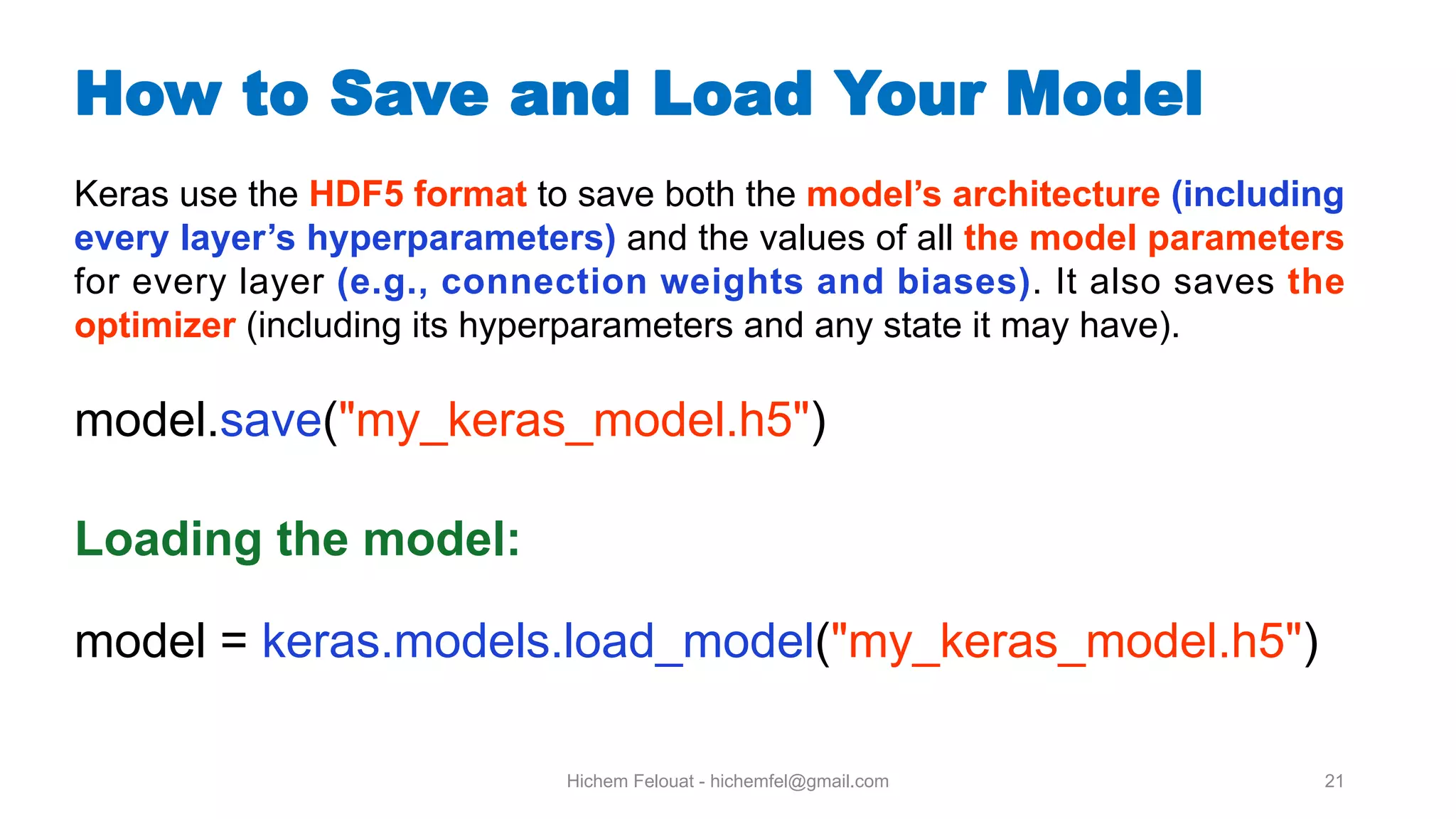

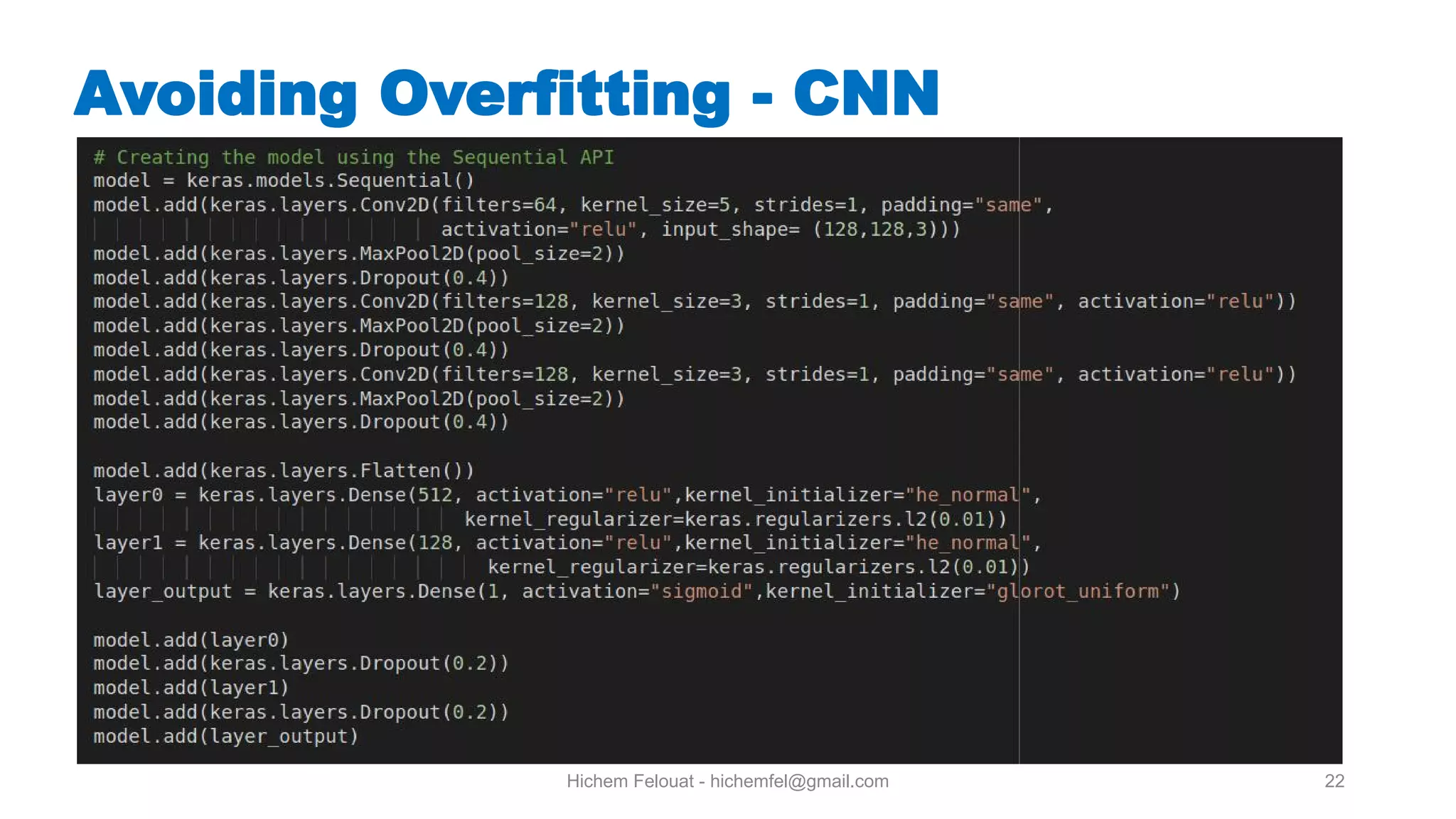

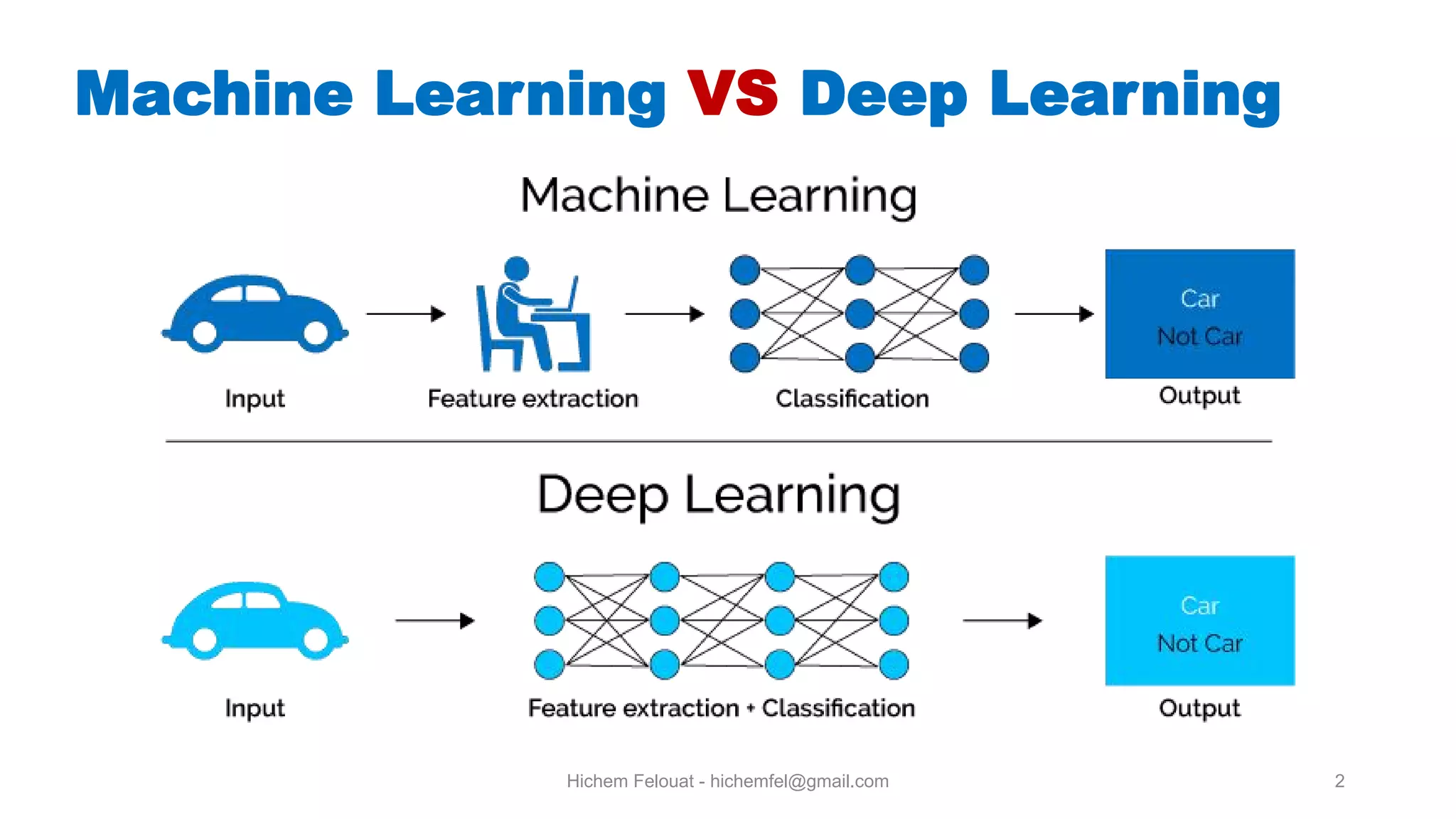

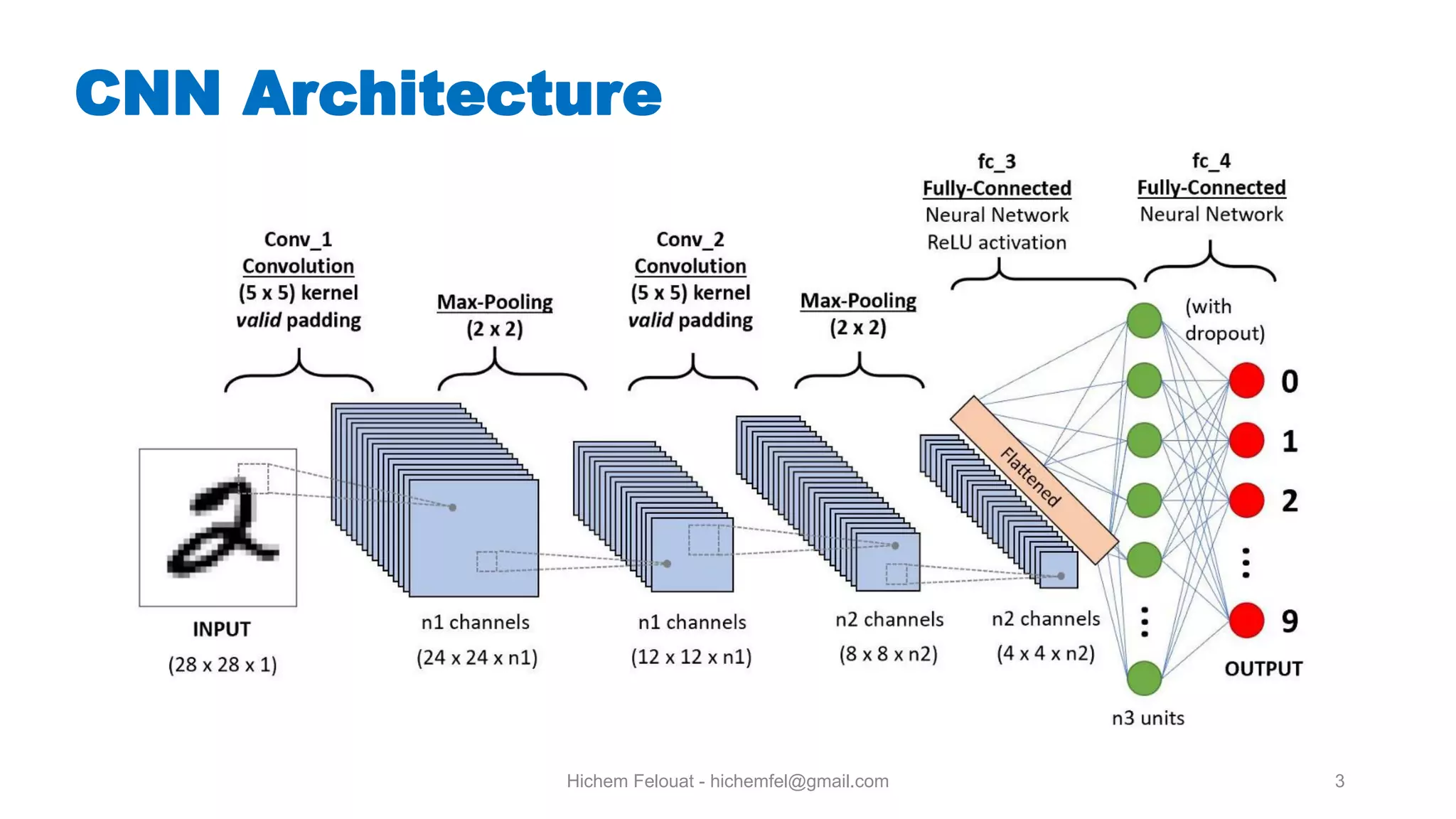

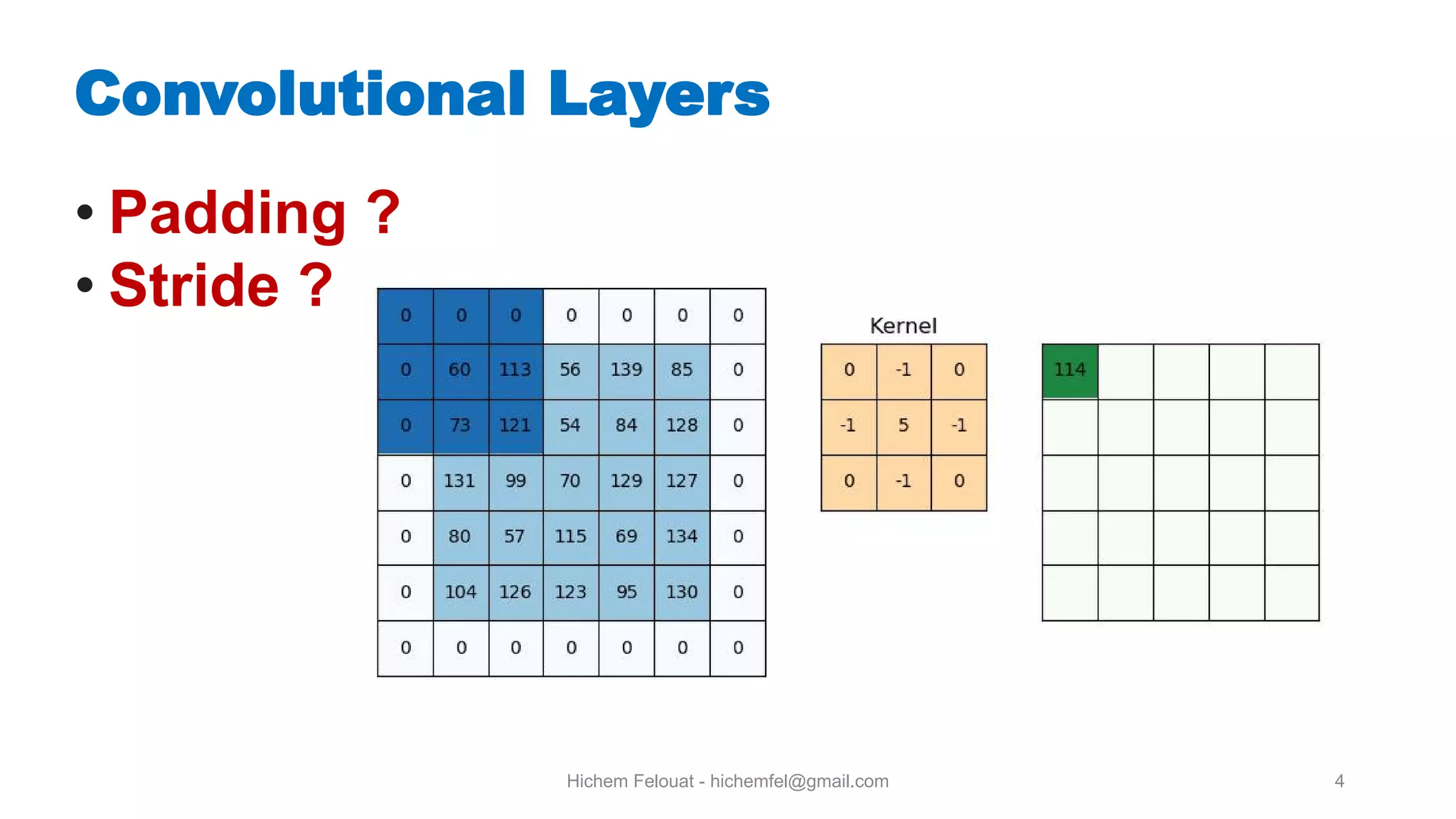

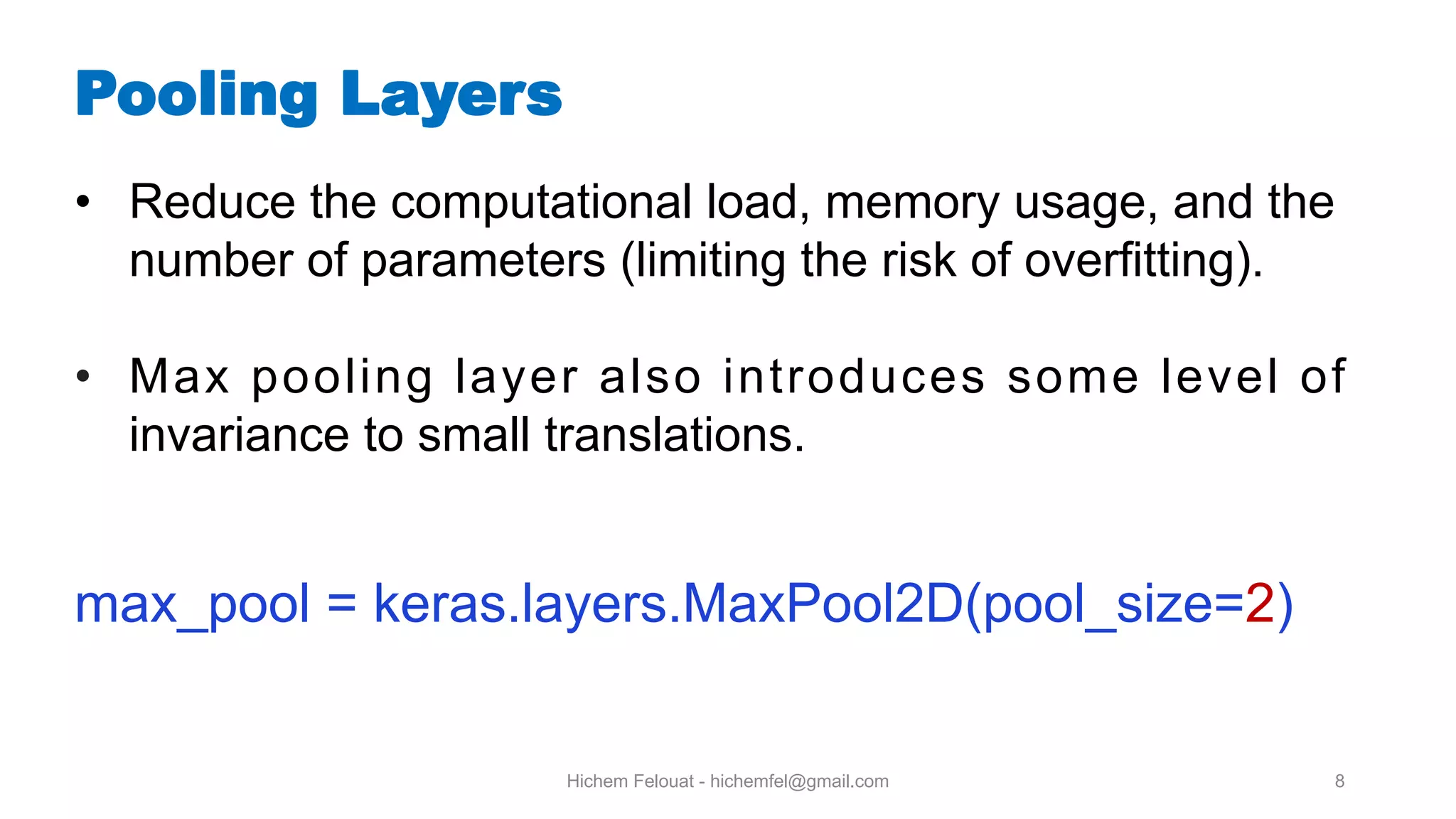

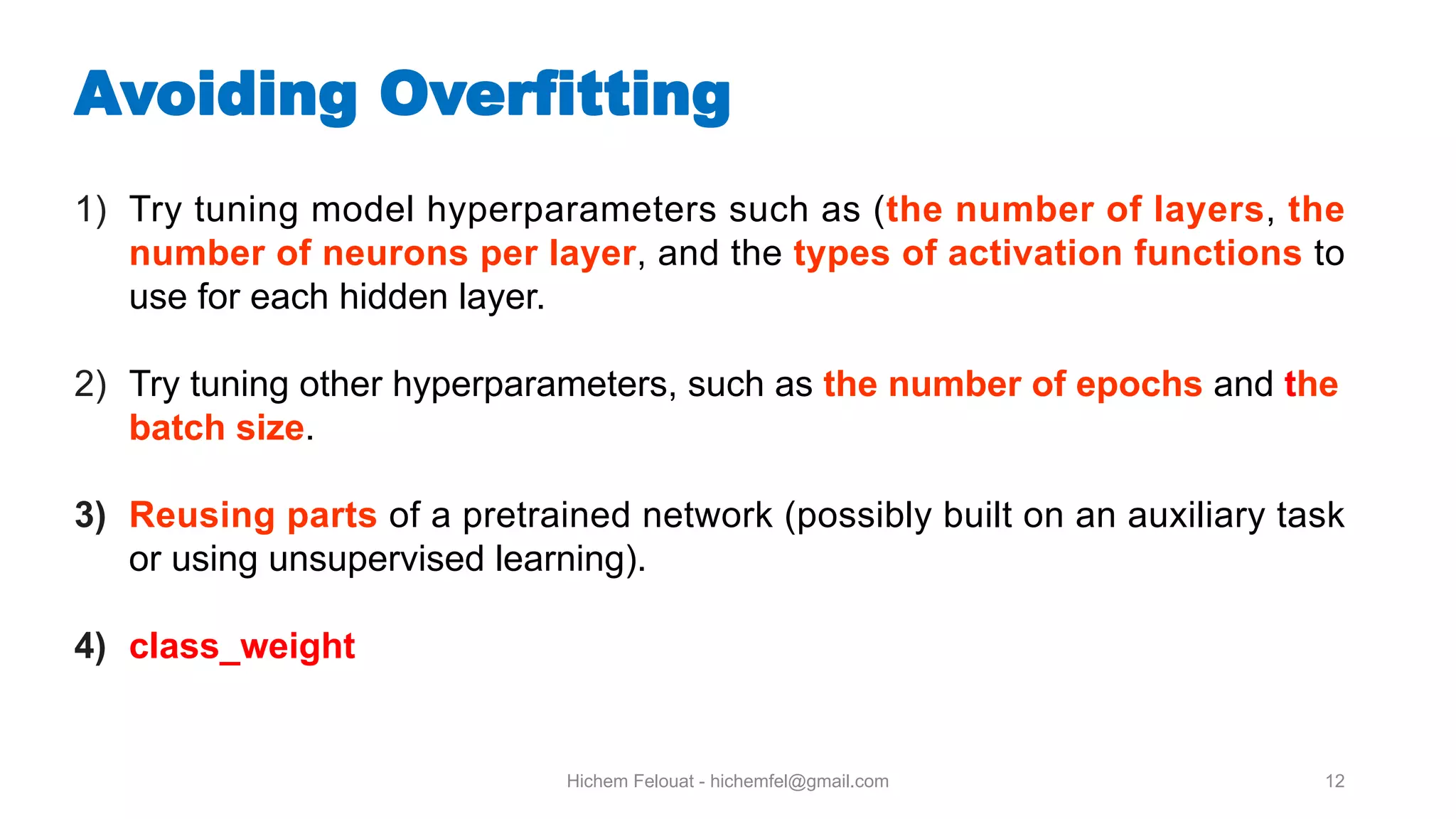

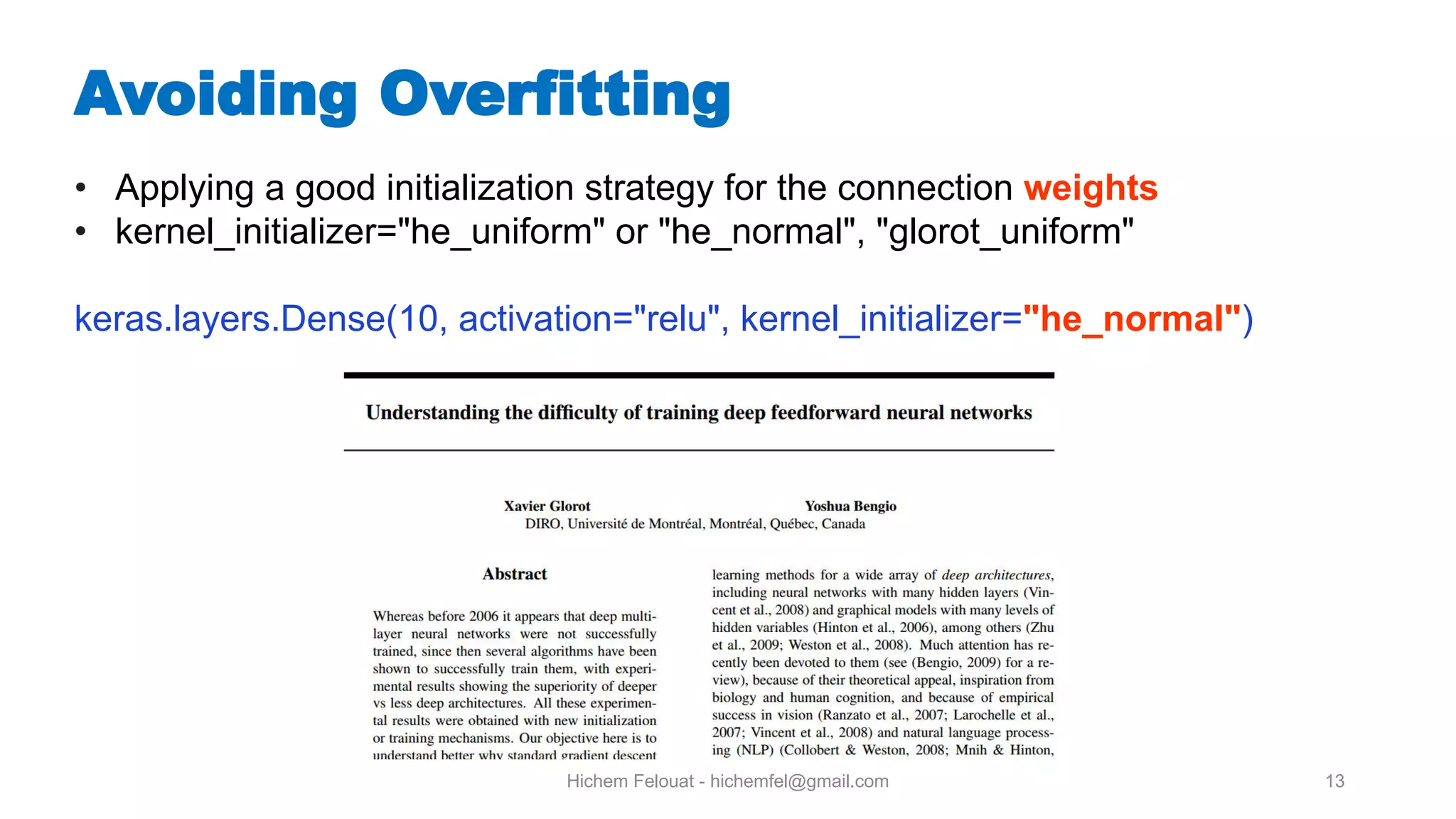

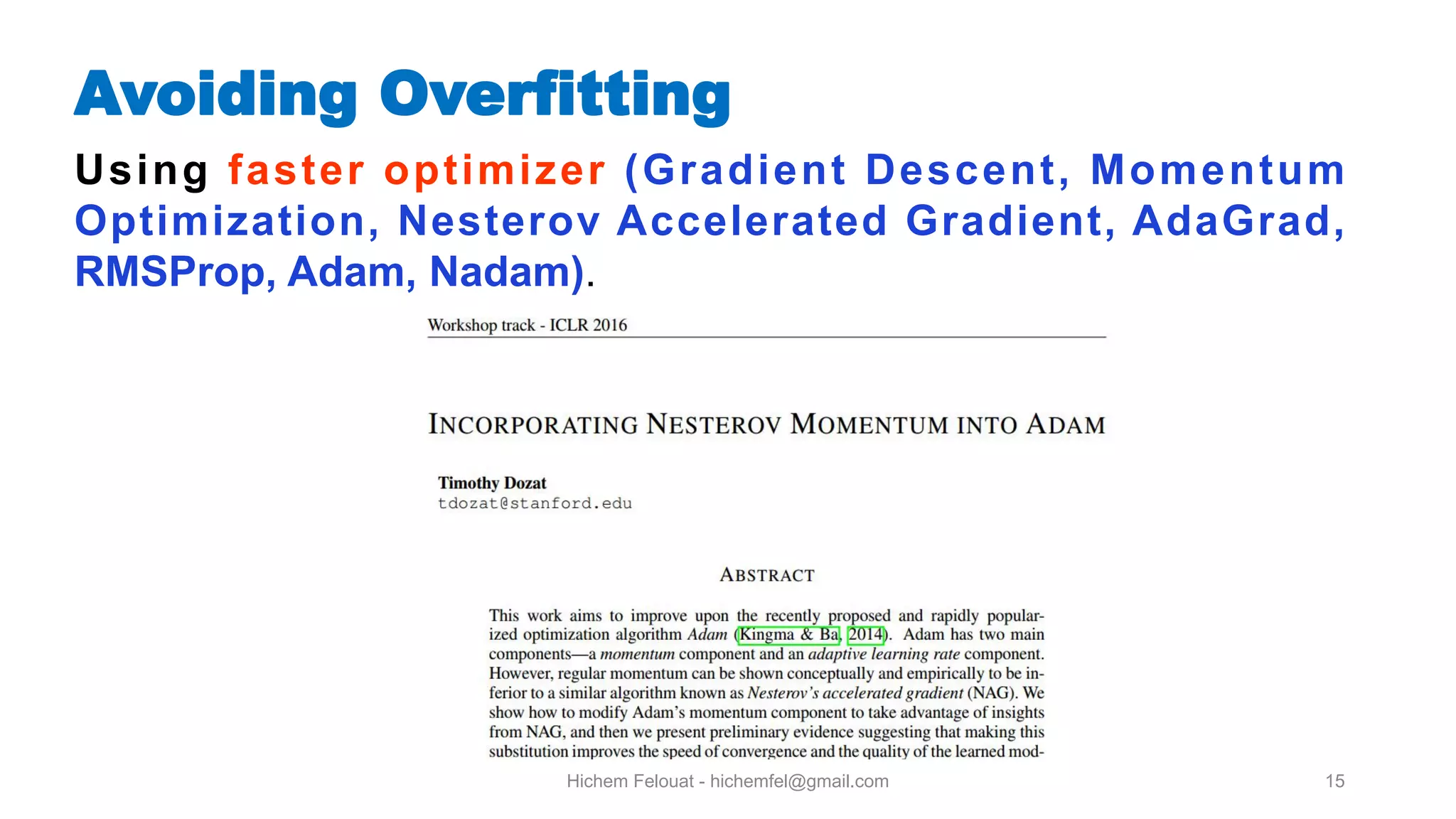

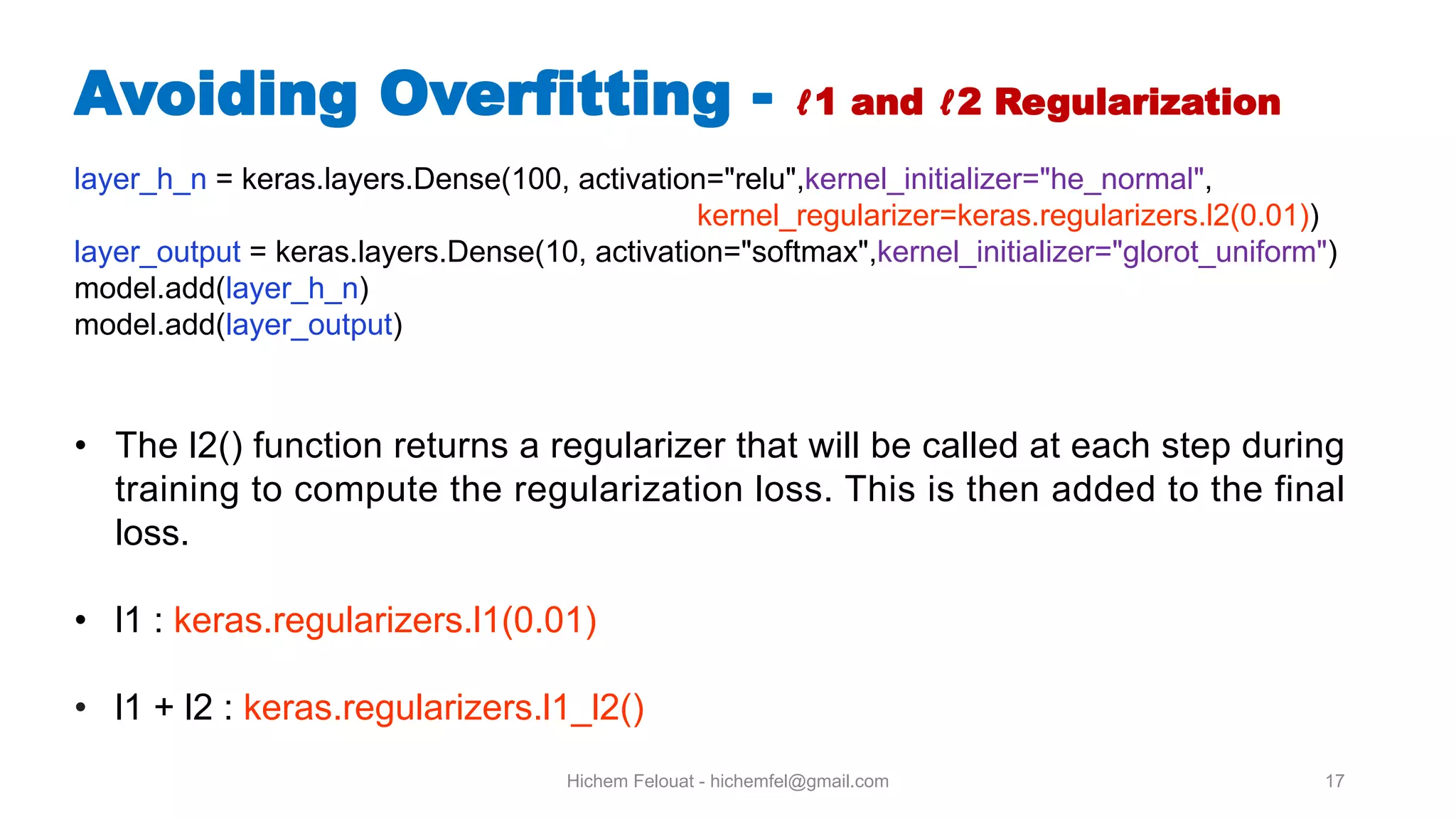

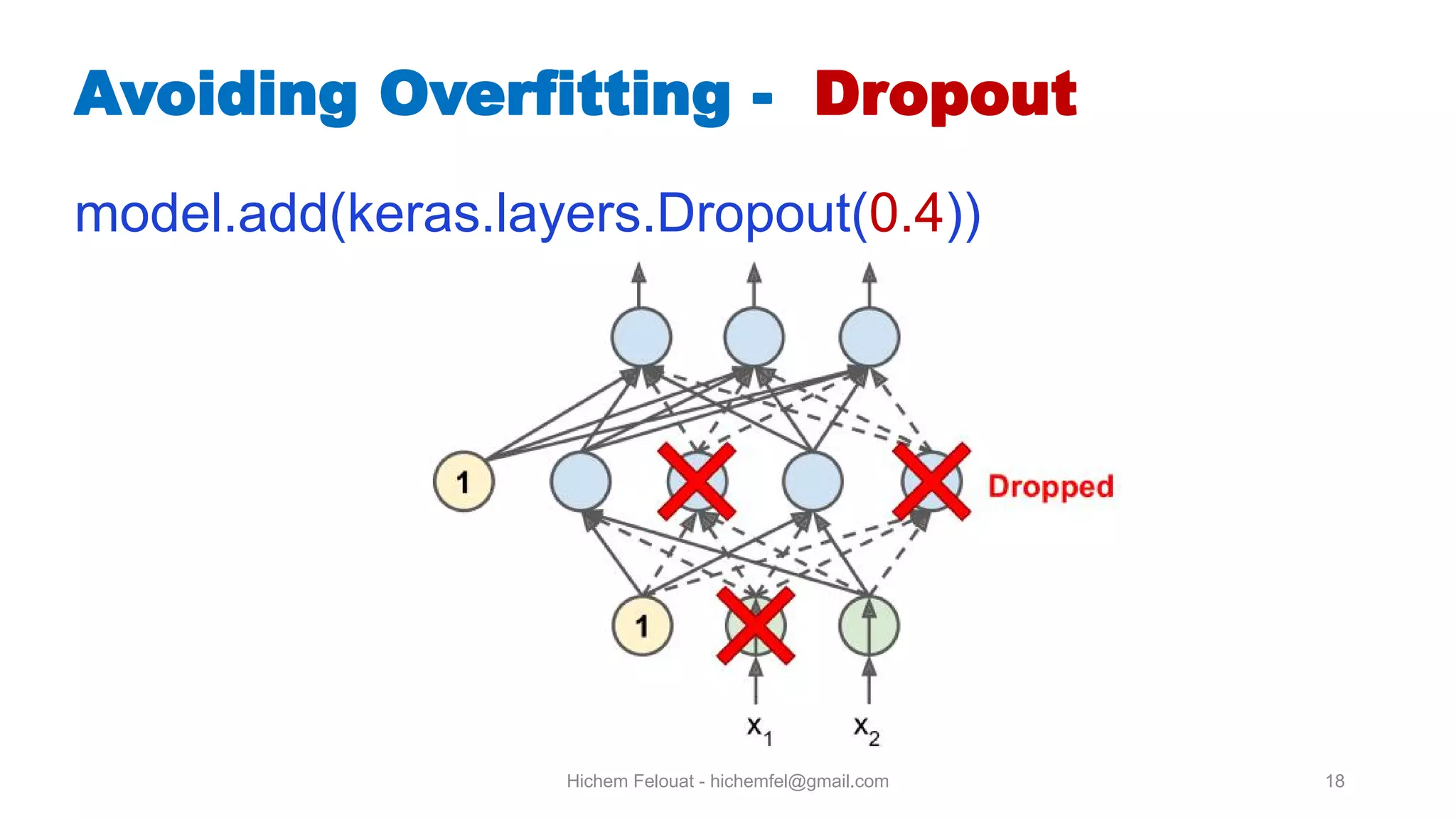

This document provides an overview of building and training a convolutional neural network (CNN) from scratch in Keras and TensorFlow. It discusses CNN architecture including convolutional layers, pooling layers, and fully connected layers. It also covers techniques for avoiding overfitting such as regularization, dropout, data augmentation, early stopping, and callbacks. The document concludes with instructions on how to save and load a trained CNN model.

![Hichem Felouat - hichemfel@gmail.com, slide 14: Avoiding Overfitting, Batch Normalization](https://image.slidesharecdn.com/cnn-200926193538/75/Build-your-own-Convolutional-Neural-Network-CNN-14-2048.jpg)

Avoiding Overfitting. Batch Normalization: this operation zero-centers and normalizes each input using the mean and variance of the current mini-batch, then scales and shifts the result with two learned parameter vectors. The layer can be placed before or after the activation function of each hidden layer.

```python
from tensorflow import keras

# BatchNormalization after the input and after each hidden Dense layer
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.BatchNormalization(),
    keras.layers.Dense(300, activation="elu", kernel_initializer="he_normal"),
    keras.layers.BatchNormalization(),
    keras.layers.Dense(100, activation="elu", kernel_initializer="he_normal"),
    keras.layers.BatchNormalization(),
    keras.layers.Dense(10, activation="softmax")
])
```
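To make the normalization step concrete, here is a minimal plain-Python sketch of the training-time batch-normalization computation for a mini-batch of shape (batch_size, n_features): x̂ = (x − μ_B) / √(σ_B² + ε), then y = γ·x̂ + β. It illustrates the formula only and is not Keras's implementation; the function name `batch_norm` is hypothetical, and `eps=1e-3` mirrors the default `epsilon` of `keras.layers.BatchNormalization`. At inference time Keras uses moving averages of the mean and variance rather than batch statistics, which this sketch omits.

```python
import math
from typing import List


def batch_norm(batch: List[List[float]], gamma: List[float], beta: List[float],
               eps: float = 1e-3) -> List[List[float]]:
    """Training-mode batch norm for a mini-batch of shape (batch_size, n_features).

    Hypothetical helper for illustration; gamma and beta stand in for the
    layer's learned scale and shift vectors.
    """
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n                            # per-feature batch mean
        var = sum((x - mean) ** 2 for x in column) / n    # per-feature batch variance
        for i, x in enumerate(column):
            x_hat = (x - mean) / math.sqrt(var + eps)     # zero-center and normalize
            out[i][j] = gamma[j] * x_hat + beta[j]        # learned scale and shift
    return out


batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

With `gamma=1` and `beta=0`, each output column has mean 0 and variance just under 1 (the `eps` term keeps it slightly below 1); training then lets the network learn whatever scale and offset suit the next layer.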

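![Slide 16: Avoiding Overfitting, learning rate scheduling](https://image.slidesharecdn.com/cnn-200926193538/75/Build-your-own-Convolutional-Neural-Network-CNN-16-2048.jpg)

Avoiding Overfitting. `ReduceLROnPlateau` multiplies the optimizer's learning rate by `factor` whenever the monitored metric (by default `val_loss`, so validation data is needed) has not improved for `patience` consecutive epochs. Here the learning rate is halved after 5 epochs without improvement. To update the optimizer's `learning_rate` at the beginning of every epoch from a fixed schedule instead, use `keras.callbacks.LearningRateScheduler`.

```python
from tensorflow import keras

# Halve the learning rate after 5 epochs without improvement in val_loss
lr_scheduler = keras.callbacks.ReduceLROnPlateau(factor=0.5, patience=5)
history = model.fit(X_train, y_train, [...], callbacks=[lr_scheduler])
```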
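The plateau rule is easier to see on a list of per-epoch validation losses. The following plain-Python sketch is a simplified model of what `ReduceLROnPlateau` does (it ignores the callback's `cooldown` option); the function name `plateau_lr_schedule` and the loss values are hypothetical, and `min_delta=1e-4` is assumed to mirror the callback's default.

```python
from typing import List


def plateau_lr_schedule(val_losses: List[float], initial_lr: float = 1e-3,
                        factor: float = 0.5, patience: int = 5,
                        min_delta: float = 1e-4, min_lr: float = 0.0) -> List[float]:
    """Simplified sketch: return the learning rate in effect after each epoch."""
    lr = initial_lr
    best = float("inf")
    wait = 0
    history = []
    for loss in val_losses:
        if loss < best - min_delta:
            # Meaningful improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                # Plateau: cut the learning rate, but not below min_lr
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history


# Loss improves for three epochs, stalls for five, then improves again
losses = [1.0, 0.9, 0.8] + [0.8] * 5 + [0.7]
lrs = plateau_lr_schedule(losses)
```

Here the rate stays at 1e-3 for the first seven epochs and drops to 5e-4 at the eighth epoch, the fifth in a row without improvement.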

![Slide 19: How to increase your small image dataset](https://image.slidesharecdn.com/cnn-200926193538/75/Build-your-own-Convolutional-Neural-Network-CNN-19-2048.jpg)

How to increase your small image dataset (data augmentation):

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Randomly rotate, shift, shear, zoom and flip training images on the fly
trainAug = ImageDataGenerator(
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

model.compile(loss="binary_crossentropy", optimizer=opt, metrics=["accuracy"])

# Since TF 2.1, fit() accepts generators directly (fit_generator() is deprecated);
# validation_steps is dropped because validation_data is a pair of arrays
H = model.fit(
    trainAug.flow(trainX, trainY, batch_size=BS),
    steps_per_epoch=len(trainX) // BS,
    validation_data=(testX, testY),
    epochs=EPOCHS)
```
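To show what two of these options do to the pixels, here is a minimal plain-Python sketch of a horizontal flip and a width shift on a tiny grayscale image stored as a list of rows. It illustrates the idea and is not how `ImageDataGenerator` is implemented: the helper names are hypothetical, shifts are whole pixels rather than continuous values, and vacated columns repeat the nearest edge column, which only approximates `fill_mode='nearest'`.

```python
import random
from typing import List

Image = List[List[int]]


def horizontal_flip(img: Image) -> Image:
    # Mirror each row left-to-right, as horizontal_flip=True does at random
    return [row[::-1] for row in img]


def width_shift(img: Image, dx: int) -> Image:
    # Shift columns by dx pixels (positive = right); vacated pixels copy the
    # nearest edge pixel, roughly like fill_mode='nearest'
    width = len(img[0])
    return [[row[min(max(c - dx, 0), width - 1)] for c in range(width)]
            for row in img]


def random_augment(img: Image, rng: random.Random,
                   width_shift_range: float = 0.2) -> Image:
    # Shift by up to 20% of the width in either direction (rounded down to
    # whole pixels), then flip half of the time
    max_shift = int(width_shift_range * len(img[0]))
    out = width_shift(img, rng.randint(-max_shift, max_shift))
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    return out


rng = random.Random(0)
image = [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]]
augmented = [random_augment(image, rng) for _ in range(3)]
```

Each call produces a slightly different variant of the same image, which is how augmentation enlarges a small dataset without collecting new samples.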

![Slide 20: Avoiding Overfitting - Using Callbacks](https://image.slidesharecdn.com/cnn-200926193538/75/Build-your-own-Convolutional-Neural-Network-CNN-20-2048.jpg)

Avoiding Overfitting - Using Callbacks. With `save_best_only=True`, `ModelCheckpoint` saves the model only when its performance on the validation set is the best so far:

```python
from tensorflow import keras

checkpoint_cb = keras.callbacks.ModelCheckpoint("my_keras_model.h5", save_best_only=True)
history = model.fit(X_train, y_train, epochs=10,
                    validation_data=(X_valid, y_valid),
                    callbacks=[checkpoint_cb])
# roll back to best model
model = keras.models.load_model("my_keras_model.h5")
```

You can combine both callbacks to save checkpoints of your model (in case your computer crashes) and to interrupt training early when there is no more progress (to avoid wasting time and resources):

```python
early_stopping_cb = keras.callbacks.EarlyStopping(patience=10, restore_best_weights=True)
history = model.fit(X_train, y_train, epochs=100,
                    validation_data=(X_valid, y_valid),
                    callbacks=[checkpoint_cb, early_stopping_cb])
```
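The interplay of the two callbacks can be simulated in plain Python on a list of per-epoch validation losses. This is a simplified sketch of the logic, not Keras's implementation: "improvement" here simply means the loss fell below the best value so far, and the function name `simulate_callbacks` and the loss values are hypothetical.

```python
from typing import List, Tuple


def simulate_callbacks(val_losses: List[float],
                       patience: int = 10) -> Tuple[List[int], int, int]:
    """Return (epochs where a checkpoint is saved, best epoch, last epoch run)."""
    best = float("inf")
    best_epoch = 0
    wait = 0
    saved_epochs = []
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # New best: ModelCheckpoint(save_best_only=True) saves the model here,
            # and EarlyStopping(restore_best_weights=True) remembers these weights
            best, best_epoch, wait = loss, epoch, 0
            saved_epochs.append(epoch)
        else:
            wait += 1
            if wait >= patience:
                # No progress for `patience` epochs: stop and restore best weights
                return saved_epochs, best_epoch, epoch
    return saved_epochs, best_epoch, len(val_losses) - 1


losses = [0.9, 0.7, 0.6, 0.65, 0.66, 0.7, 0.8]
print(simulate_callbacks(losses, patience=3))  # ([0, 1, 2], 2, 5)
```

Training stops at epoch 5 (0-indexed), three epochs after the best one, and the checkpoint file and the restored weights both correspond to epoch 2.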