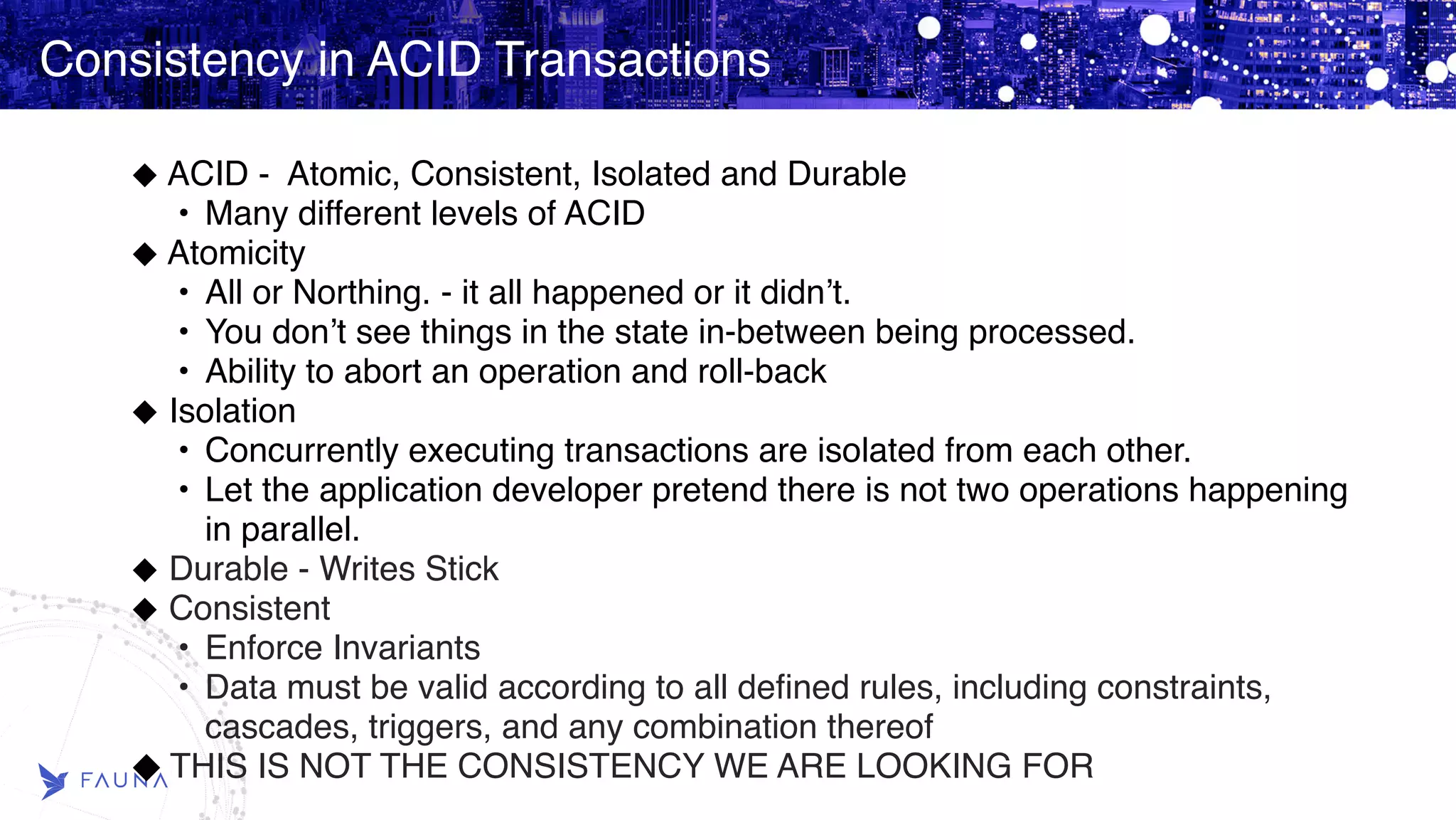

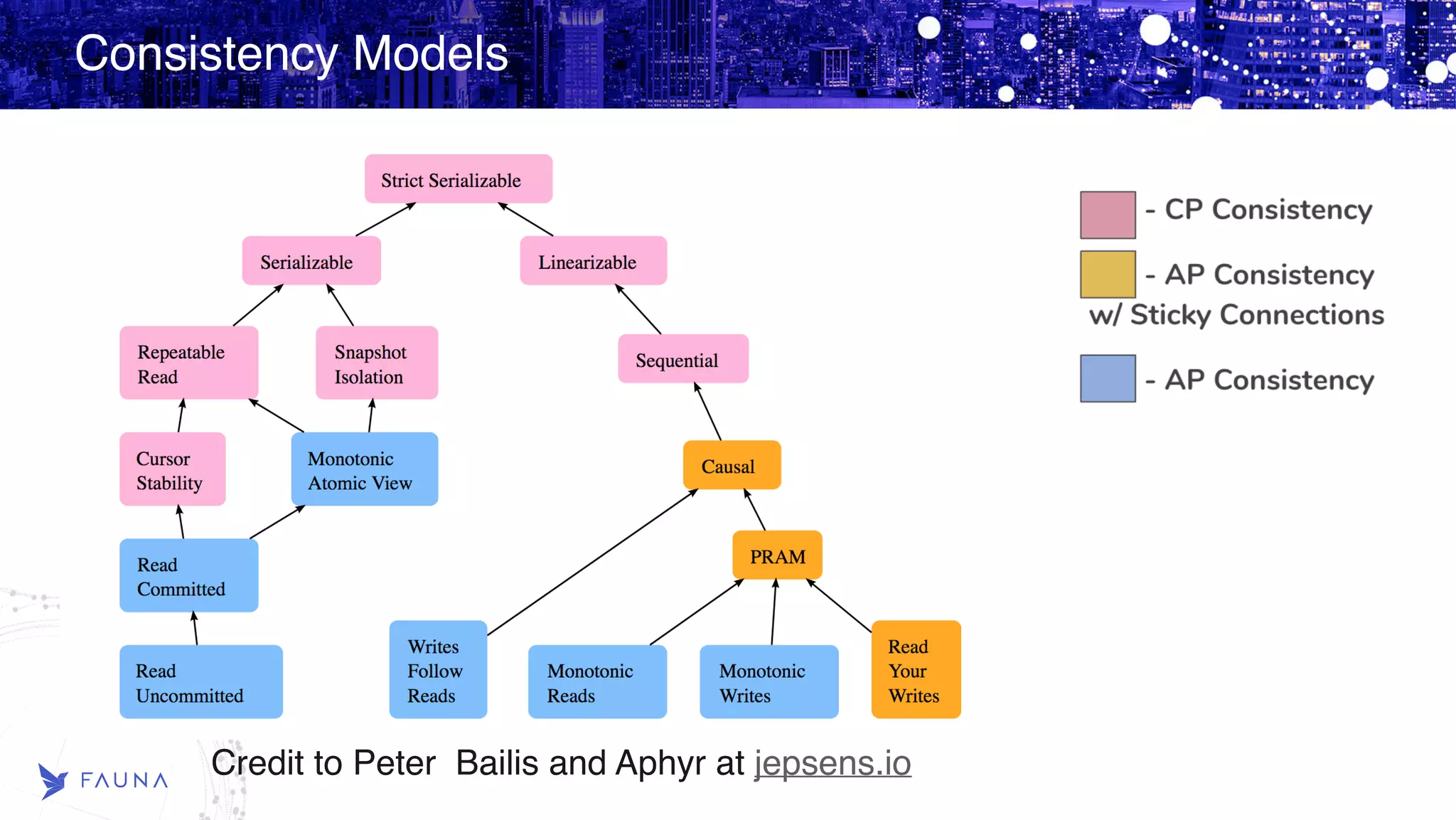

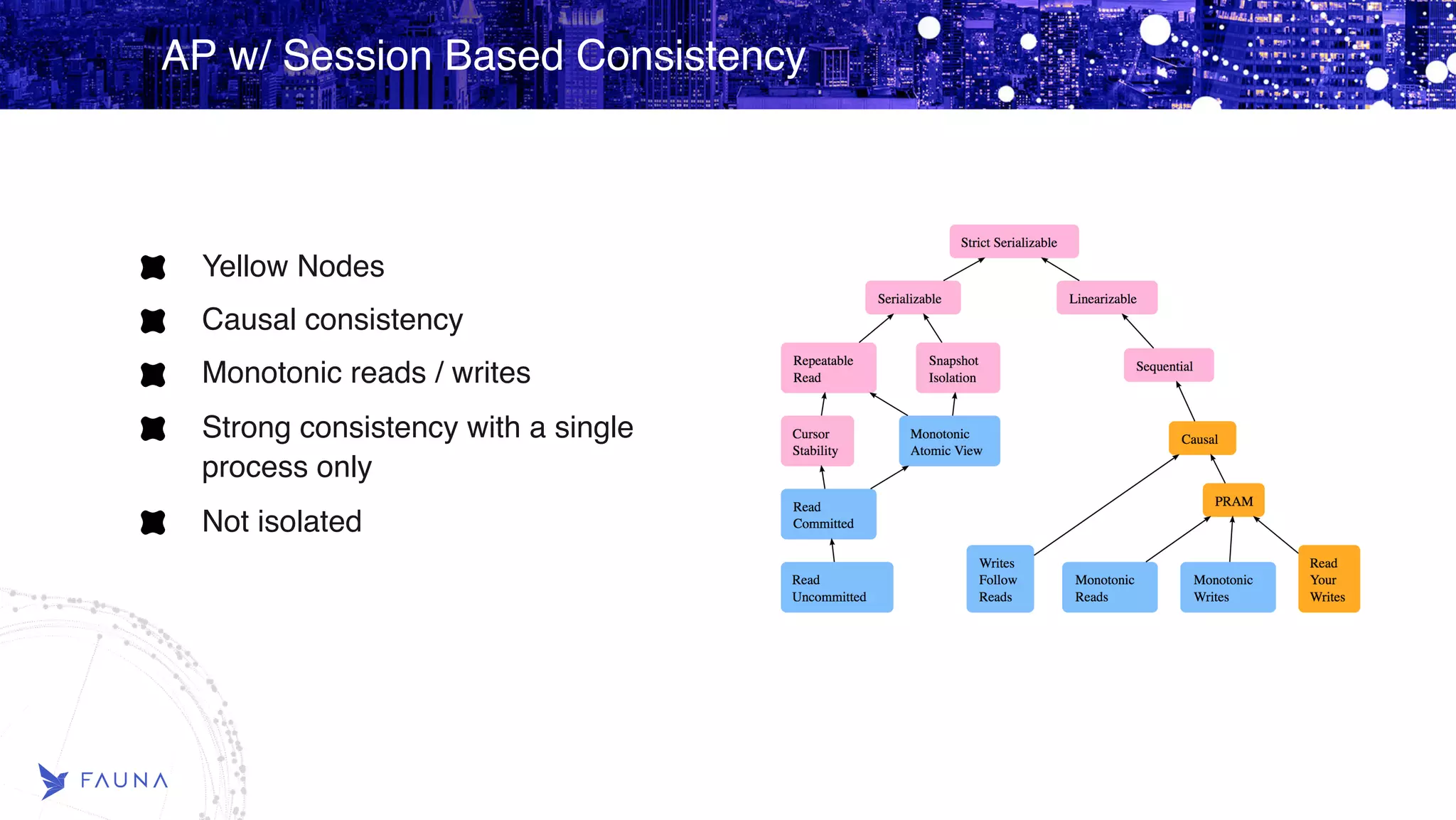

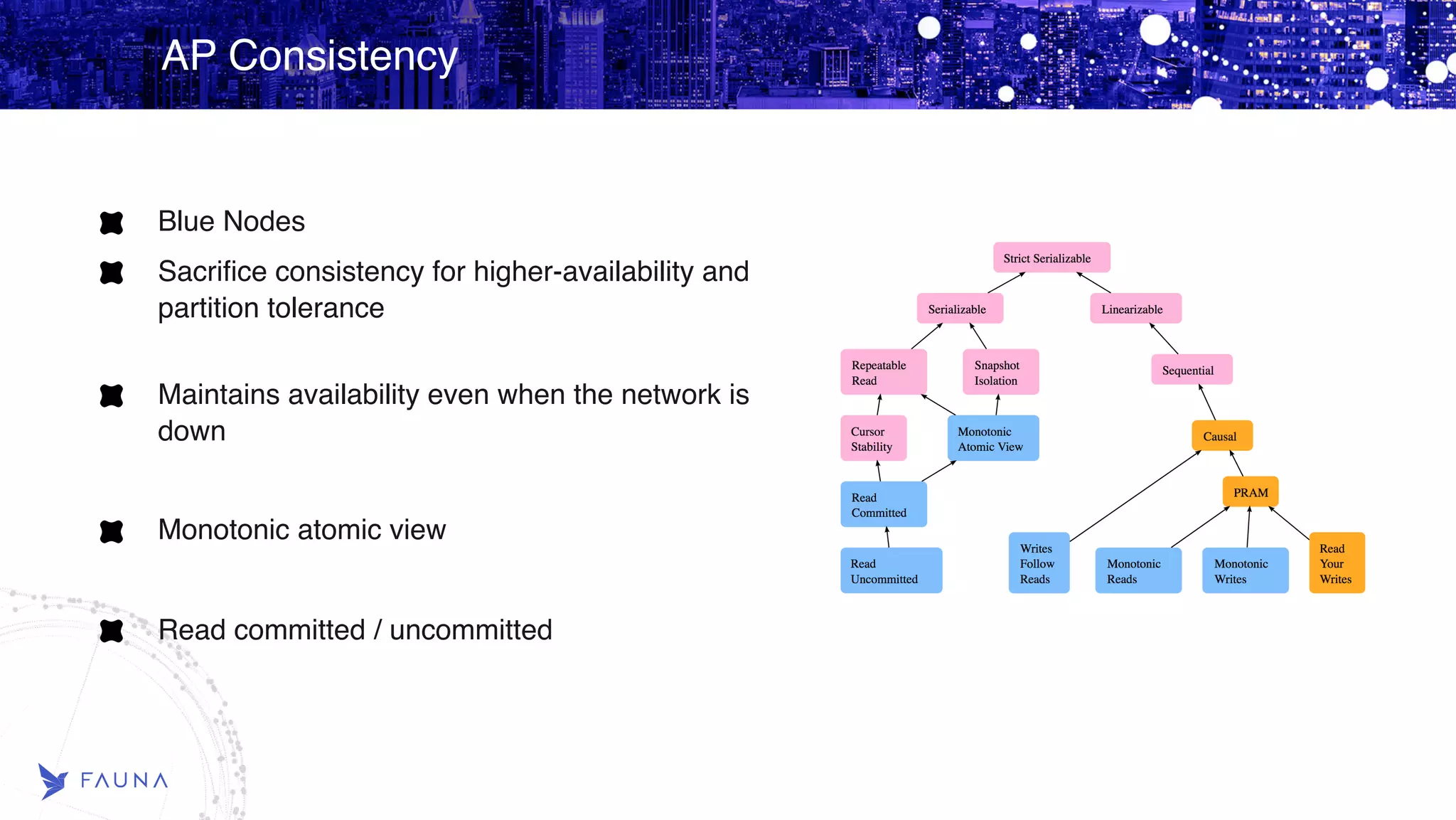

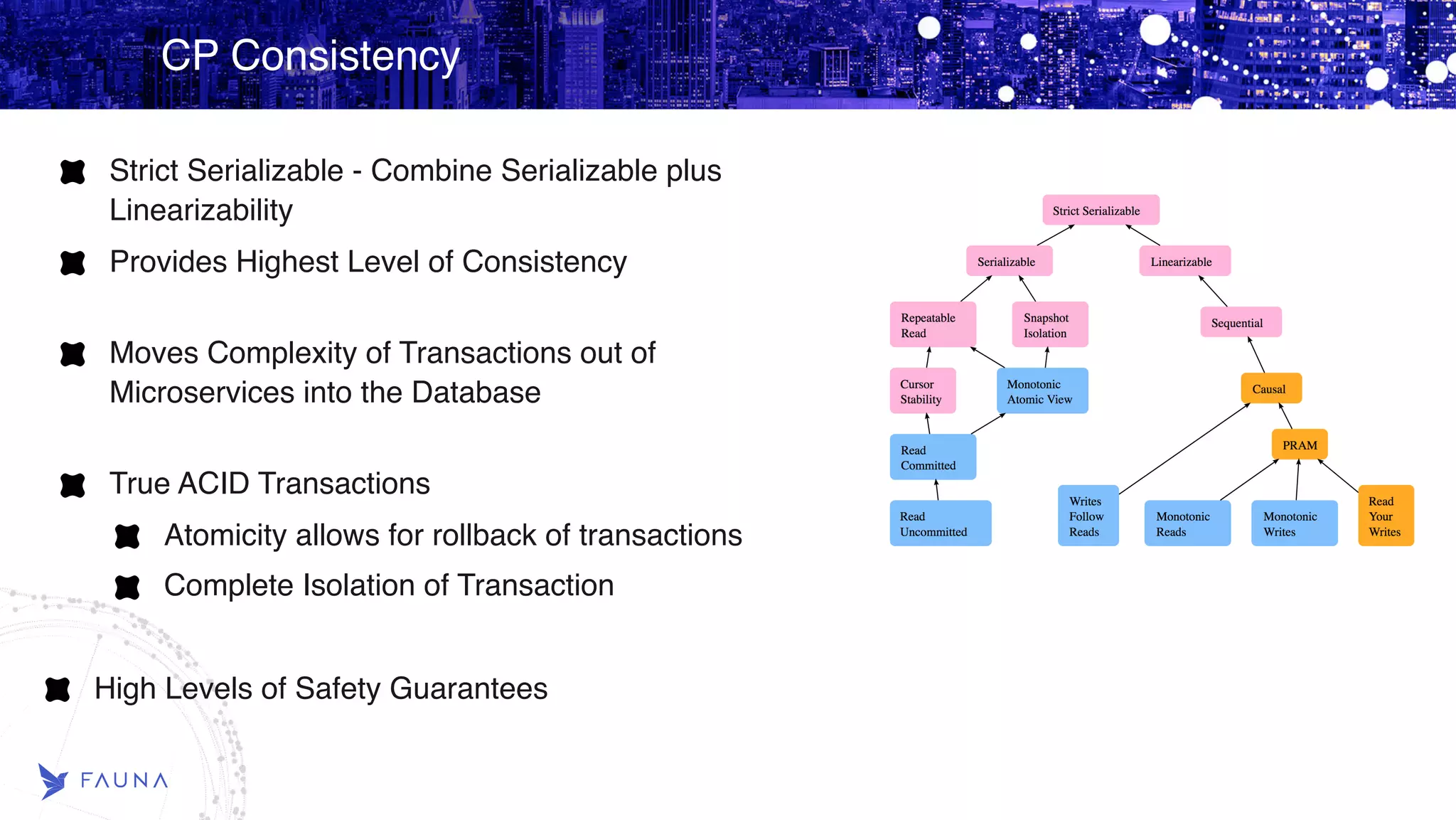

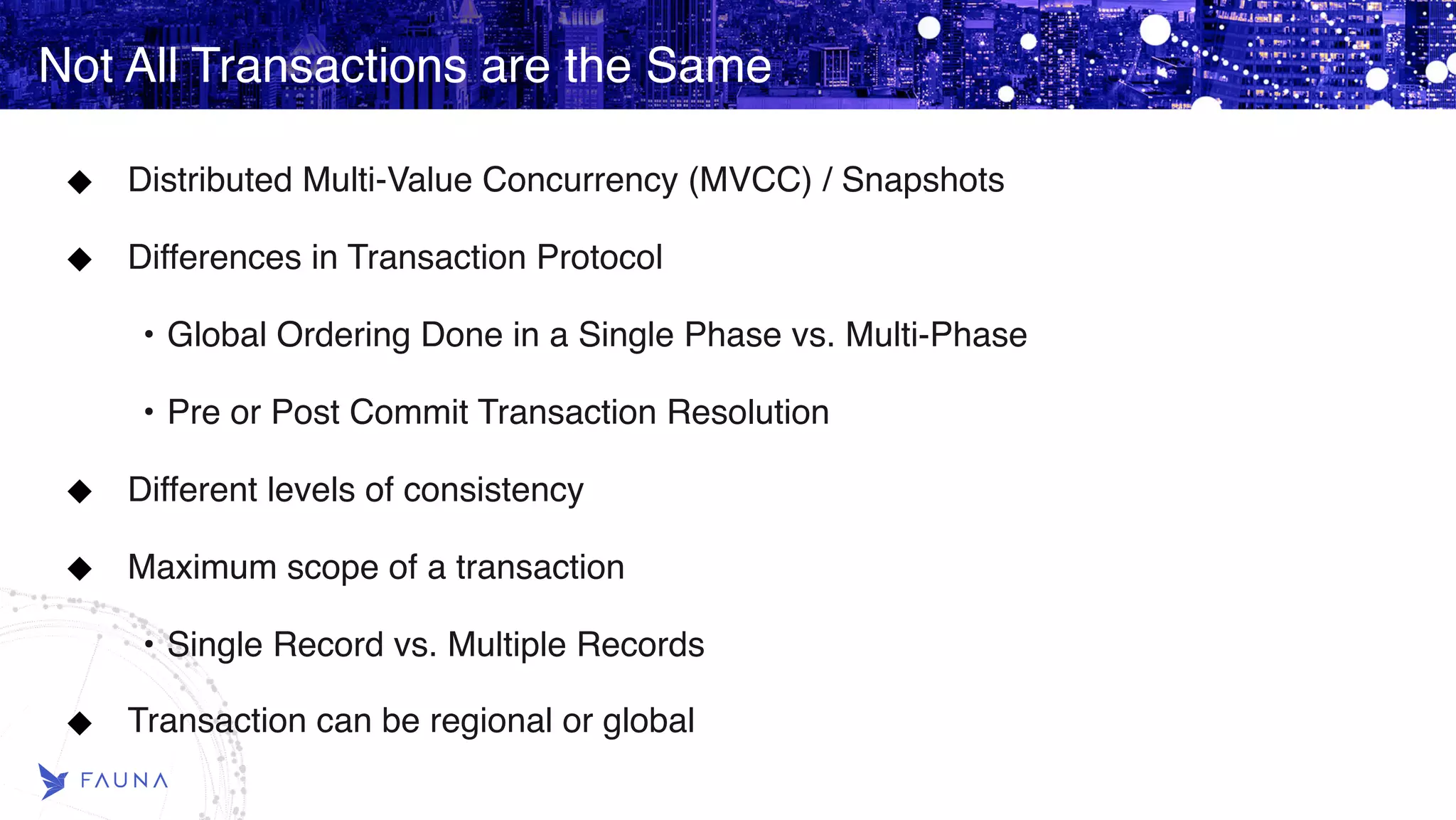

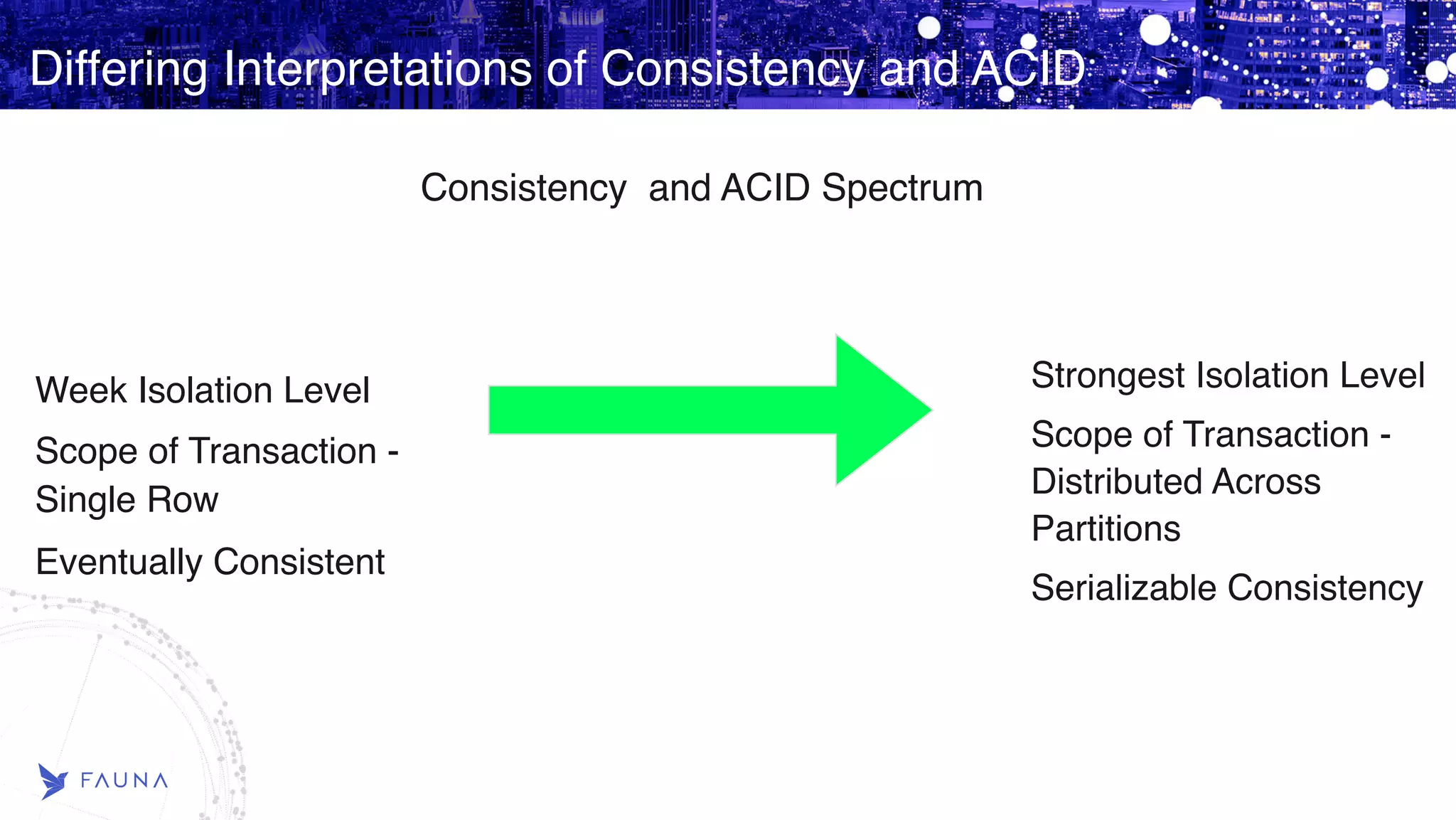

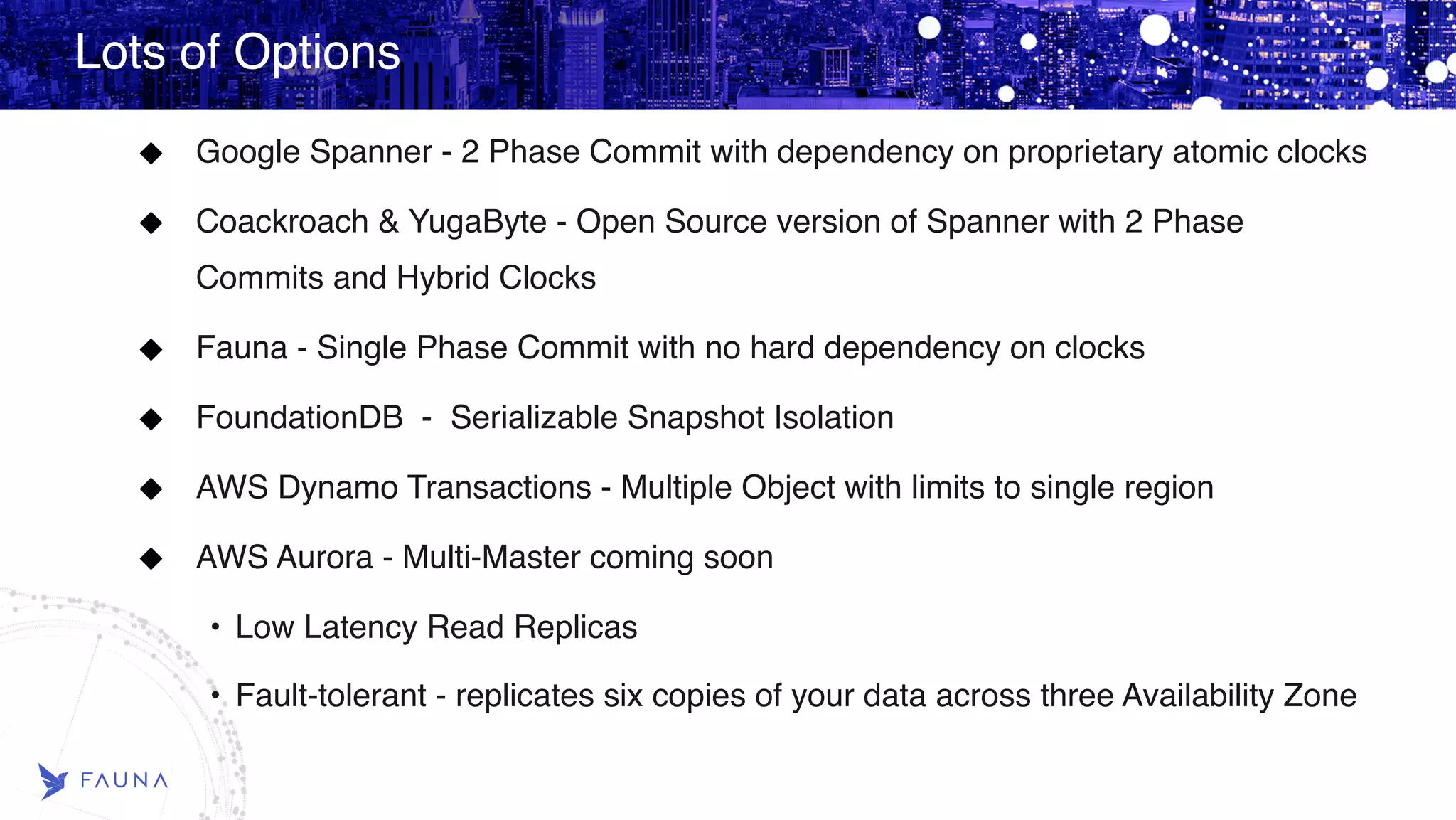

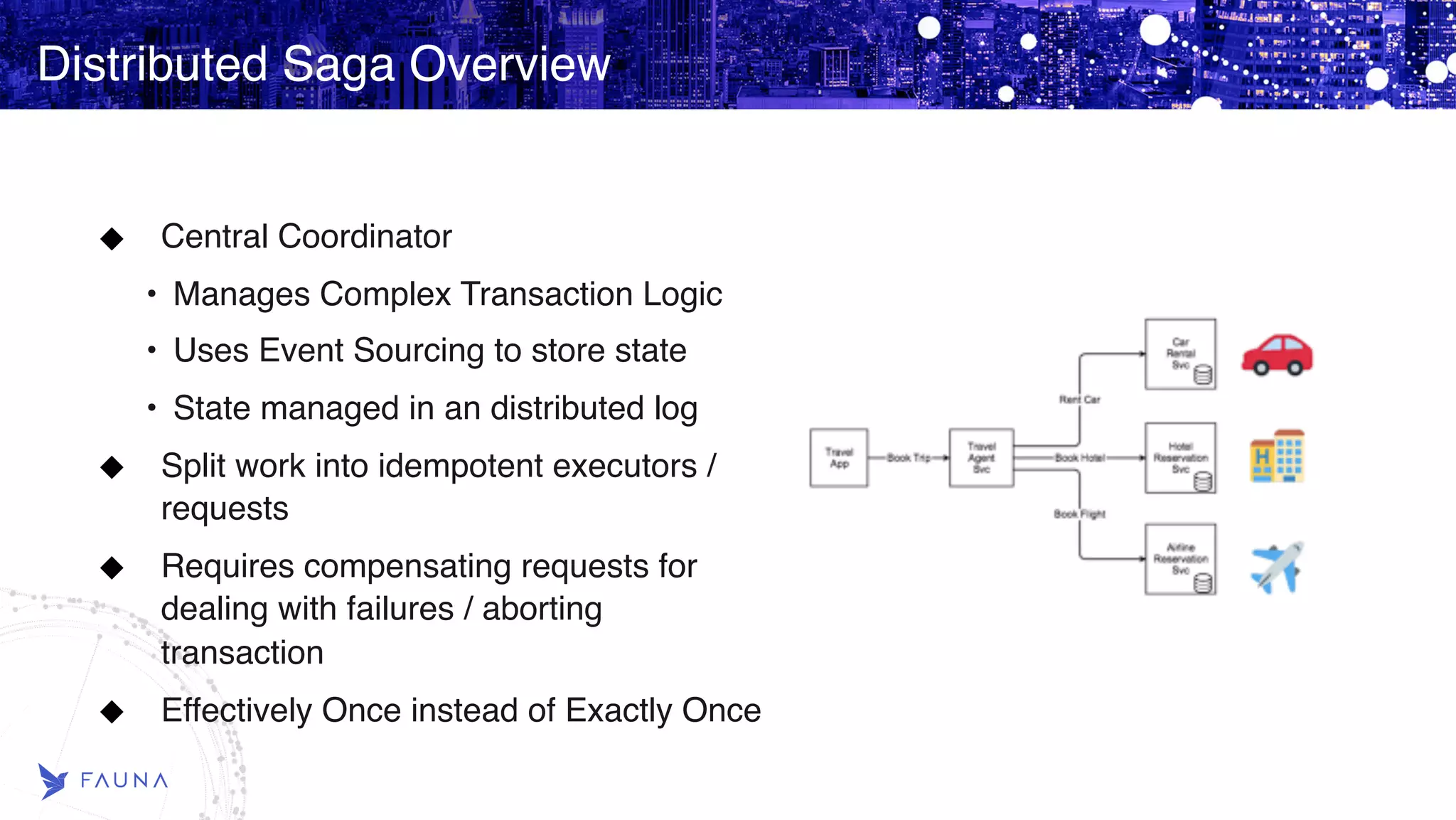

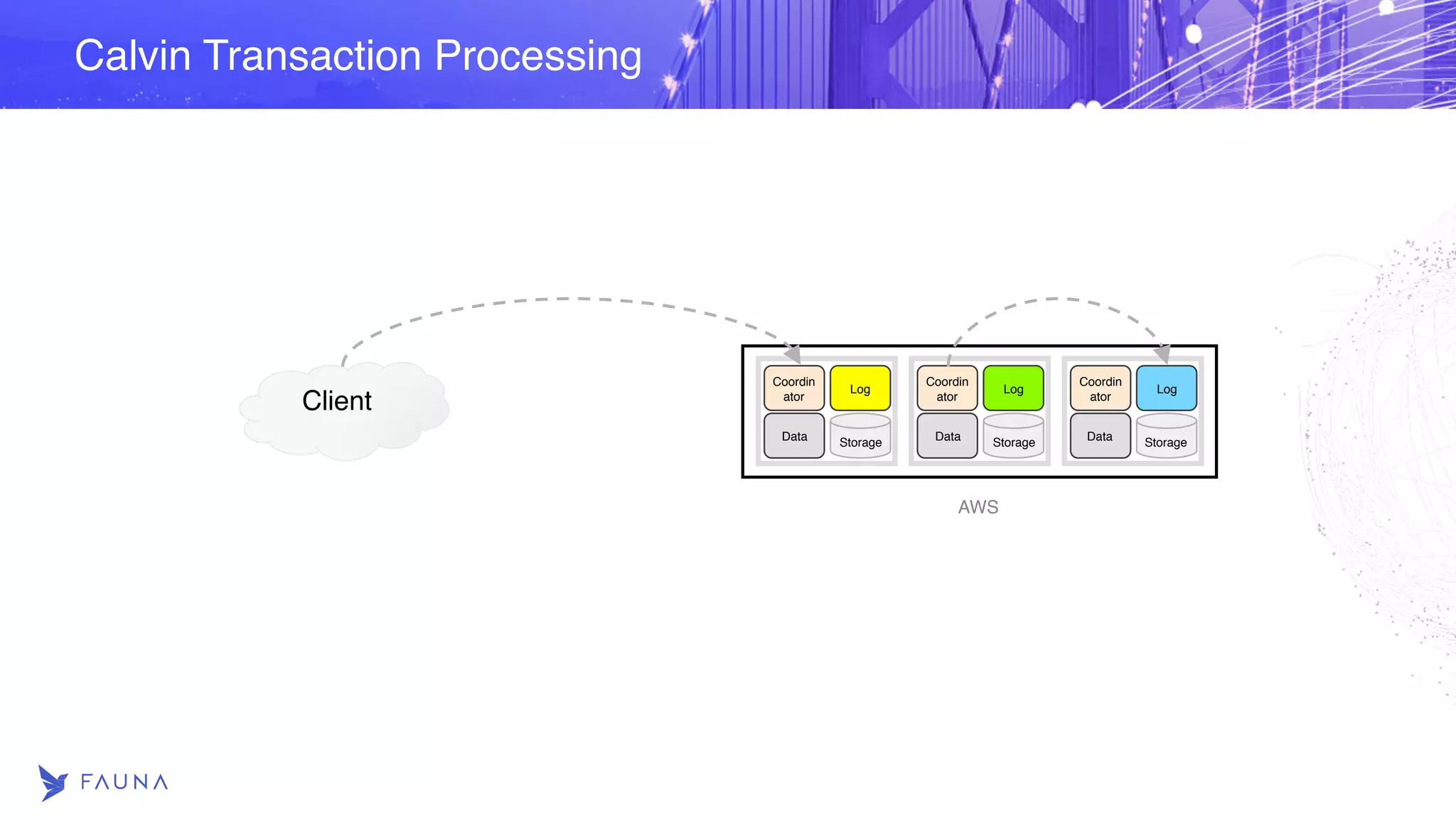

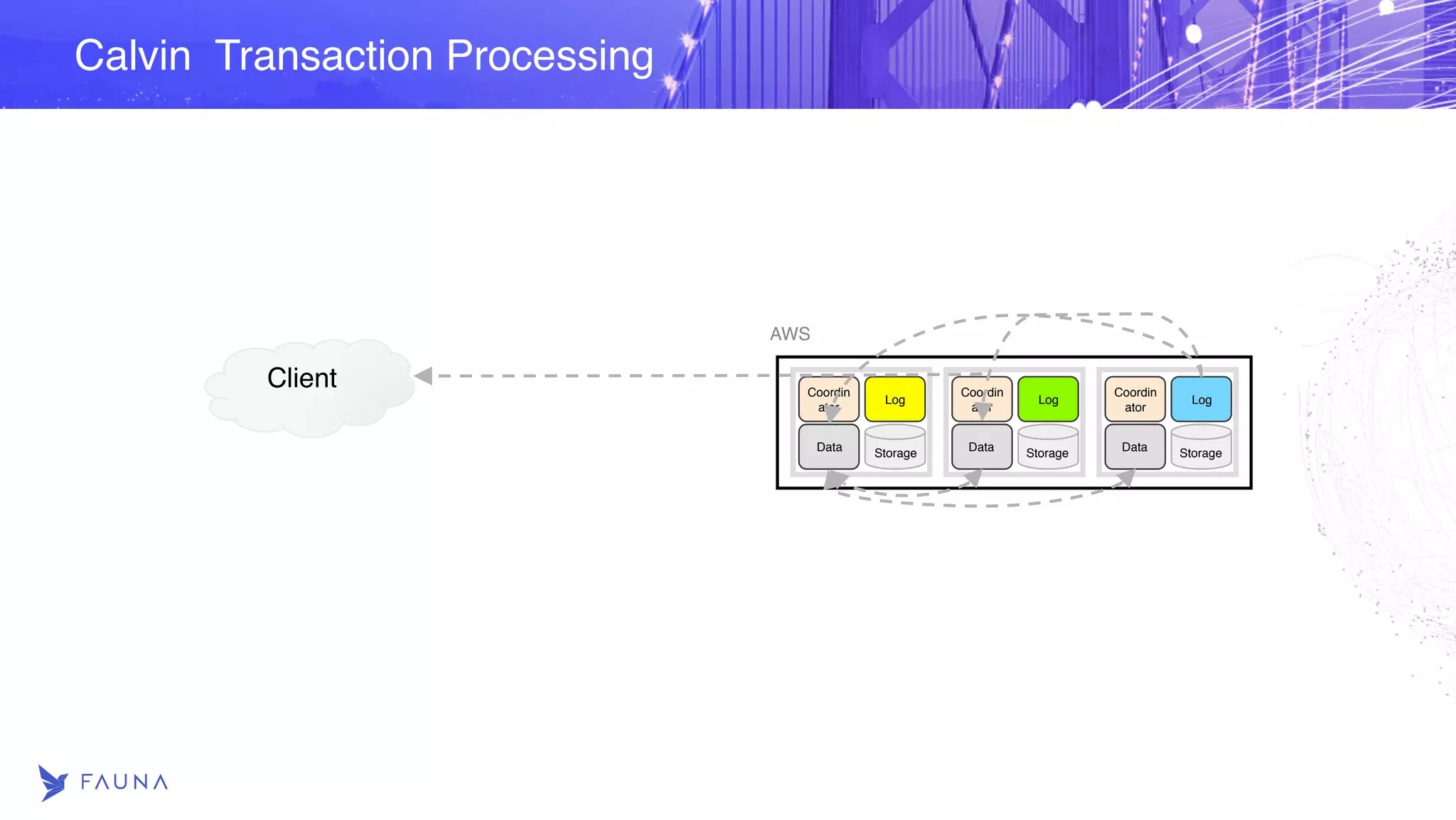

This document discusses data consistency patterns in cloud-native applications. It begins with an overview of data consistency challenges and consistency models, including eventual, causal, and linearizable consistency. It then covers consistency at the application tier, using patterns such as sticky sessions, as well as distributed databases that provide strong consistency with global transactions. Specific databases discussed include Spanner, CockroachDB, YugaByte, FaunaDB, and FoundationDB. The document also covers working with eventual consistency through patterns such as CRDTs, sagas, and lightweight transactions. It concludes with an in-depth discussion of Calvin, the transaction protocol used by FaunaDB to provide deterministic, serializable transactions at global scale.