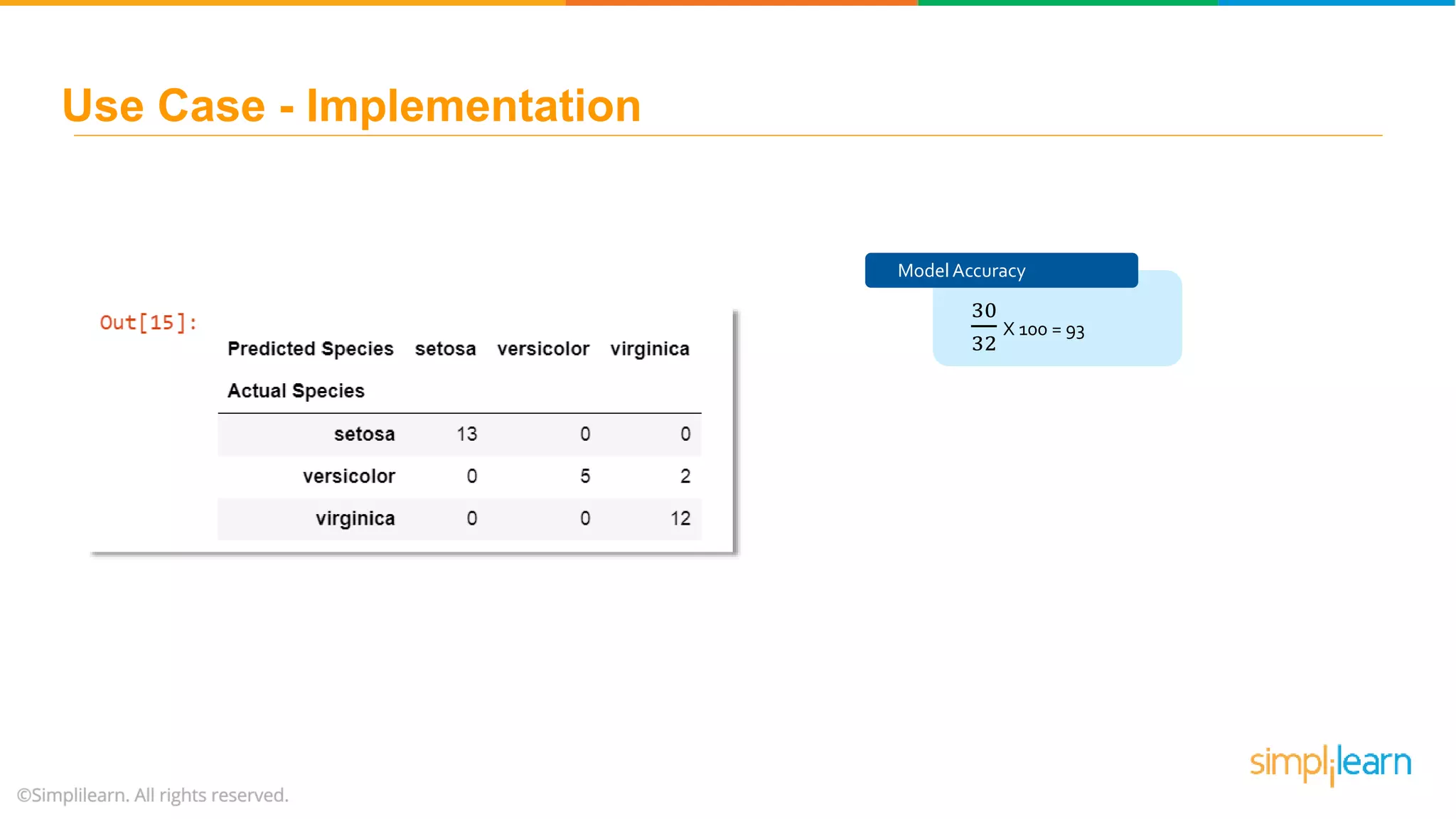

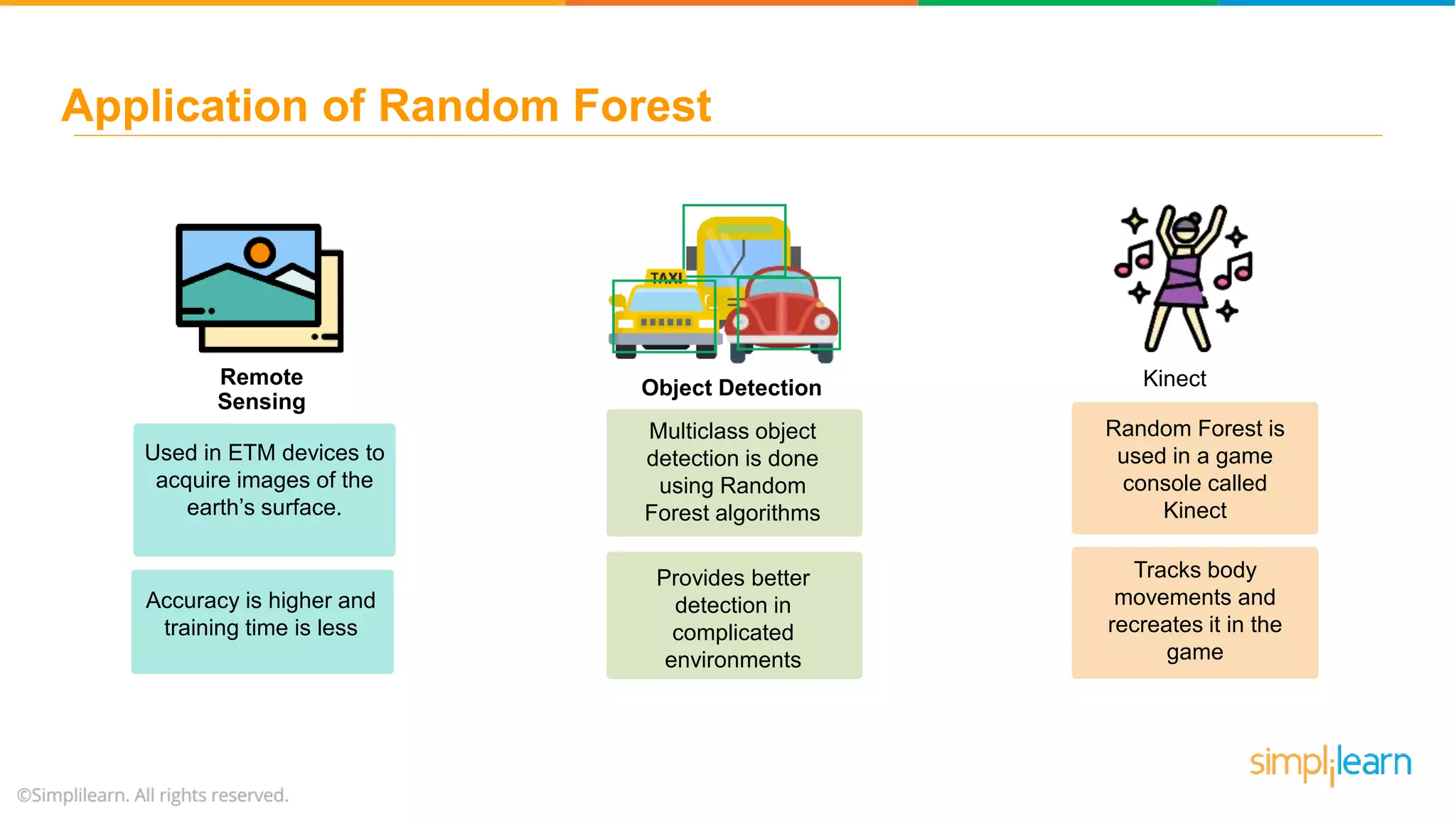

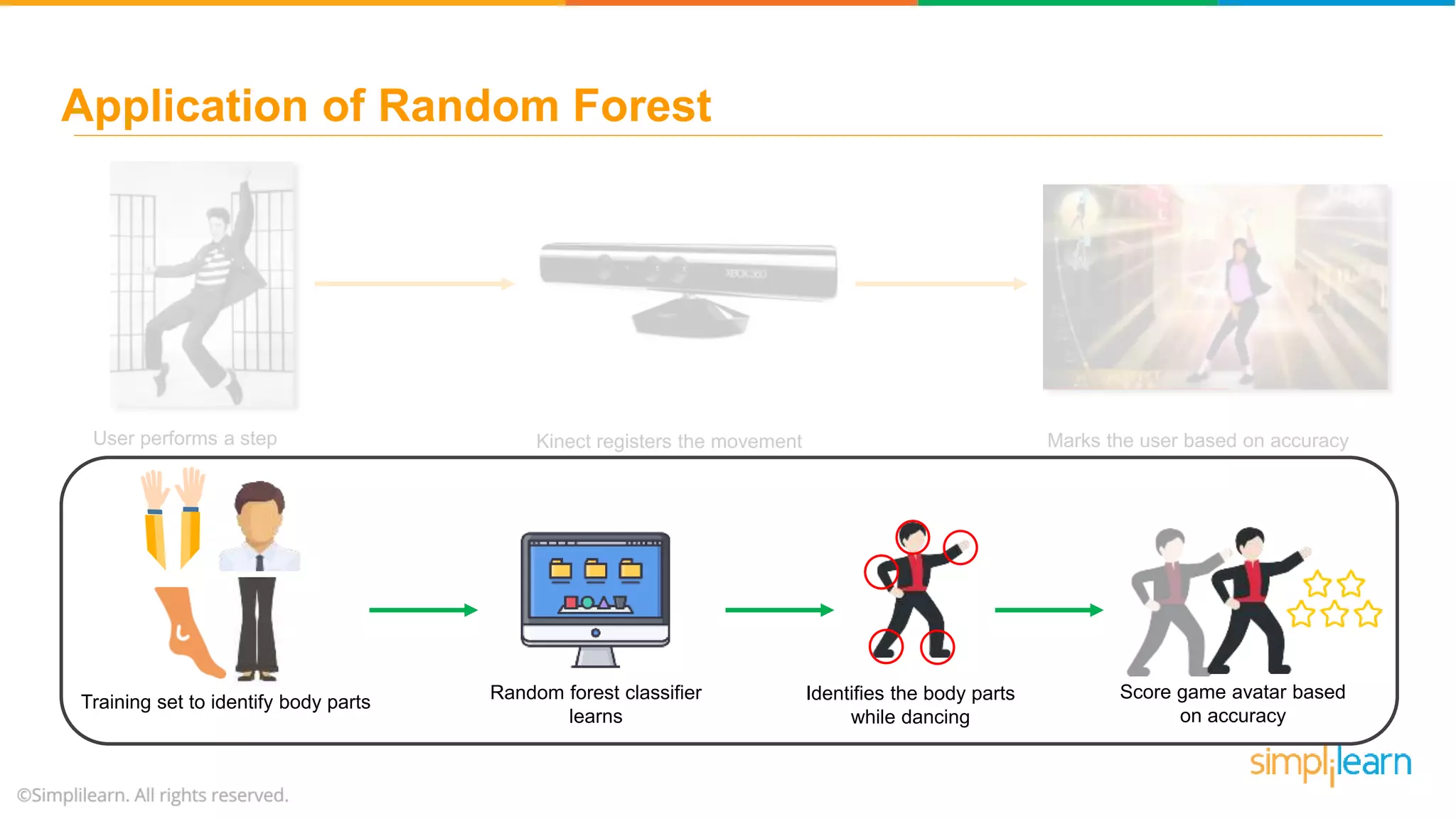

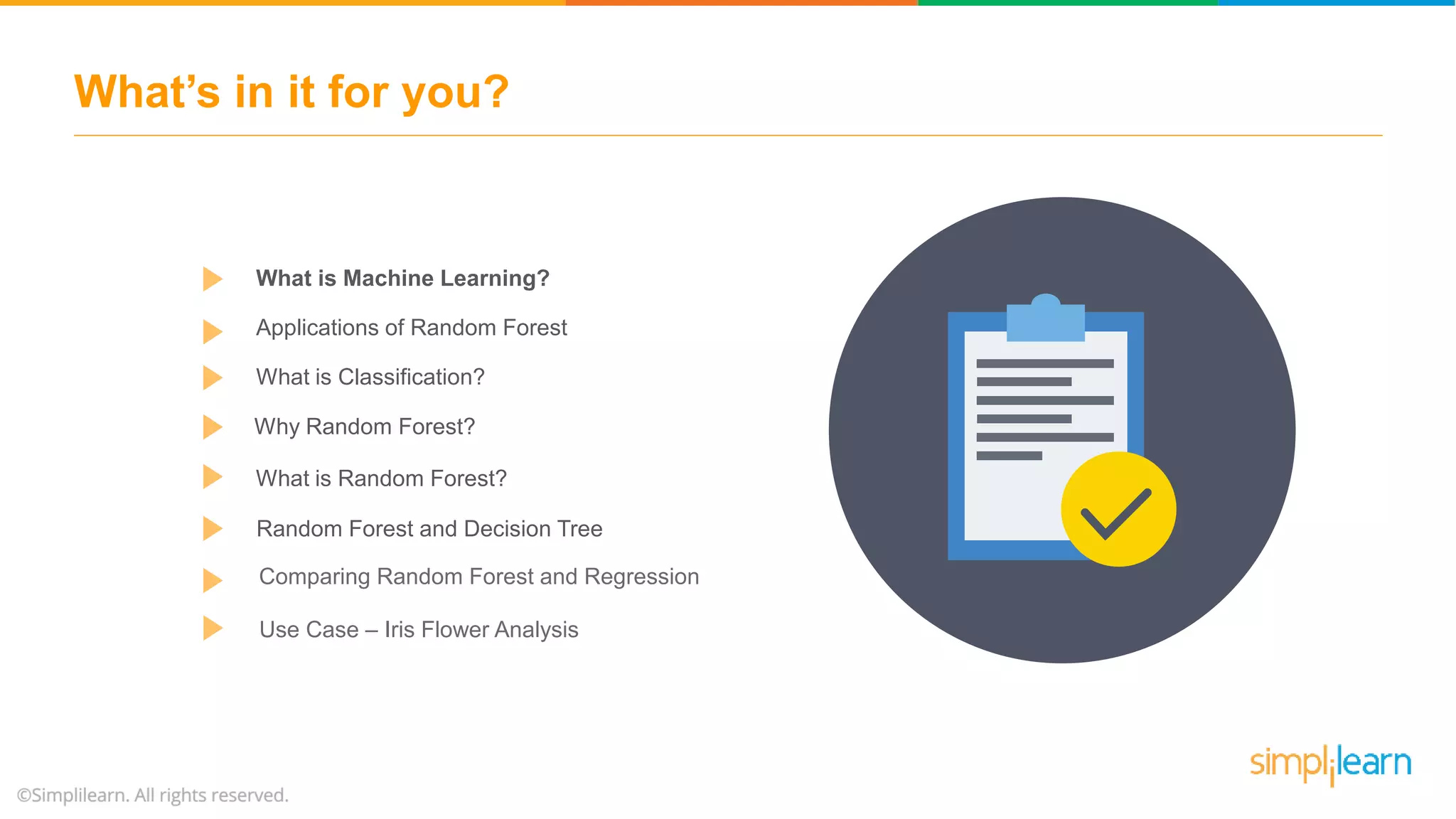

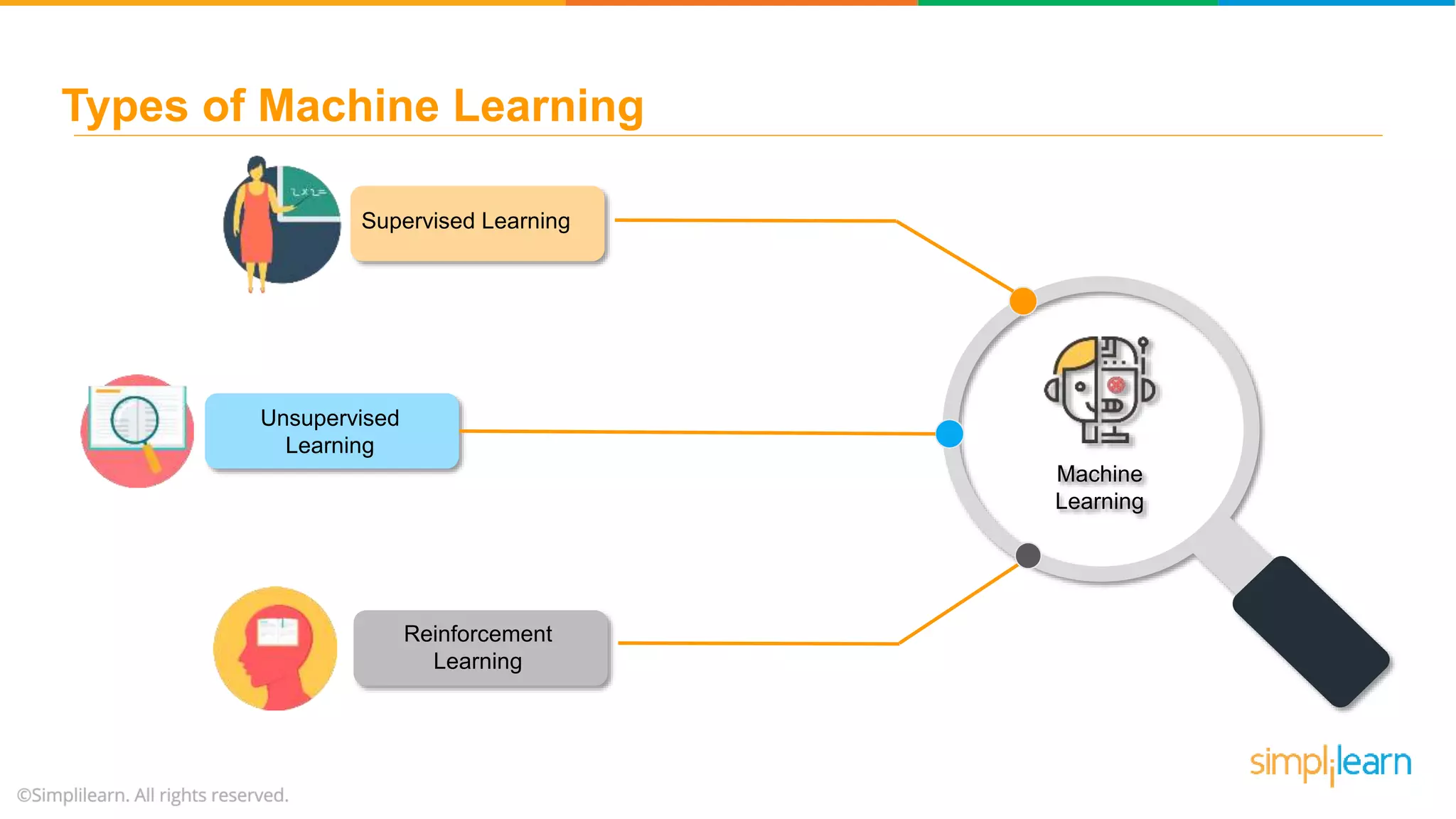

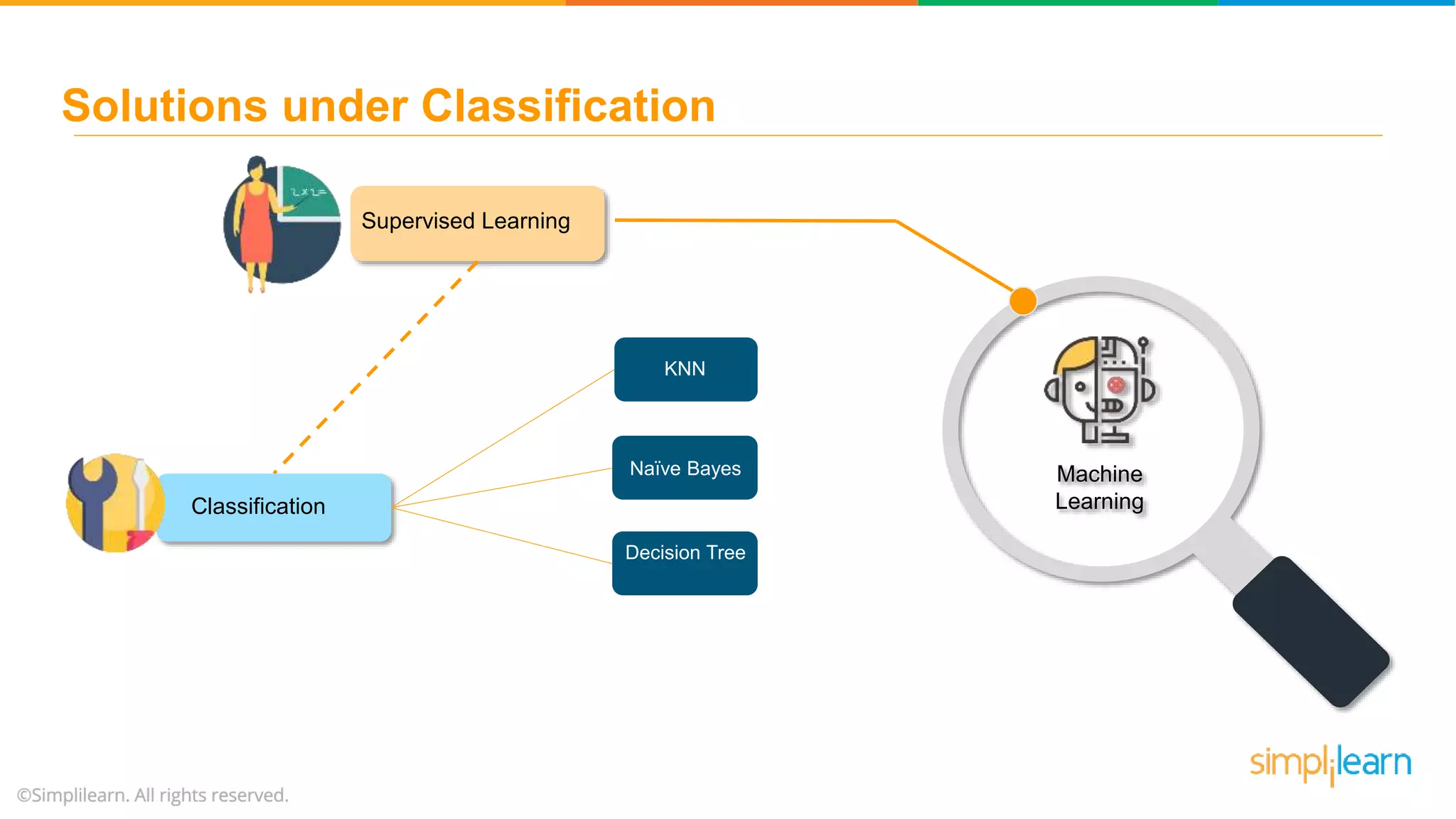

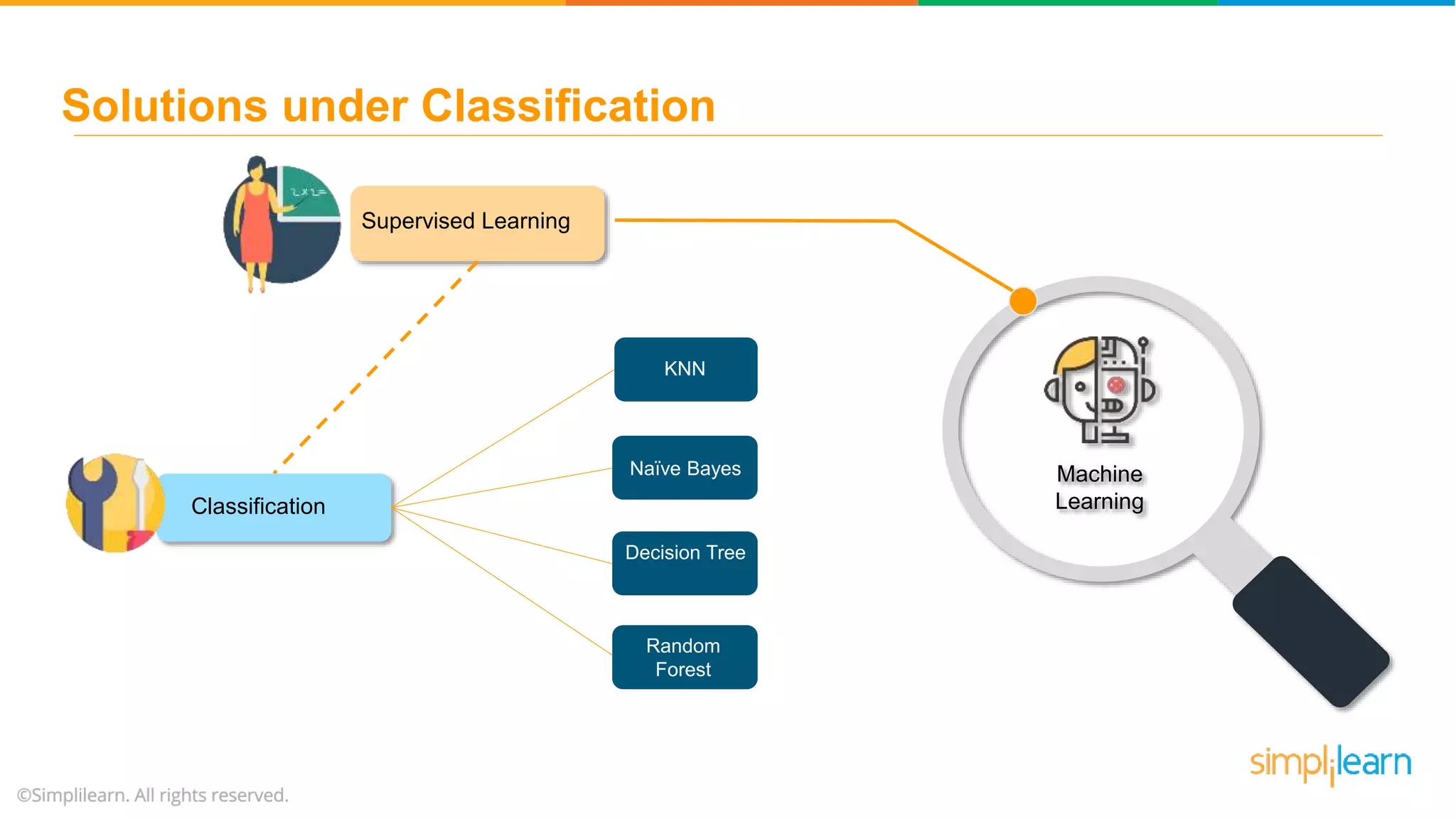

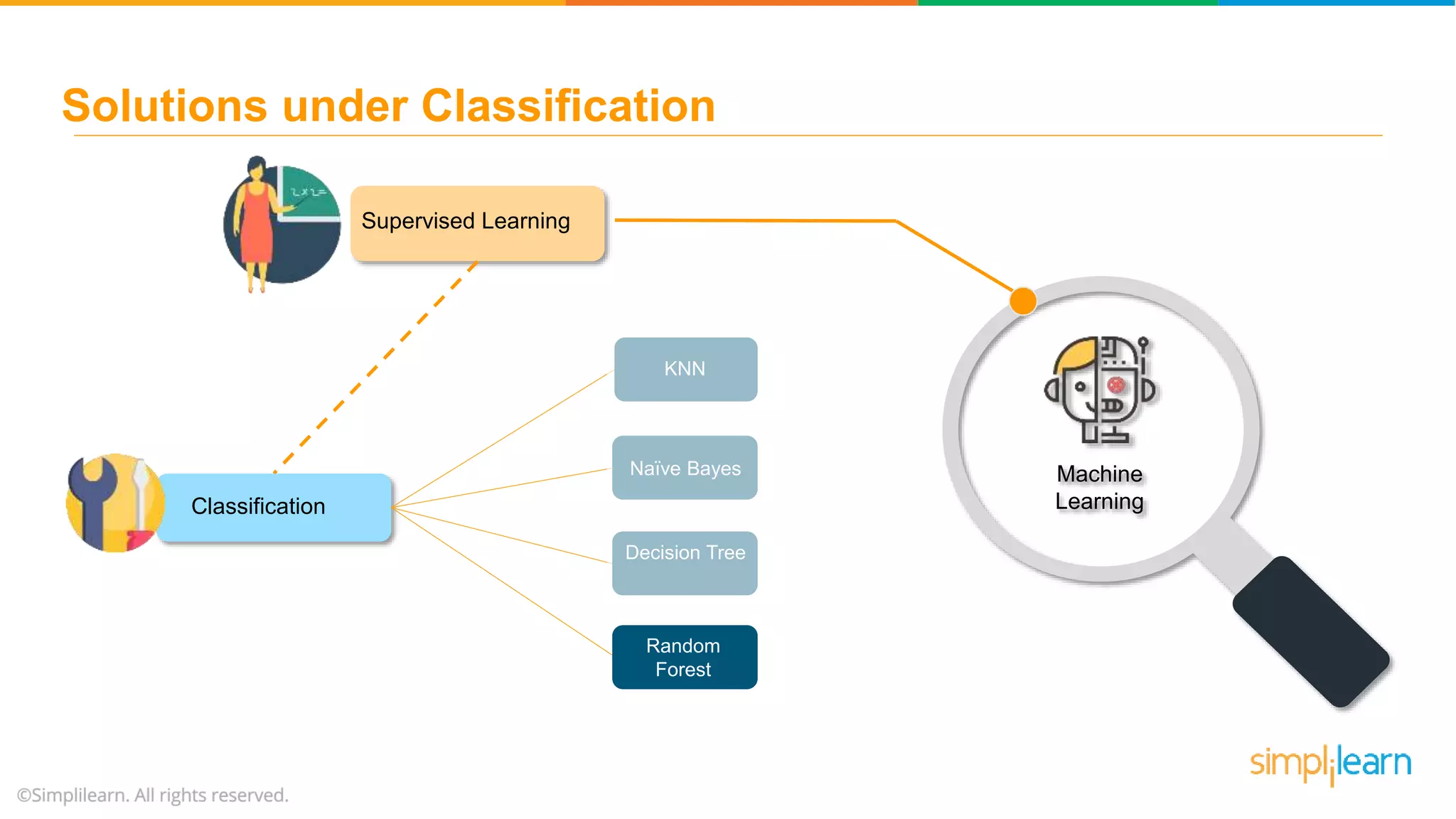

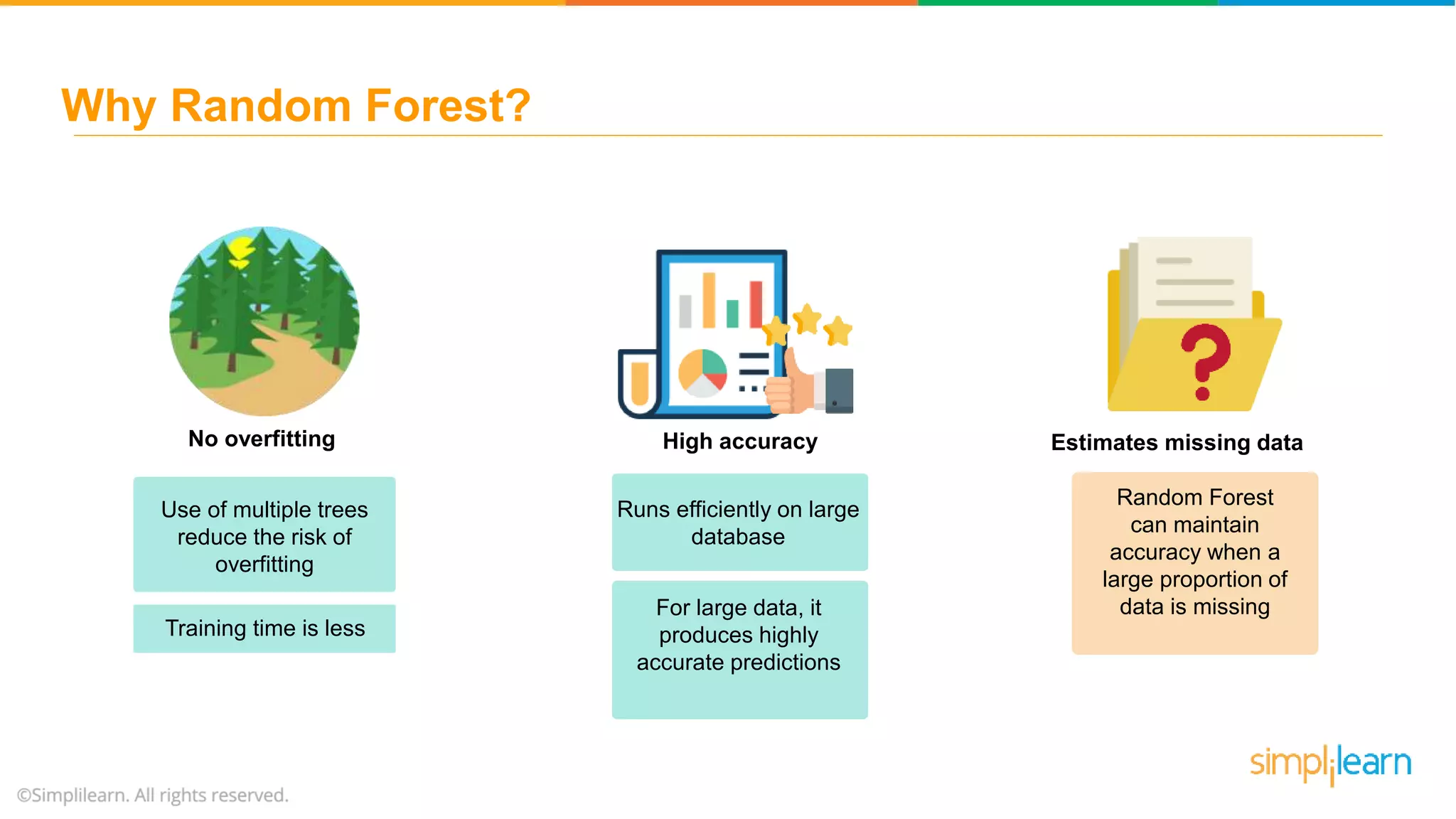

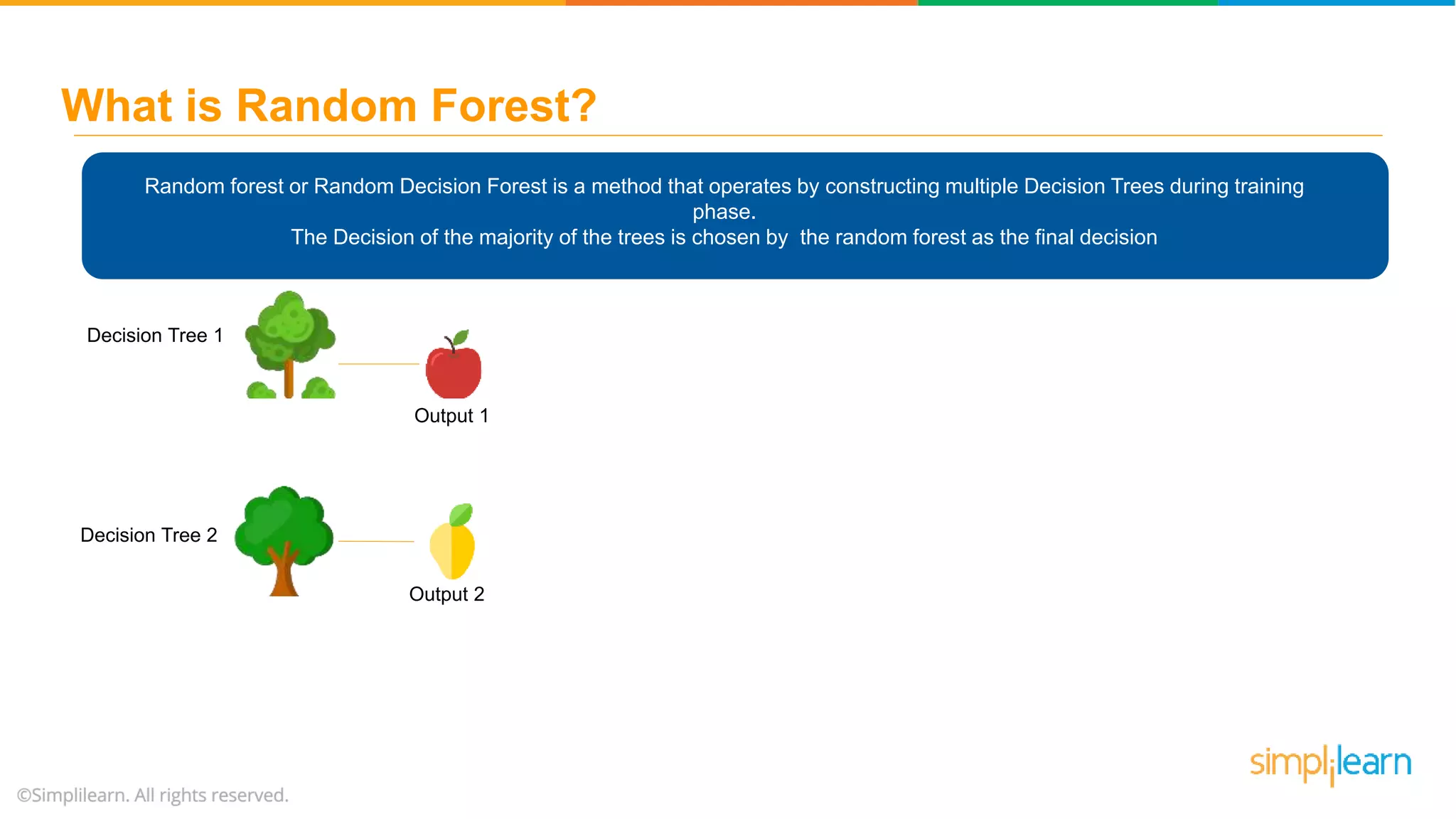

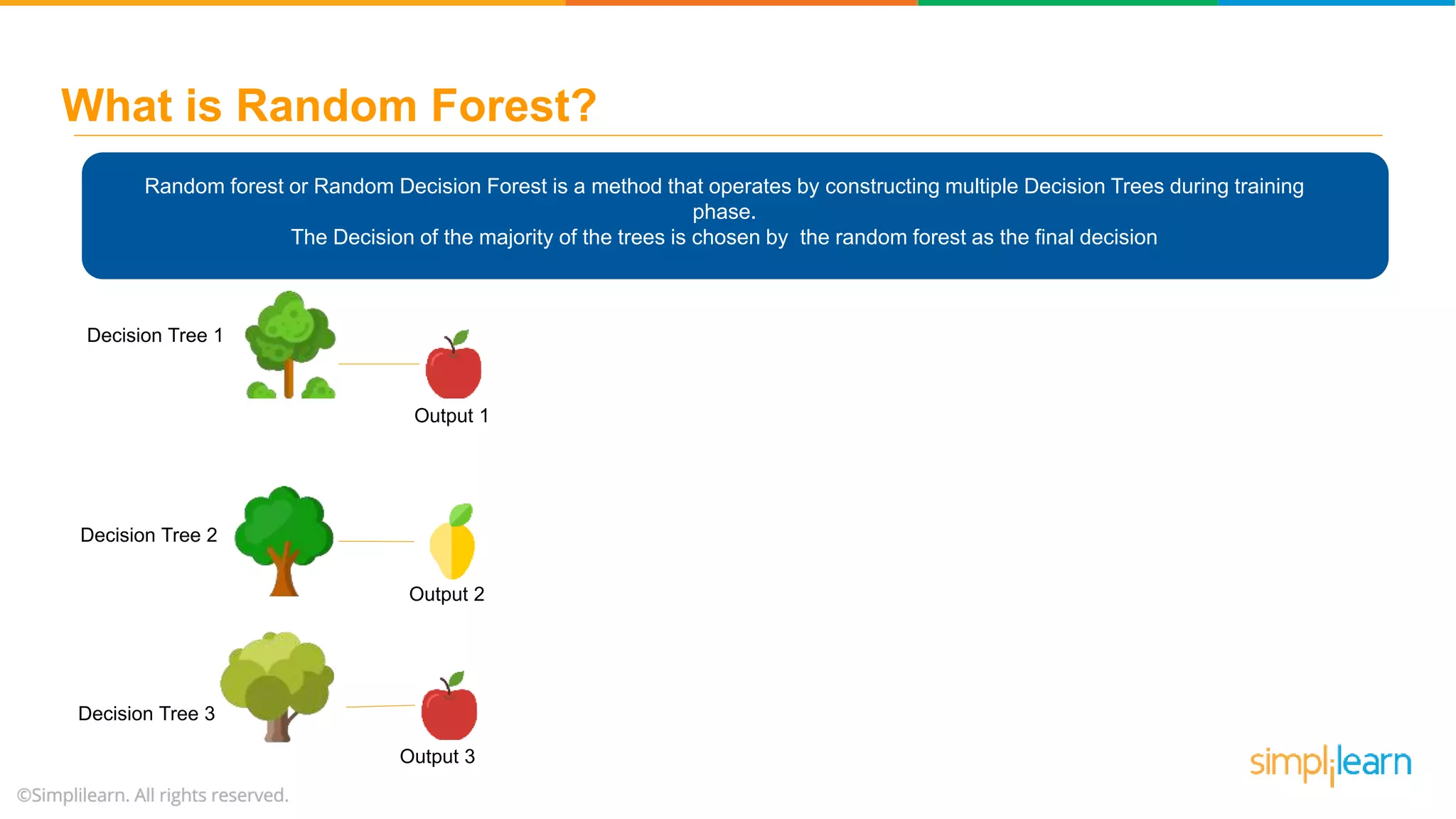

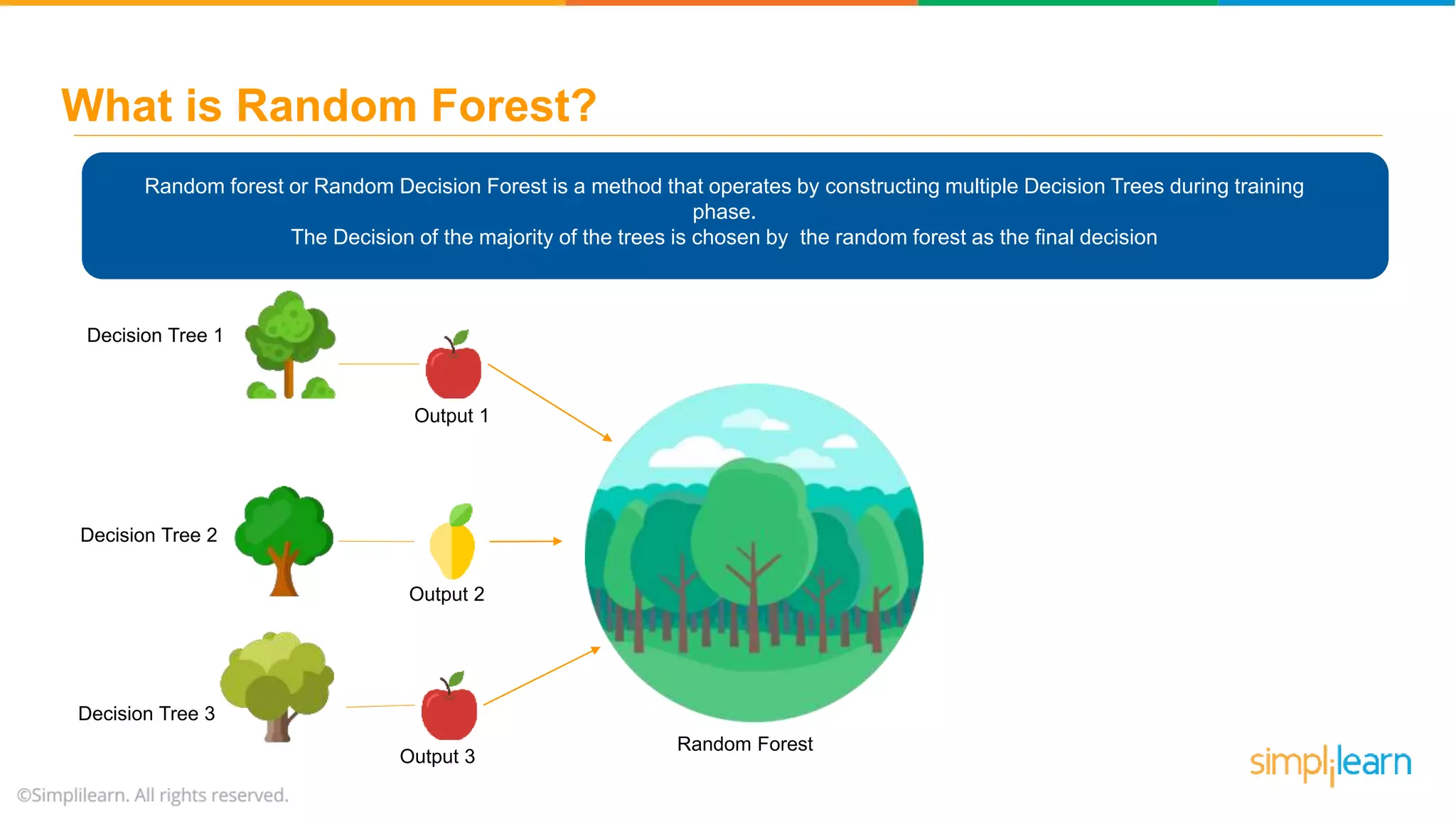

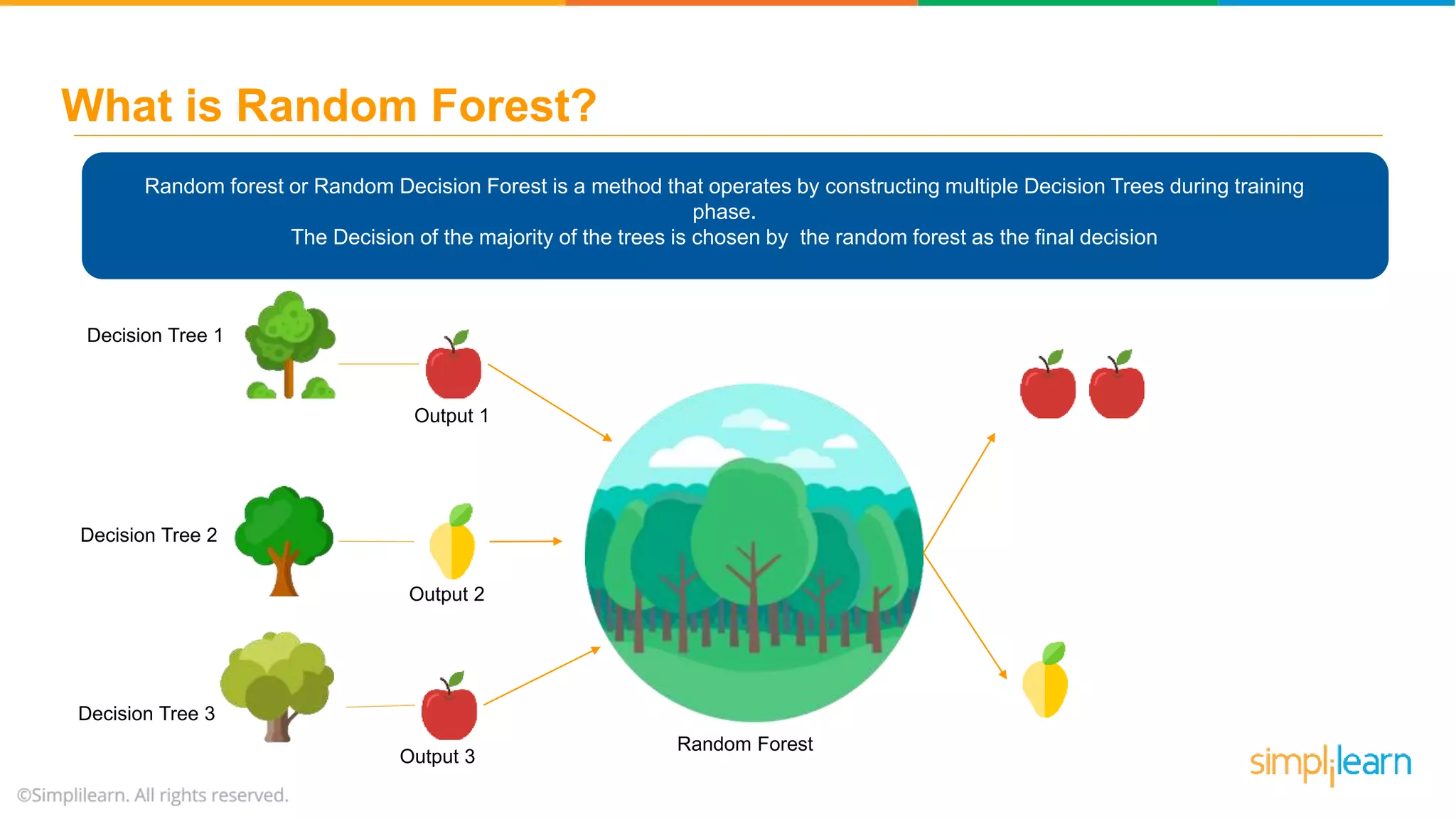

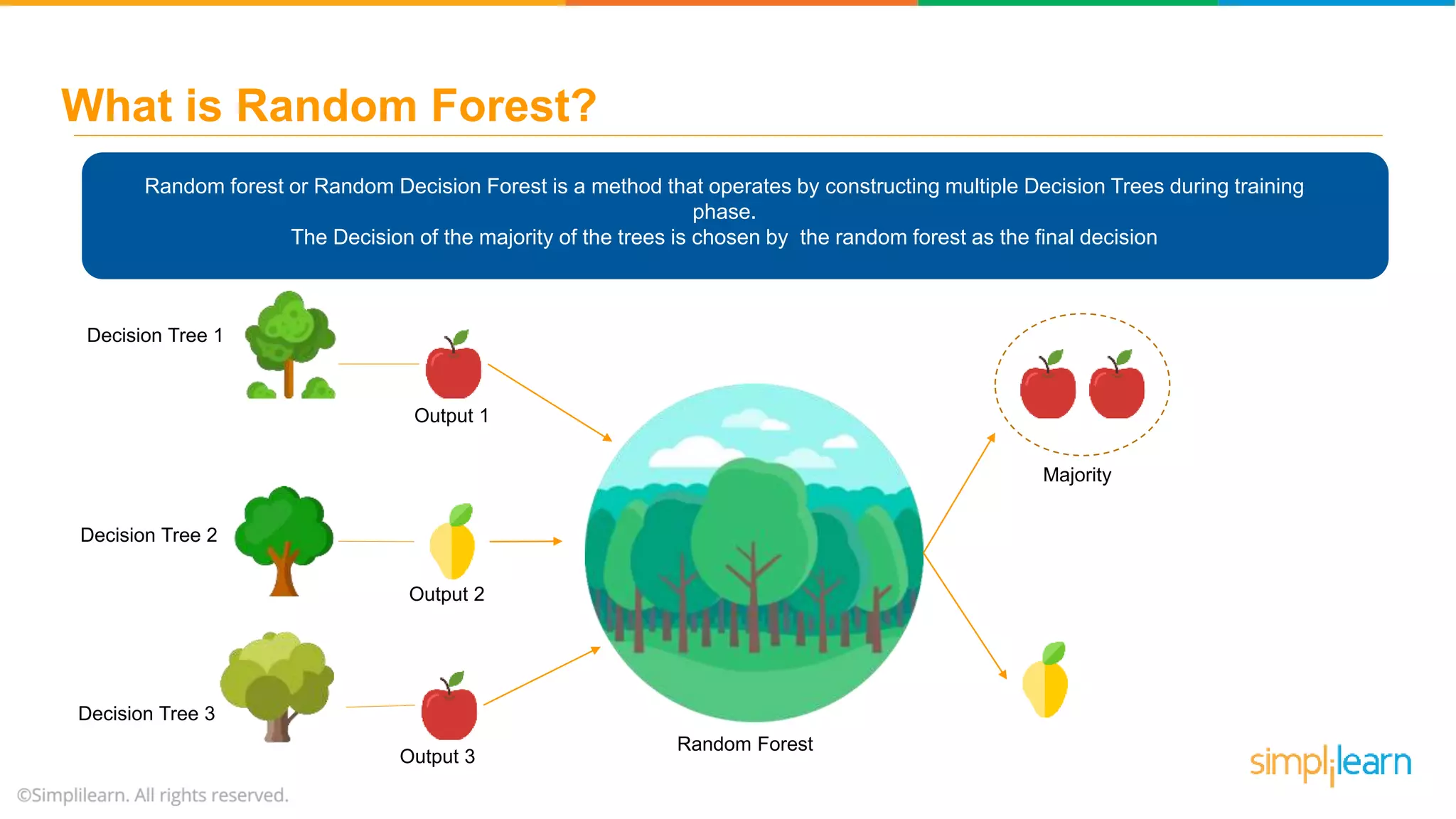

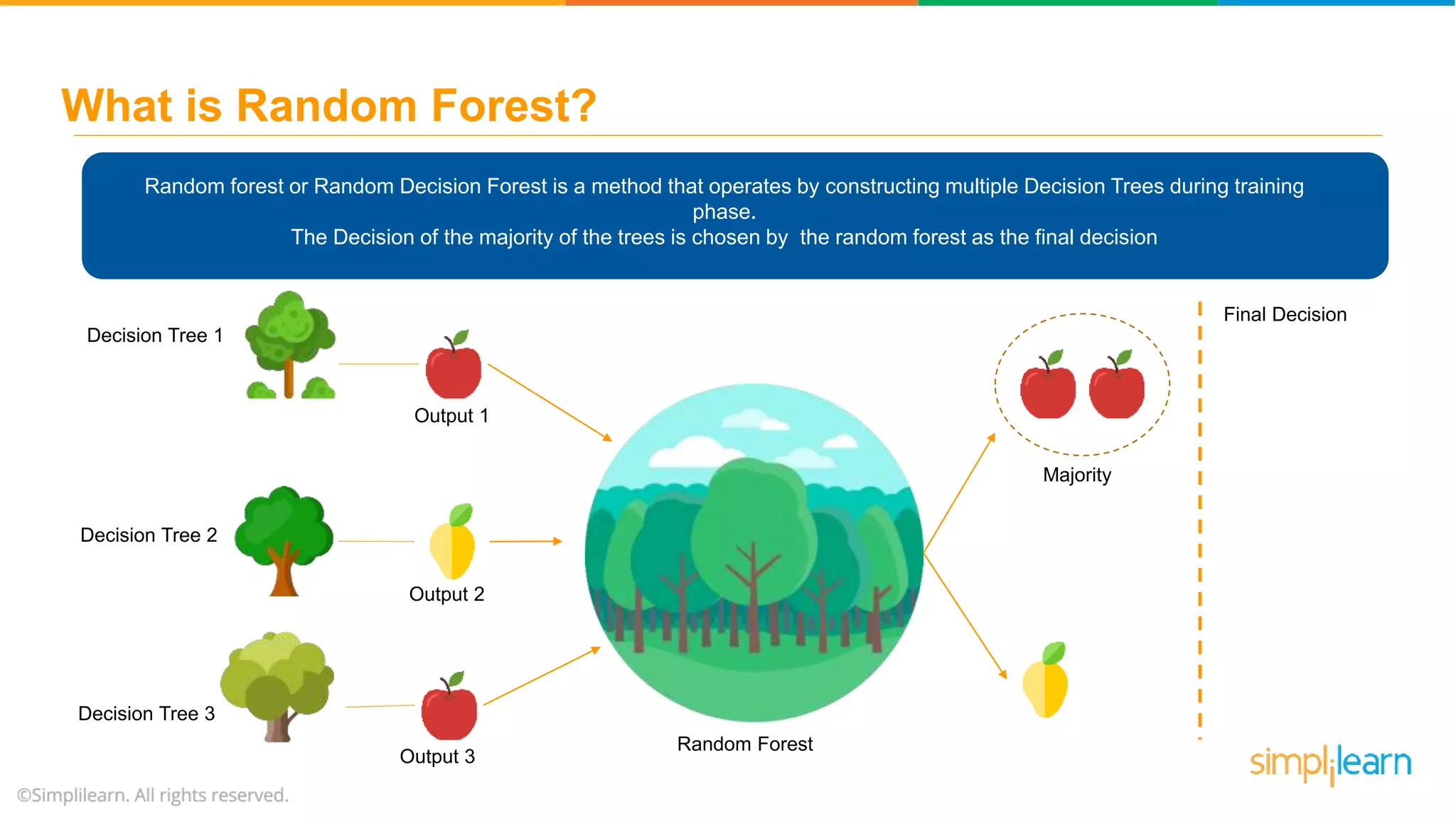

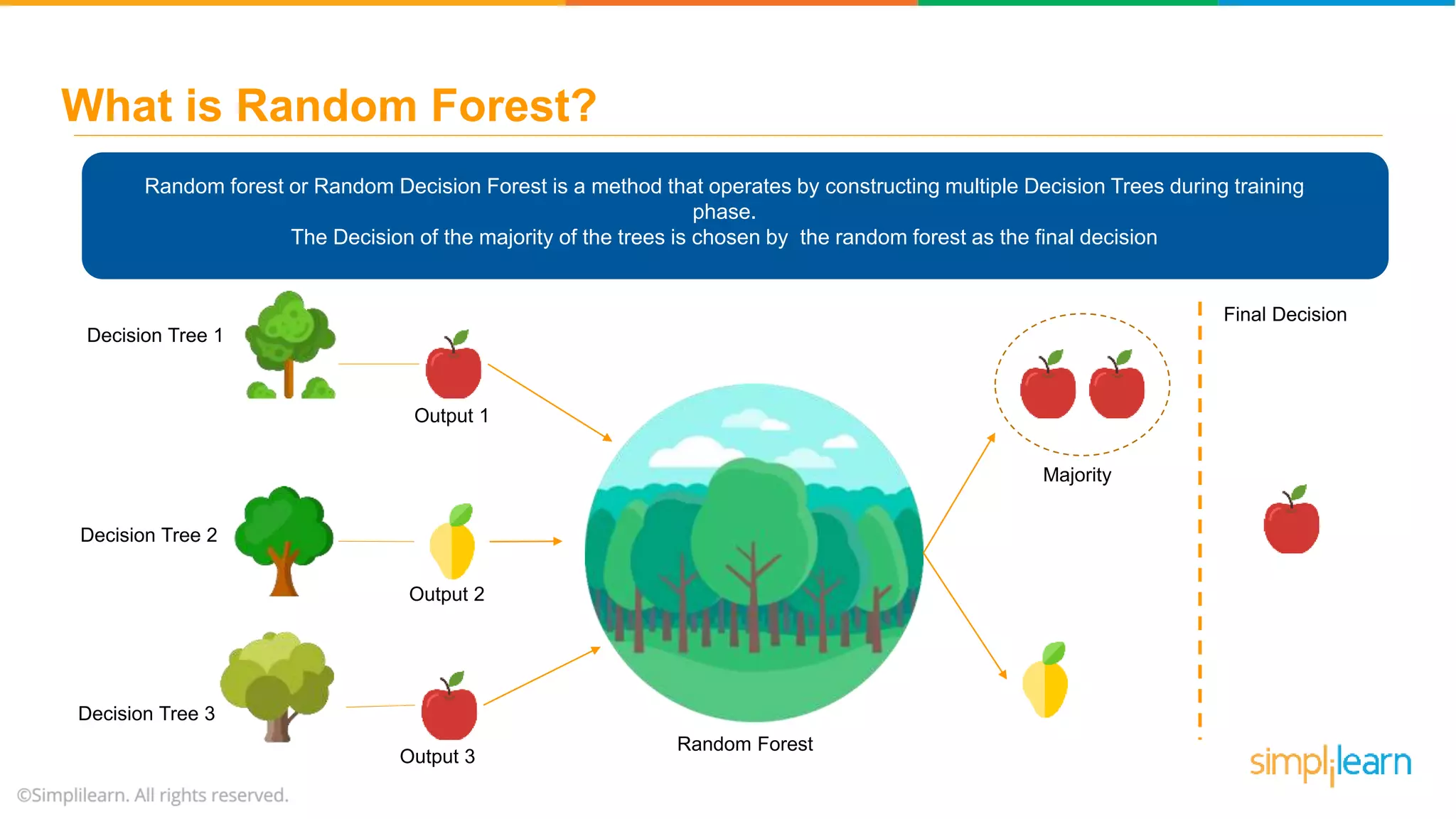

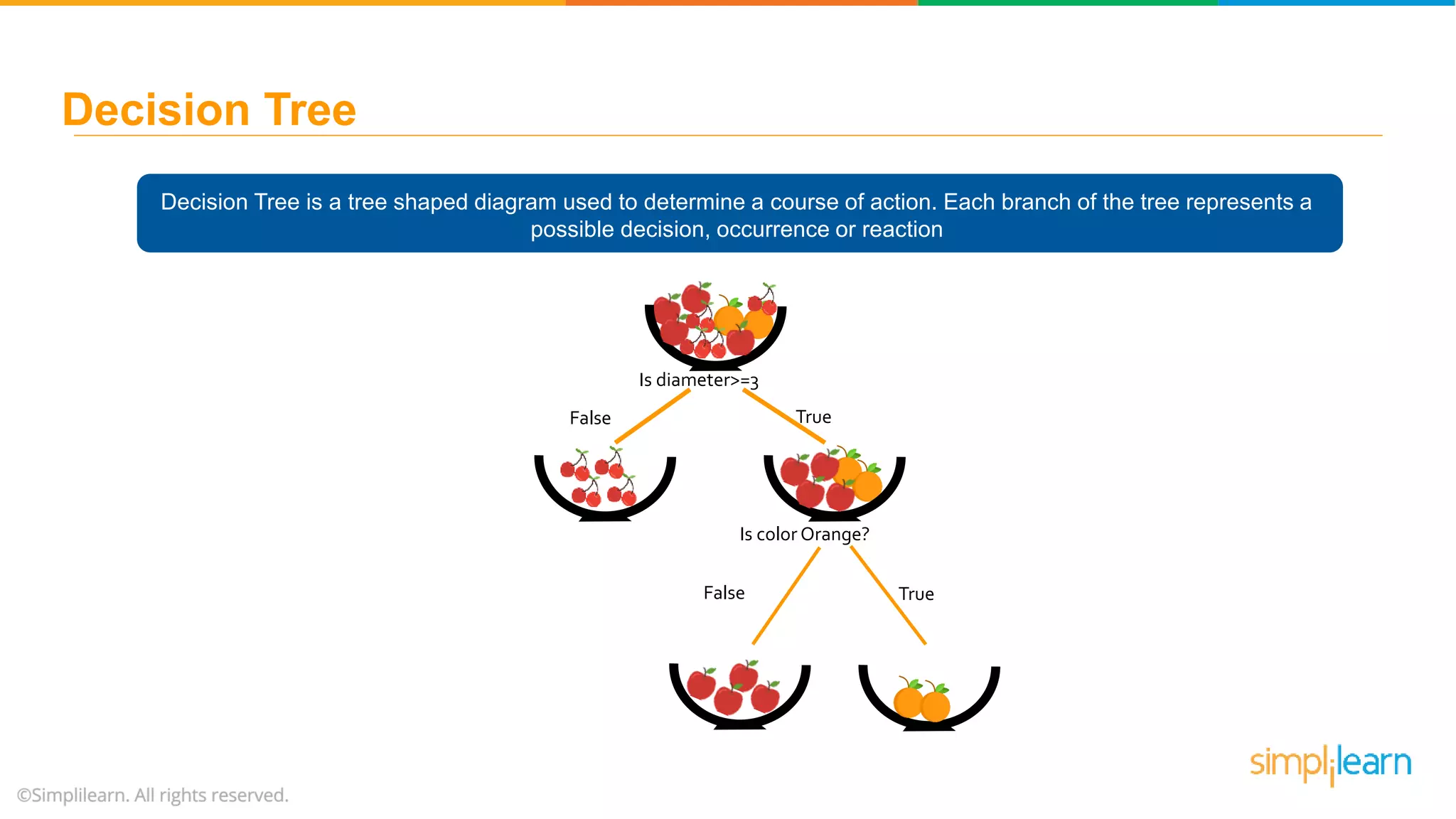

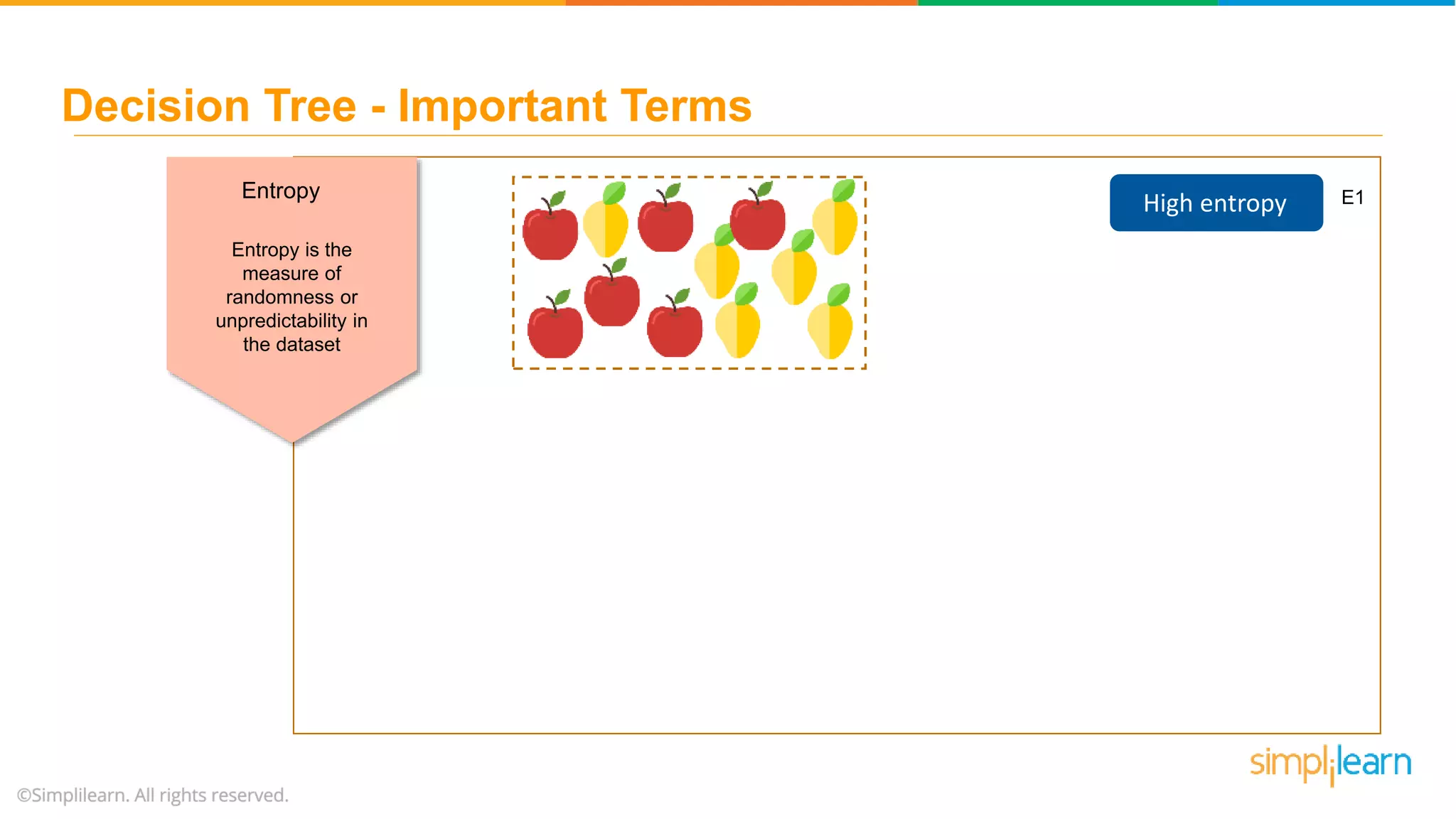

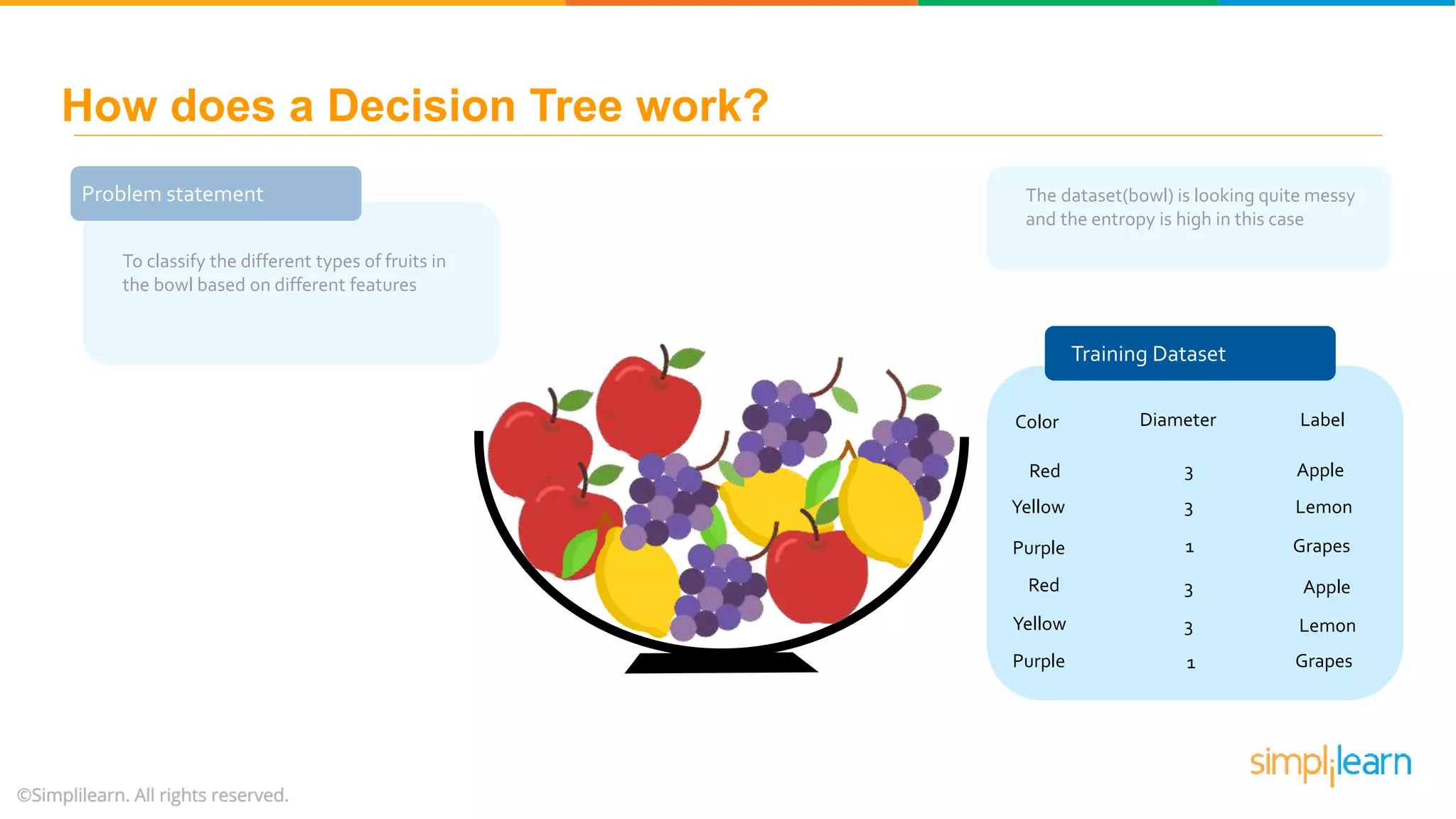

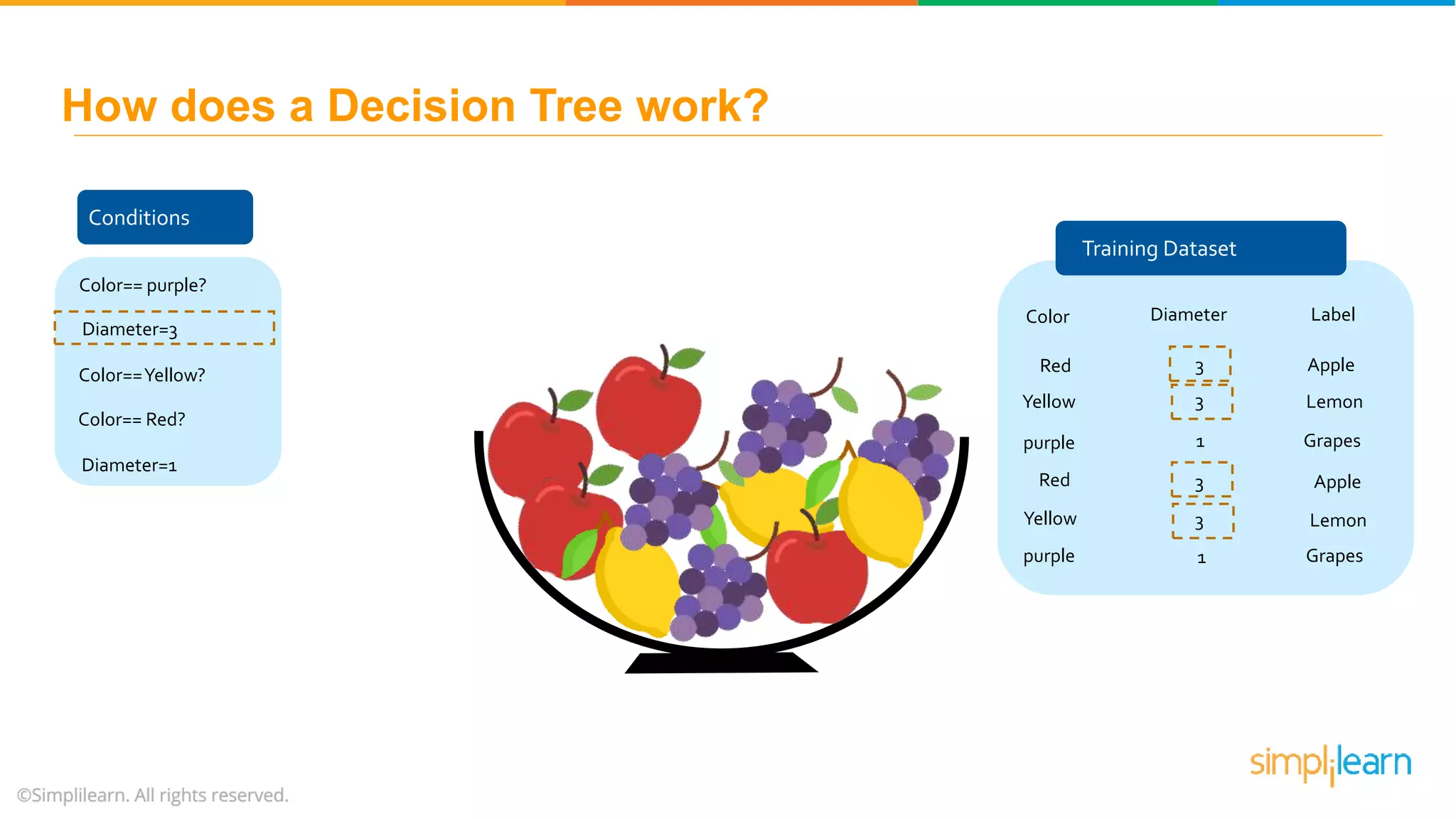

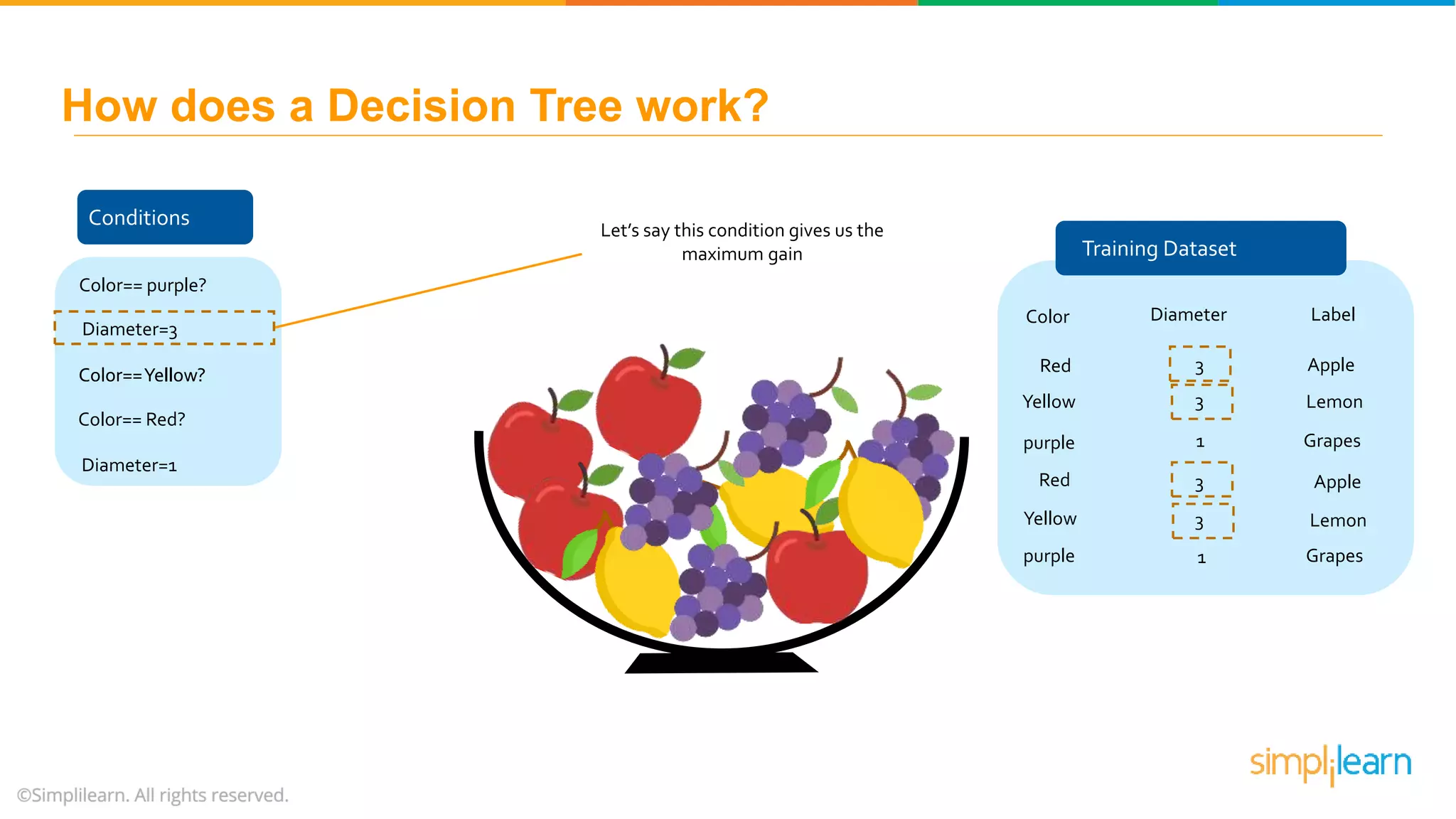

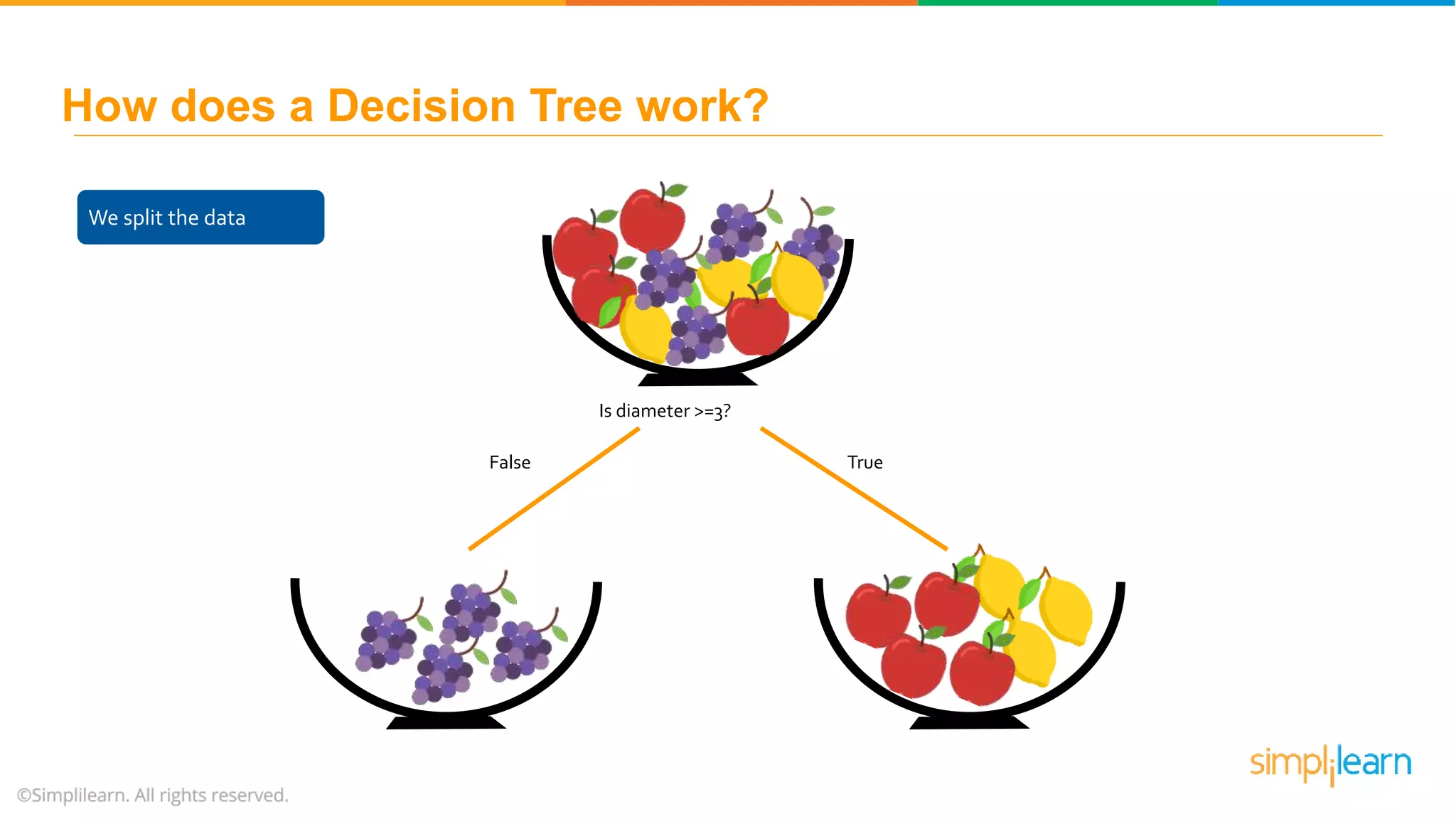

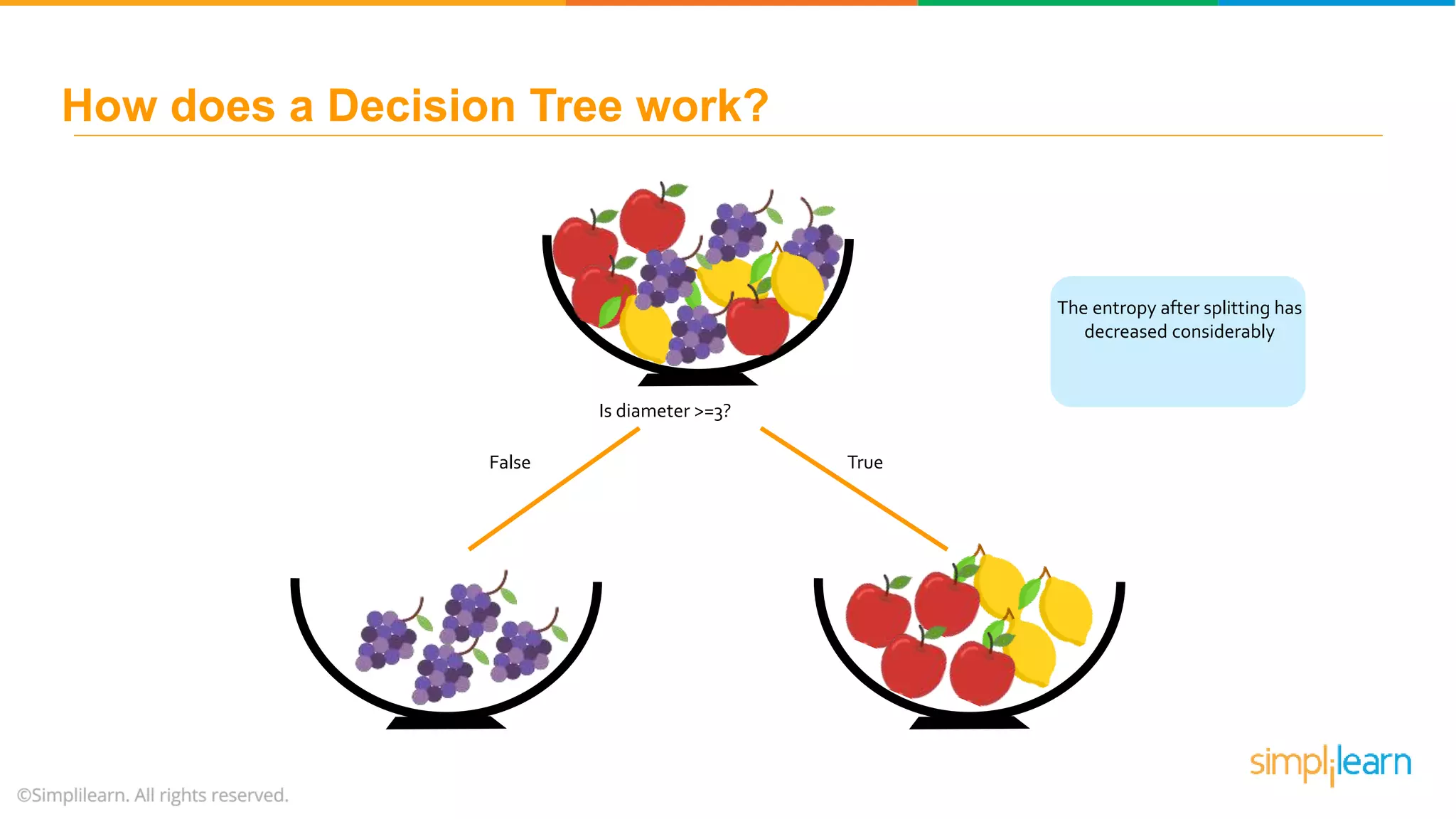

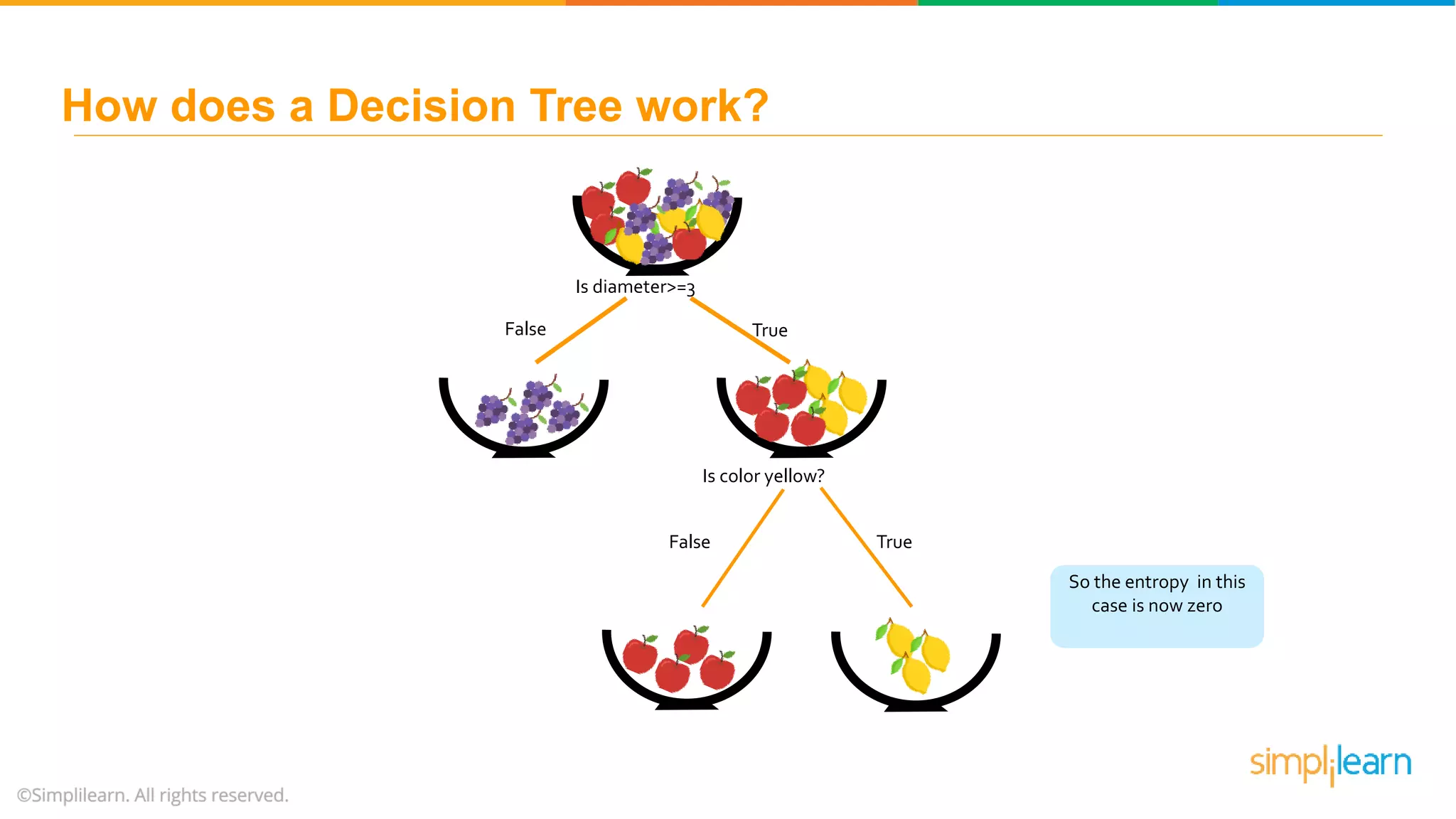

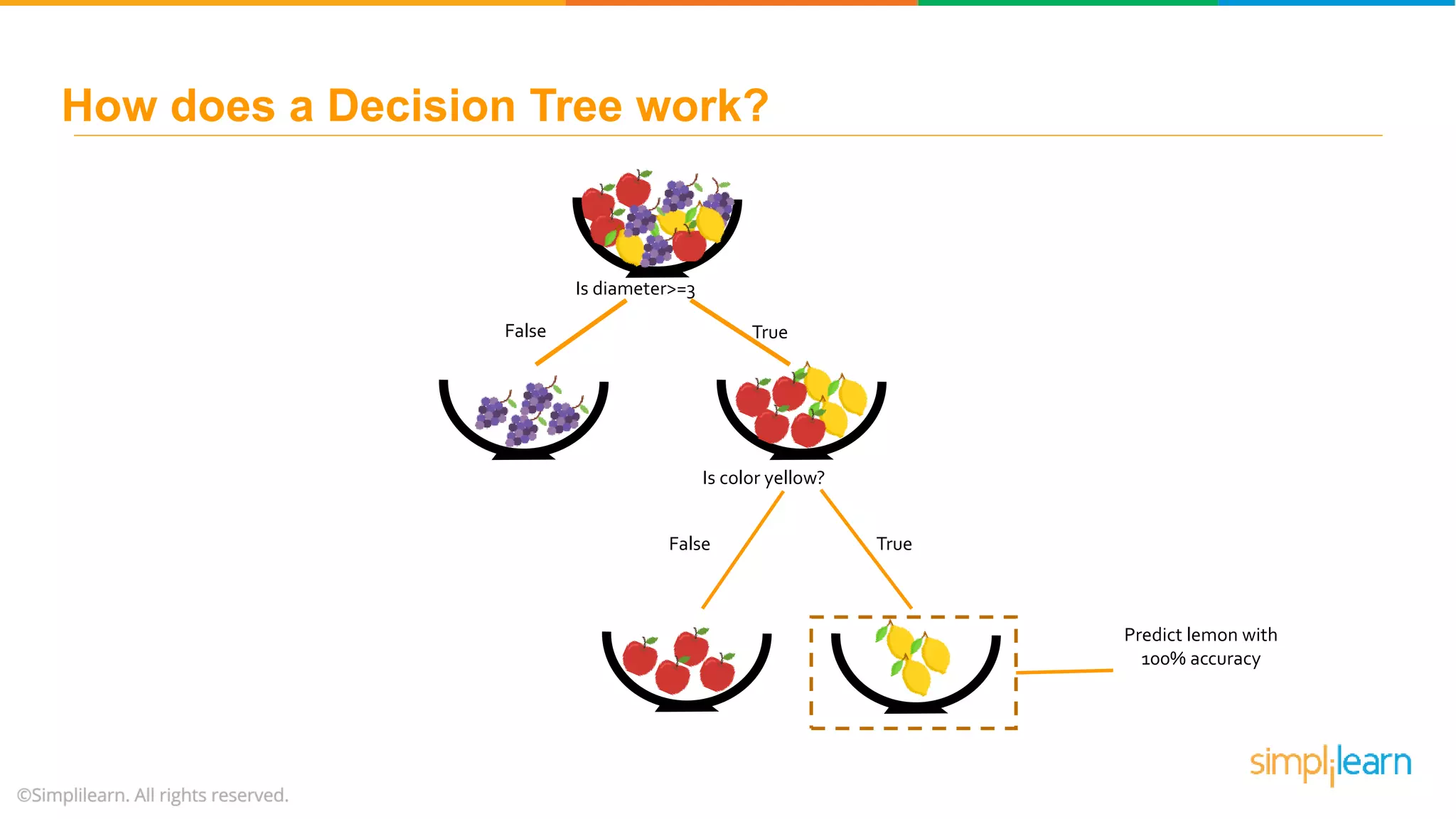

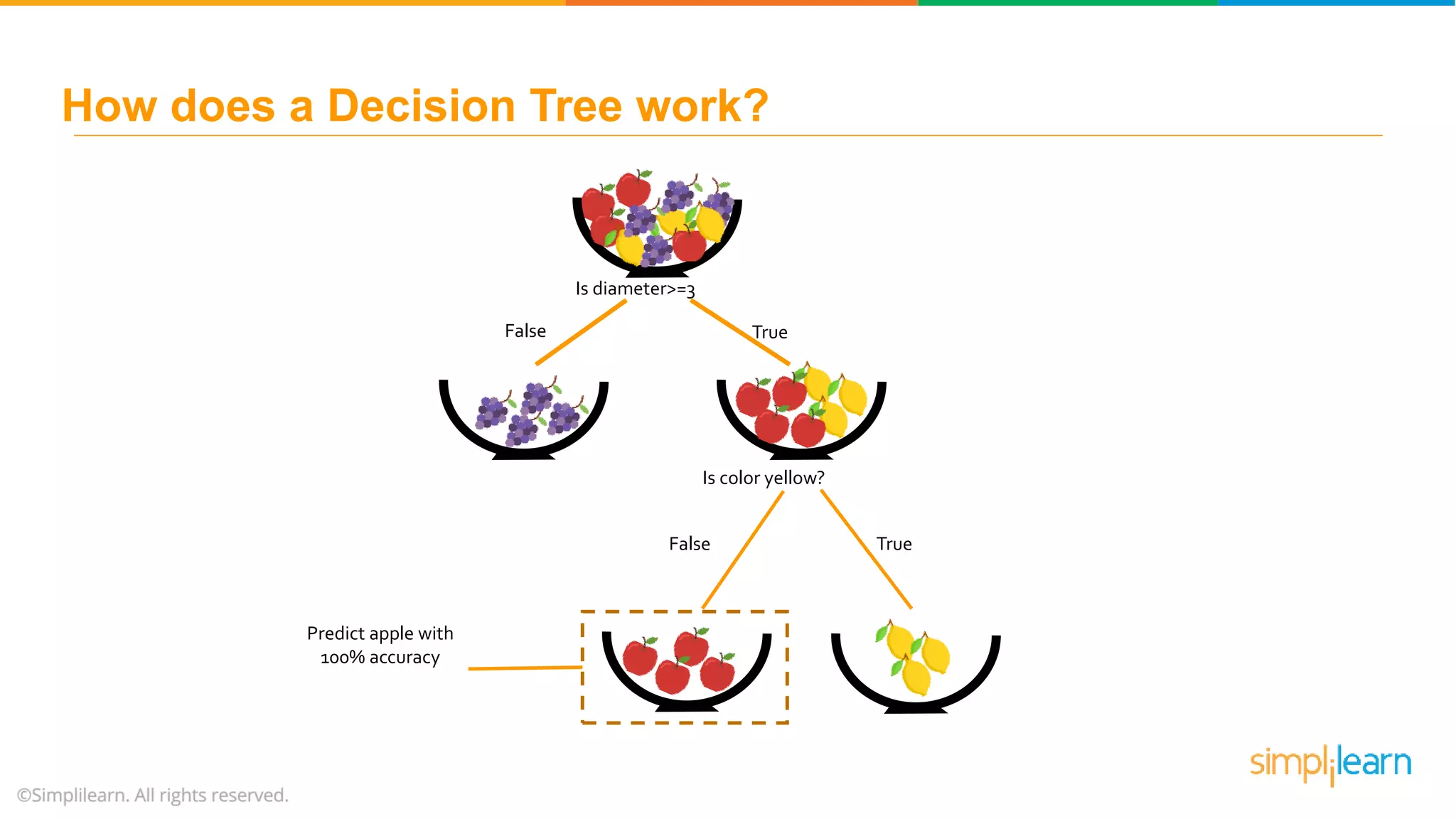

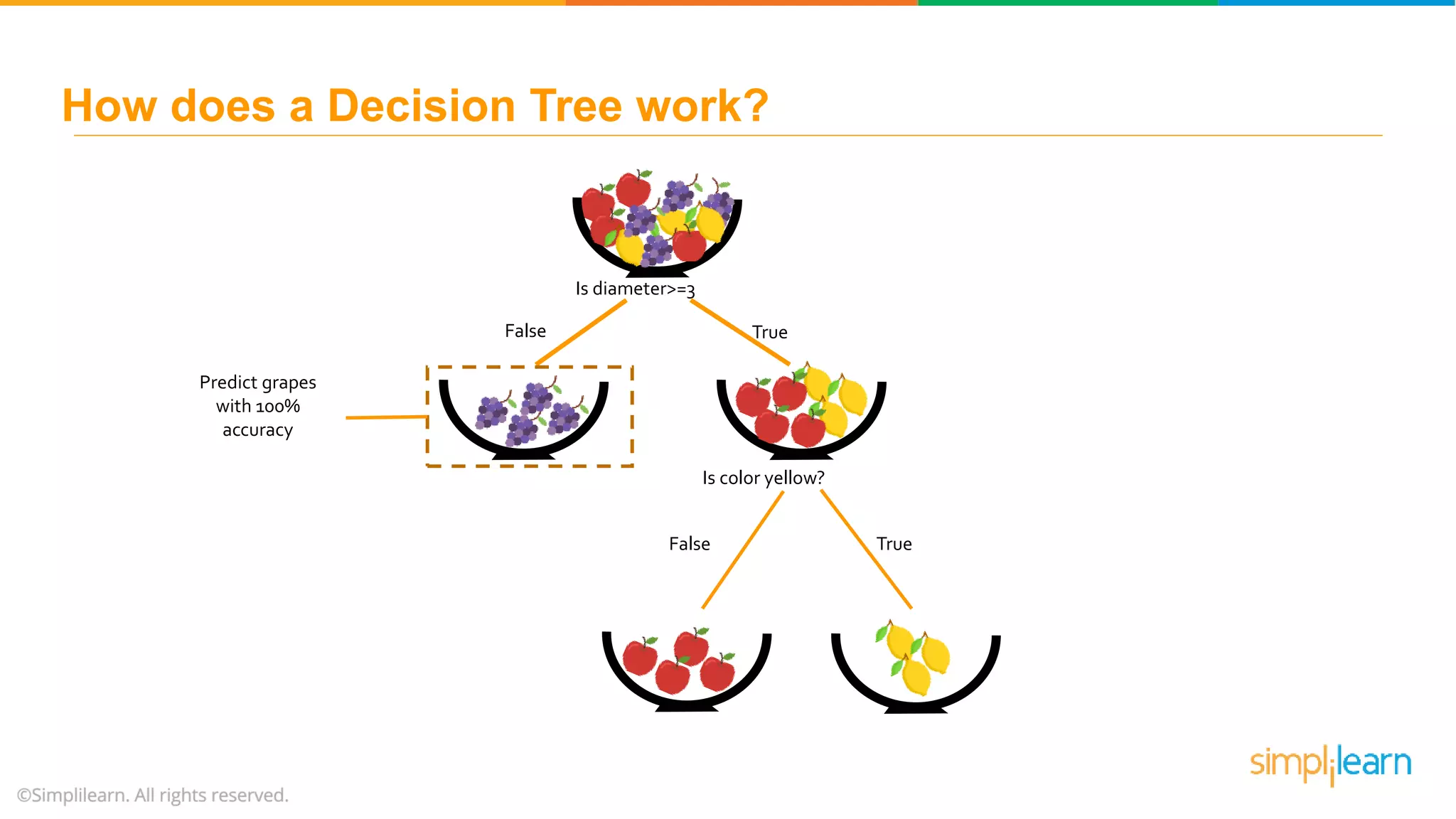

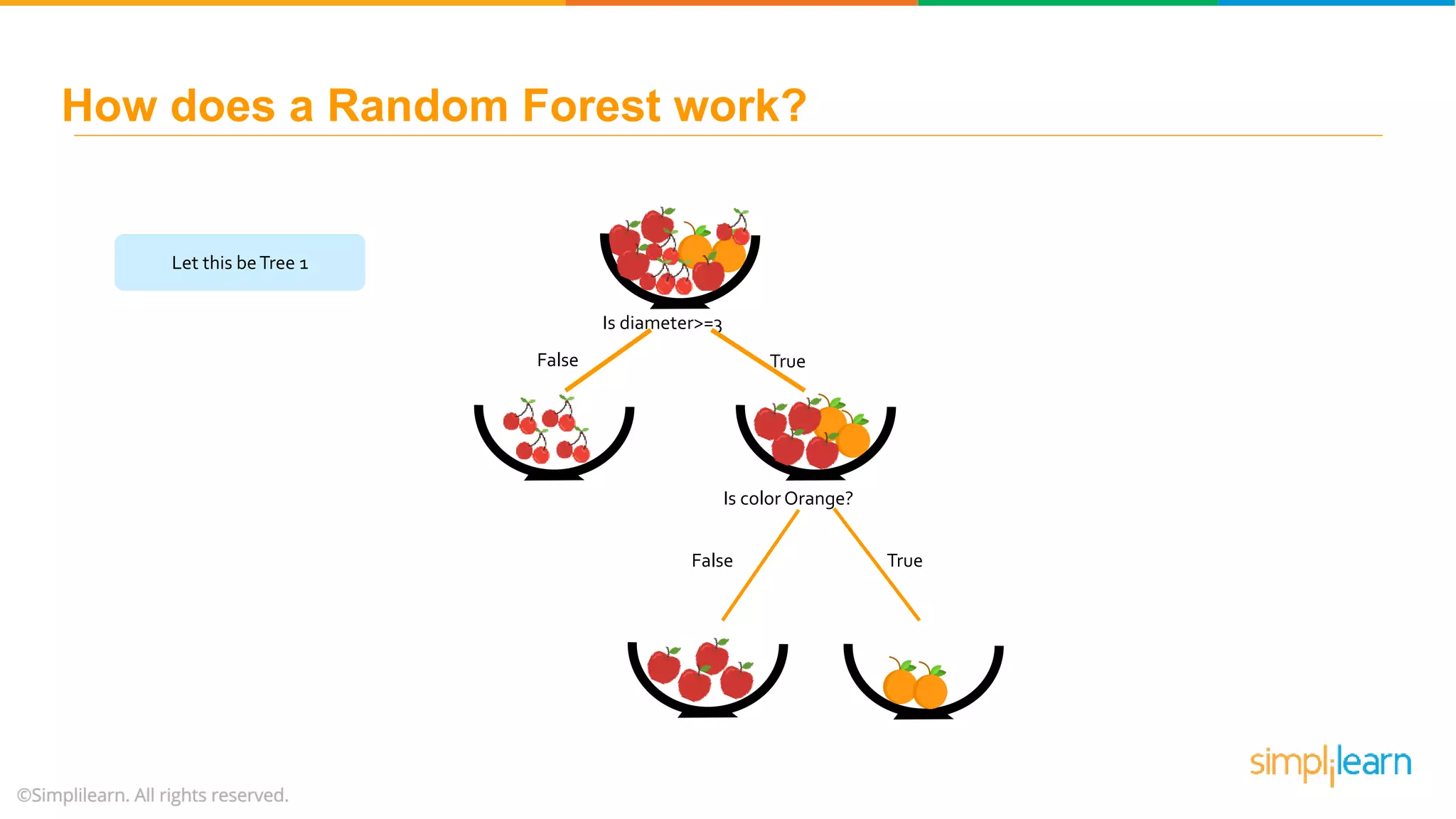

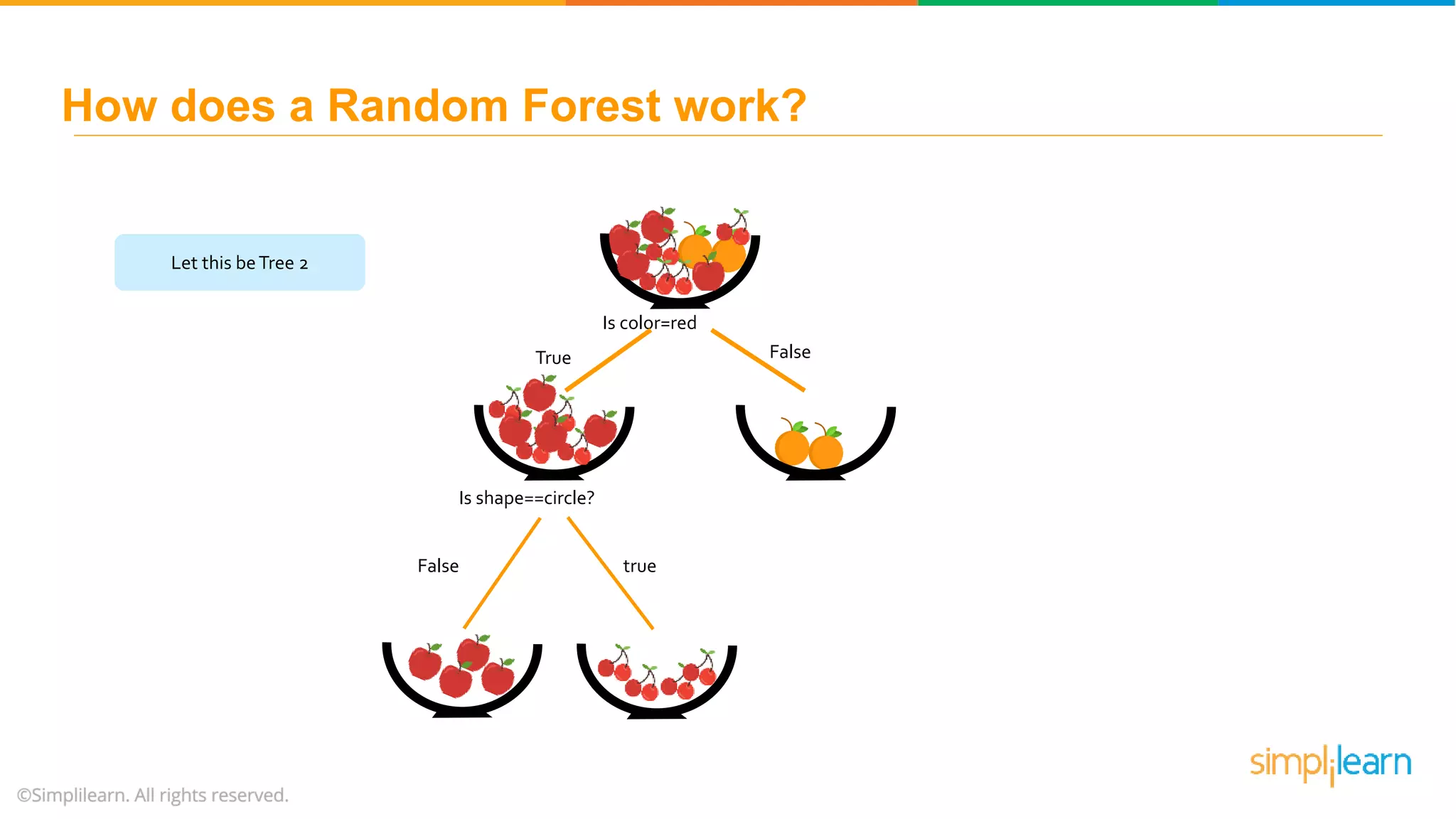

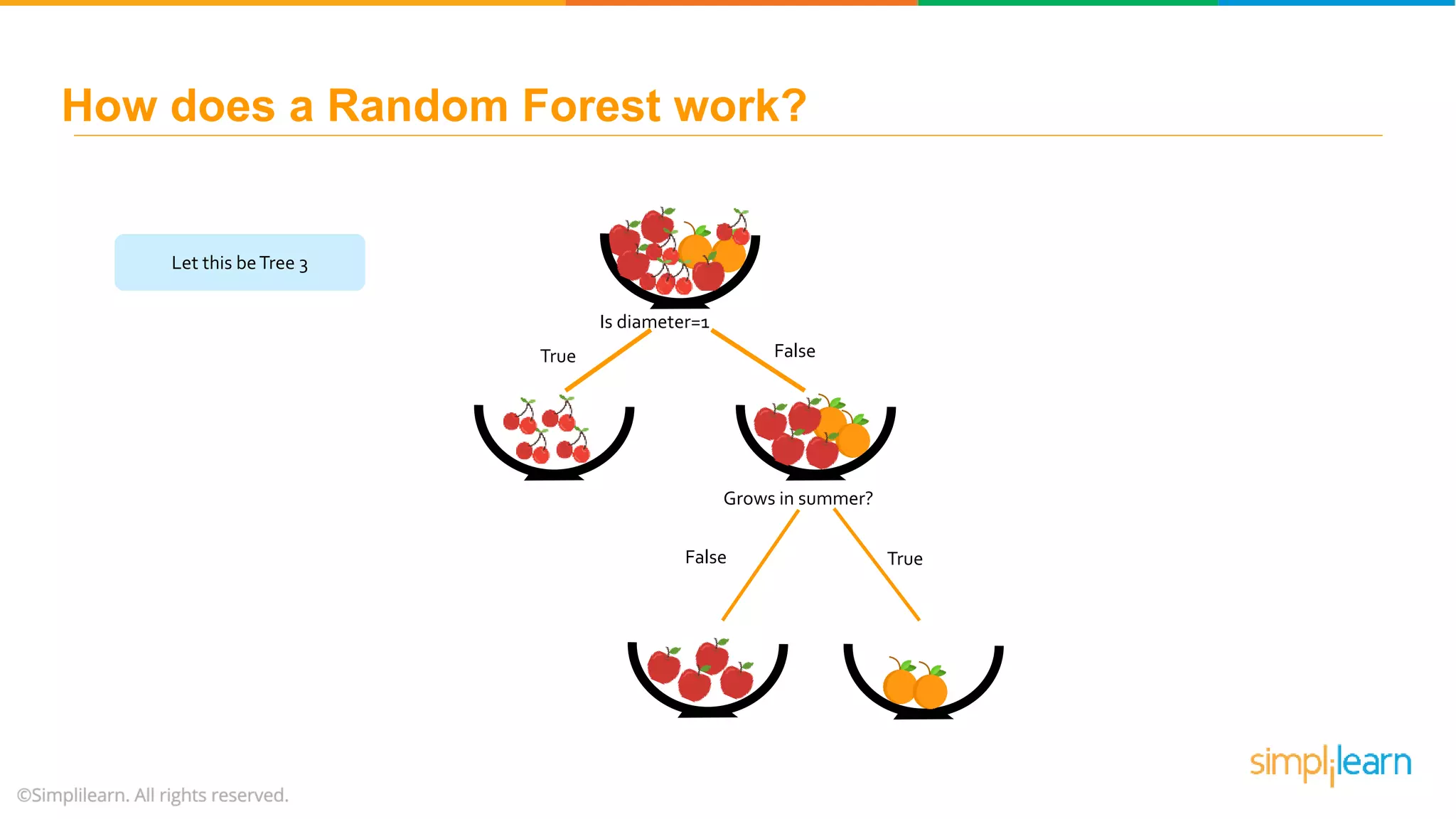

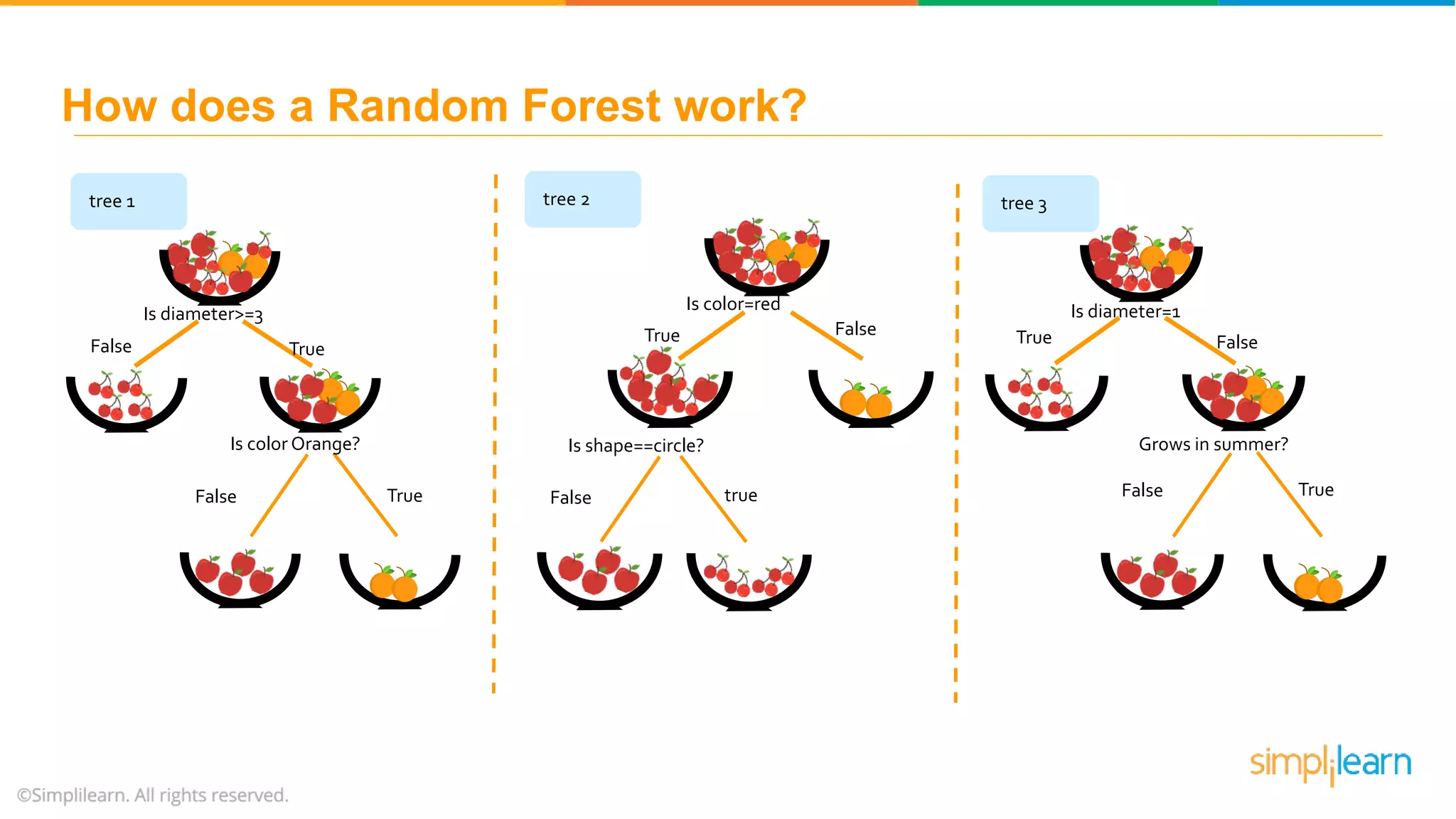

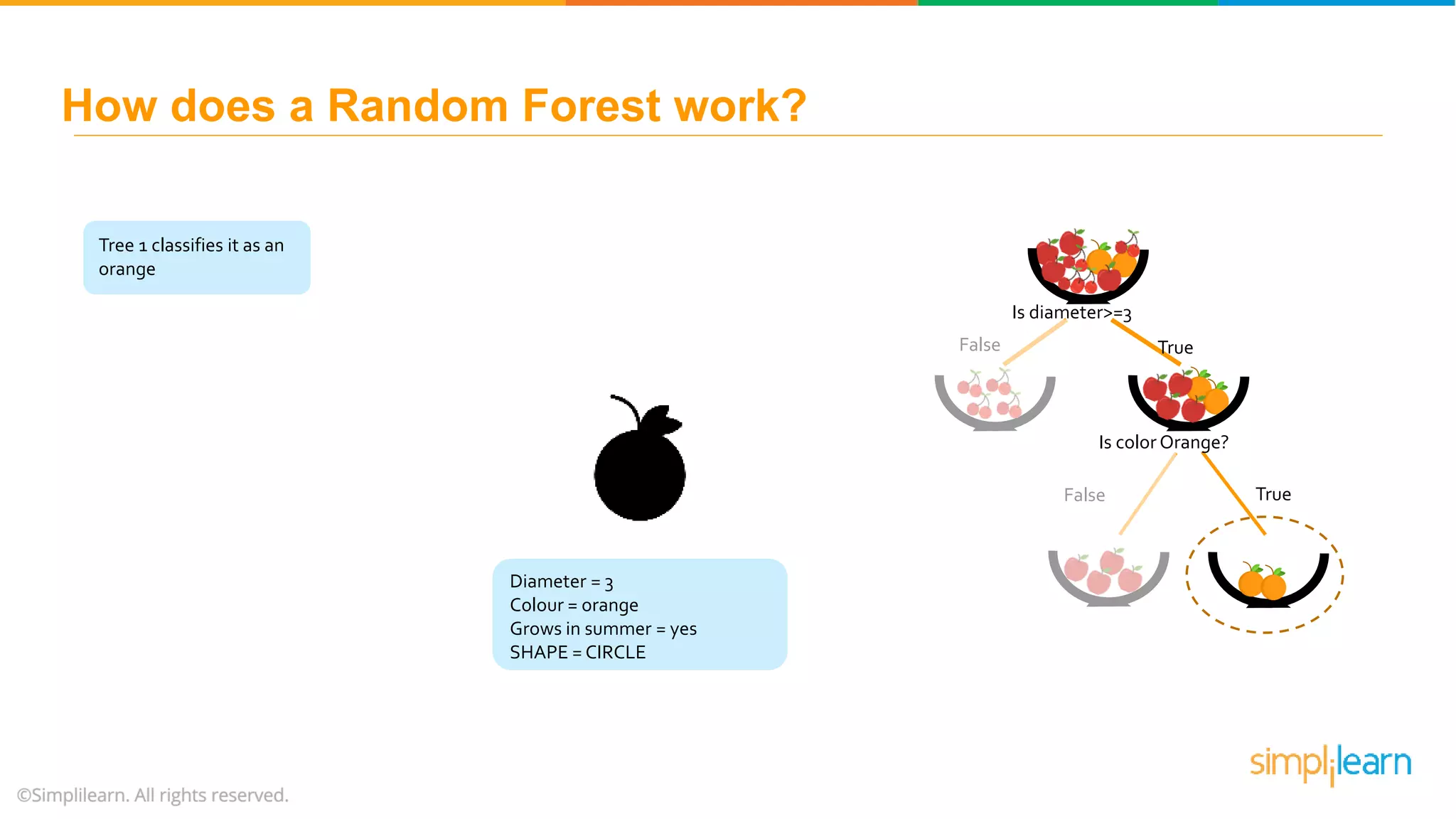

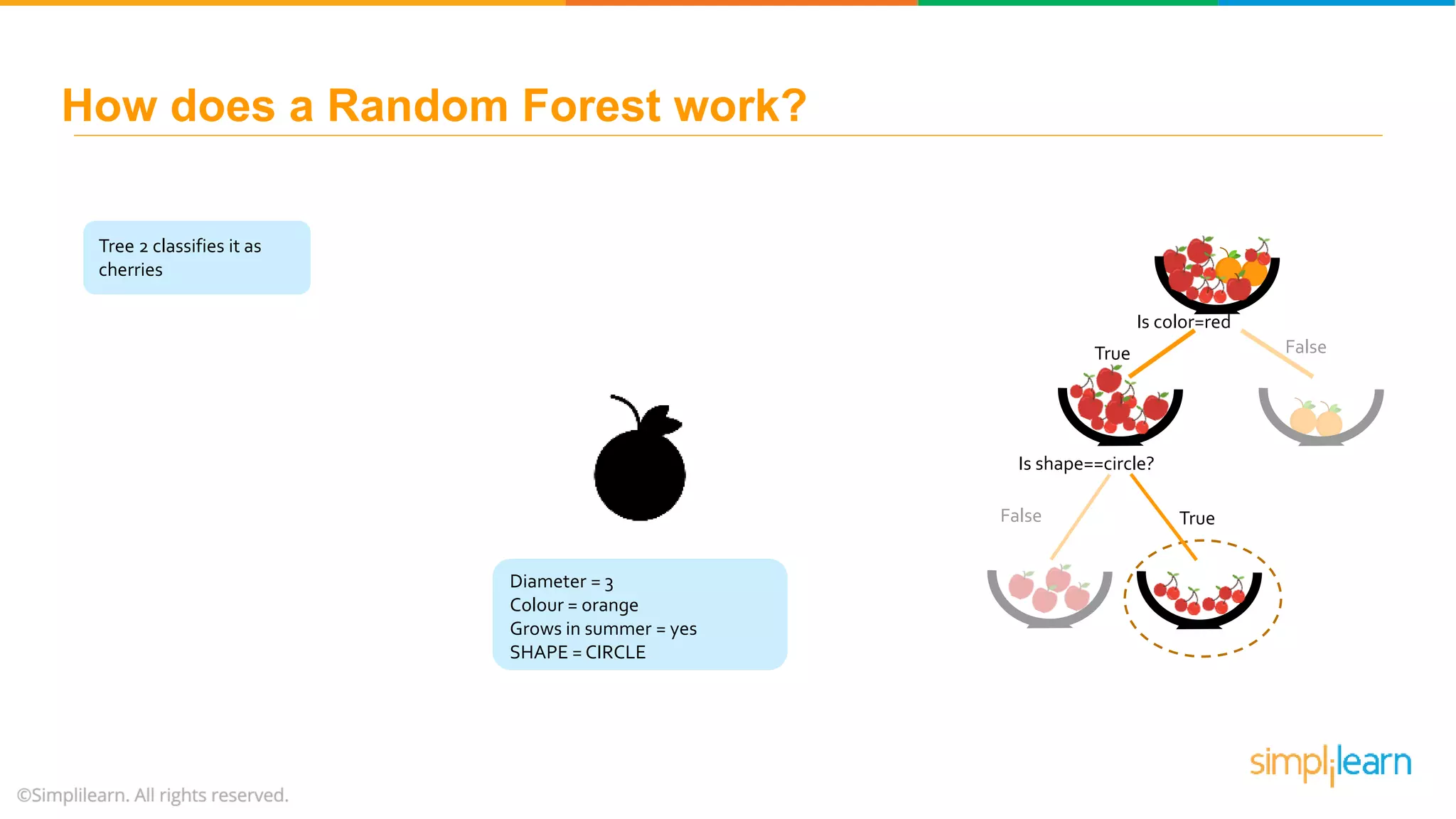

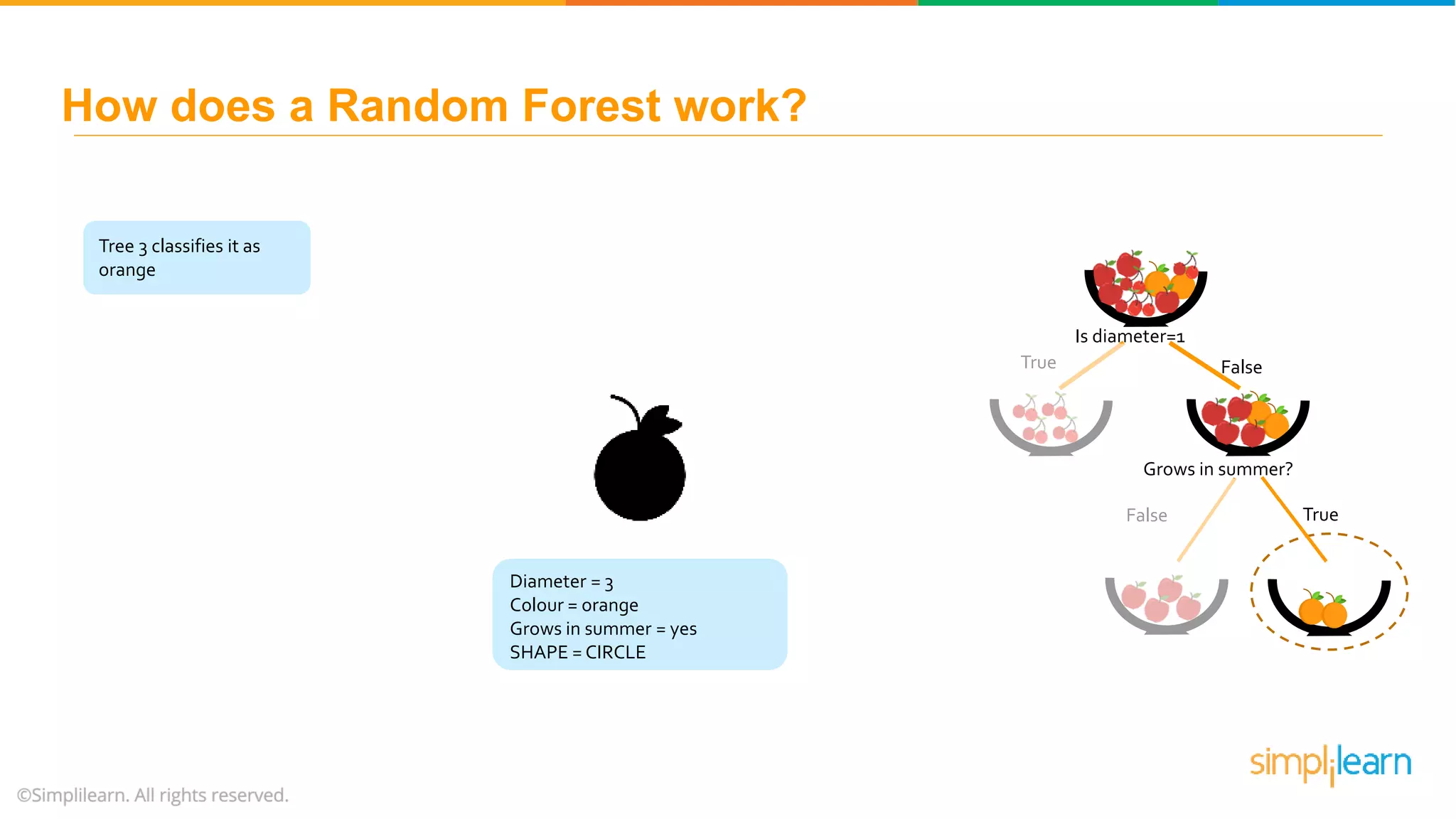

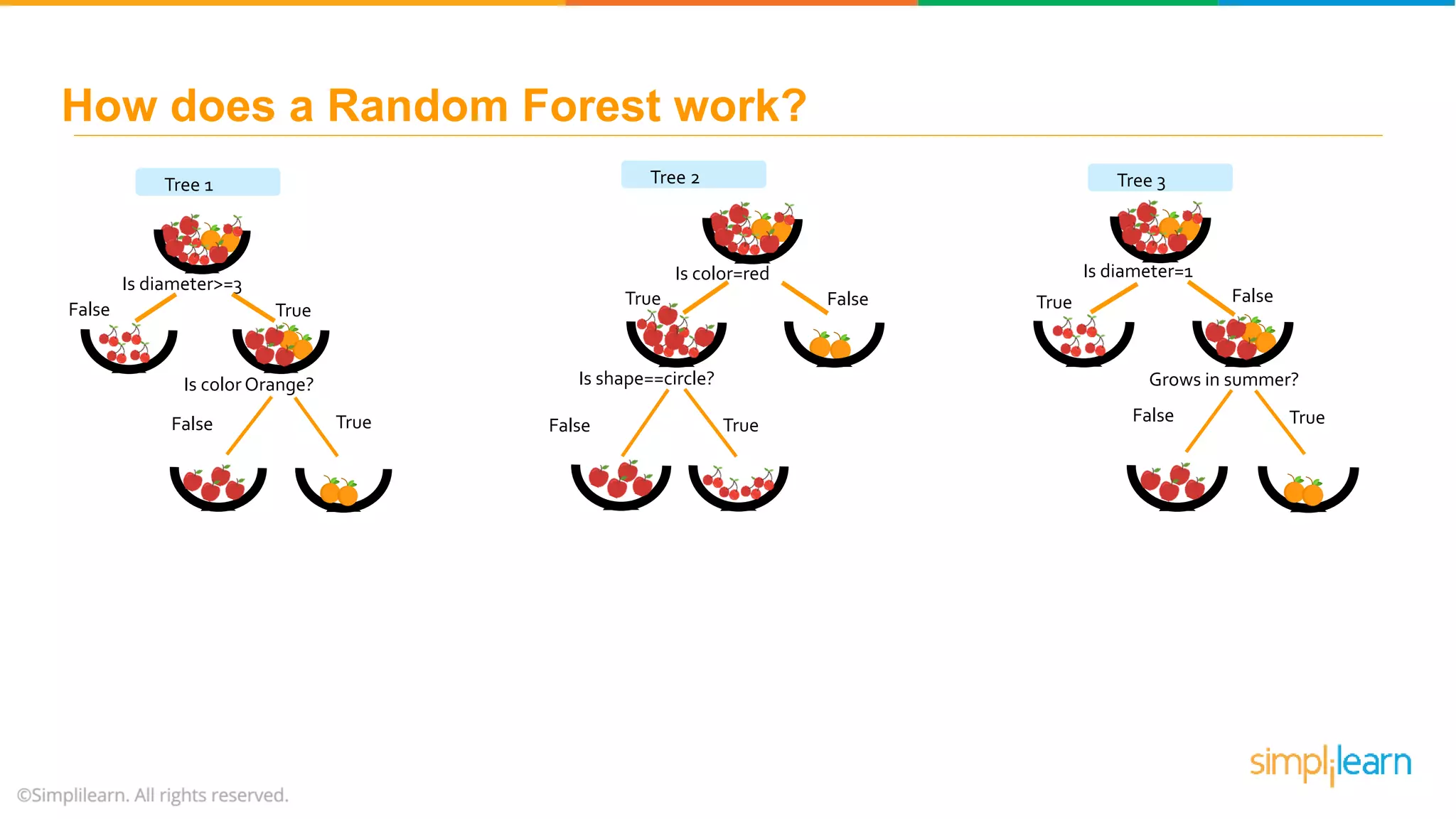

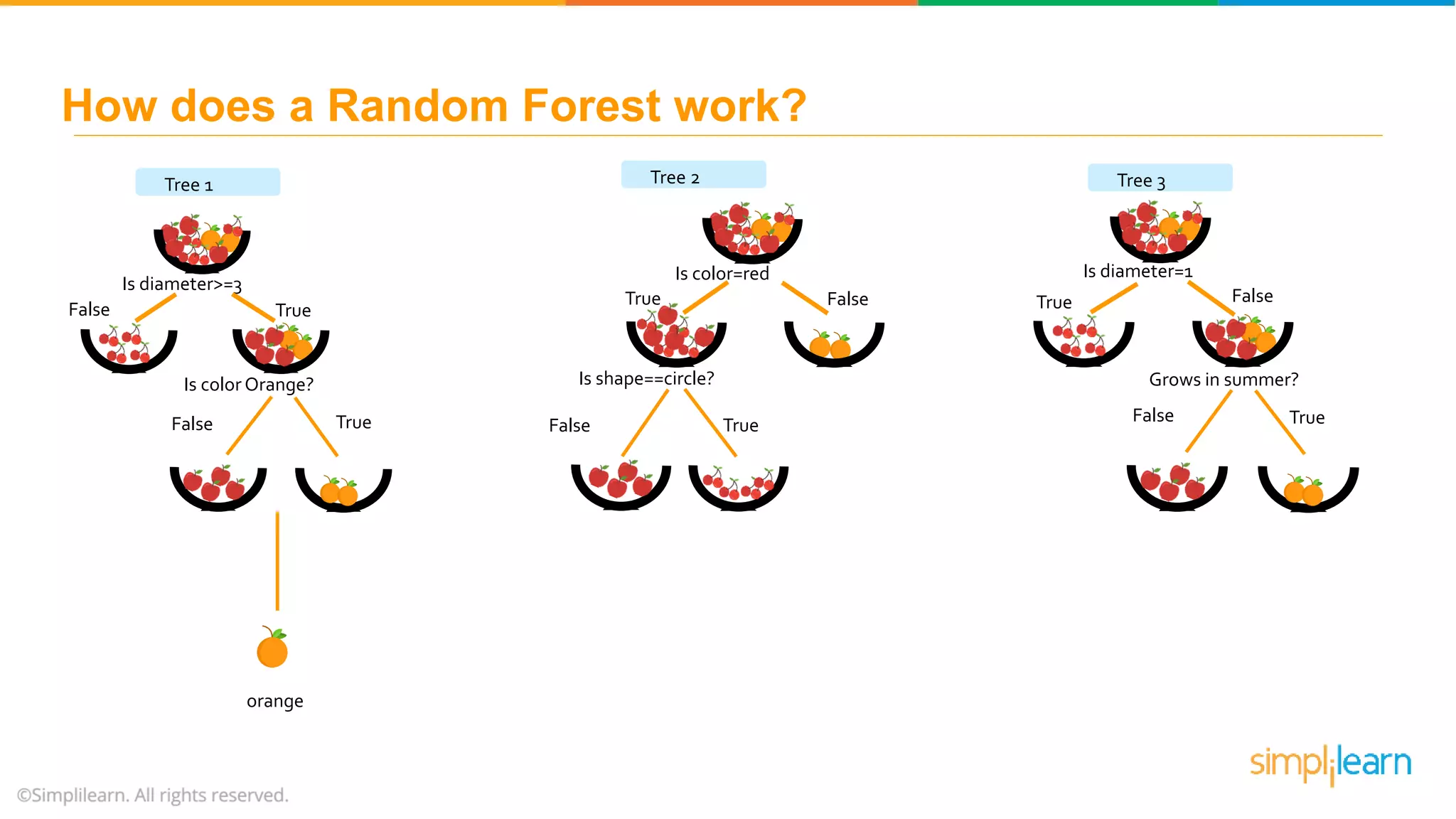

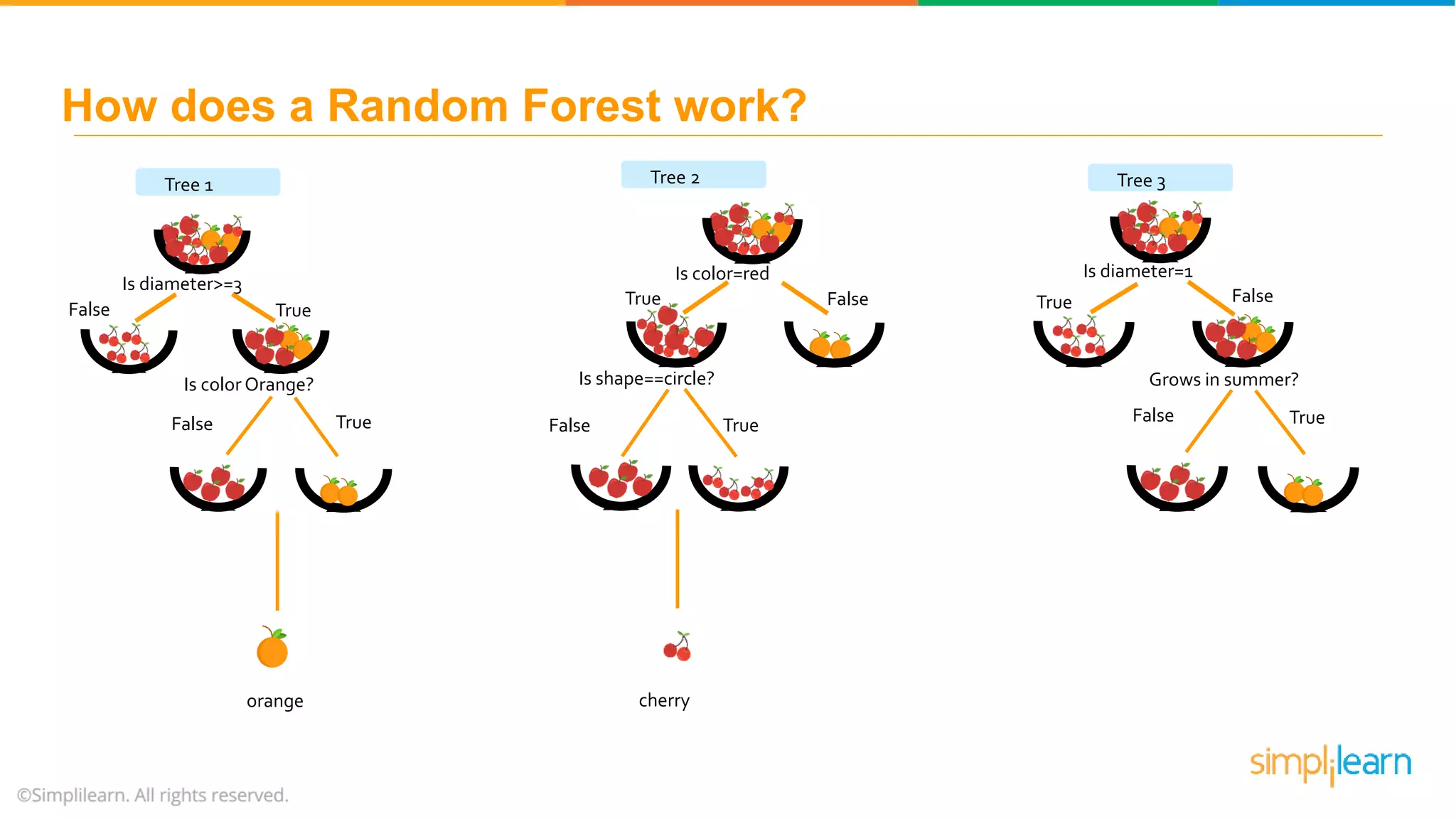

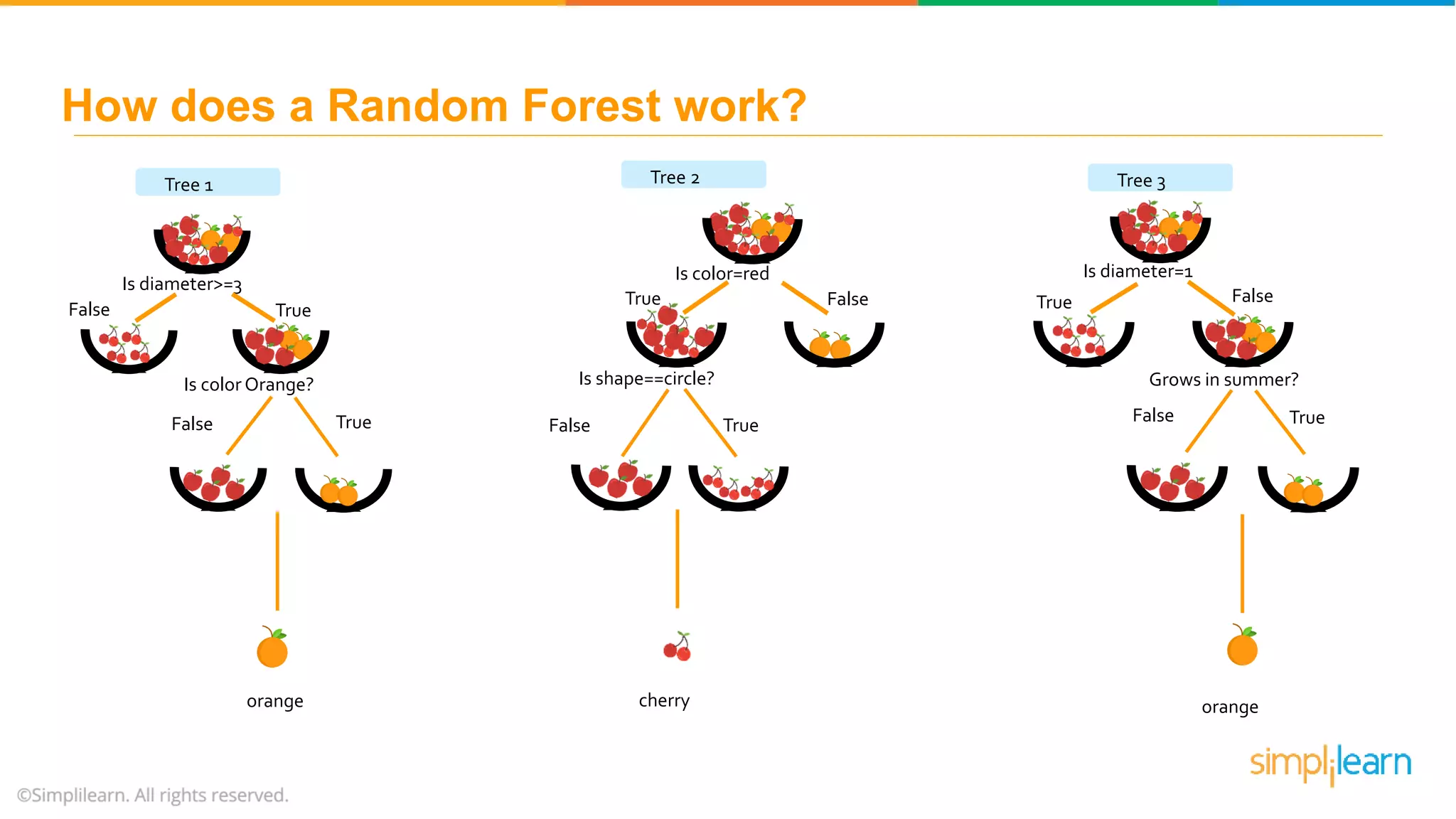

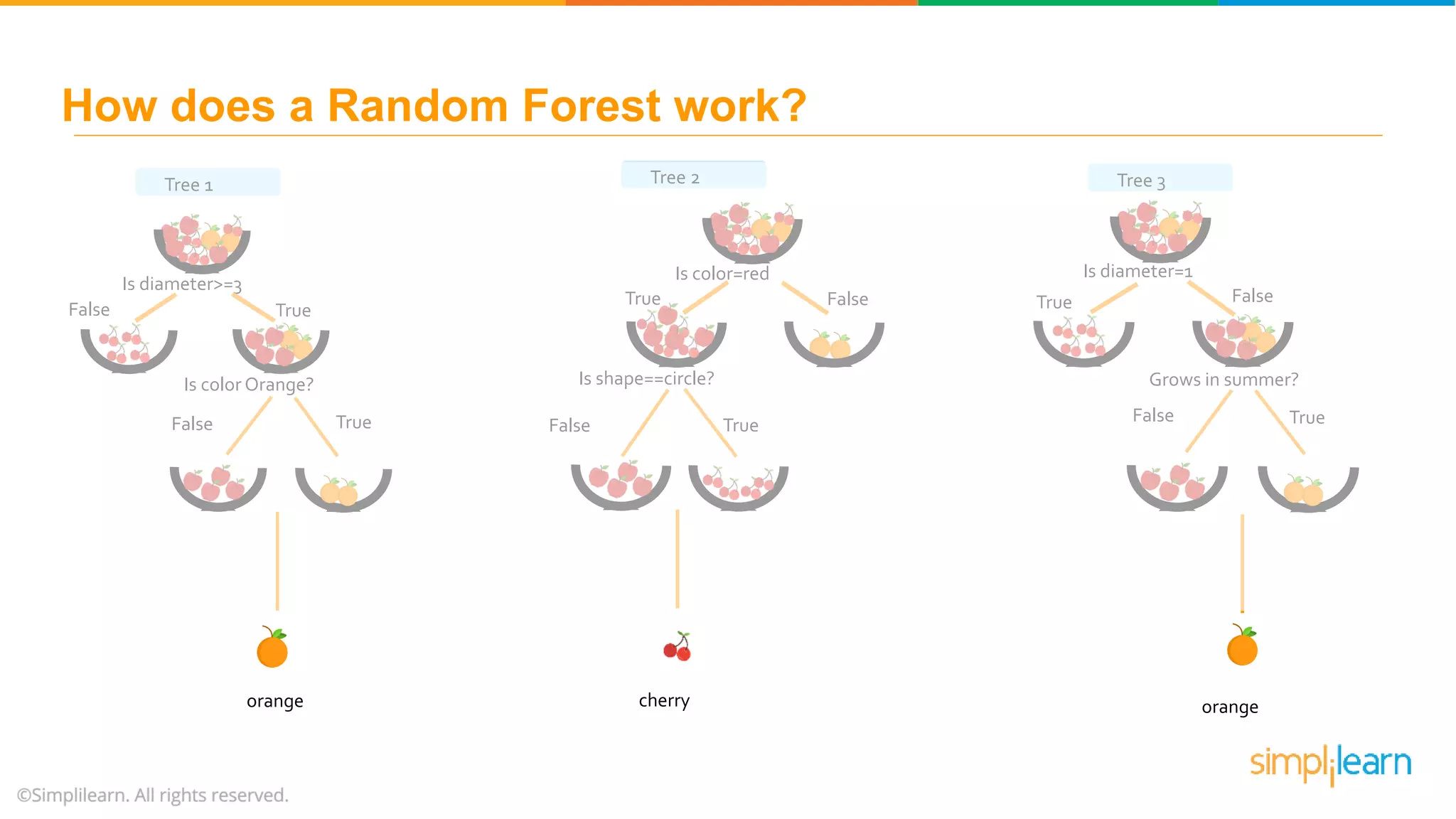

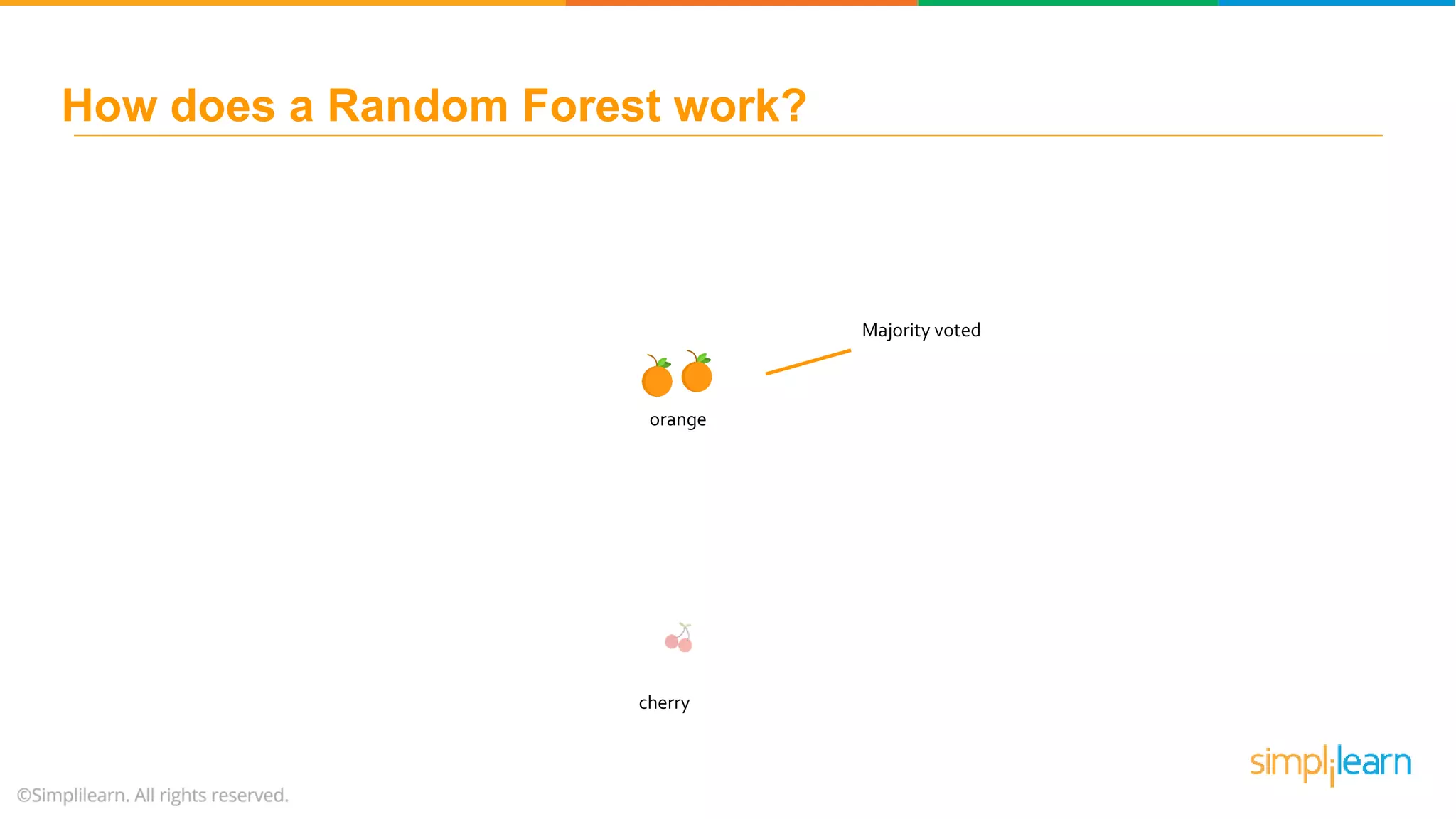

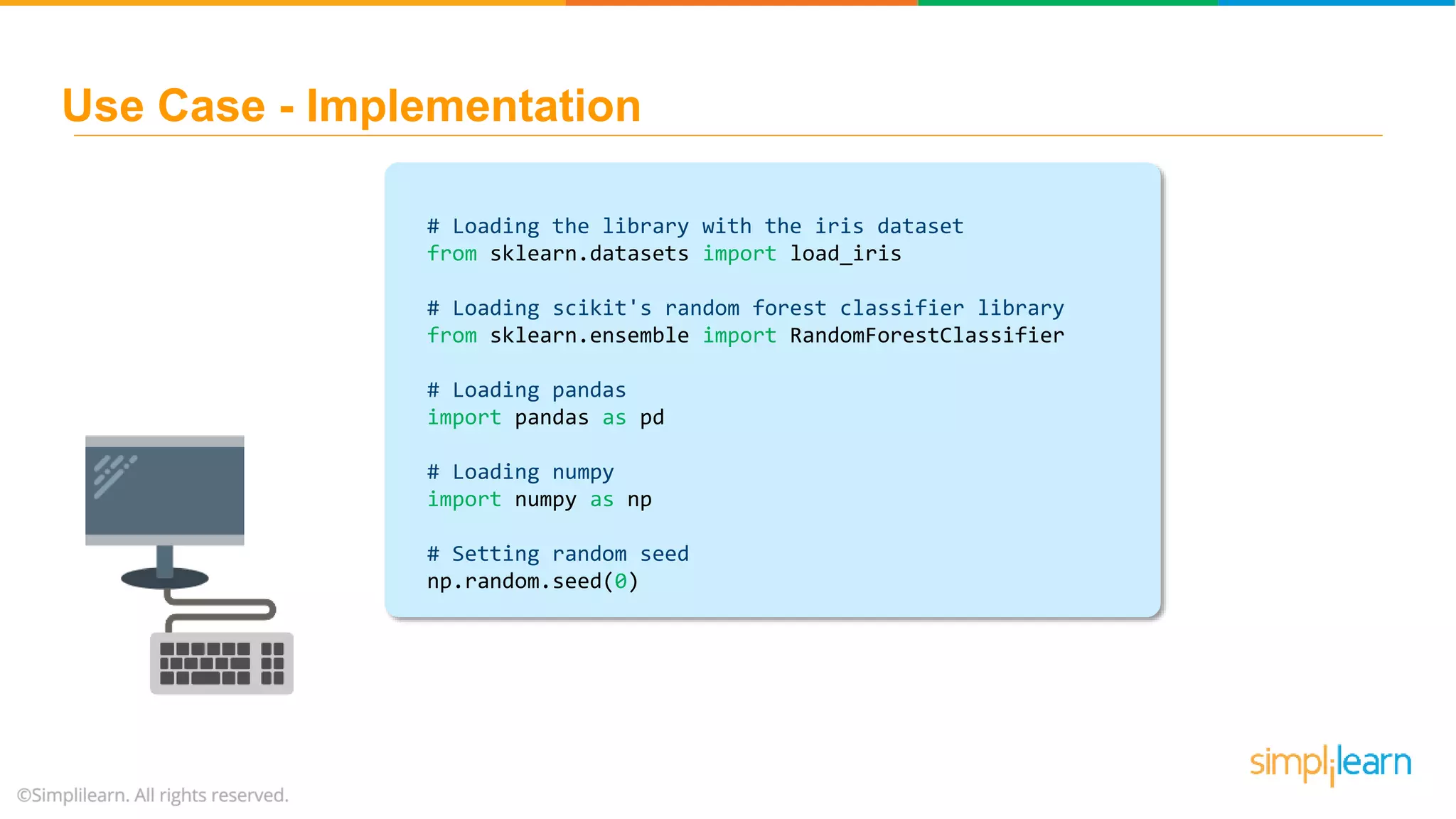

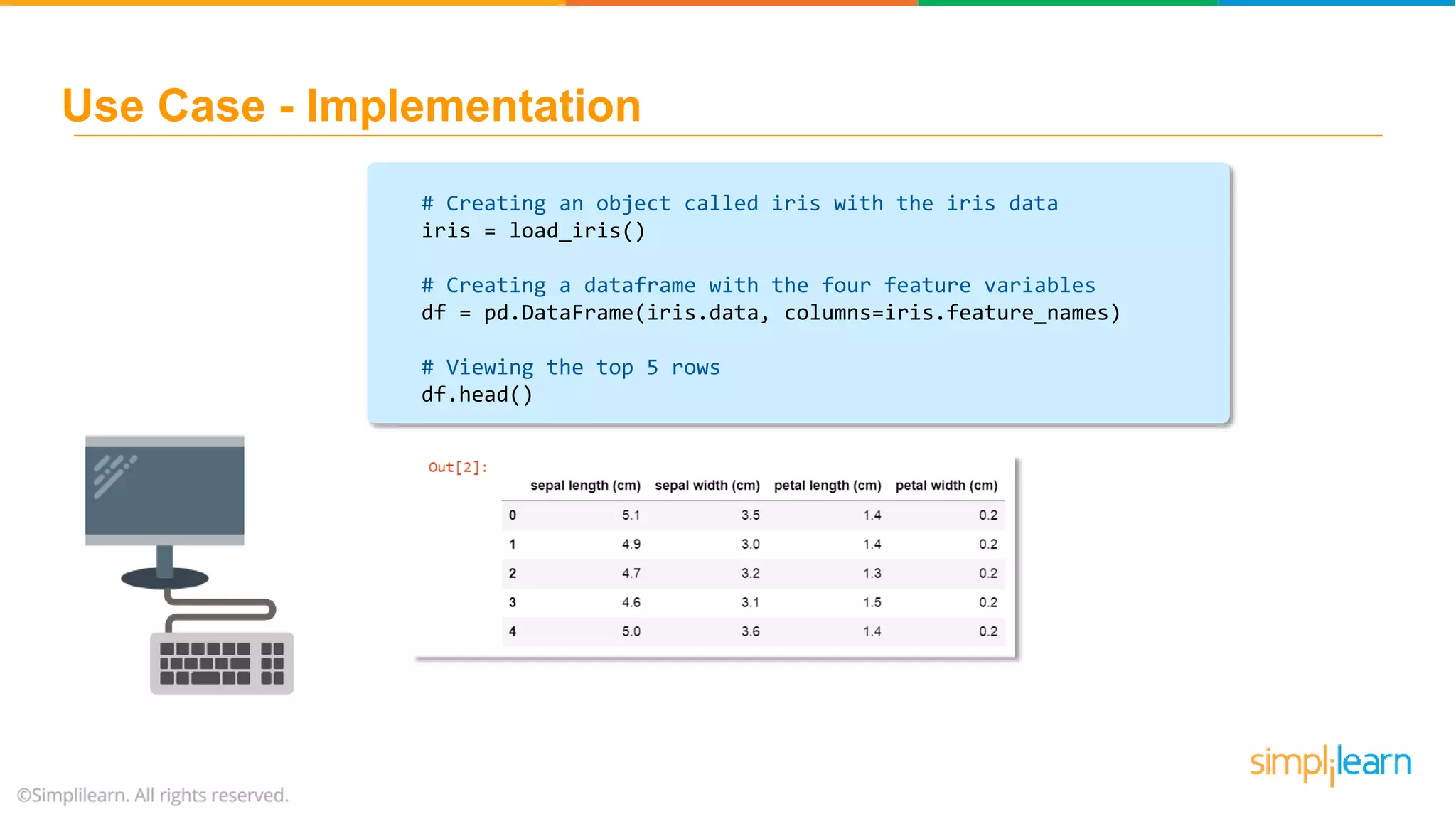

The document discusses machine learning applications of the Random Forest algorithm, particularly in remote sensing and object detection. It emphasizes the algorithm's efficiency and accuracy in settings such as Microsoft's Kinect motion-sensing device for the Xbox. It outlines how Random Forest works: it combines the predictions of multiple decision trees to improve accuracy and reduce overfitting. It also compares the method with a single decision tree. Finally, it walks through a detailed example that uses the Iris flower dataset to implement Random Forest for a classification task.
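The core idea of combining many decision trees can be sketched by hand. This is a minimal illustration, not scikit-learn's implementation. Each tree is trained on a bootstrap sample of the rows (drawn with replacement), and it considers a random subset of features at every split (`max_features="sqrt"`). The forest then takes a majority vote. scikit-learn's `RandomForestClassifier` actually averages the trees' predicted probabilities instead of counting hard votes. The tree count of 25, the seed, and the names `trees`, `votes`, and `forest_pred` are illustrative assumptions. A single unpruned tree is fitted alongside the forest for comparison.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
rng = np.random.default_rng(0)  # illustrative seed for repeatability

# Hold out roughly a quarter of the rows for evaluation
is_train = rng.uniform(0, 1, len(X)) <= 0.75
X_train, y_train = X[is_train], y[is_train]
X_test, y_test = X[~is_train], y[~is_train]

n_trees = 25  # illustrative forest size
trees = []
for i in range(n_trees):
    # Bootstrap sample: draw len(X_train) row indices with replacement
    idx = rng.integers(0, len(X_train), len(X_train))
    # max_features="sqrt" limits each split to a random subset of features
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# votes[t, j] is tree t's predicted class for test sample j
votes = np.stack([t.predict(X_test) for t in trees])
# Majority (hard) vote over the trees for each test sample
forest_pred = np.array([np.bincount(col, minlength=3).argmax() for col in votes.T])
forest_acc = (forest_pred == y_test).mean()

# A single tree trained on all training rows, for comparison
single = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
single_acc = (single.predict(X_test) == y_test).mean()

print(f"Hand-rolled forest accuracy: {forest_acc:.2f}")
print(f"Single decision tree accuracy: {single_acc:.2f}")
```

On a dataset as small and clean as Iris, the two scores can be close. The benefit of averaging many decorrelated trees shows up mainly as lower variance on noisier data.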

![# Adding a new column for the species name df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names) # Viewing the top 5 rows df.head() Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-95-2048.jpg)
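The step on this slide can be run on its own. This assumes that `iris` comes from `sklearn.datasets.load_iris()` and that `df` holds its four feature columns, as the slide's use of `iris.target` implies. `pd.Categorical.from_codes` turns the integer targets into named categories.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Load the bundled Iris data: 150 rows, 4 numeric features, integer targets 0-2
iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)

# from_codes maps each integer target (0, 1, 2) to the name at that
# position in iris.target_names ('setosa', 'versicolor', 'virginica')
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)

# Show the first five rows
print(df.head())
```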

![# Creating Test and Train Data df['is_train'] = np.random.uniform(0, 1, len(df)) <= .75 # View the top 5 rows df.head() Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-96-2048.jpg)
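The slide's `np.random.uniform` call has no seed, so every run produces a different split. Each row also lands in the training set independently with probability 0.75, so the training share is only about 75%. The sketch below uses a seeded generator instead. The seed value and the name `rng` are illustrative assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)

# Seeded generator (illustrative seed) so the split is reproducible
rng = np.random.default_rng(0)

# True where the uniform draw is <= 0.75, i.e. roughly 3 of every 4 rows
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
print(df.head())
print('Training share:', df['is_train'].mean())
```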

![# Creating dataframes with test rows and training rows train, test = df[df['is_train']==True], df[df['is_train']==False] # Show the number of observations for the test and training dataframes print('Number of observations in the training data:', len(train)) print('Number of observations in the test data:',len(test)) Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-97-2048.jpg)
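The split can be written more idiomatically by indexing with the boolean column directly and negating it with `~`. Comparing it to `True` and `False` is unnecessary. The sketch repeats the seeded setup from the previous step, which is an assumption rather than the slide's exact code.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75

# Boolean masks select rows directly; ~ flips True/False
train, test = df[df['is_train']], df[~df['is_train']]

print('Number of observations in the training data:', len(train))
print('Number of observations in the test data:', len(test))
```

If an exact split size is needed, `sklearn.model_selection.train_test_split` with `test_size=0.25` is a common alternative.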

![# Create a list of the feature column's names features = df.columns[:4] # View features features Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-98-2048.jpg)
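Selecting the features by position works because the four measurement columns come first in `df`. The added `species` and `is_train` columns come after them. The sketch below shows which names the slice picks up, under the same `load_iris()` assumption.

```python
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)

# First four column labels: the sepal/petal length and width measurements
features = df.columns[:4]
print(features)
```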

![# Converting each species name into digits y = pd.factorize(train['species'])[0] # Viewing target y Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-99-2048.jpg)
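One subtlety with `pd.factorize` is that it numbers labels in order of first appearance, not in the order of `iris.target_names`. A later slide maps predictions back with `iris.target_names[...]`, and that mapping only works if the two orders agree. The sketch compares `pd.factorize` with the categorical's own codes, which always follow `target_names`. `y_codes` is an illustrative name.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train = df[df['is_train']]

# pd.factorize: codes assigned in order of first appearance in train
y = pd.factorize(train['species'])[0]

# Category codes (illustrative name y_codes): 0/1/2 follow iris.target_names
y_codes = train['species'].cat.codes.to_numpy()

# Iris rows are sorted by species, so the two encodings agree here
print(y[:5], bool((y == y_codes).all()))
```

On data that is not sorted by class, `train['species'].cat.codes` is the safer choice.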

![# Creating a random forest Classifier. clf = RandomForestClassifier(n_jobs=2, random_state=0) # Training the classifier clf.fit(train[features], y) Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-100-2048.jpg)
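Below is a self-contained version of the training step. It uses the category codes as targets in place of the slide's `pd.factorize`, which is an assumption chosen so that the codes line up with `iris.target_names`. `n_jobs=2` fits trees on two CPU cores in parallel, and `random_state=0` fixes the bootstrap samples and feature subsets. `n_estimators` is left at its default, which is 100 trees in current scikit-learn releases (older releases defaulted to 10).

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train = df[df['is_train']]
features = df.columns[:4]
y = train['species'].cat.codes.to_numpy()  # 0/1/2 in target_names order

# Two parallel jobs; fixed random_state for repeatable results
clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], y)
print(clf)
```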

![# Applying the trained Classifier to the test clf.predict(test[features]) Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-101-2048.jpg)
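Applying the fitted forest to the held-out rows returns one class code per test row. The sketch repeats the assumed setup so that it runs on its own. `pred_codes` is an illustrative name.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train, test = df[df['is_train']], df[~df['is_train']]
features = df.columns[:4]

clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], train['species'].cat.codes.to_numpy())

# One integer class code (0, 1 or 2) per test row
pred_codes = clf.predict(test[features])  # illustrative name
print(pred_codes)
```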

![# Viewing the predicted probabilities of the first 10 observations clf.predict_proba(test[features])[0:10] Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-102-2048.jpg)
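`predict_proba` returns one probability per class for each row, and its columns follow `clf.classes_`. In scikit-learn, a forest's probabilities are the mean of its trees' probability estimates. `predict` then picks the most probable class in each row, and the sketch checks that relationship. The setup is the same assumed pipeline as before.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train, test = df[df['is_train']], df[~df['is_train']]
features = df.columns[:4]
clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], train['species'].cat.codes.to_numpy())

# Rows: test samples; columns: classes in clf.classes_ order
proba = clf.predict_proba(test[features])
print(proba[0:10])

# predict() equals the class with the highest averaged probability
same = bool((clf.classes_[proba.argmax(axis=1)] == clf.predict(test[features])).all())
print('argmax matches predict:', same)
```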

![# mapping names for the plants for each predicted plant class preds = iris.target_names[clf.predict(test[features])] # View the PREDICTED species for the first five observations preds[0:5] Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-103-2048.jpg)
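Because `iris.target_names` is a NumPy array, indexing it with the array of predicted codes returns the matching species names in one step. This is only correct when the codes follow the order of `target_names`, which holds here because the sketch trains on the category codes (an assumption in place of the slide's `pd.factorize`).

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train, test = df[df['is_train']], df[~df['is_train']]
features = df.columns[:4]
clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], train['species'].cat.codes.to_numpy())

# Fancy indexing: code 0 -> 'setosa', 1 -> 'versicolor', 2 -> 'virginica'
preds = iris.target_names[clf.predict(test[features])]
print(preds[0:5])
```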

![# Viewing the ACTUAL species for the first five observations test['species'].head() Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-104-2048.jpg)
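Comparing the predicted and actual species row by row can also be summarized as a single accuracy figure. The sketch places the two side by side and then computes the share of matching class codes. `comparison` and `accuracy` are illustrative names, and the setup is the same assumed pipeline.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train, test = df[df['is_train']], df[~df['is_train']]
features = df.columns[:4]
clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], train['species'].cat.codes.to_numpy())
preds = iris.target_names[clf.predict(test[features])]

# Actual vs predicted names for the first five test rows
comparison = pd.DataFrame({'actual': test['species'].astype(str).to_numpy(),
                           'predicted': preds})
print(comparison.head())

# Fraction of test rows whose predicted code equals the true code
accuracy = (clf.predict(test[features]) == test['species'].cat.codes.to_numpy()).mean()
print('Accuracy:', accuracy)
```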

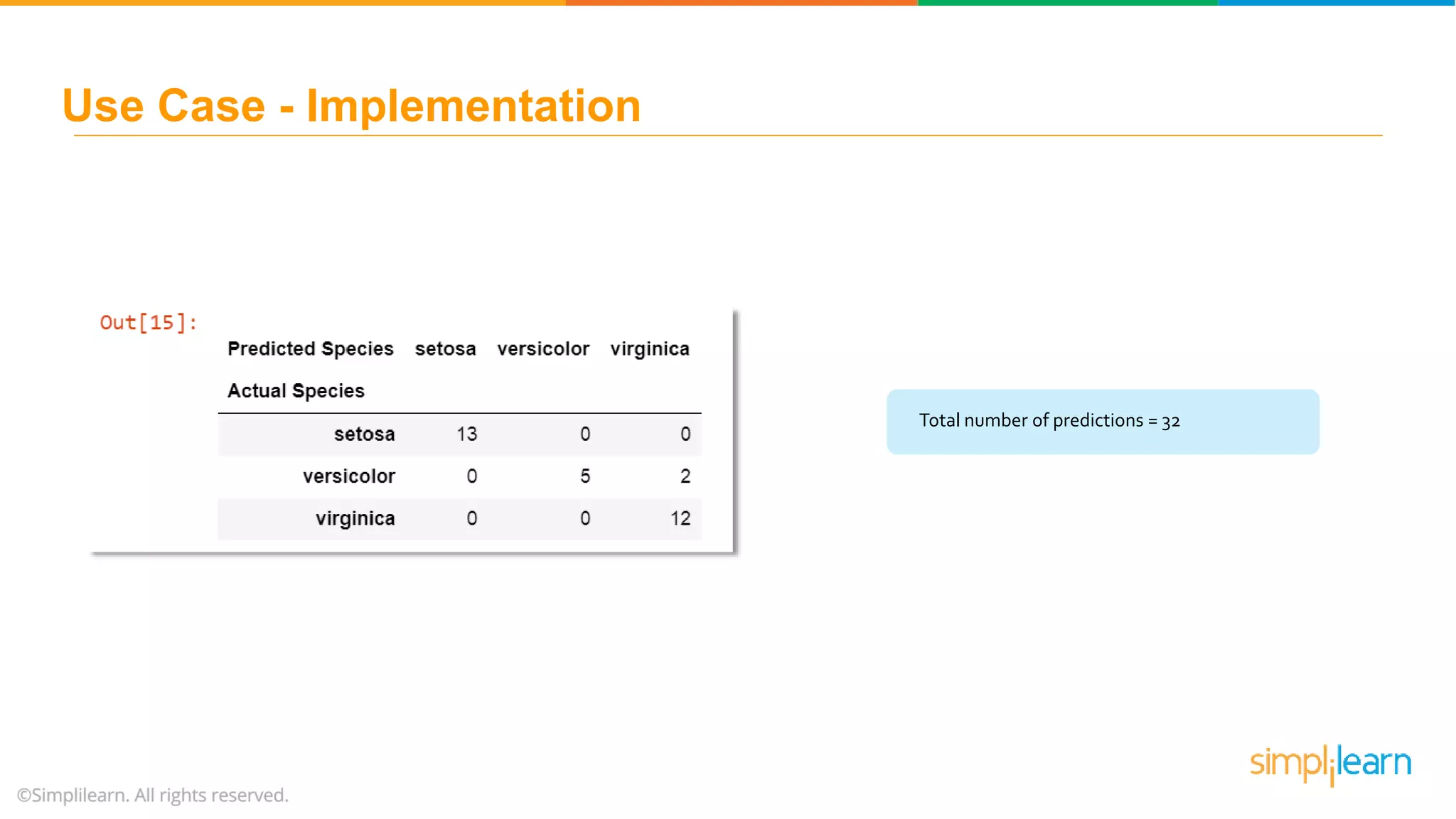

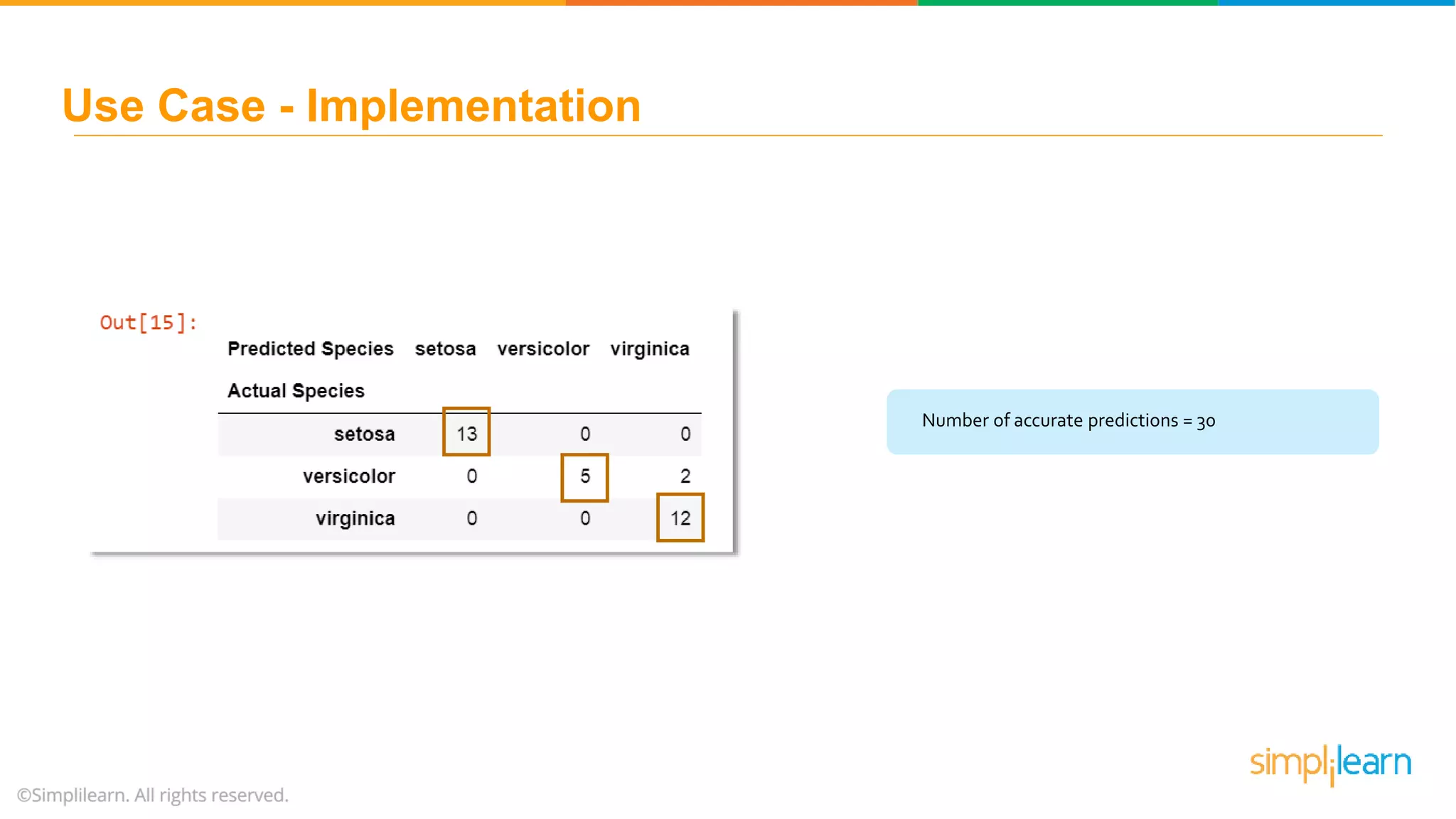

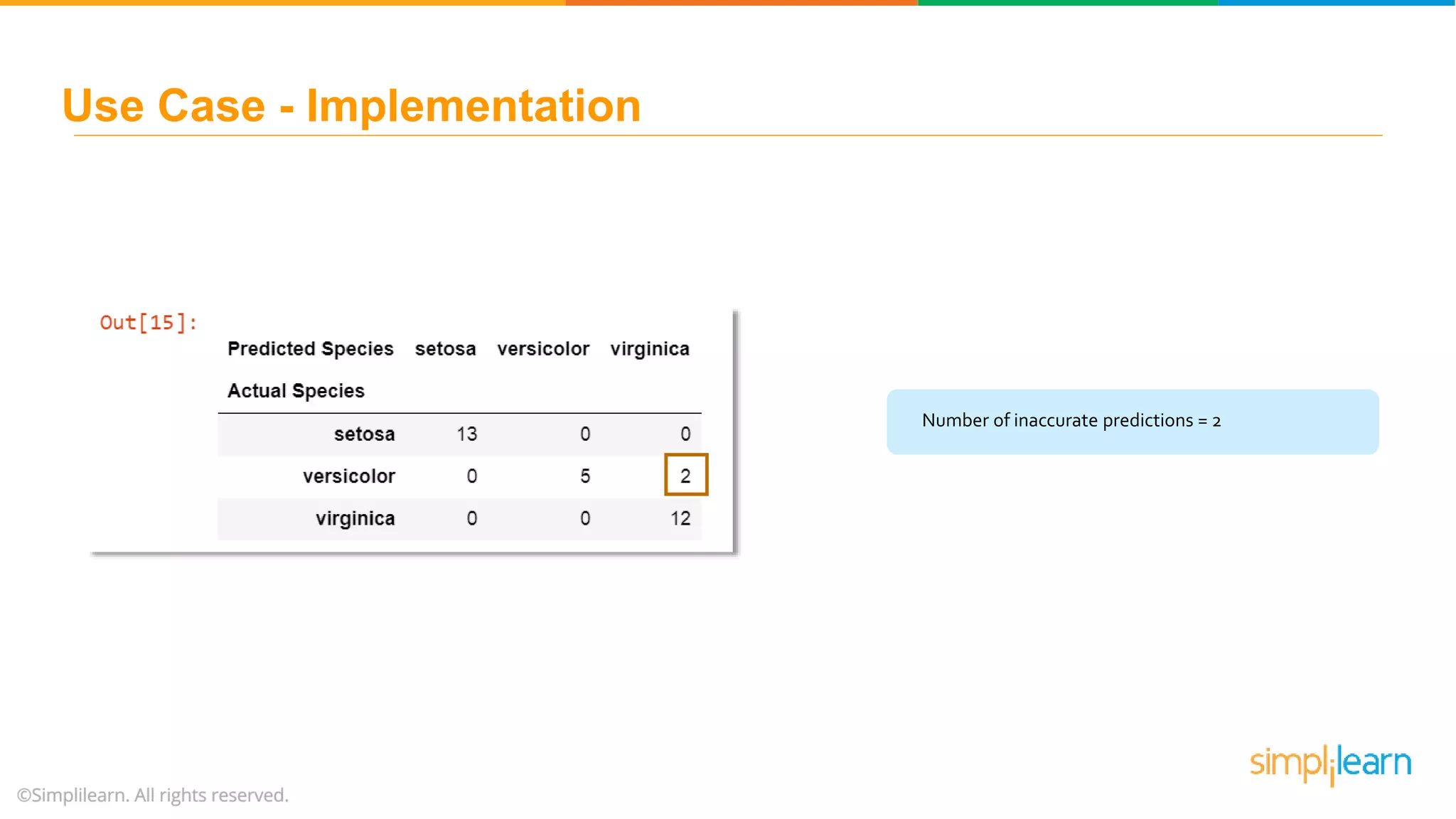

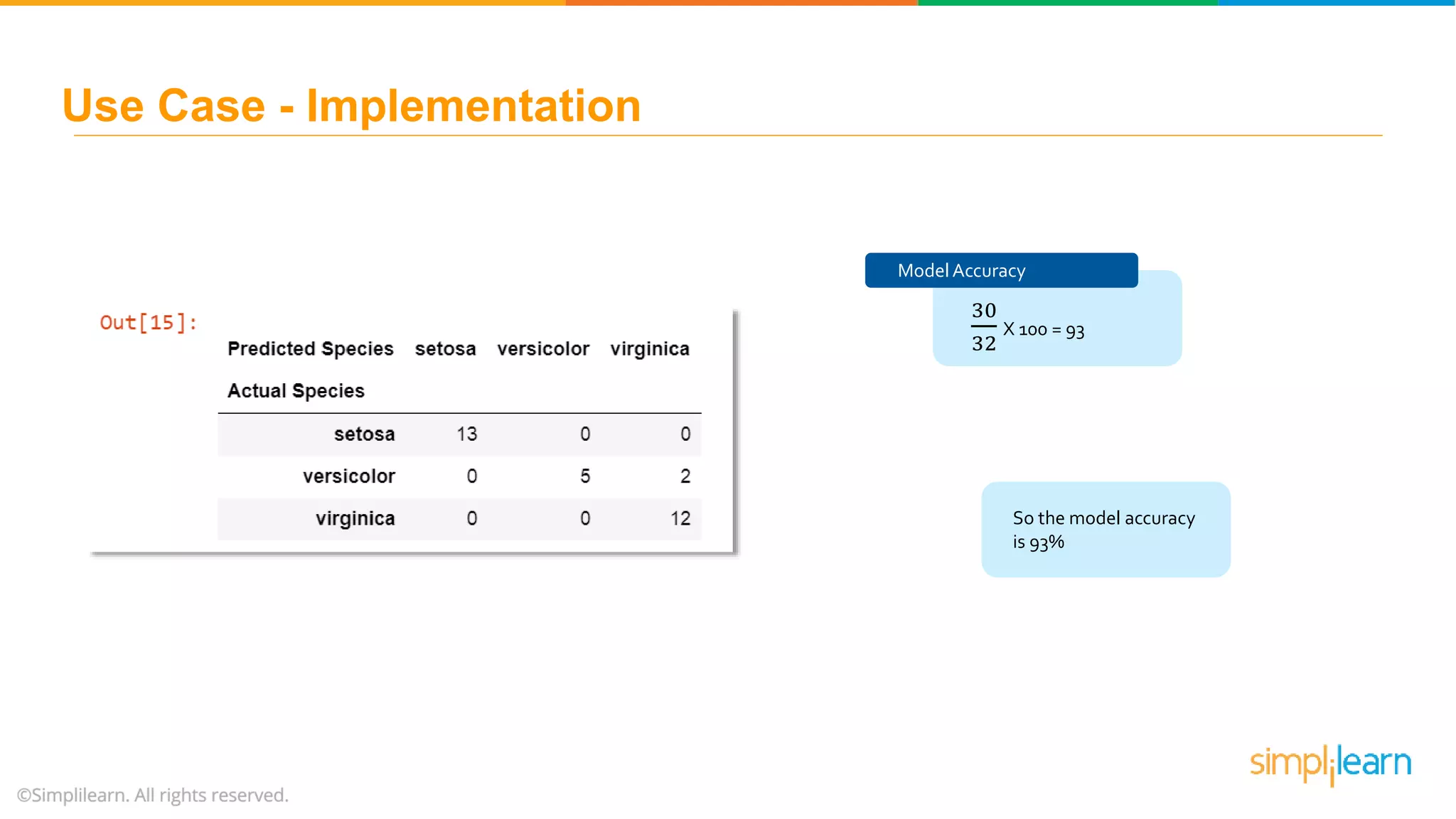

![# Creating confusion matrix pd.crosstab(test['species'], preds, rownames=['Actual Species'], colnames=['Predicted Species']) Use Case - Implementation](https://image.slidesharecdn.com/randomforestalgorithm-randomforestexplainedrandomforestinmachinelearningsimplilearn-180323071347/75/Random-Forest-Algorithm-Random-Forest-Explained-Random-Forest-In-Machine-Learning-Simplilearn-105-2048.jpg)
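`pd.crosstab` counts each pair of actual and predicted species. The sketch converts the actual species to plain strings first, which keeps the table limited to the observed labels. The diagonal holds the correctly classified rows. The setup is the same assumed pipeline, and `actual` and `cm` are illustrative names. `sklearn.metrics.confusion_matrix` produces the same counts as a plain array.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['species'] = pd.Categorical.from_codes(iris.target, iris.target_names)
rng = np.random.default_rng(0)  # illustrative seed
df['is_train'] = rng.uniform(0, 1, len(df)) <= 0.75
train, test = df[df['is_train']], df[~df['is_train']]
features = df.columns[:4]
clf = RandomForestClassifier(n_jobs=2, random_state=0)
clf.fit(train[features], train['species'].cat.codes.to_numpy())
preds = iris.target_names[clf.predict(test[features])]

# Rows: true species; columns: predicted species; cells: counts of test rows
actual = test['species'].astype(str)
cm = pd.crosstab(actual, preds, rownames=['Actual Species'], colnames=['Predicted Species'])
print(cm)
```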