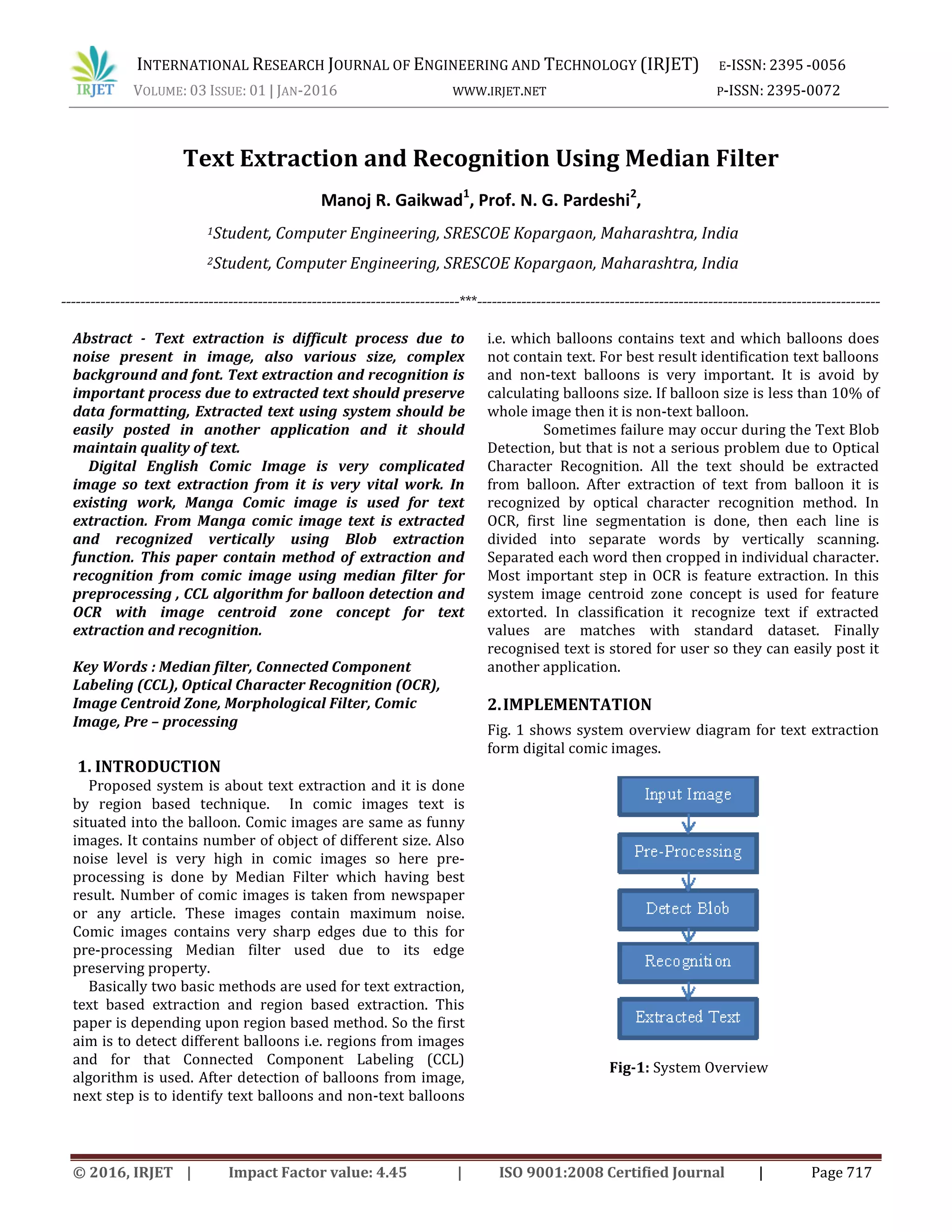

This document discusses a method for extracting and recognizing text from digital comic images. It begins with an introduction describing the challenges of text extraction from complex comic images. It then describes the specific method used, which includes preprocessing the image with a median filter to reduce noise, detecting text "balloons" using connected component labeling algorithms, and then applying optical character recognition with an image centroid concept to extract and recognize the text. The key aspects of the proposed method are preprocessing with median filtering for edge preservation, balloon detection using connected component labeling, and using image centroid zones for feature extraction in optical character recognition of the text.

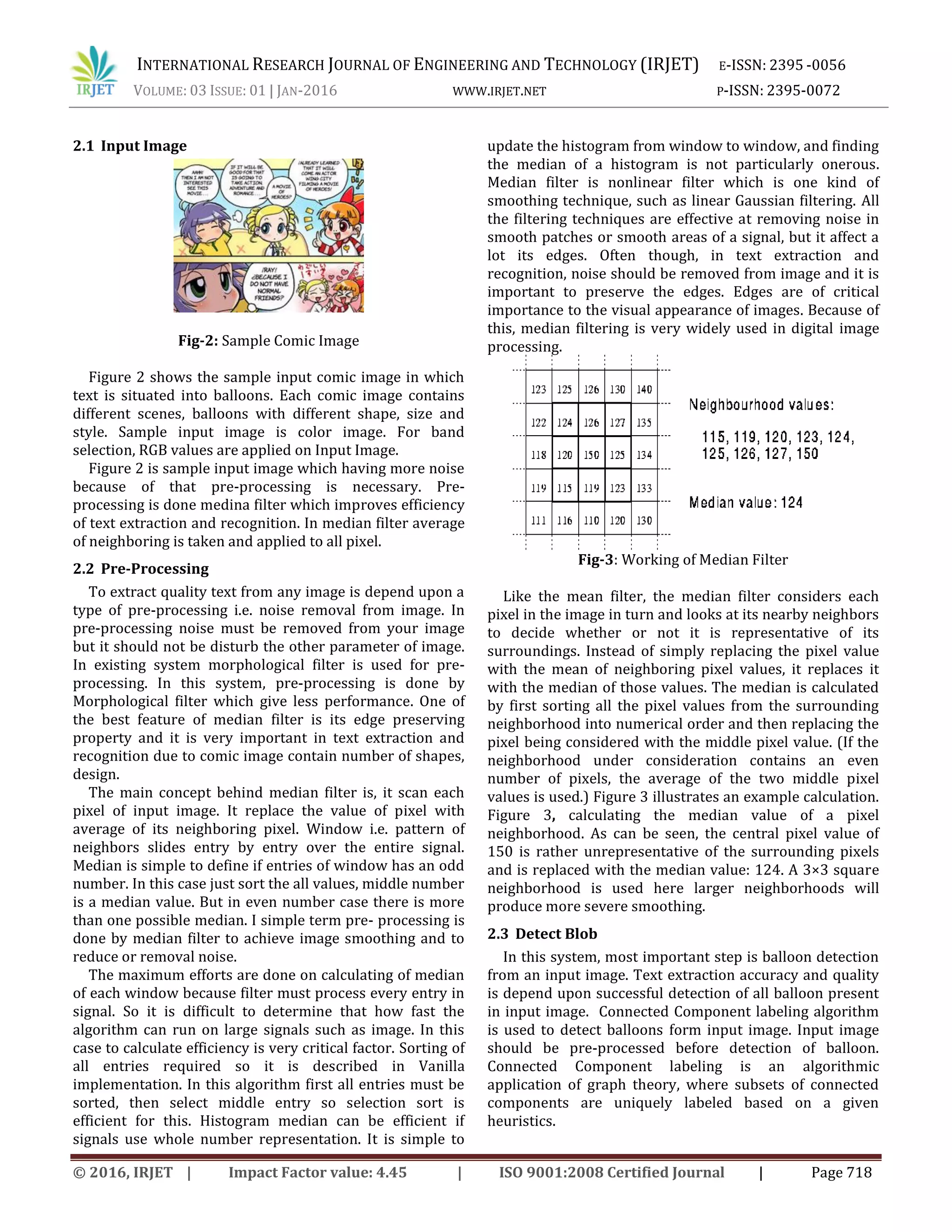

![INTERNATIONAL RESEARCH JOURNAL OF ENGINEERING AND TECHNOLOGY (IRJET) E-ISSN: 2395 -0056 VOLUME: 03 ISSUE: 01 | JAN-2016 WWW.IRJET.NET P-ISSN: 2395-0072 © 2016, IRJET | Impact Factor value: 4.45 | ISO 9001:2008 Certified Journal | Page 719 First step of connected component labeling algorithm is to detect boundaries of different regions. It is useful to extract regions which are not isolated by boundary. Connected components is known as set of pixel which are not separated by a boundary. Each maximal region of connected pixels is called a connected component. In balloon detection phase it produces two types of balloon i.e. text balloon and non-text balloons. The balloon which contain any single character is called as text balloons and other are non-text balloons. Connected-component labeling is used in computer vision to detect connected regions in binary digital images, although color images and data with higher- dimensionality can also be processed. When integrated into an image recognition system or human-computer interaction interface, connected component labeling can operate on a variety of information. Blob extraction is generally performed on the resulting binary image from a thresholding step. Blobs may be counted, filtered, and tracked. Blob extraction is related to but distinct from blob detection. Fig-4: First Pass (Assigning Labels) Figure 4 shows first pass of Connected Component Labeling Algorithm in which each pixel assigned with a label and figure 5 shows the second pass which is useful for aggregation. Fig-5: Second Pass (Aggregation) Working of Connected Component Labeling algorithm is very simple. Initially label is set with new label for e.g. current_label_count=1. Next step is find non background pixel. Select the first non-background pixel and its find neighboring pixel. If not single neighbor is labeled yet, then set value of current pixel to the current_label_count and increment current_label_count. If its neighbors are already labeled then assign its parent’s label to it. Problem occurs when its neighbors have different labels in that case assign lower label count. Continue it for remaining all non- background pixels. It comes under the first pass, in second pass getting the root of each pixel (if labeled) and stores it in patterns list. CCL algorithm detect the balloons available in input image, but it necessary to identify text balloons and non- text balloons. To avoid the false detection and to reduce the complexity the text blobs are to be identified exactly. The identification is done, based on image size and blob size. For that the Area of the blobs are calculated using the following equation. A.TB[i] =TB[i].Width*TB[i].Height After calculation of area of balloon, if area or size of balloon is less than 10% of whole image then it is classified into text blobs and other remaining balloons are classified into non-text balloons. 2.4 Recognition In detection of text balloons, there is a possibility of the false detection. But false detection is not serious issue if text is recognized by Optical Character Recognition. After detection of text balloons OCR is applied on that balloons, matches each character with pre-defined dataset. The process of OCR is simple i.e. segmentation, correlation and classification. After recognition, the extracted text is stored in text file for user convenience. In OCR proposed system used Line segmentation, Word Segmentation and Character Segmentation and Image centroid zone concept. 2.4.1 Line Segmentation Every input image which contain text may contain any number of lines. Thus, we would first need to separate lines from the document and then proceed further. This is what we refer to as line segmentation. To perform line segmentation, we need to scan each horizontal pixel row starting from the top of the document. Lines are separated where we find a horizontal pixel row with no black pixels. This row acts as a separation between two lines.](https://image.slidesharecdn.com/irjet-v3i1125-171020110708/75/Text-Extraction-and-Recognition-Using-Median-Filter-3-2048.jpg)

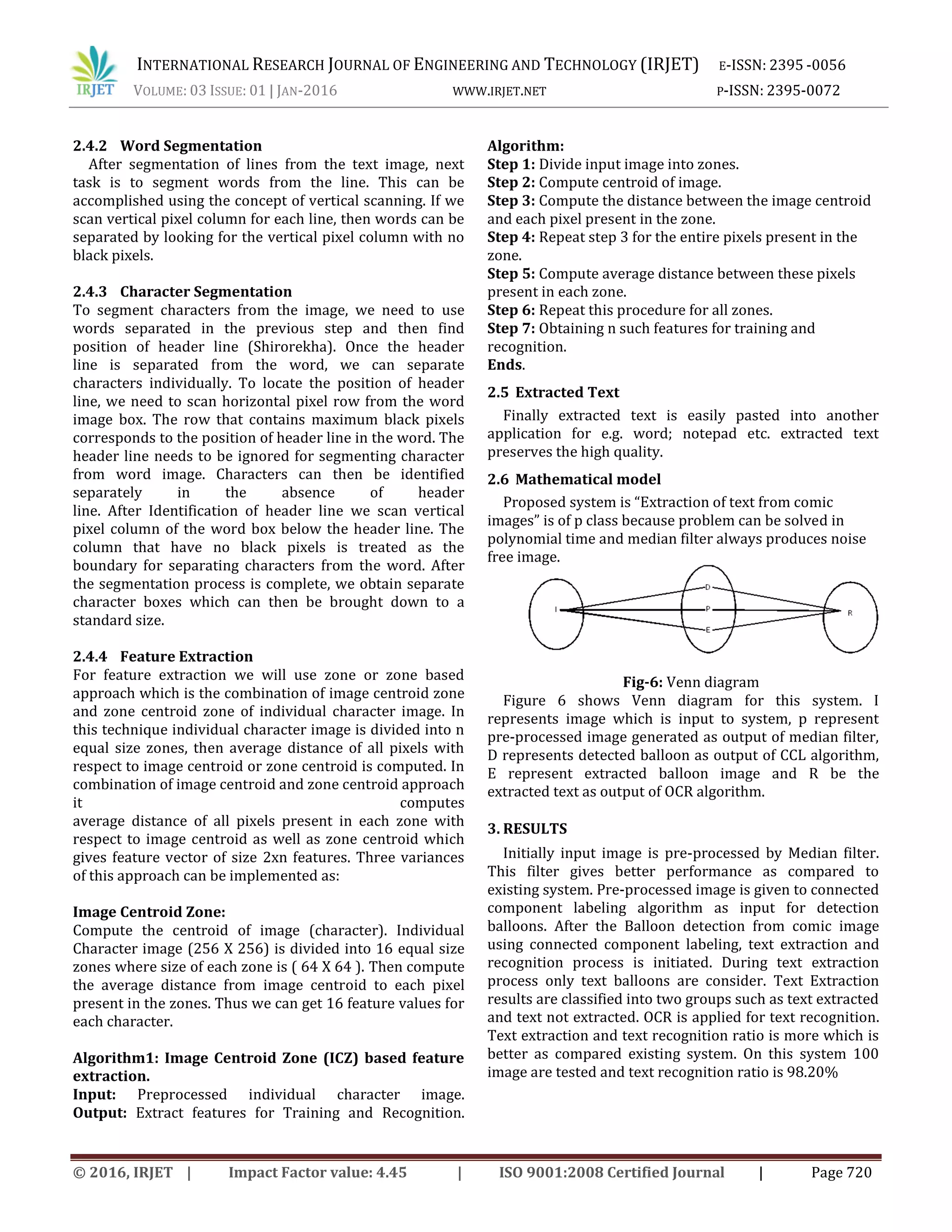

![INTERNATIONAL RESEARCH JOURNAL OF ENGINEERING AND TECHNOLOGY (IRJET) E-ISSN: 2395 -0056 VOLUME: 03 ISSUE: 01 | JAN-2016 WWW.IRJET.NET P-ISSN: 2395-0072 © 2016, IRJET | Impact Factor value: 4.45 | ISO 9001:2008 Certified Journal | Page 721 Table-1: Result Set S N Image Total Char acter Recognized Character Missed Charact er Recognitio n Percentage 1 Image 1 66 63 3 95.45455 2 Image 2 38 36 2 94.73684 3 Image 3 66 64 2 96.9697 4 Image 4 28 28 0 100 5 Image 5 32 31 1 96.875 6 Image 6 81 81 0 100 7 Image 7 74 73 1 98.64865 8 Image 8 83 81 2 97.59036 9 Image 9 60 60 0 100 4. CONCLUSION A proposed system contains region based text extraction technique from digital English comic images. In existing system pre-processing is done by Morphological filter and in proposed system it is done by Median filter which gives better result as compared to the Morphological filter. Text extraction ratio is better in proposed system as compared to existing system due to the CCL algorithm and OCR. Text Extraction ration is 98.20% REFERENCES [1] Sundaresan.M, Ranjini.S. “Text extraction from digital English comic image using the two blobs extraction method” Proceedings on the international conference on Pattern Recognition, Informatics and Medical Engineering (PRIME-2012), pp 467-471, 978-1-4673- 1039-0/12/$31.00 ©2012 IEEE, Mar 2012. [2] Siddhartha Brahma, “Text Extraction Using Shape Context Matching”. COS429: Computer Vision. Vol.1, Jan 12, 2006. [3] Ruini Cao, Chew Lim Tan, “Separation of overlapping text from graphics,” vol.29, no.1, pp.20-31, Jan/Feb 2009. [4] [4] Q. Yuan, C. L. Tan, “Text Extraction from Gray Scale Document Images Using Edge Information,” proceedings of sixth international conference on document analysis and recognition, pp.302-306, 2001. [5] Kohei Arai and Herman Tolle, “Automatic E-Comic Content Adaptation,” International Journal of Ubiquitous Computing (IJUC) vol.1, Issue (1), pp1-11, 2010. [6] Kohei Arai and Herman Tolle, “Method of real time text extraction from digital manga comic image,” International Journal of Image Processing (IJIP), vol.4, Issue (6), pp 669-676, 2010. [7] C. A. Bouman, ”Connected Component Analysis,” Digital Image Processing, pp 1-19, Jan 10, 2011. [8] Tesseractocr.com](https://image.slidesharecdn.com/irjet-v3i1125-171020110708/75/Text-Extraction-and-Recognition-Using-Median-Filter-5-2048.jpg)