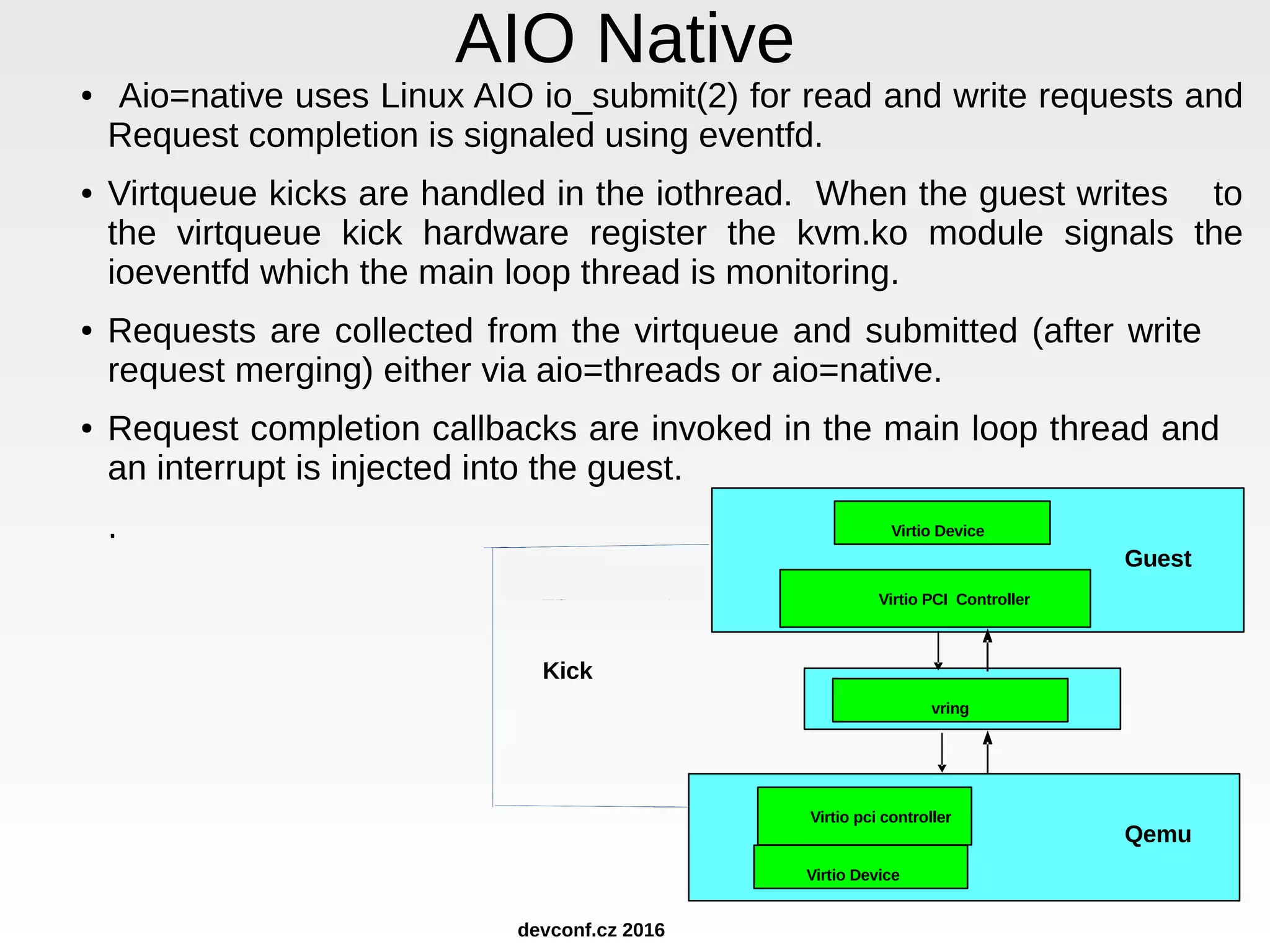

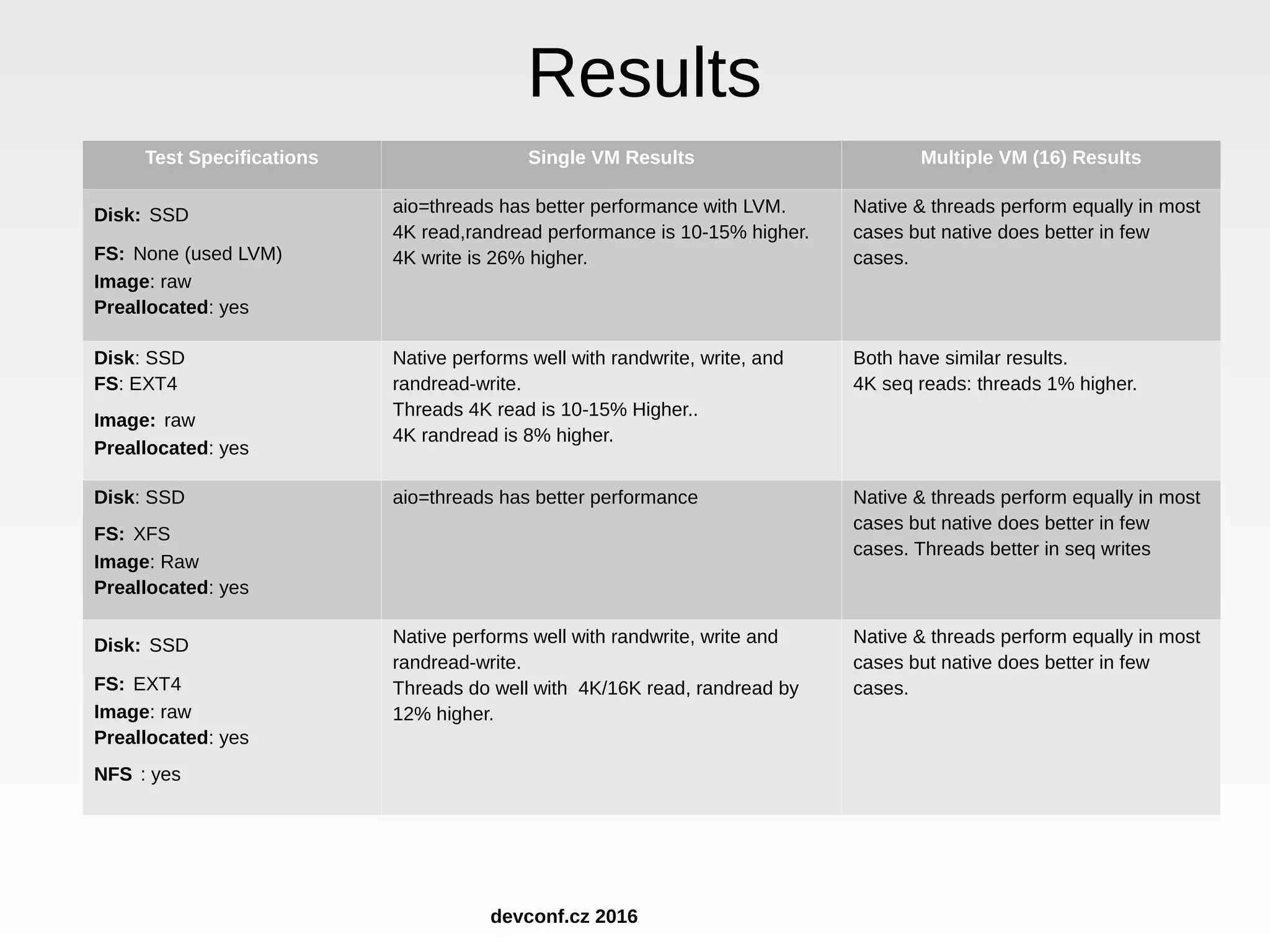

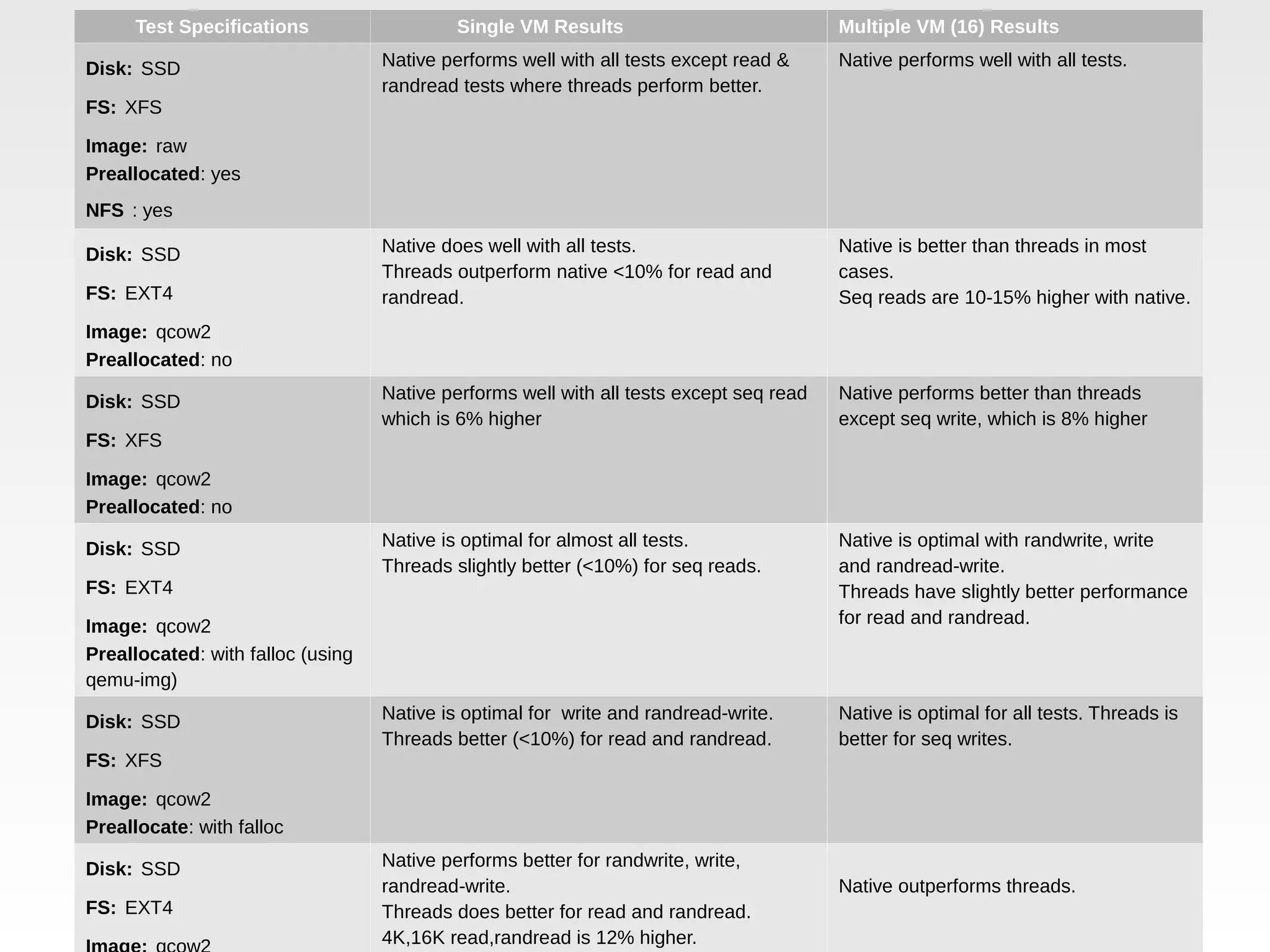

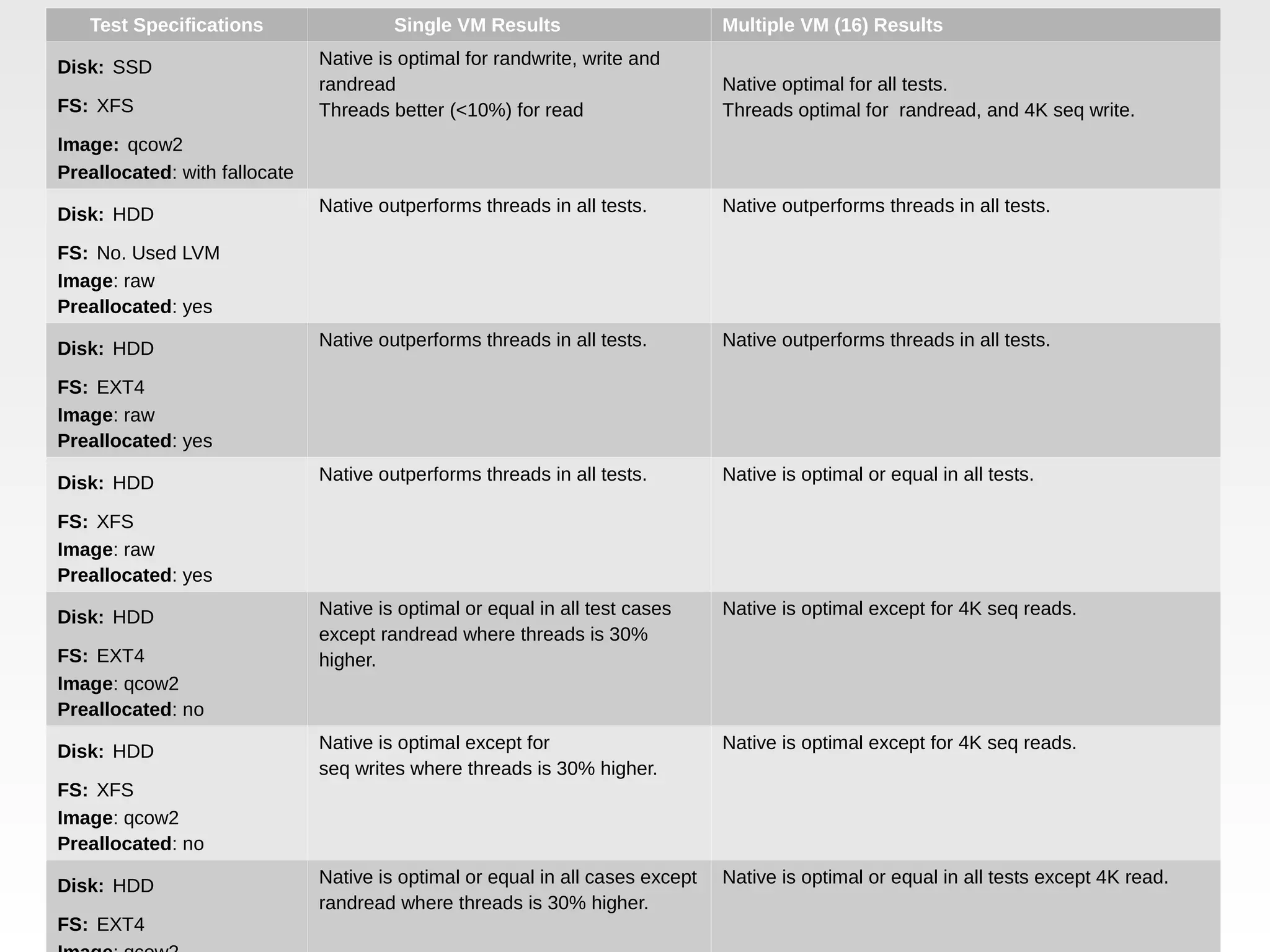

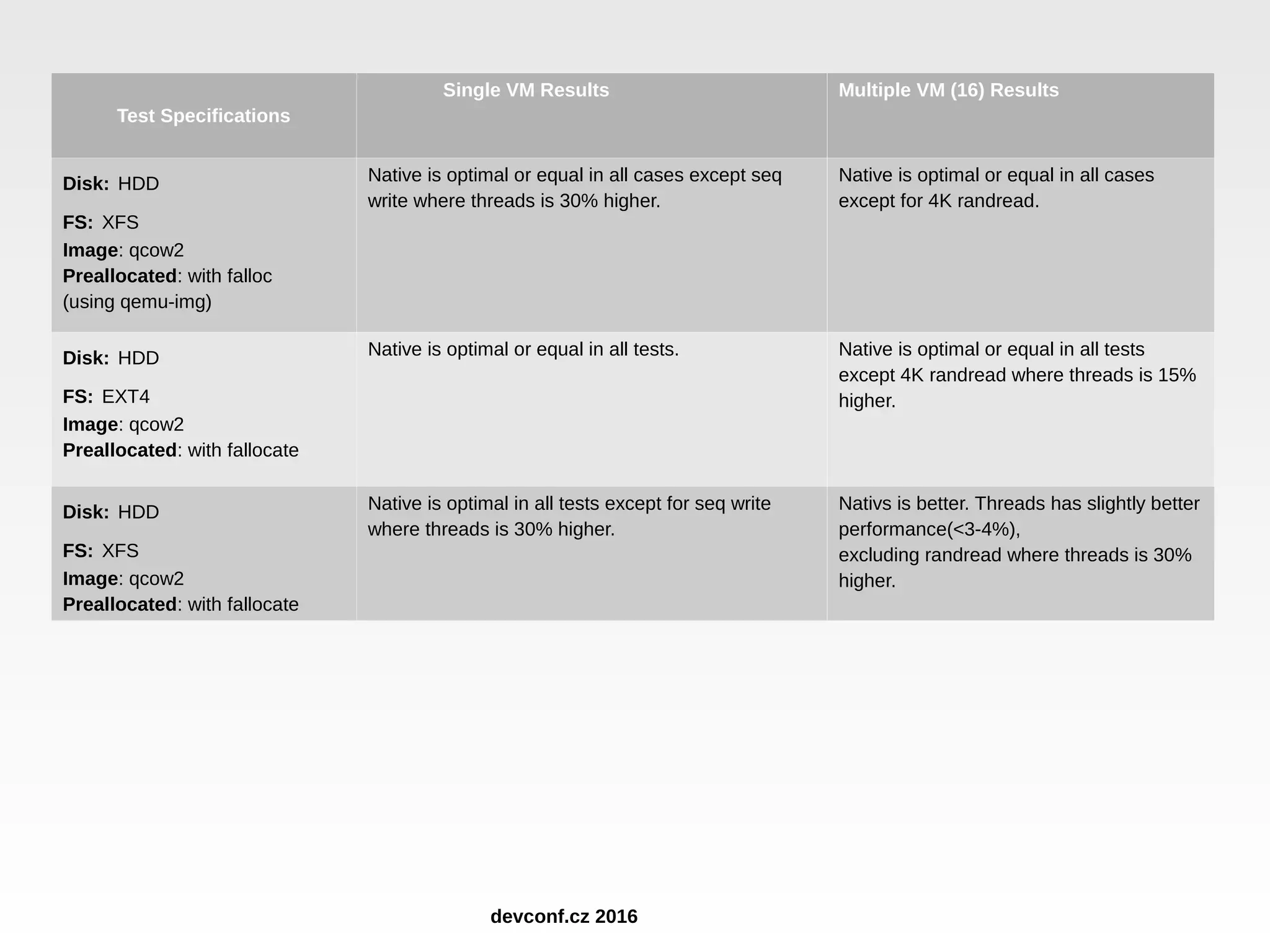

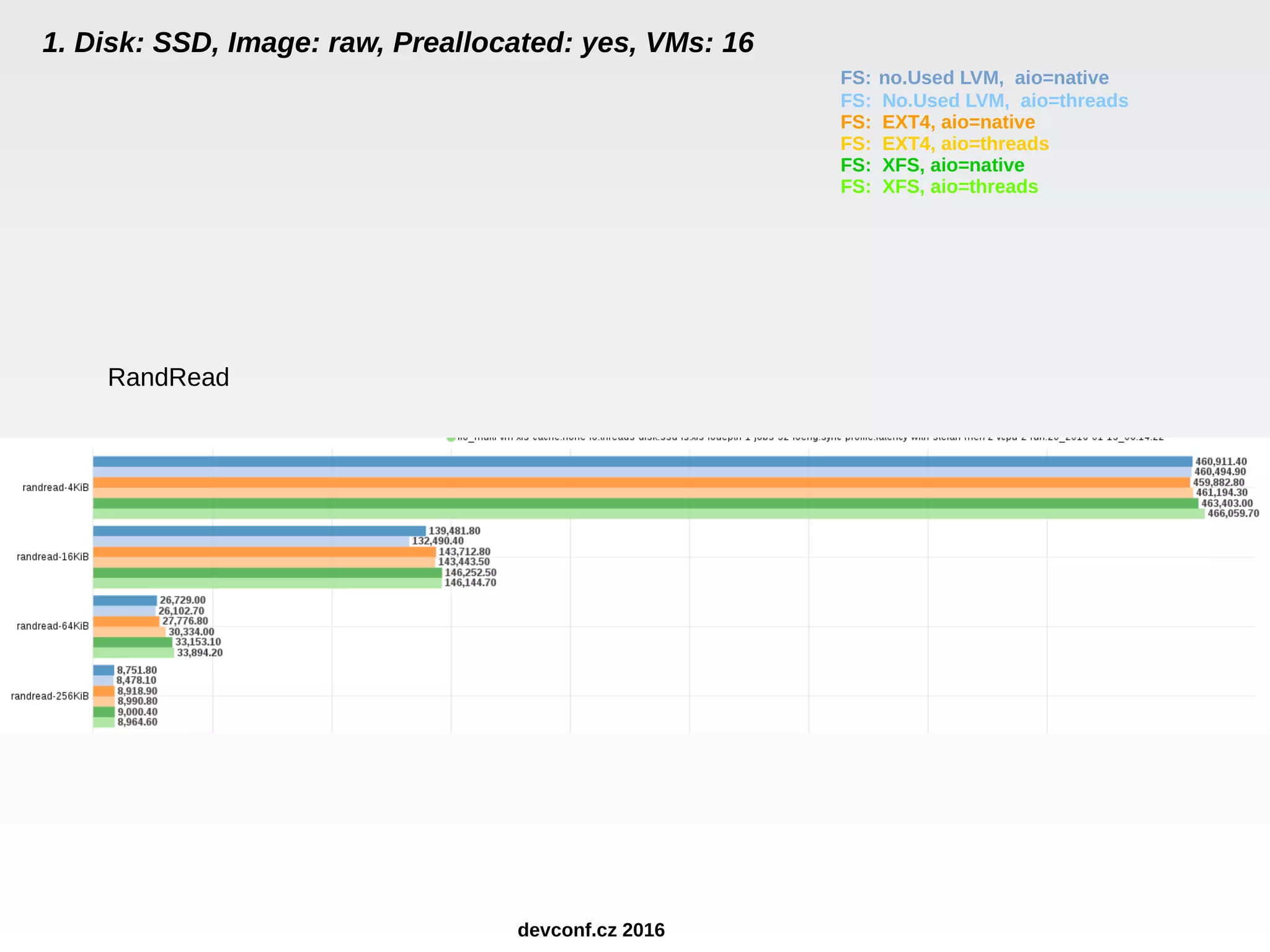

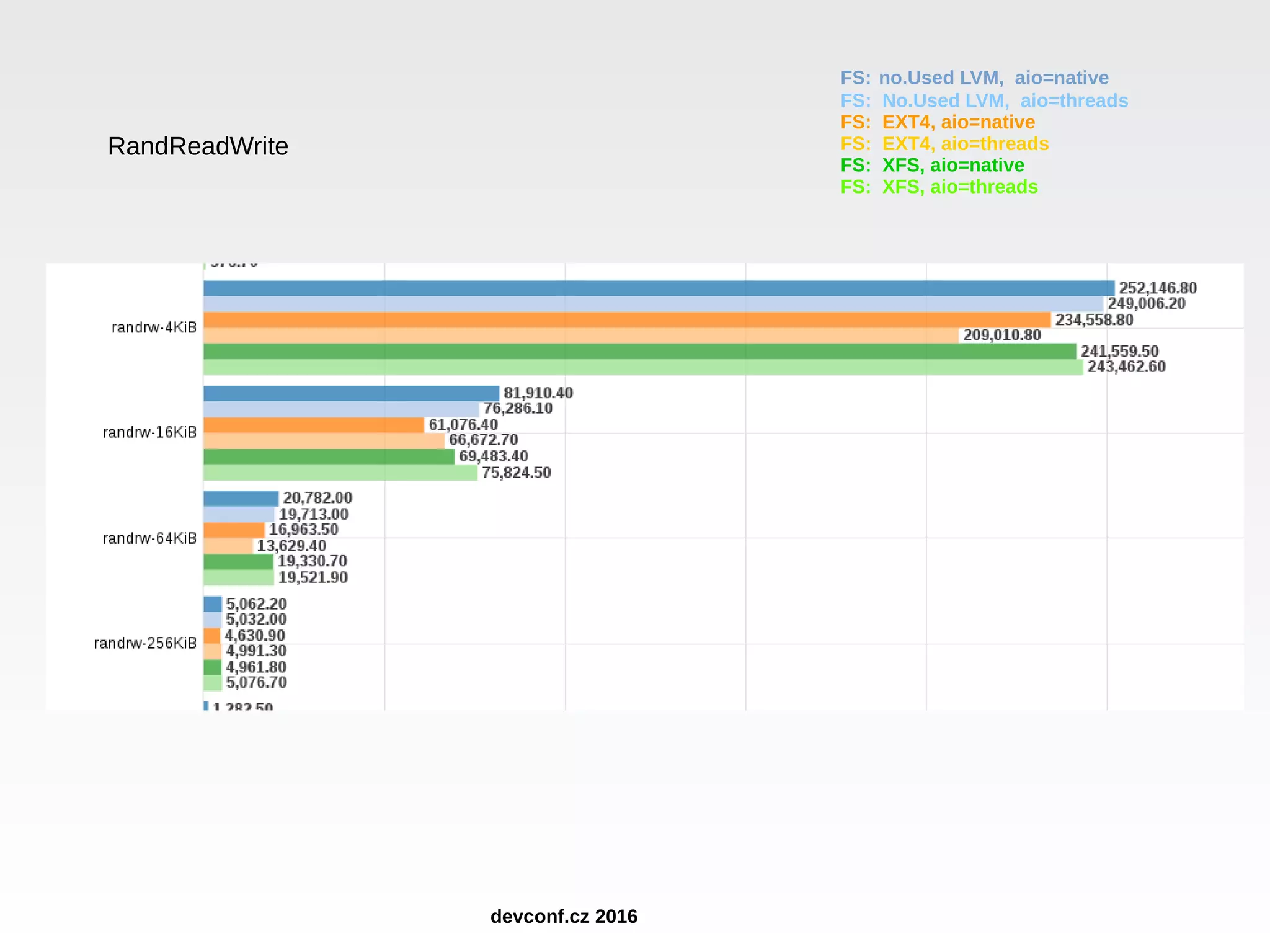

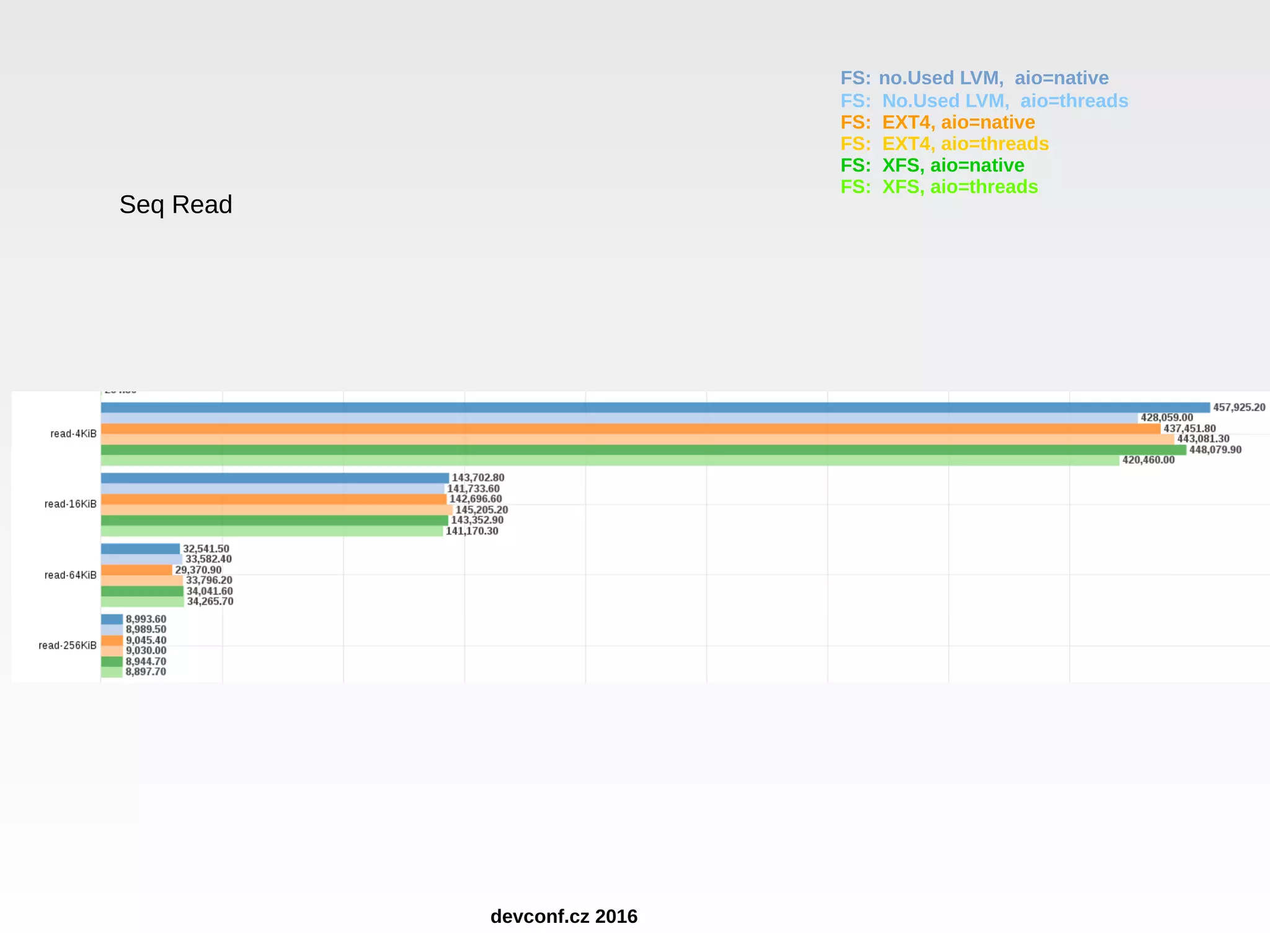

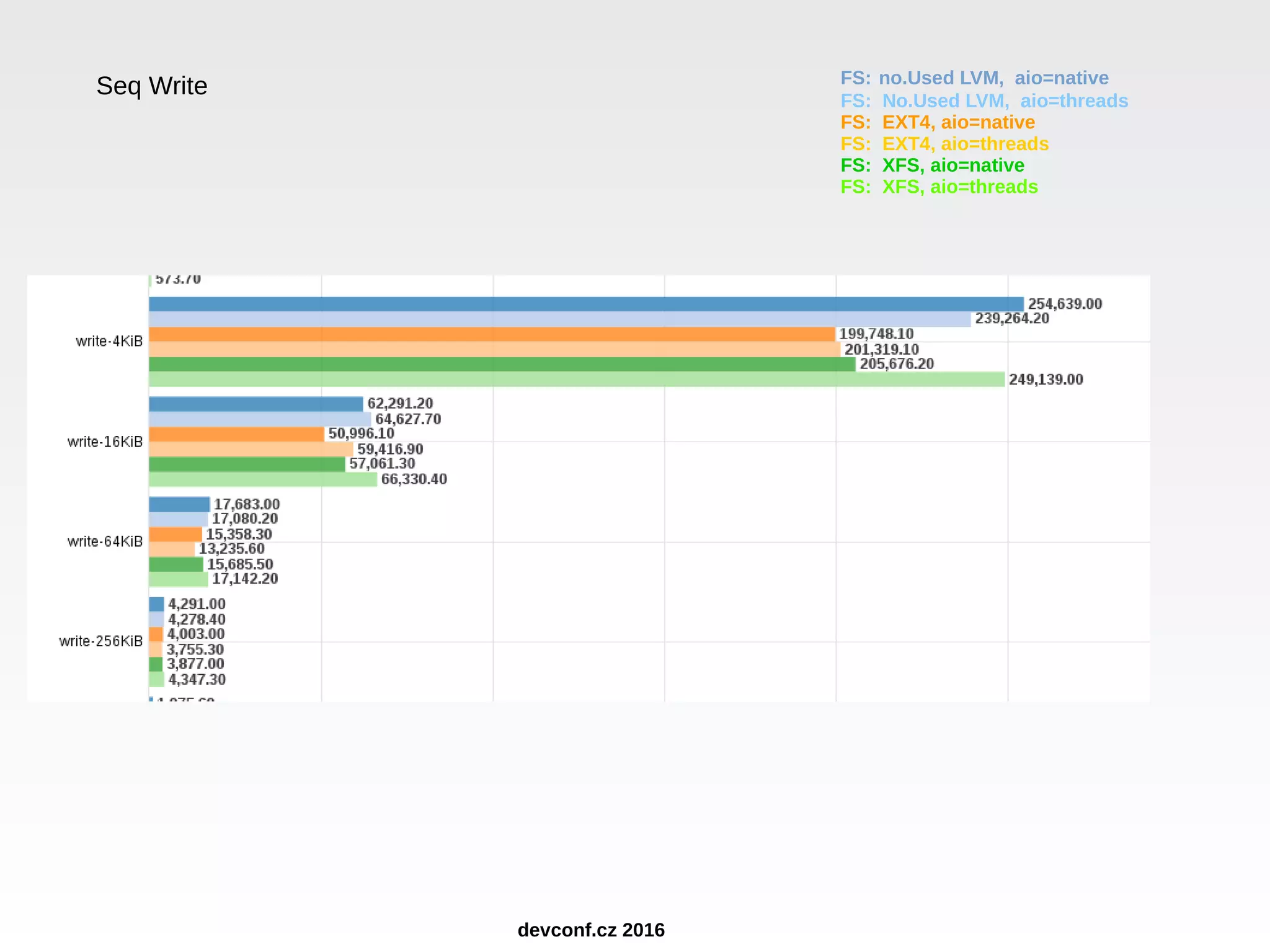

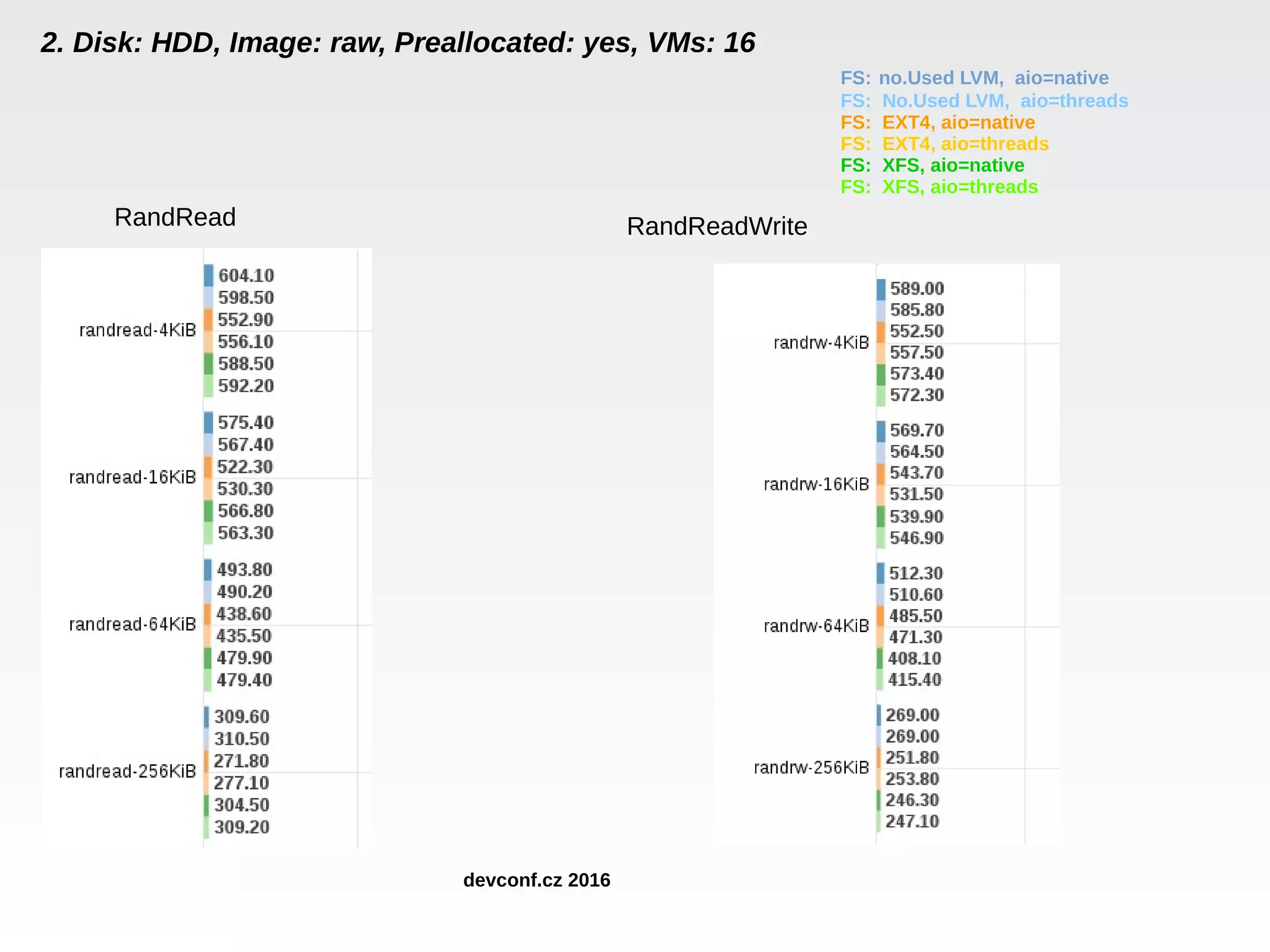

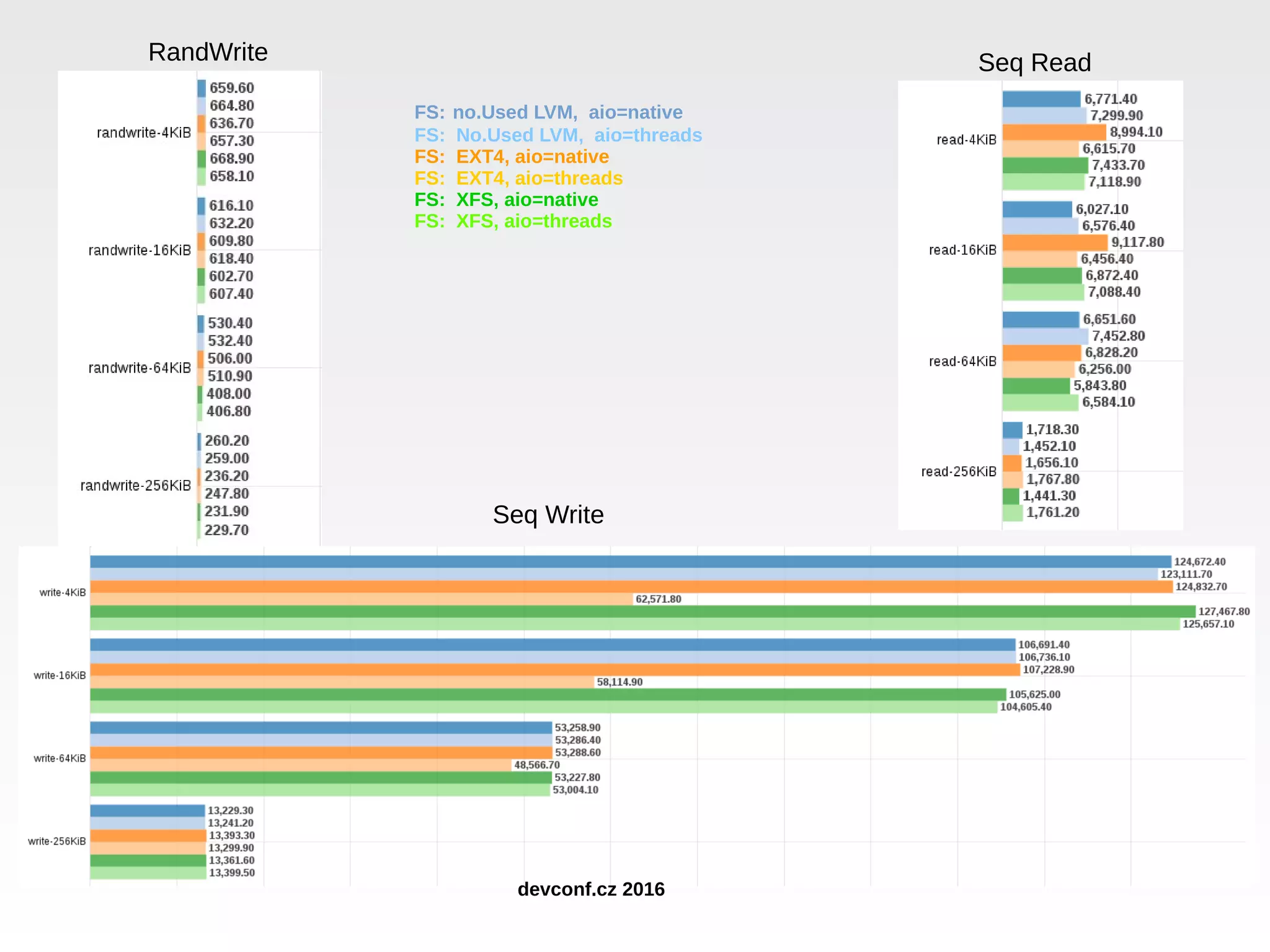

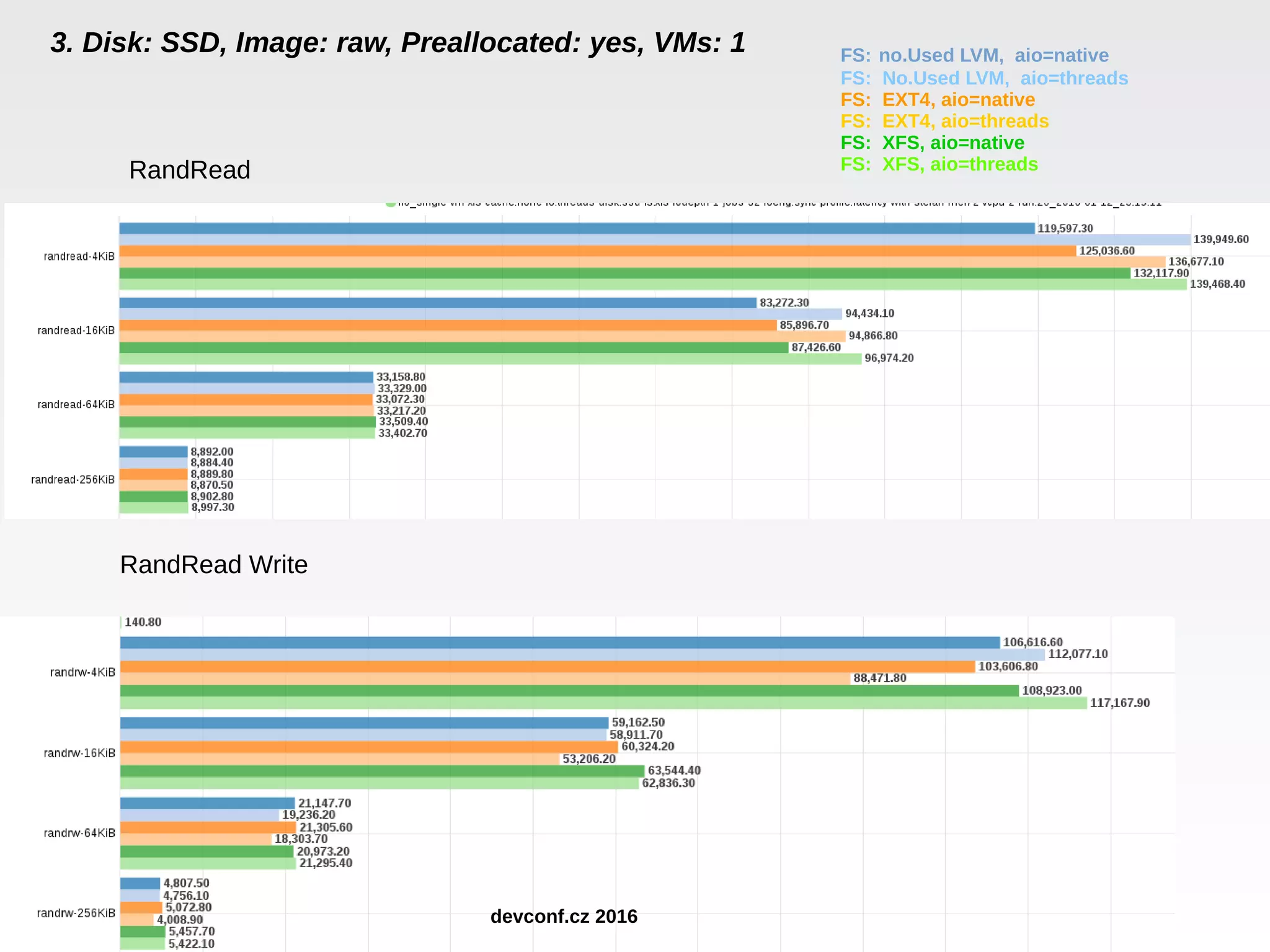

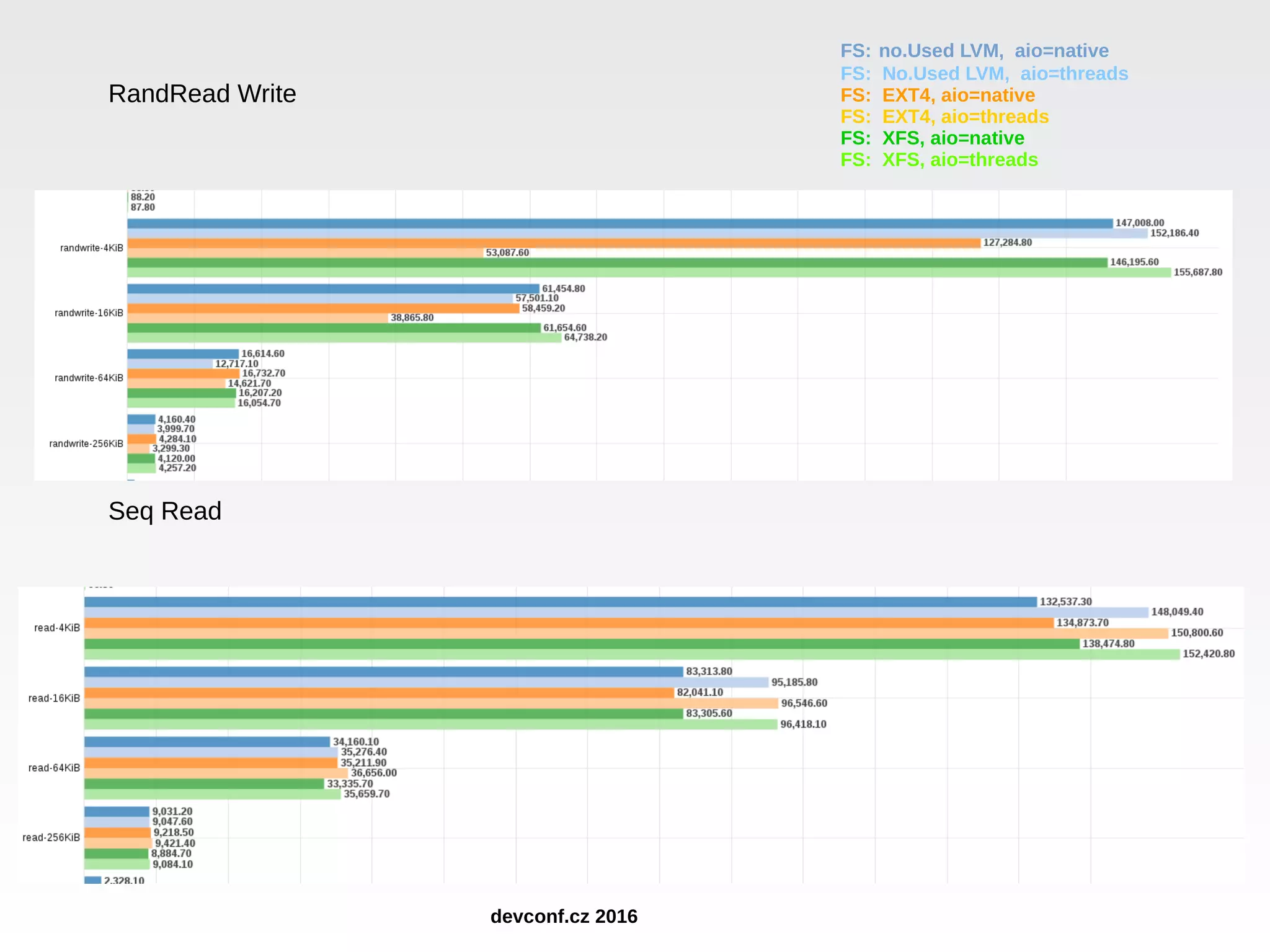

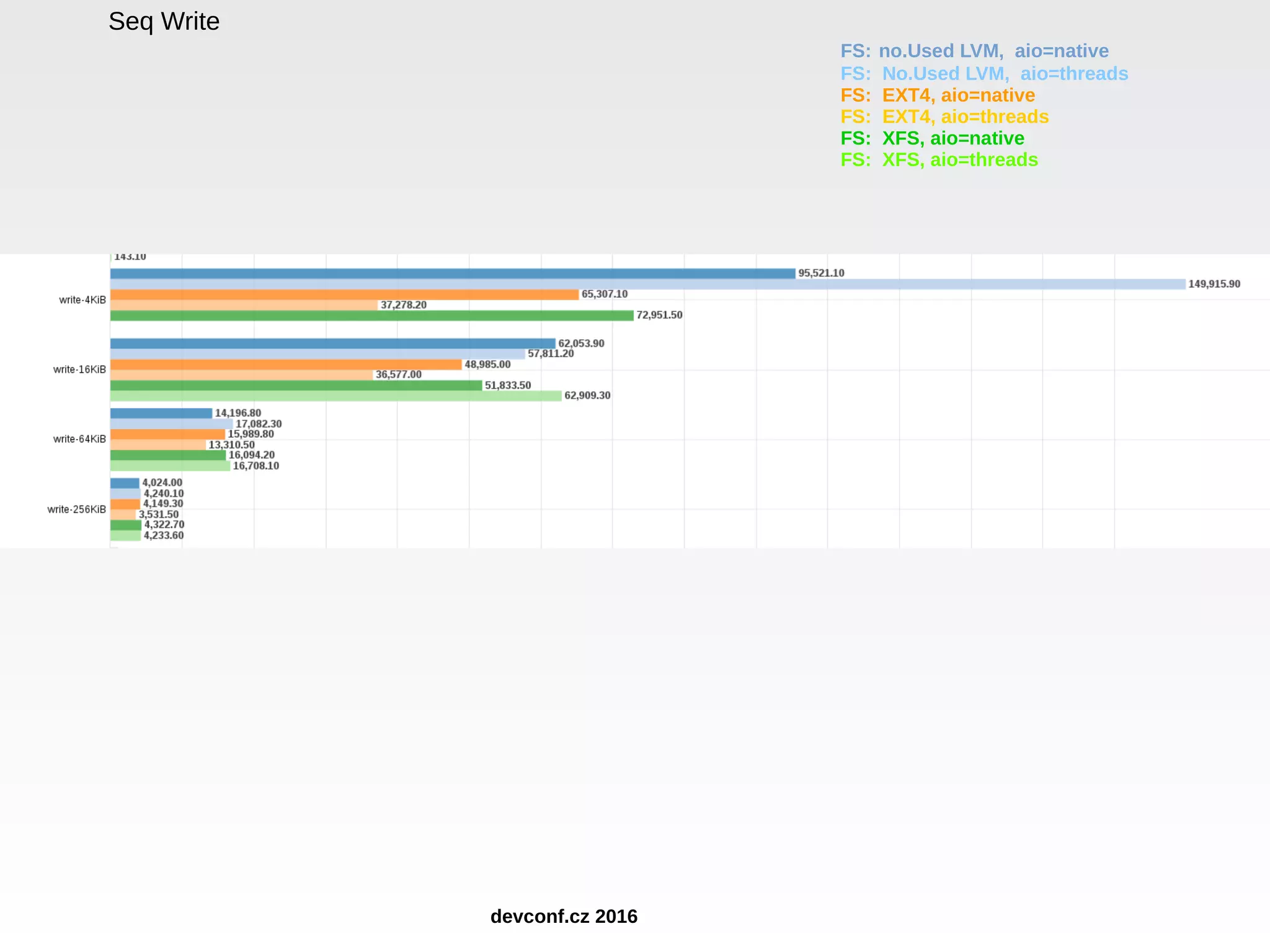

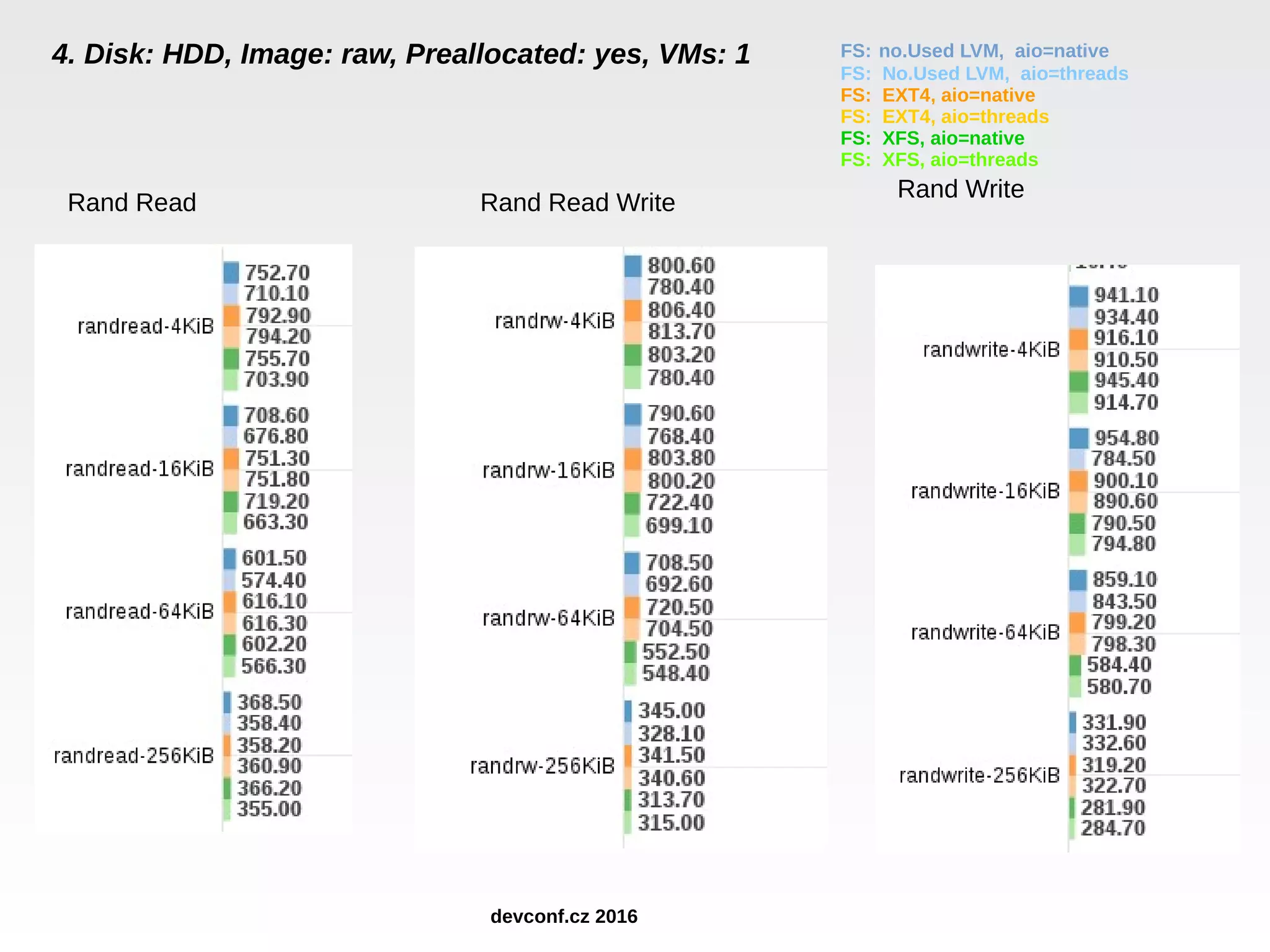

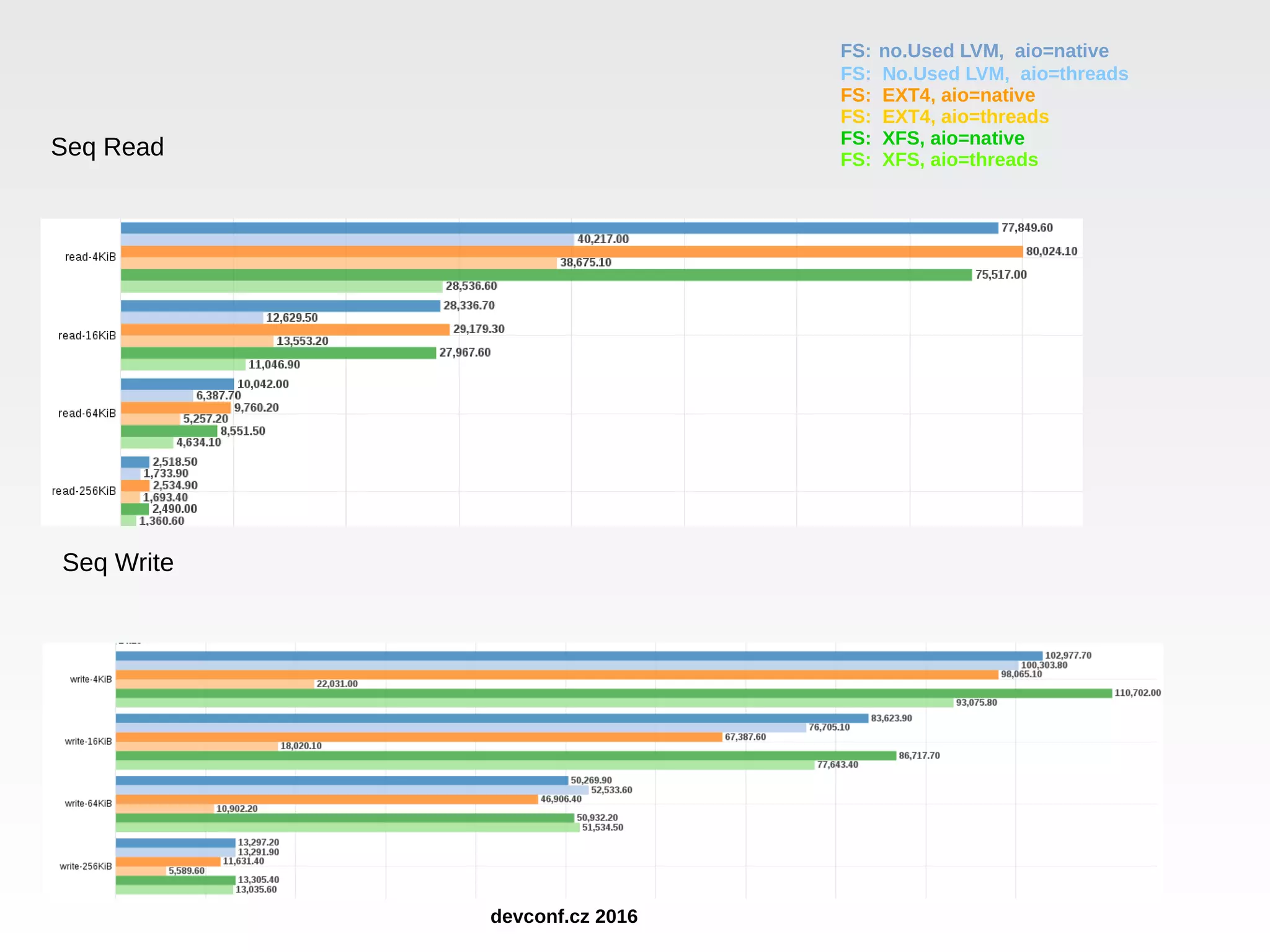

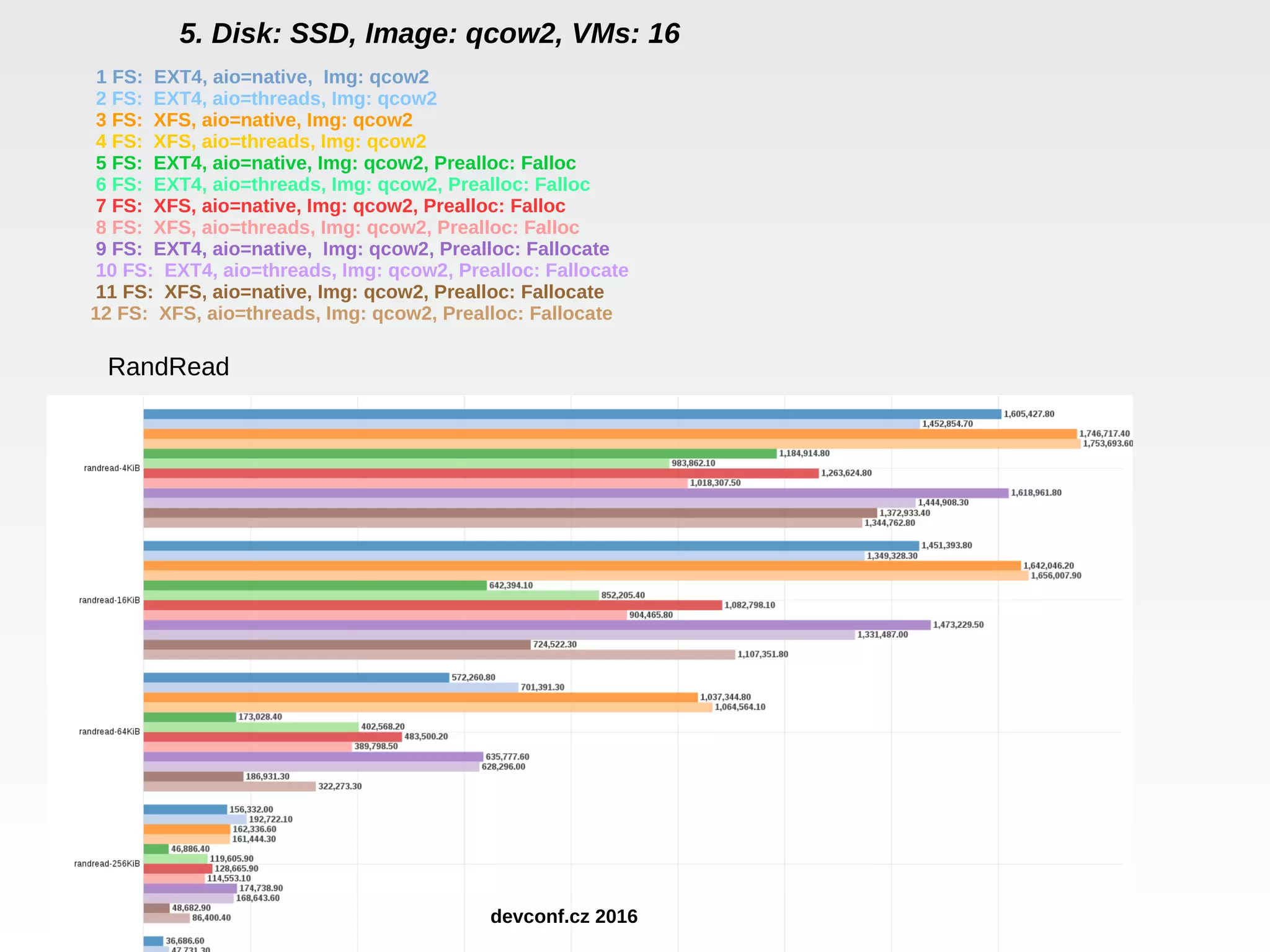

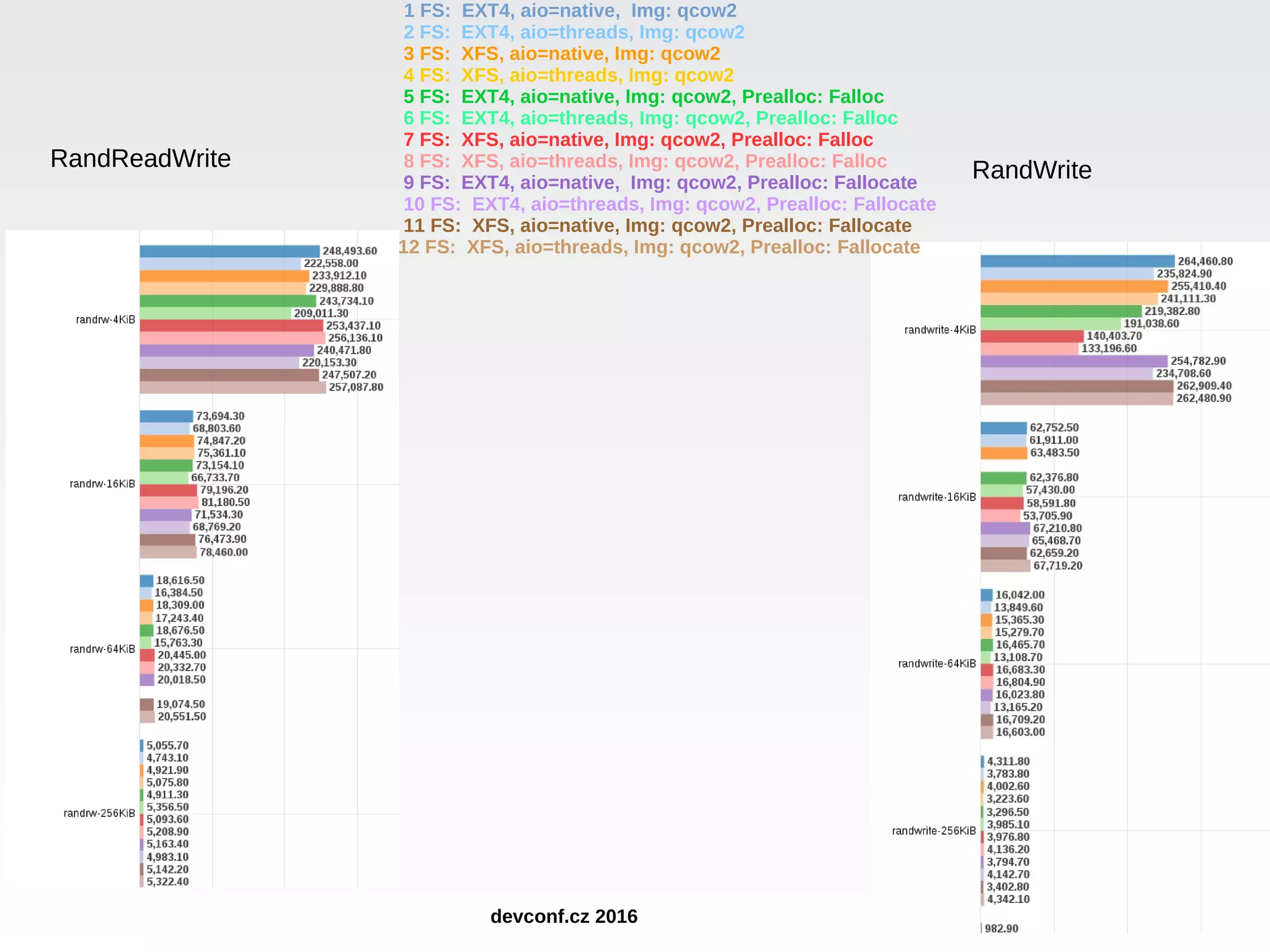

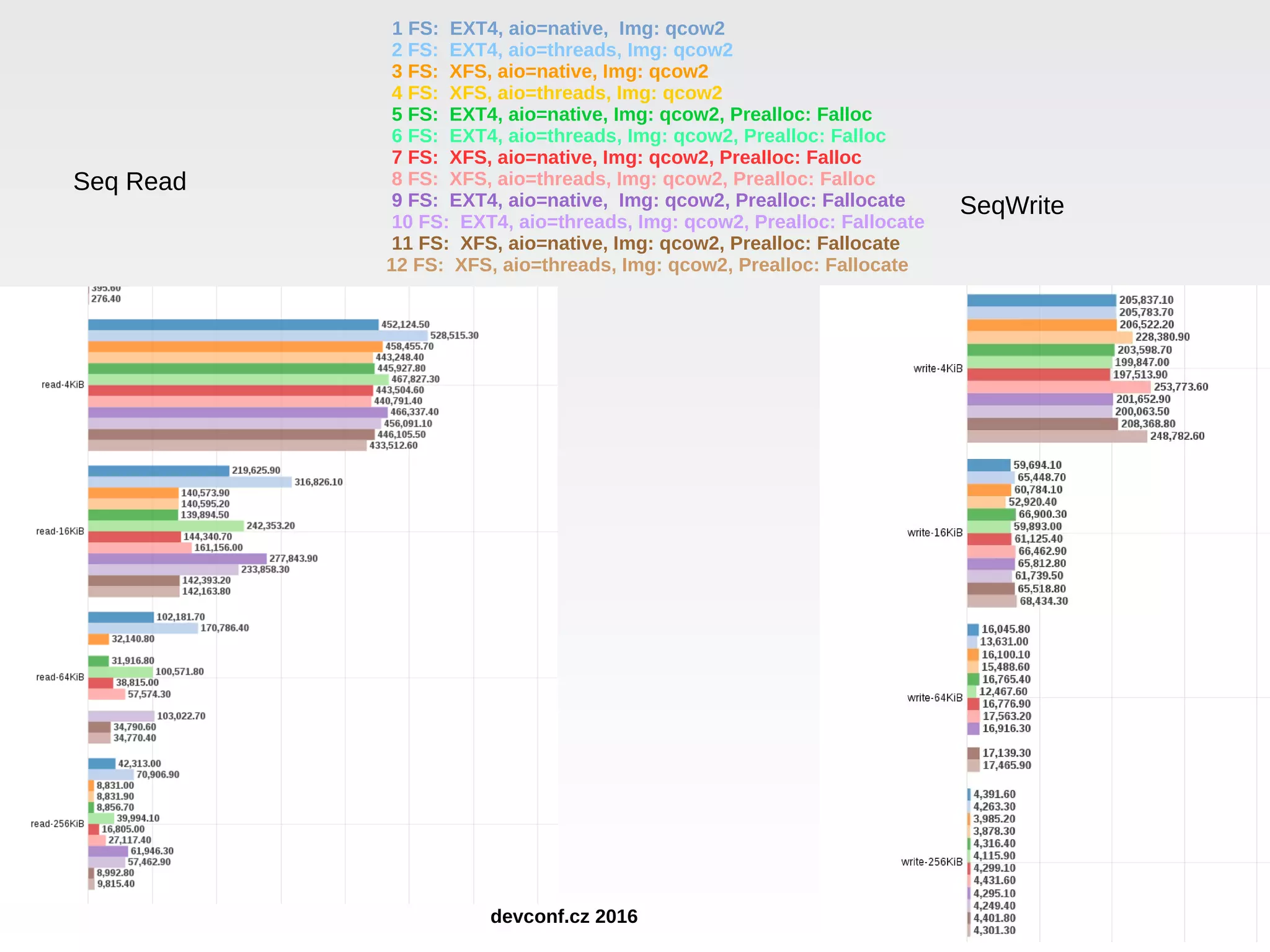

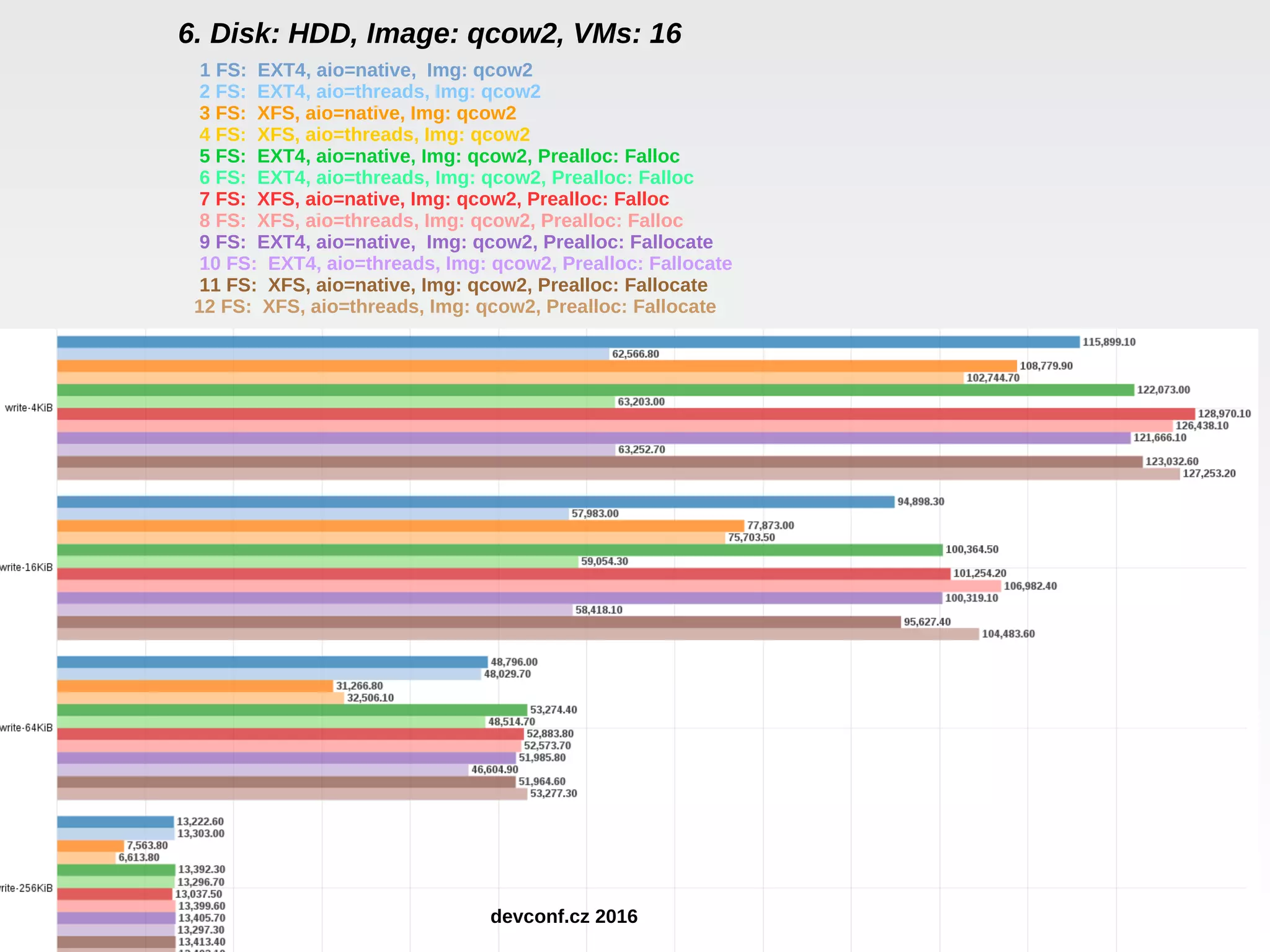

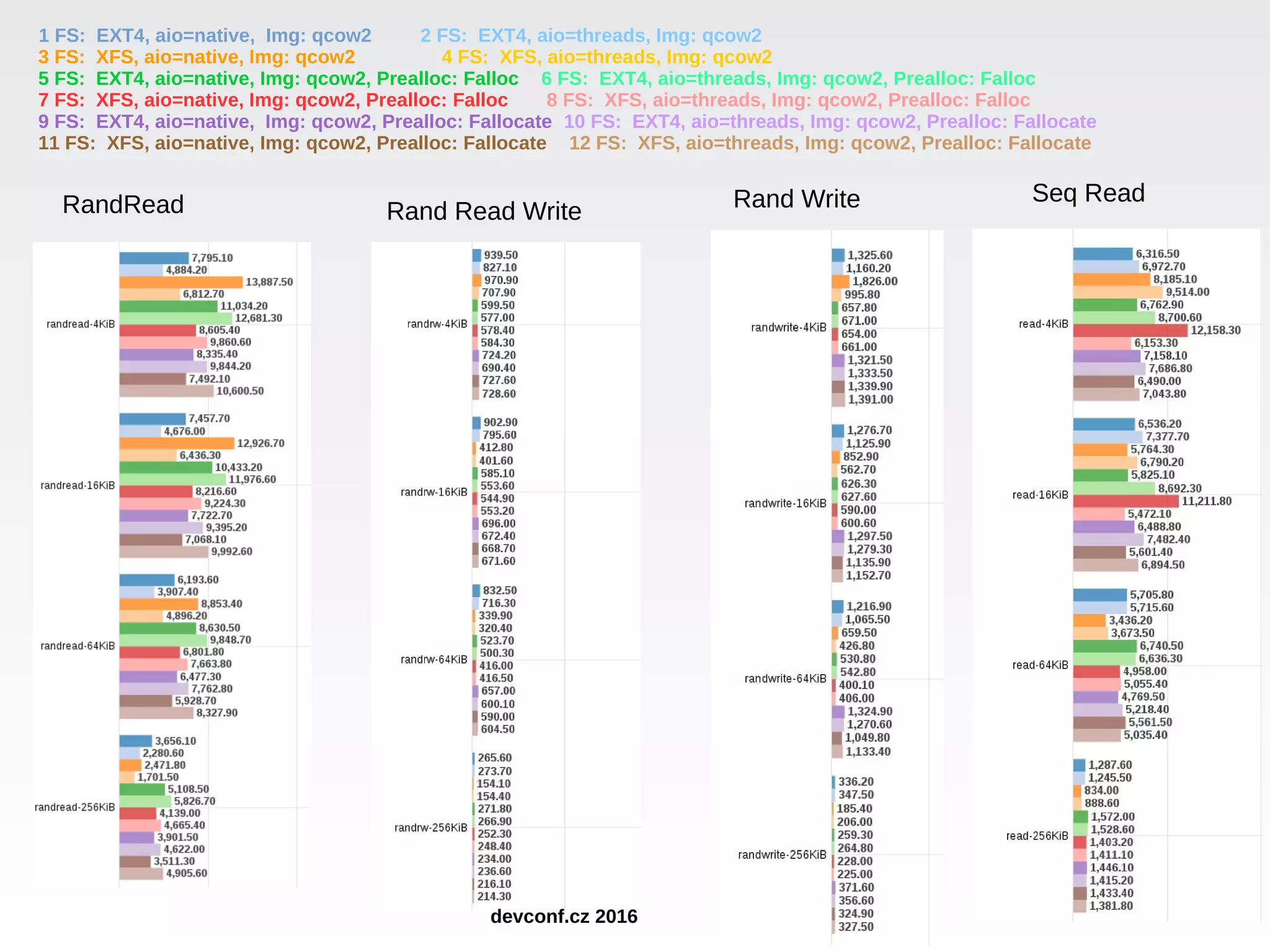

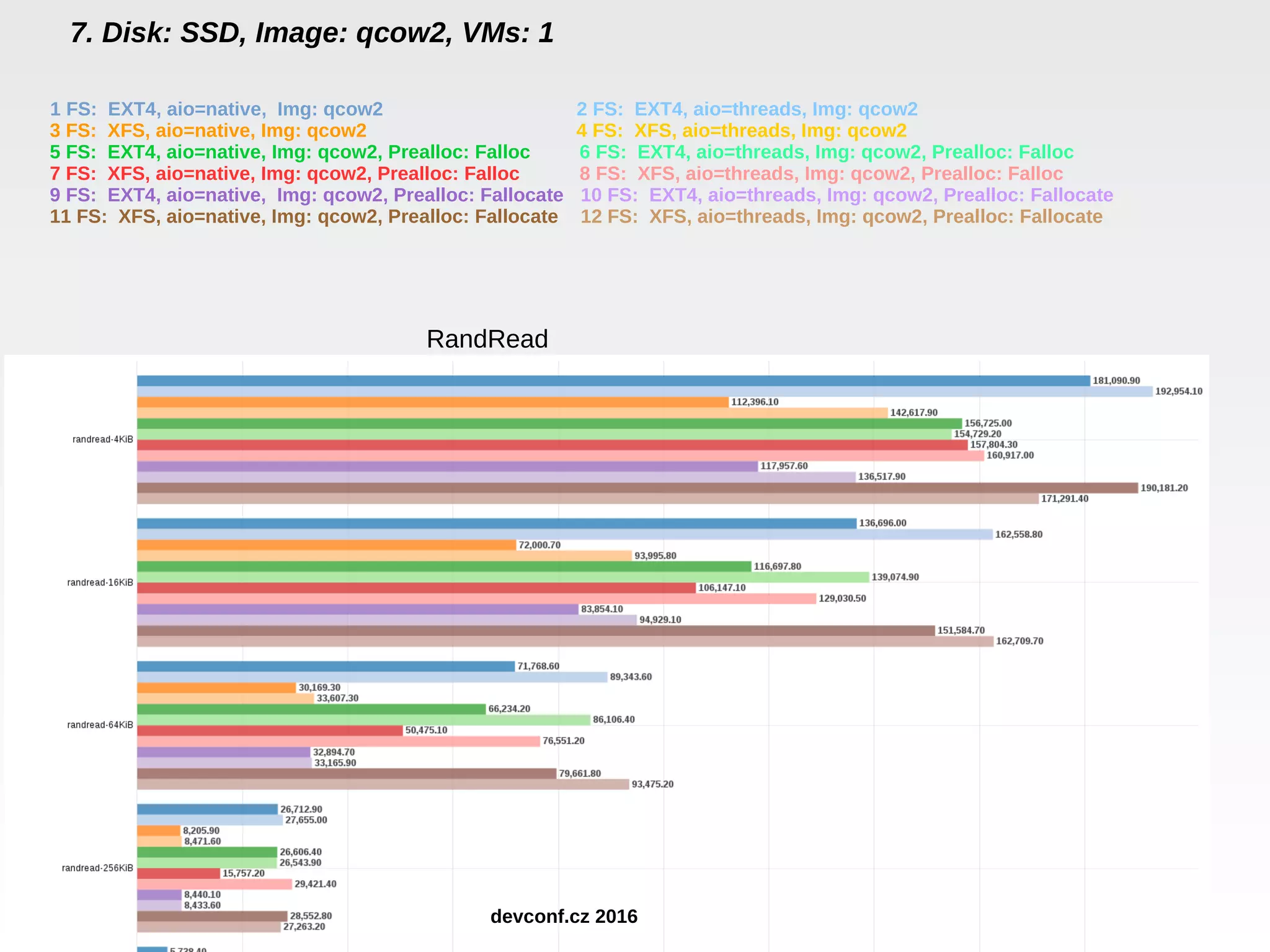

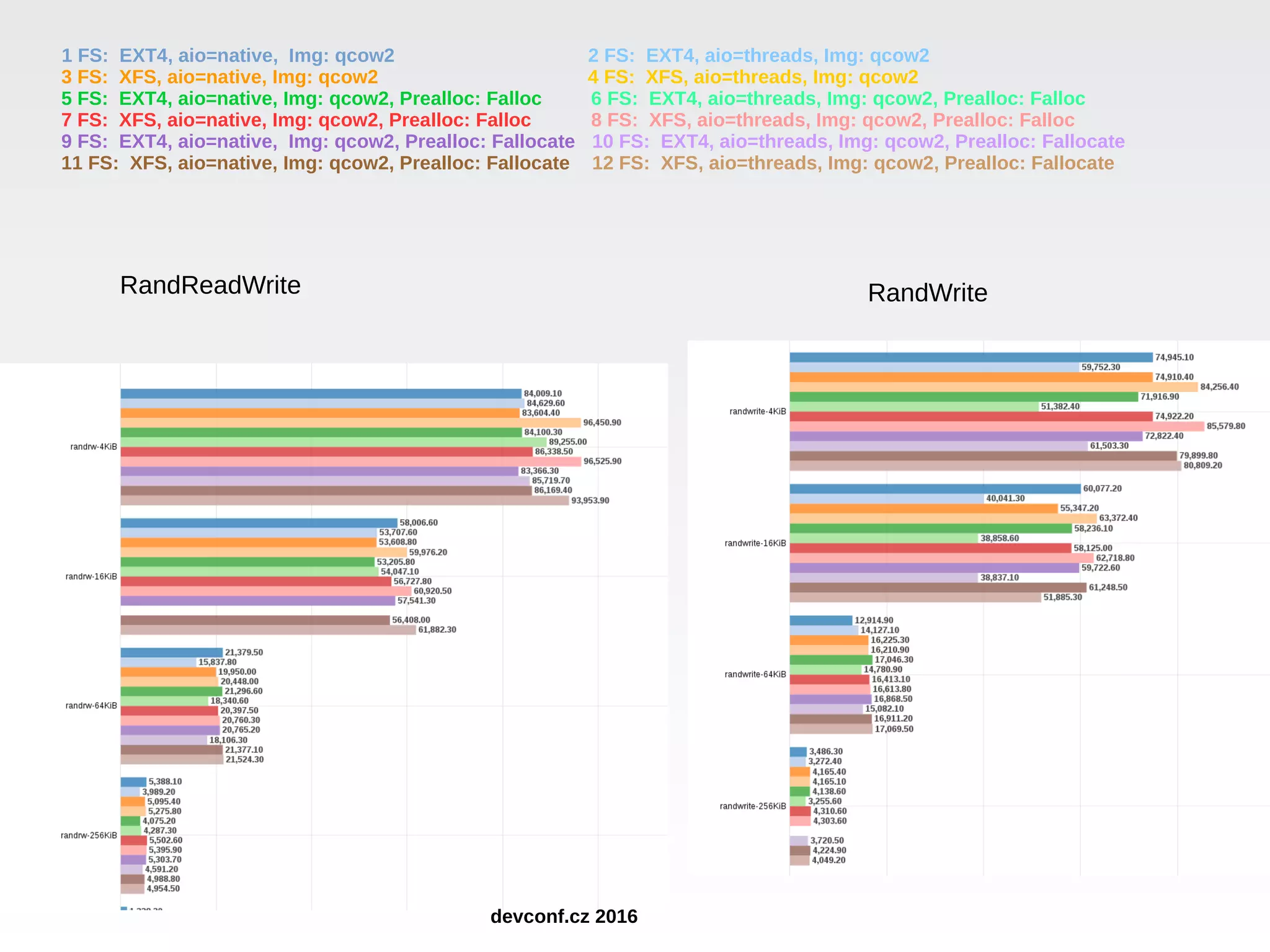

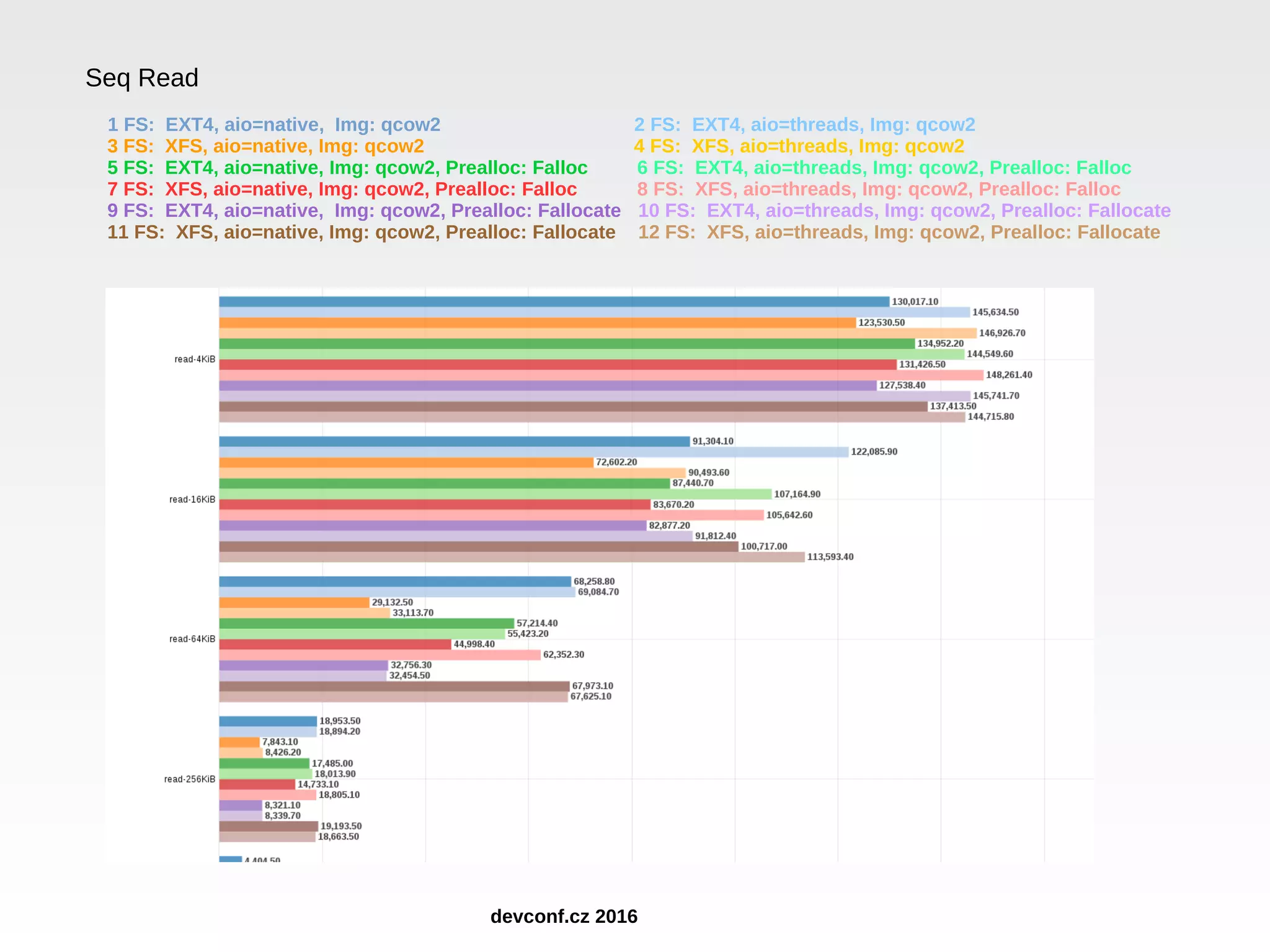

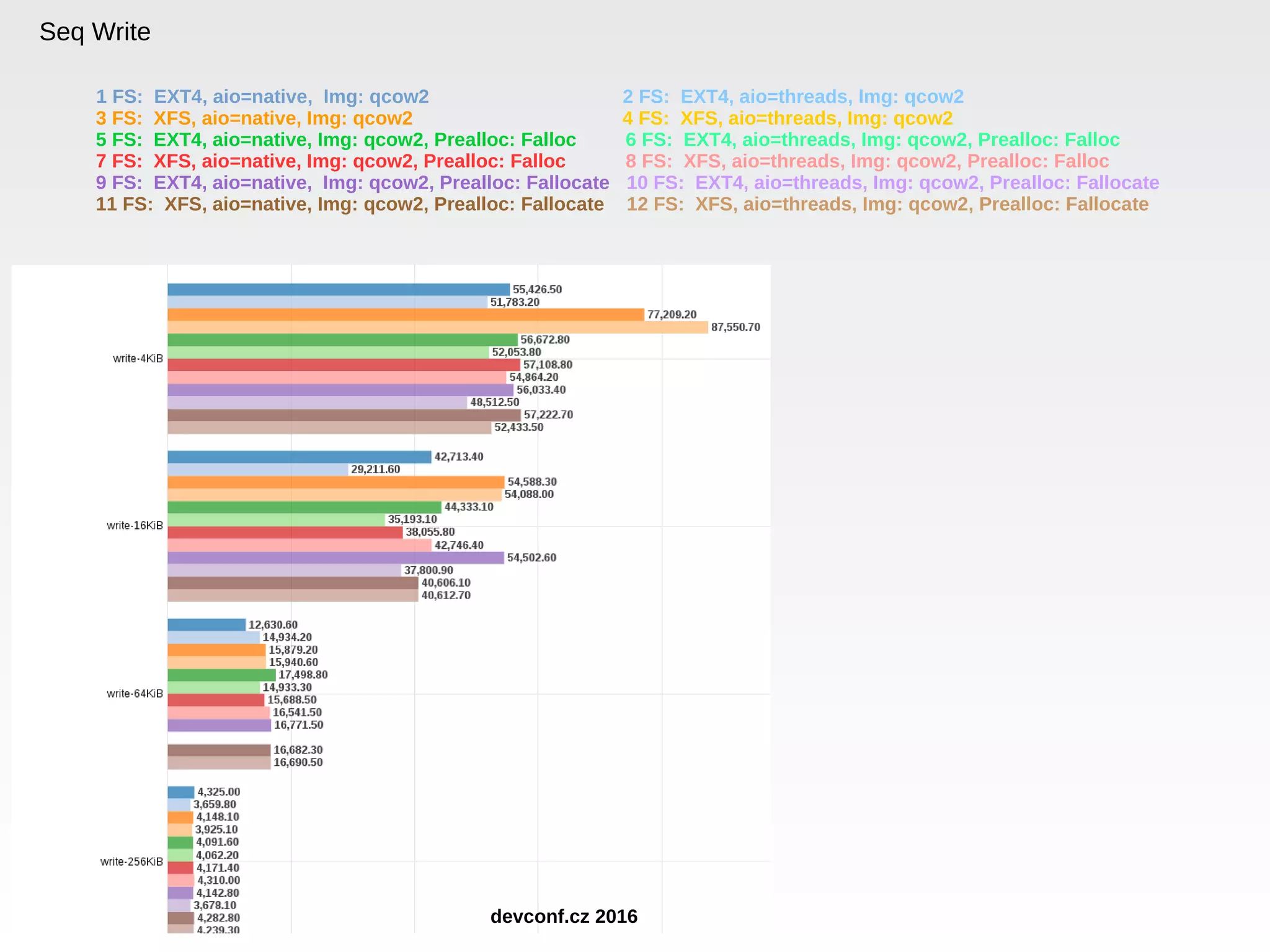

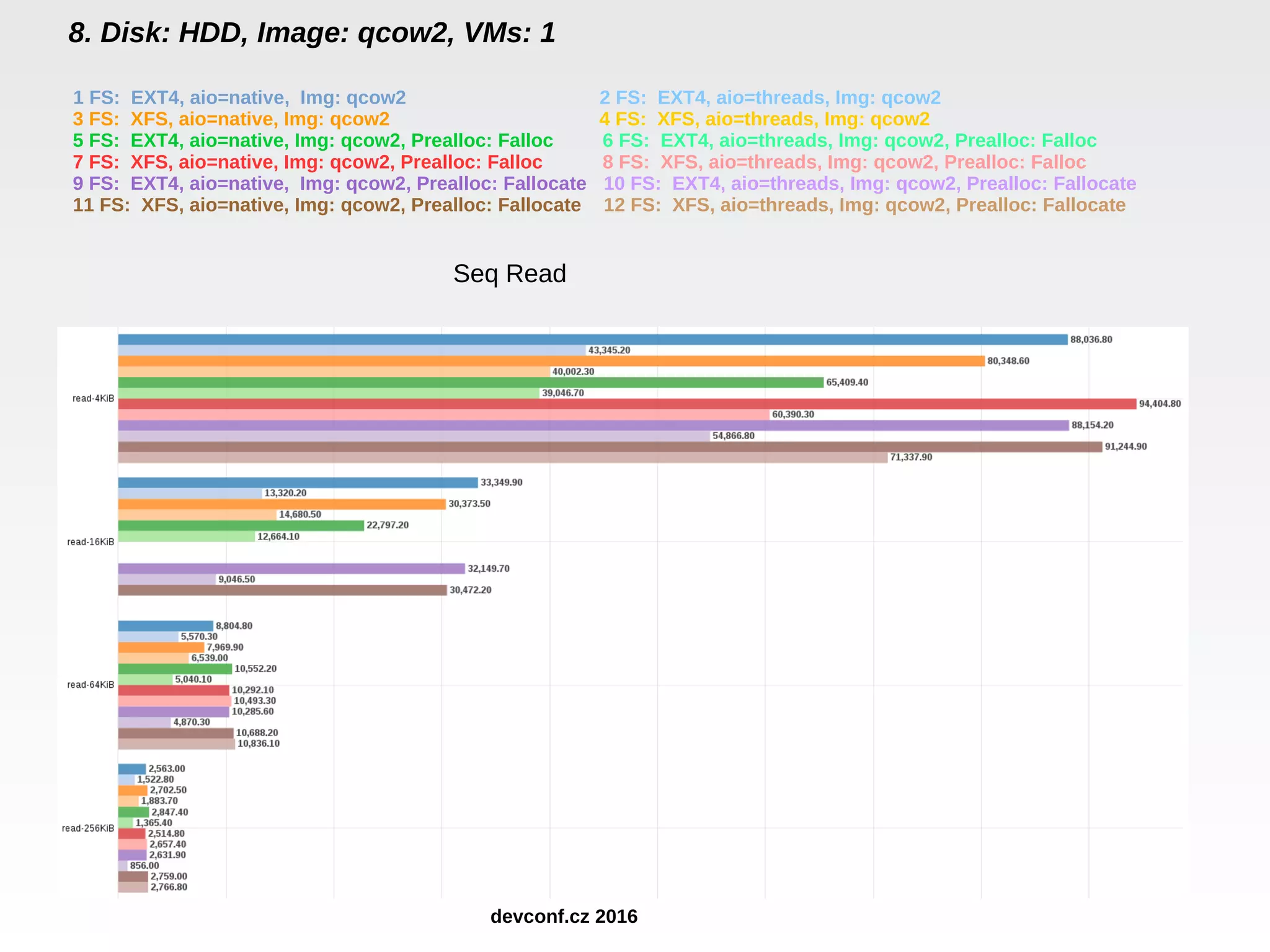

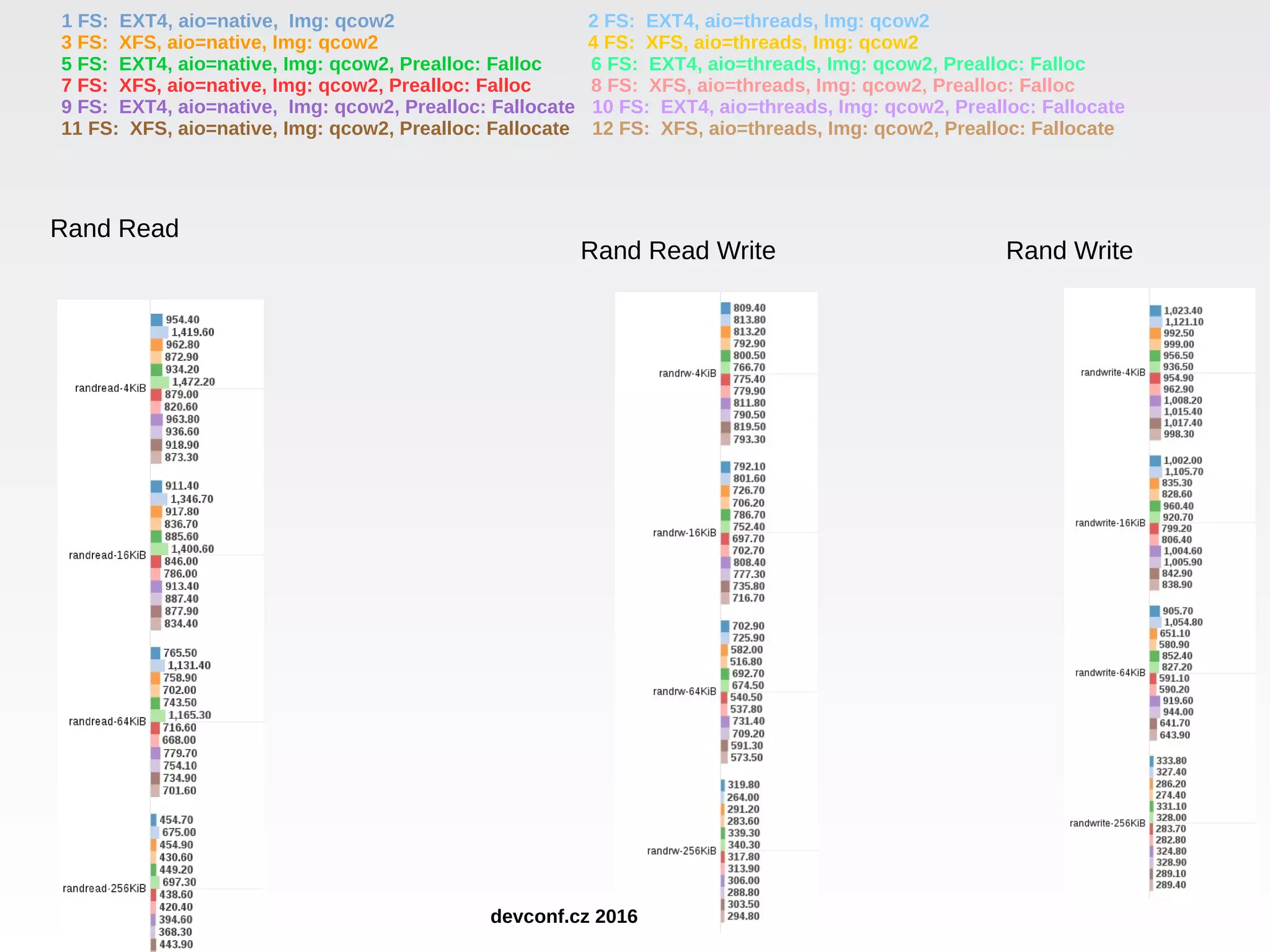

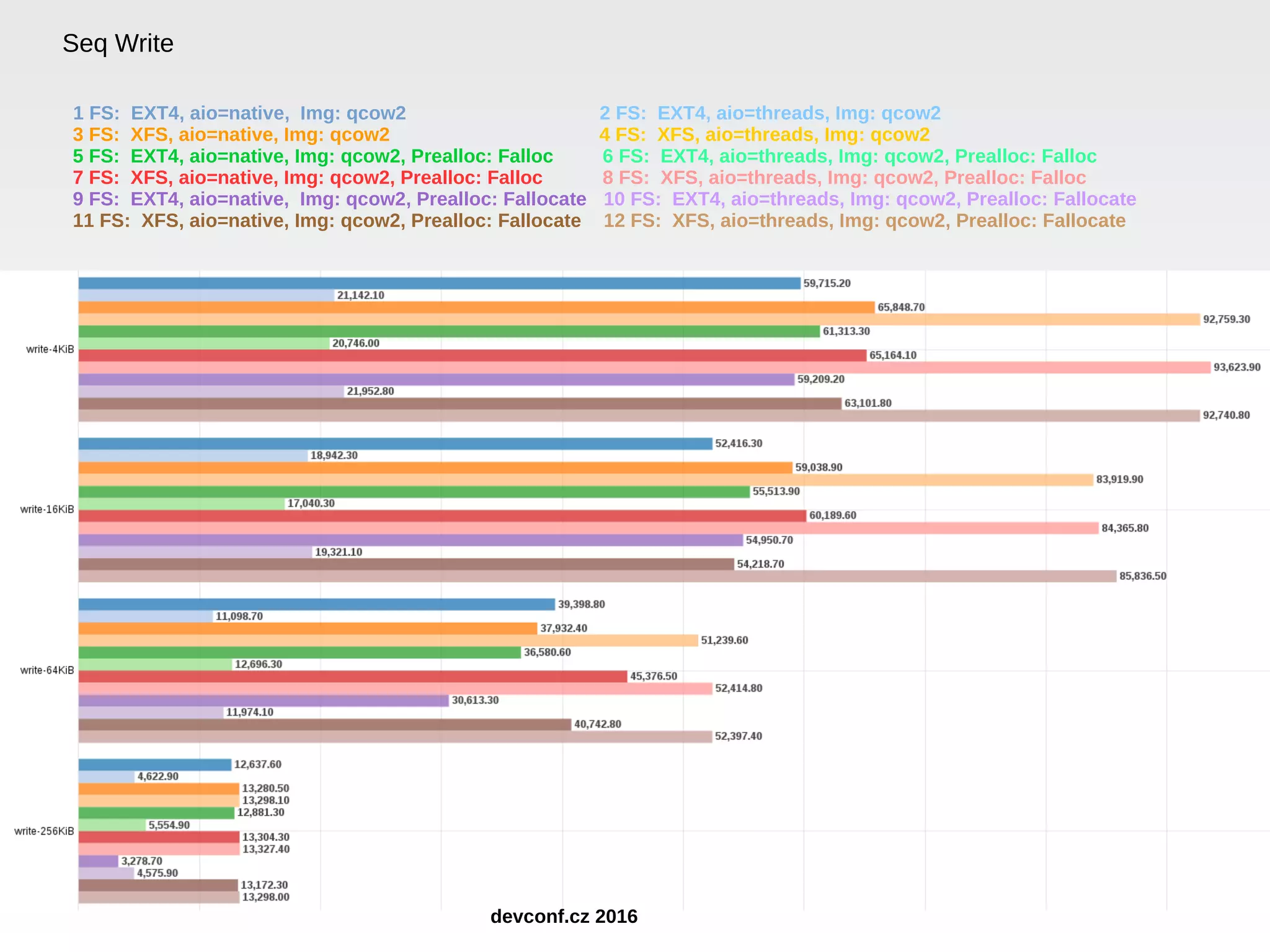

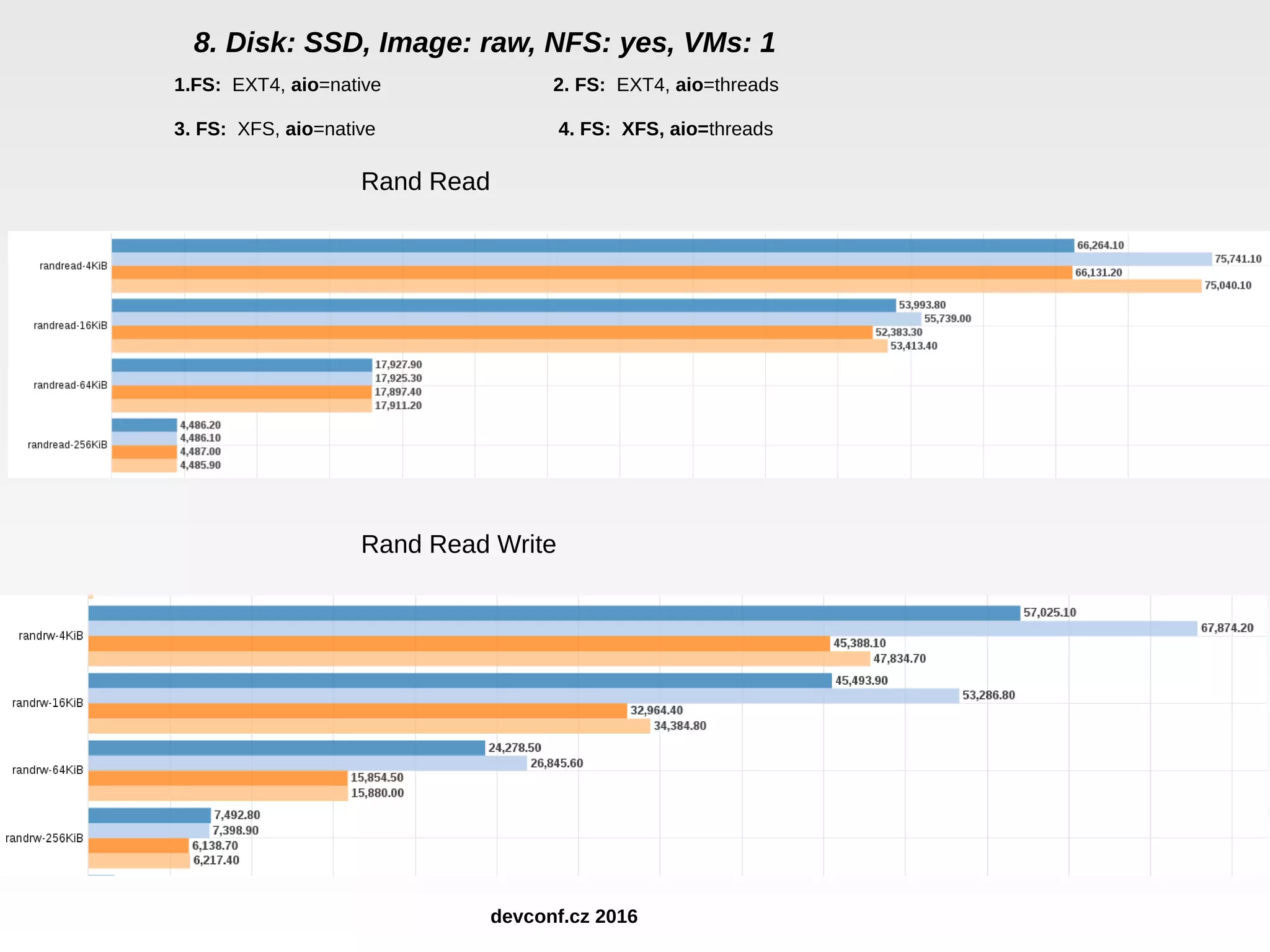

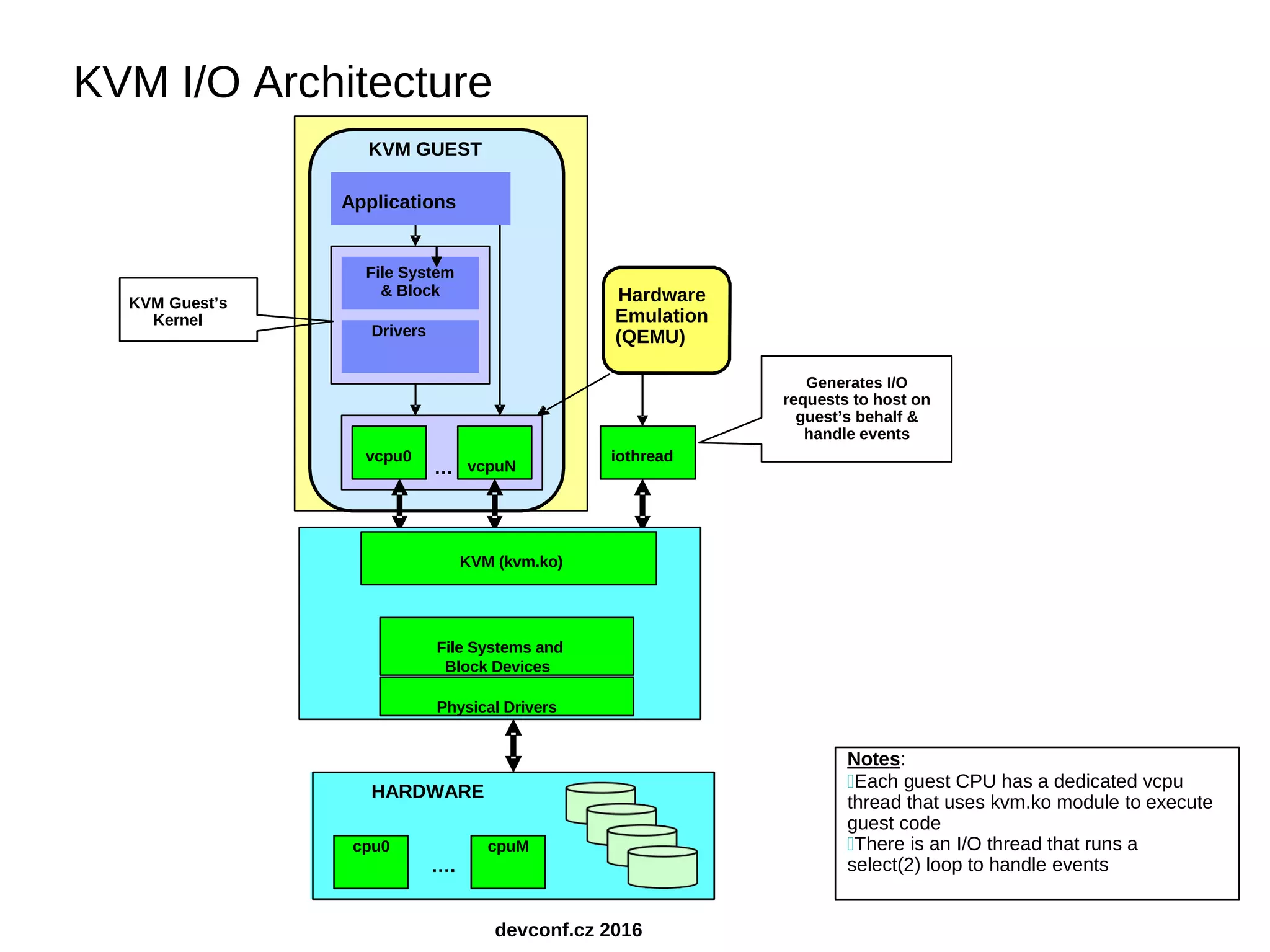

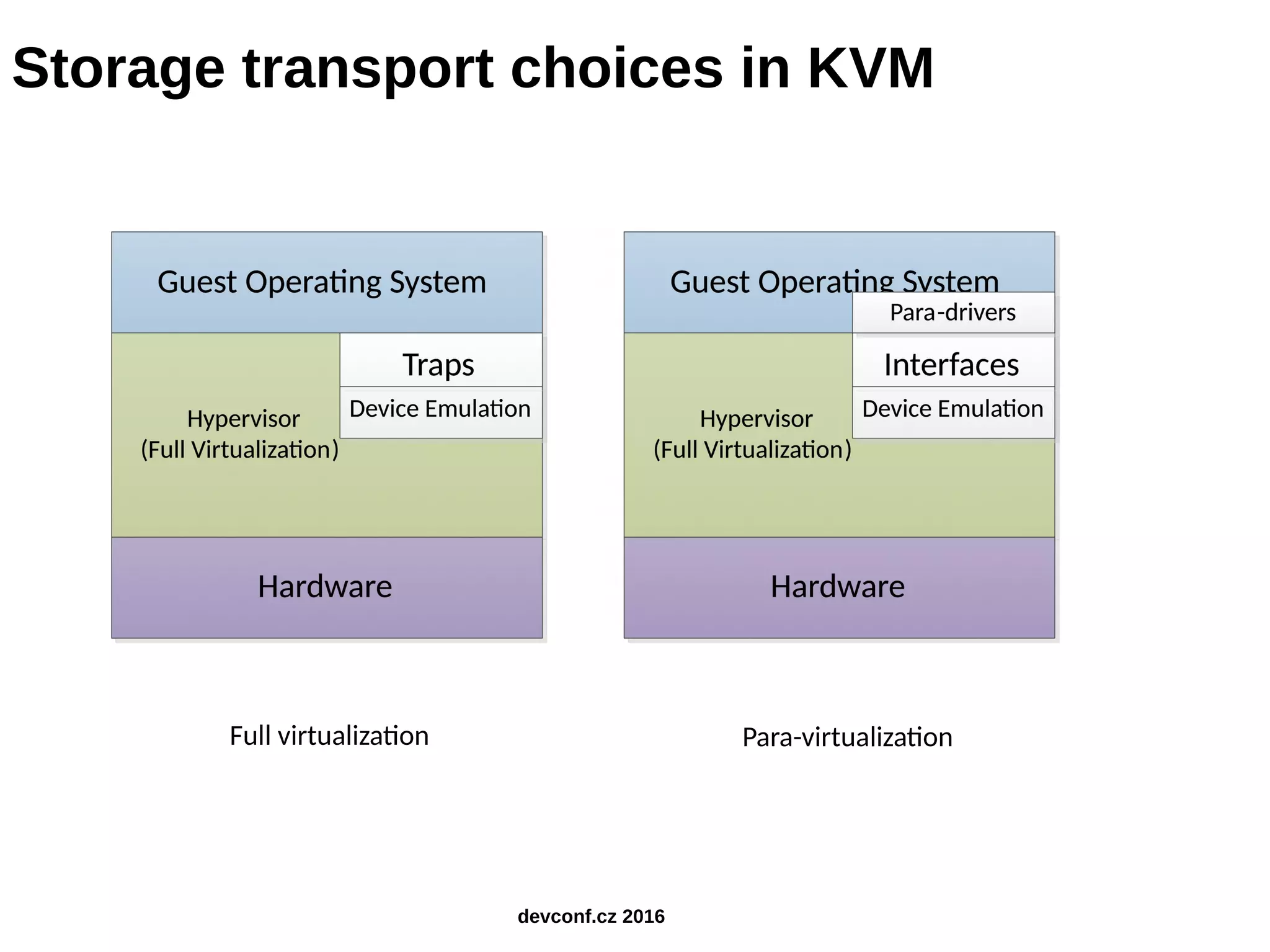

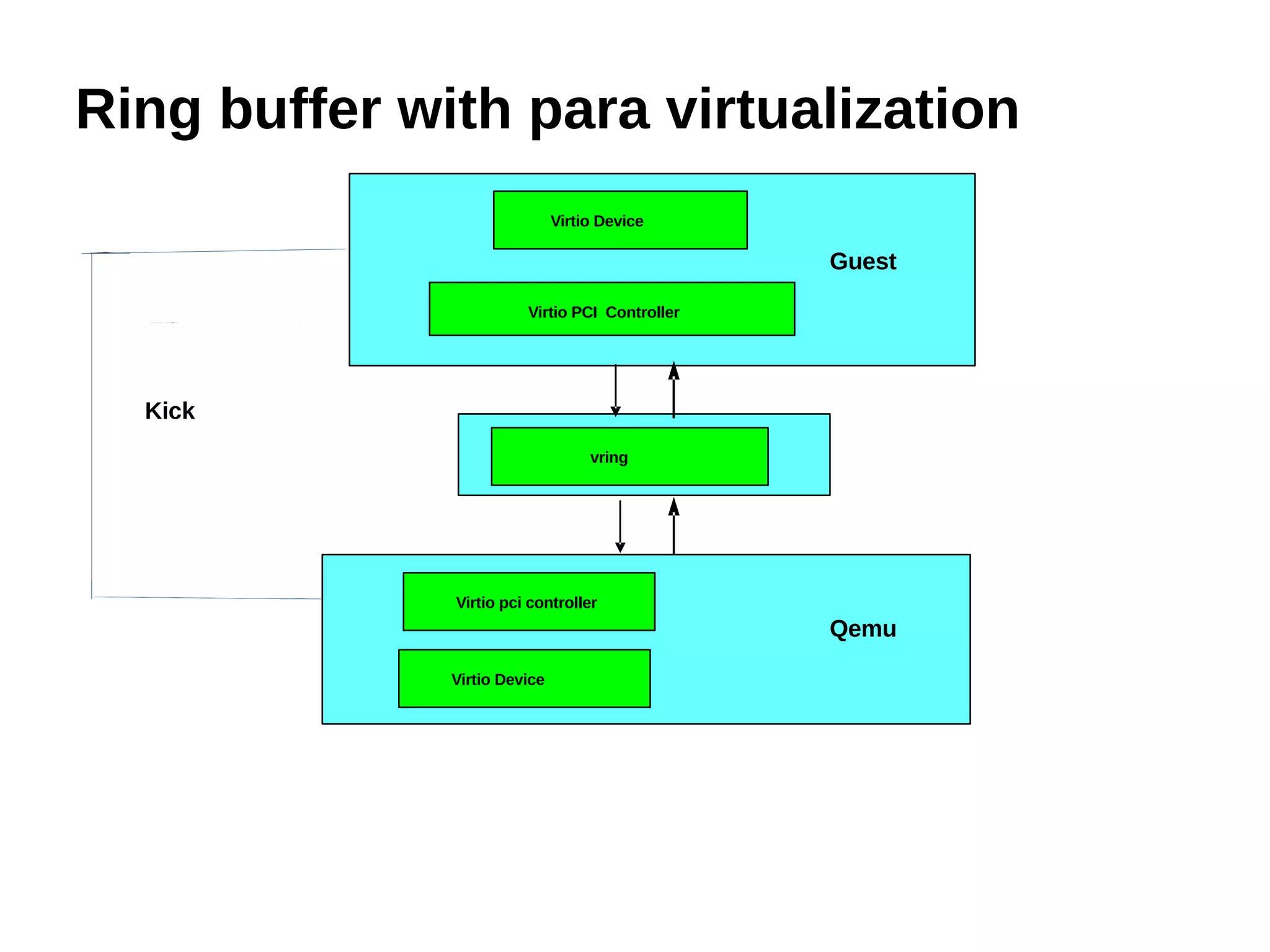

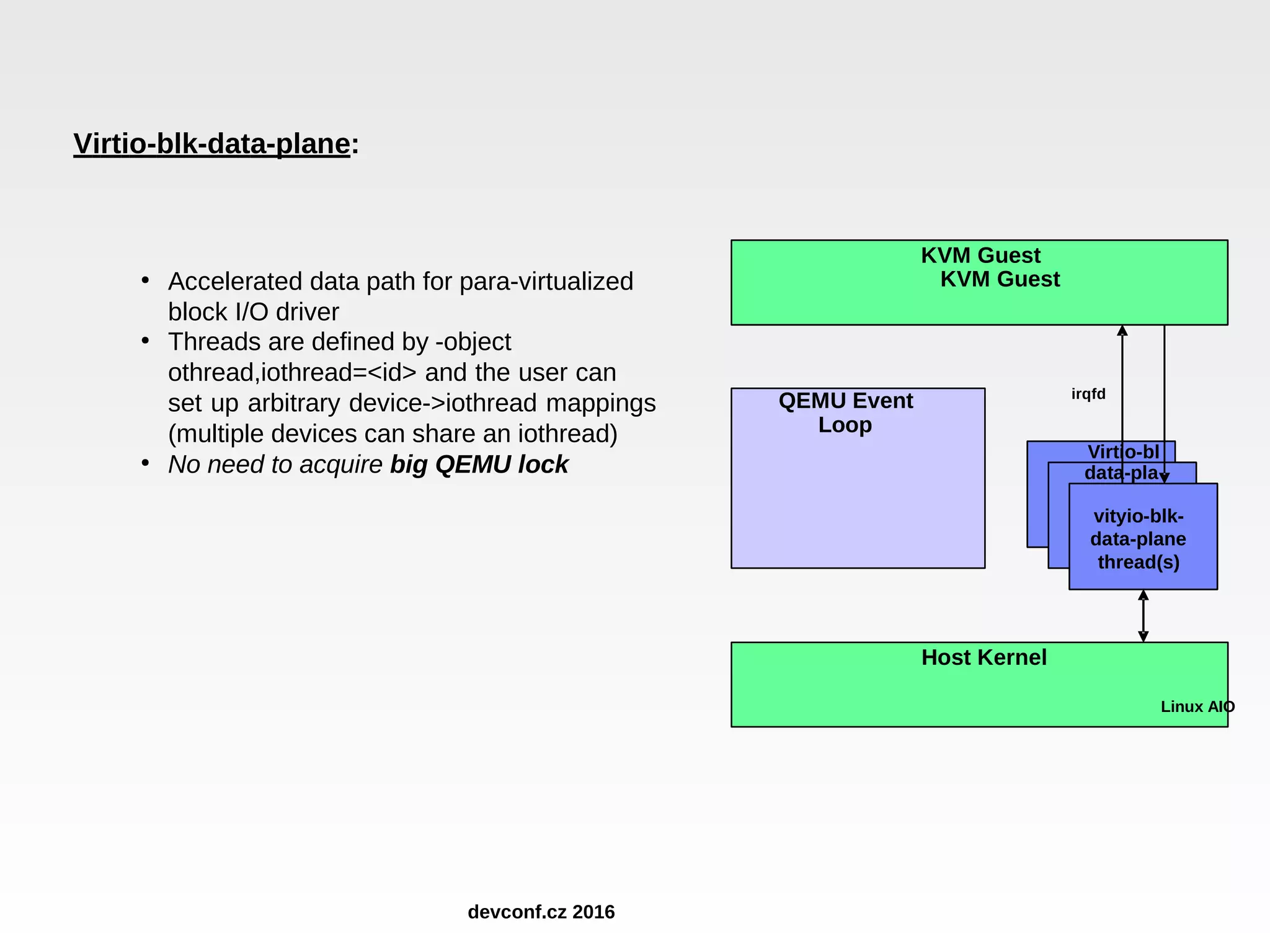

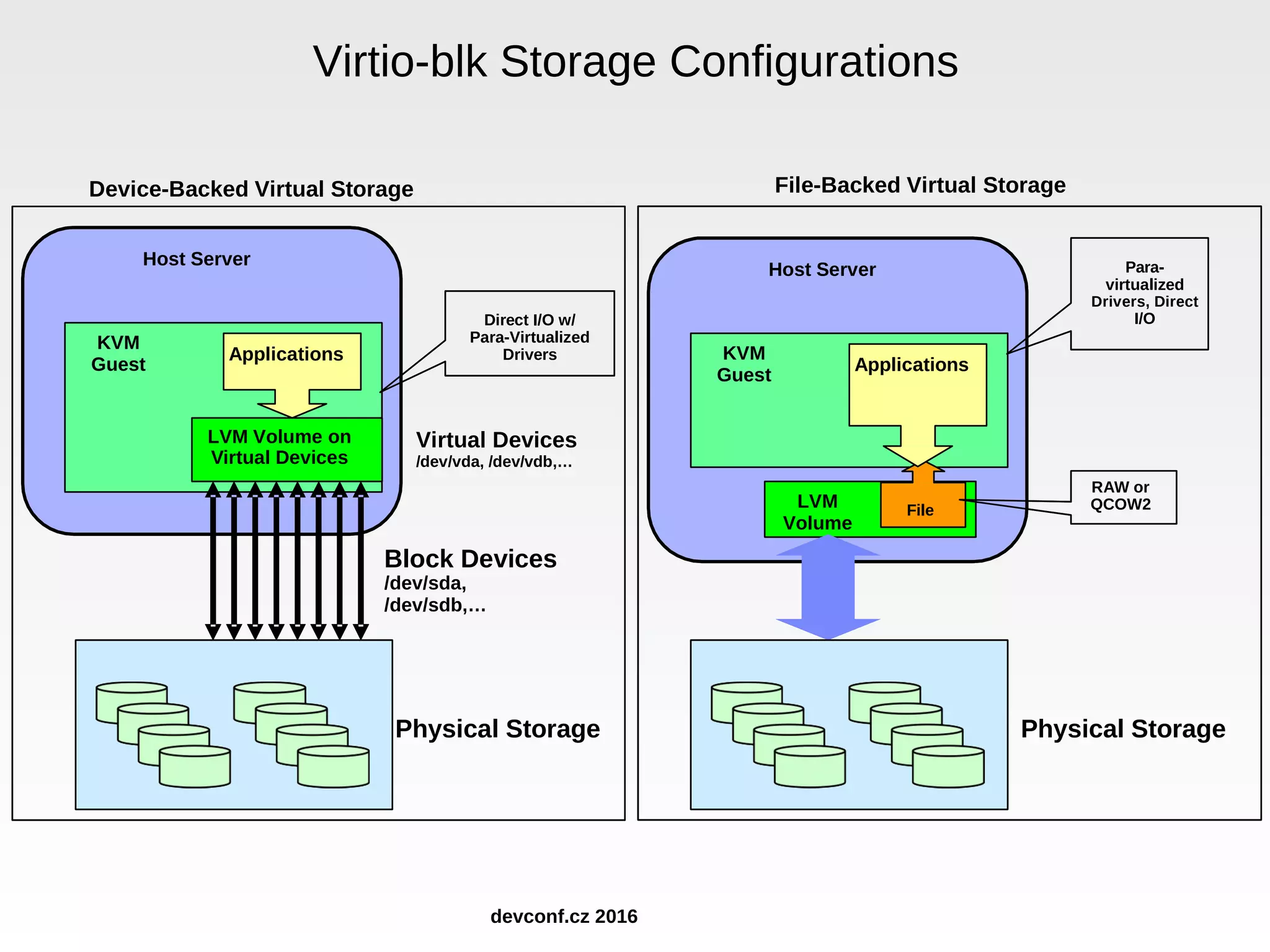

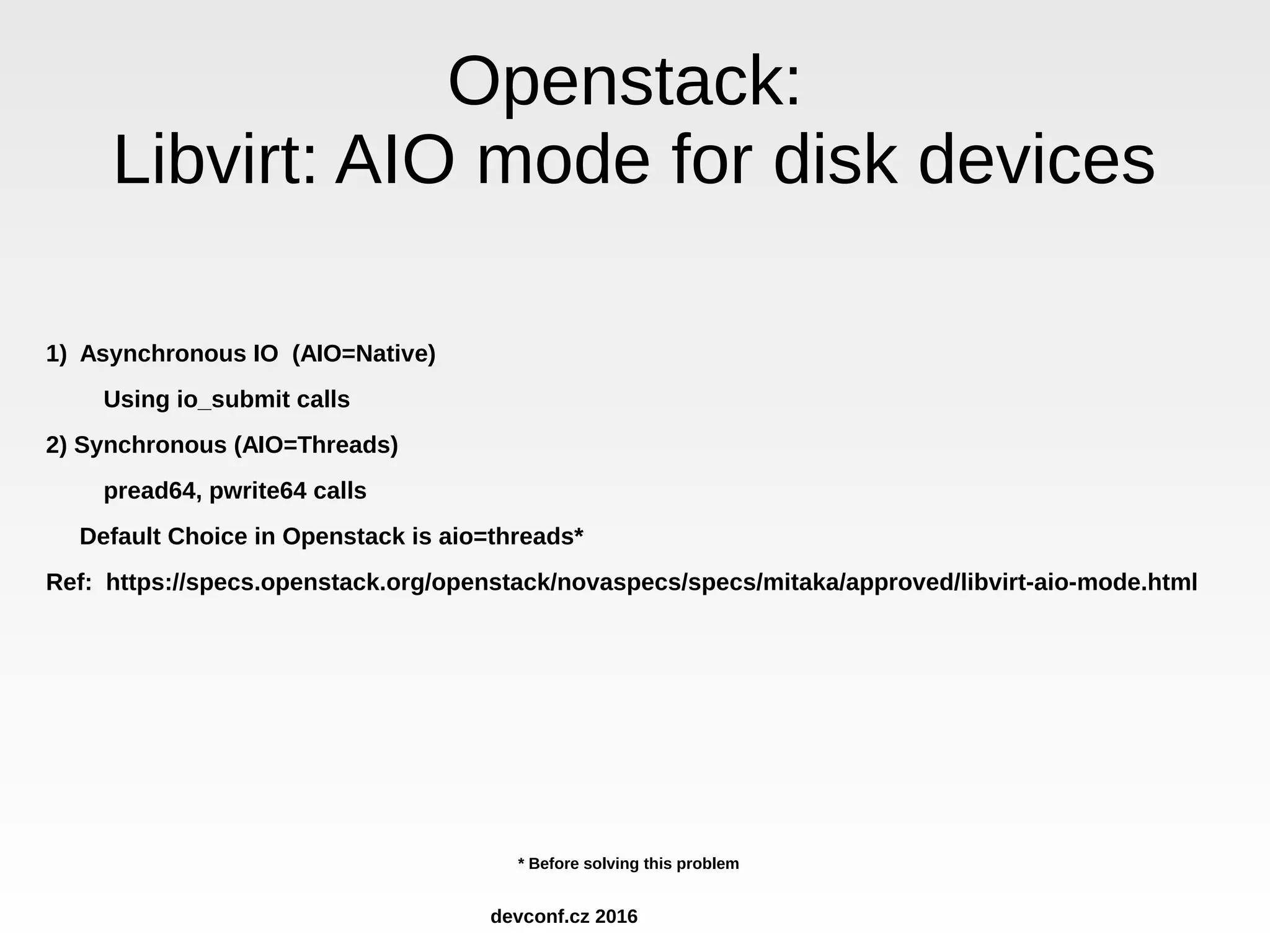

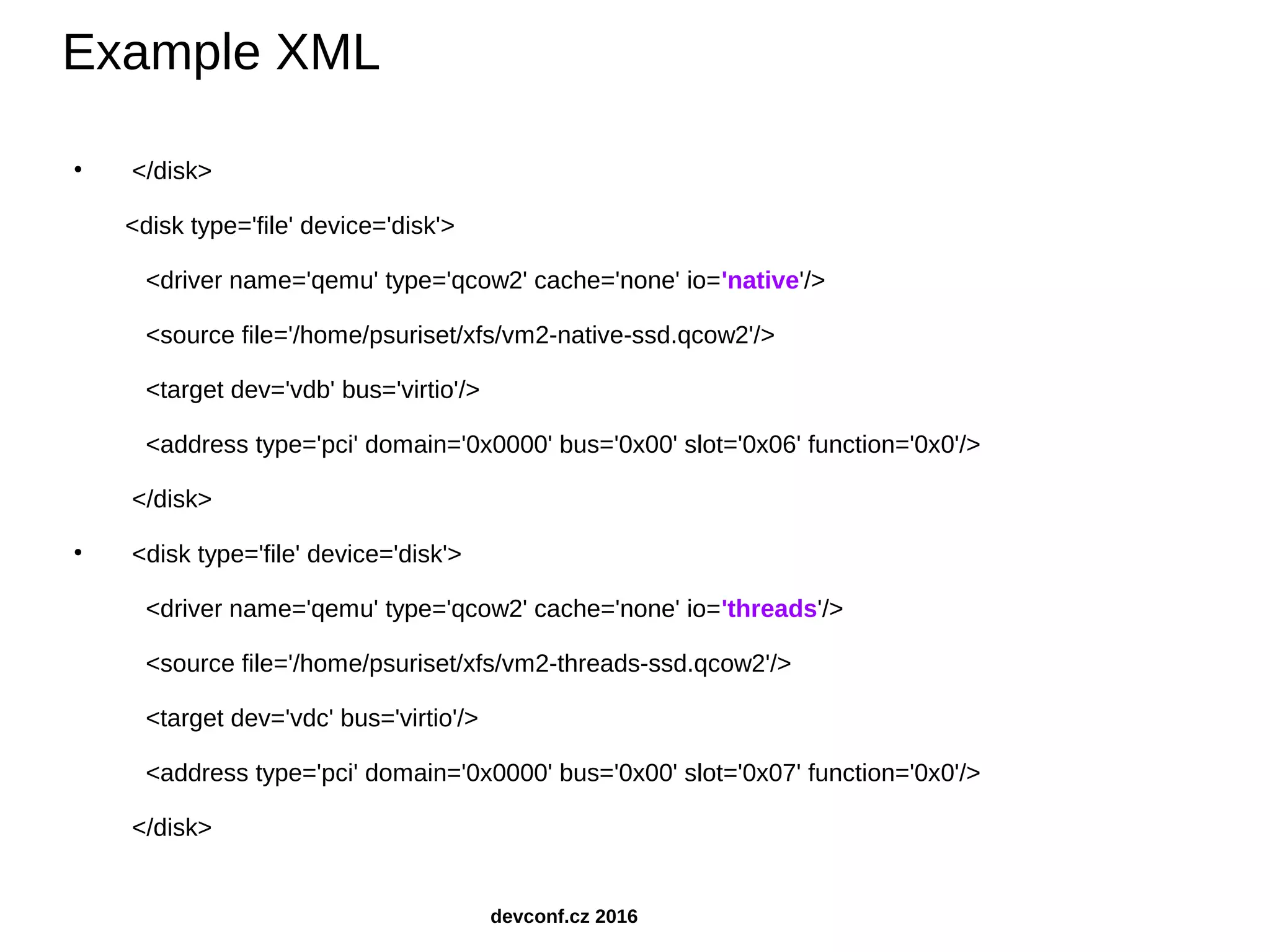

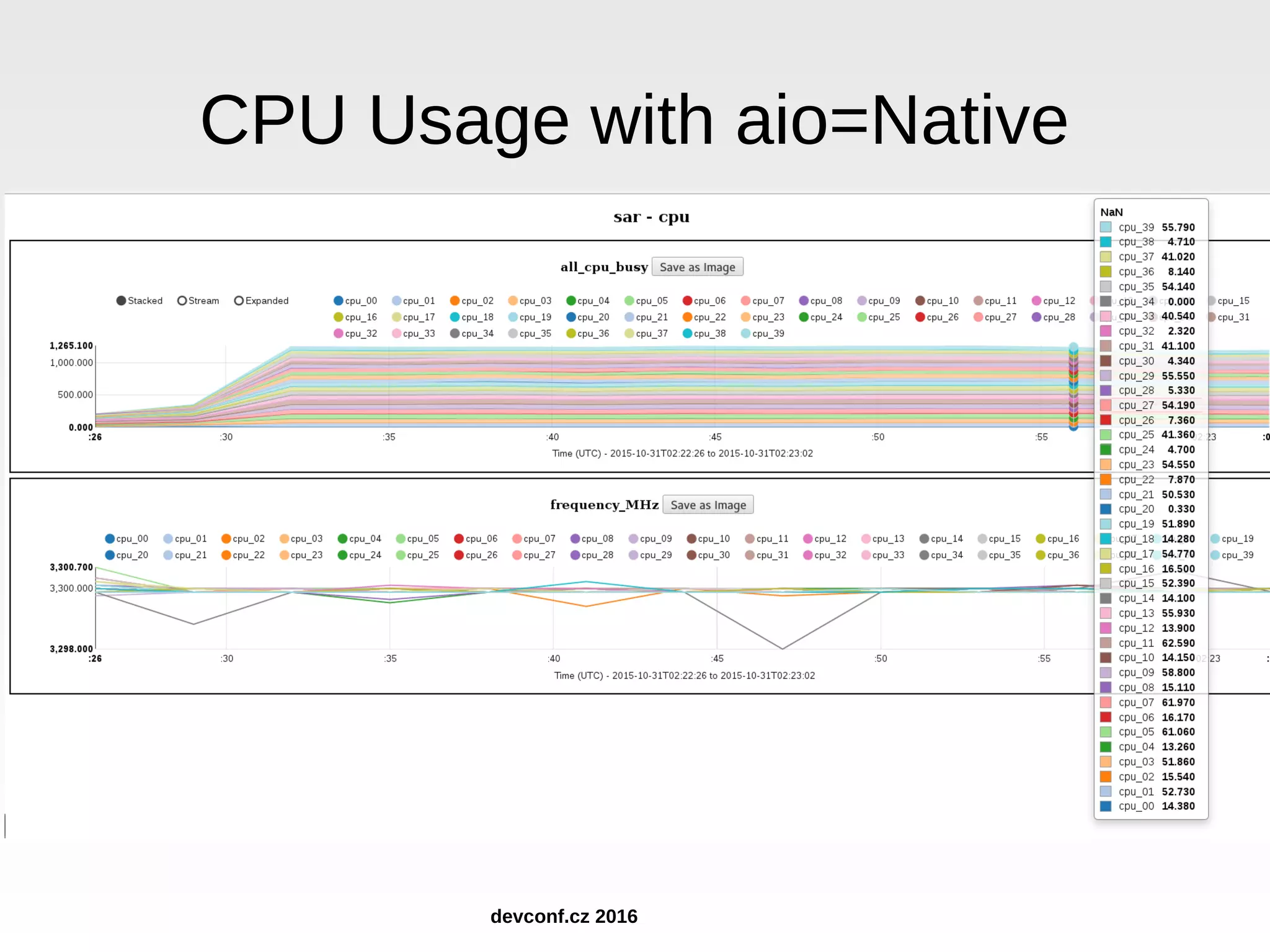

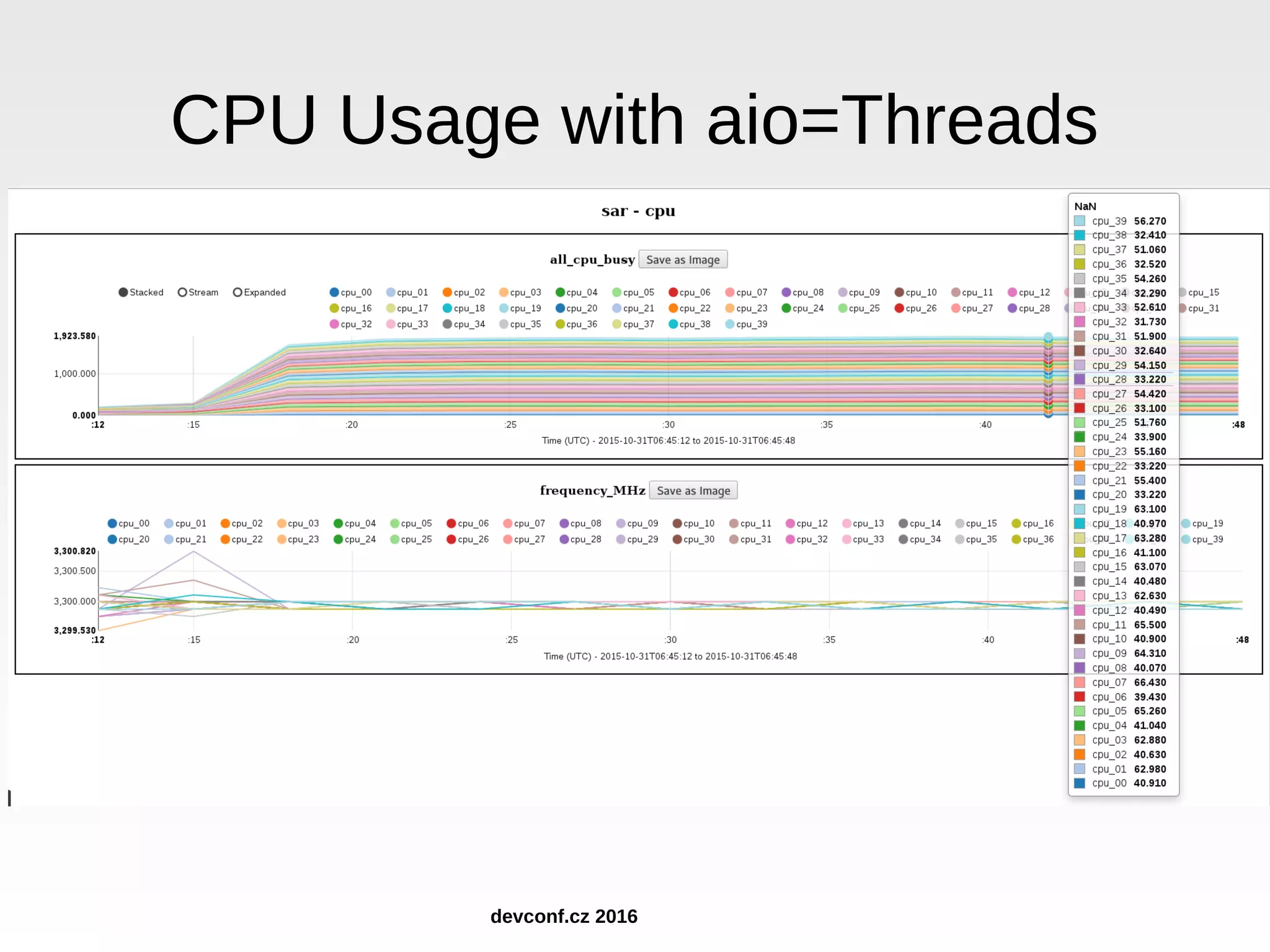

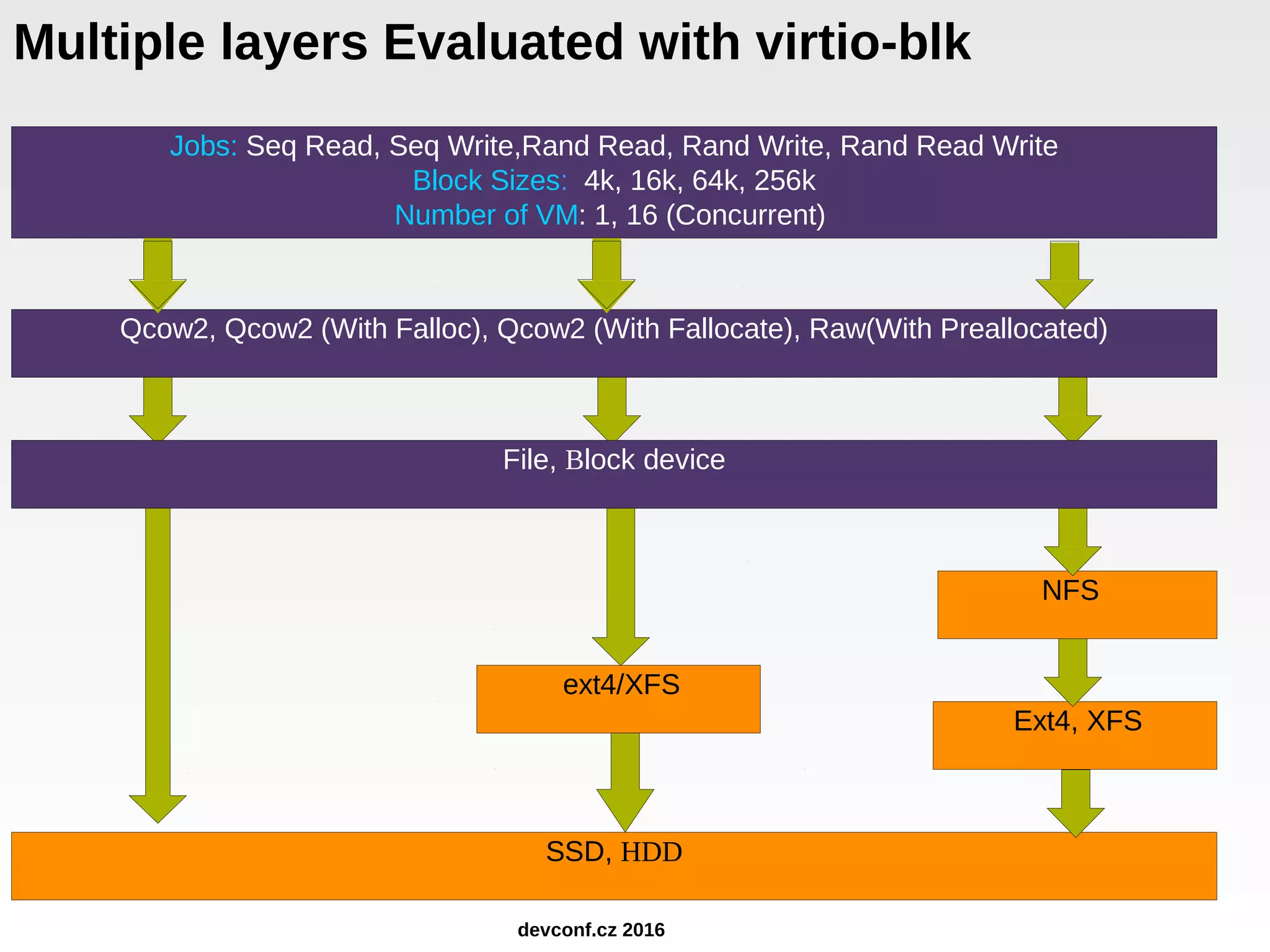

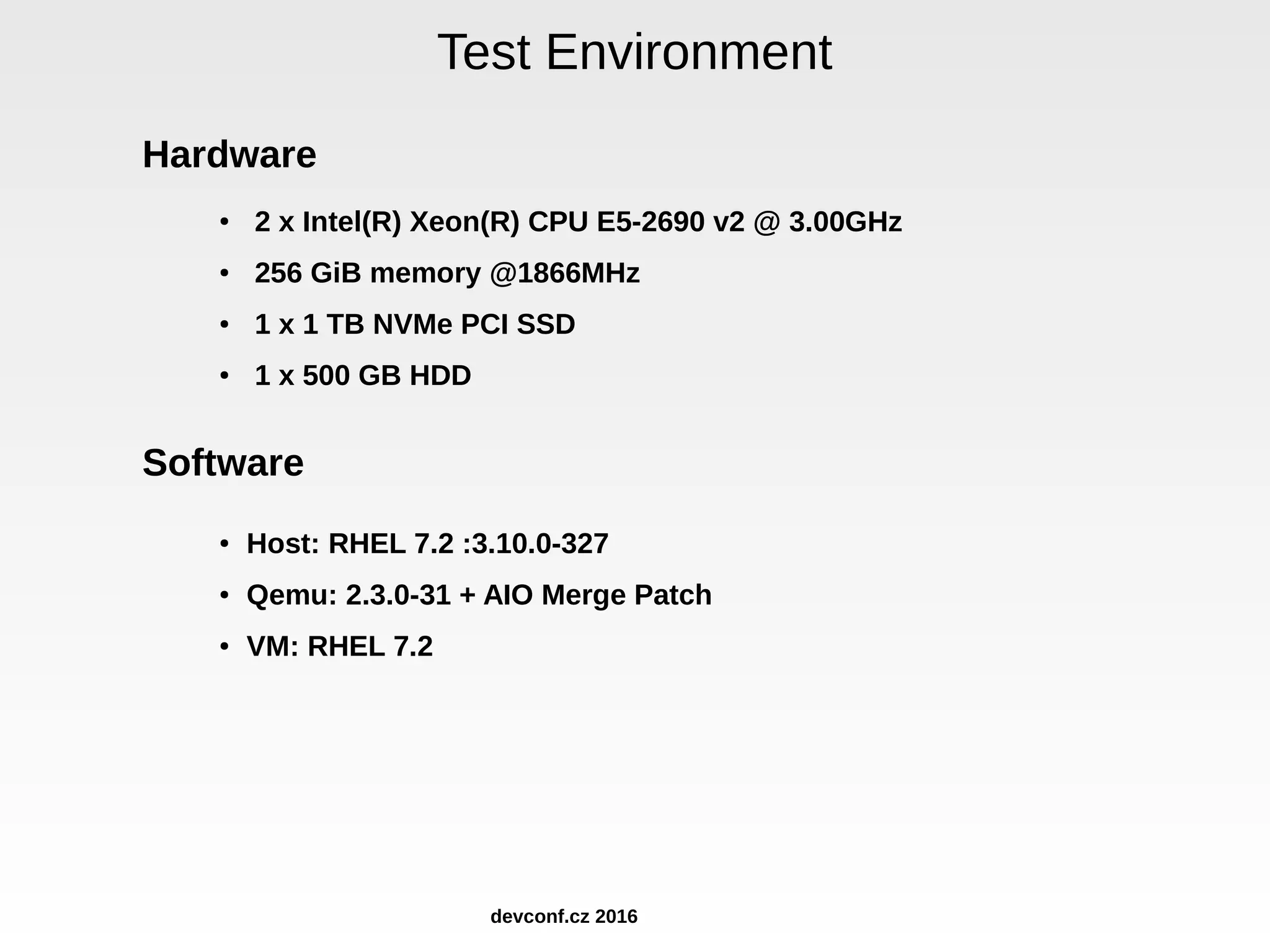

Pradeep Kumar Surisetty of Red Hat compared the performance of QEMU's two disk AIO modes: native (`aio=native`, which uses Linux native AIO) and threads (`aio=threads`, which hands requests to a pool of worker threads). He outlined the KVM I/O architecture, the storage transport options available in KVM (including virtio-blk configurations), and the benchmark tools he used. He ran the performance tests across a range of disk types, file systems, disk images, and configurations. Native generally outperformed threads for random I/O workloads. Threads sometimes performed better for sequential reads, especially when multiple VMs were running.
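In practice, the two modes differ only in the `aio=` value of a QEMU `-drive` option. The sketch below shows how the two variants of a virtio-blk disk might be expressed. It assumes a raw image with the host page cache bypassed (`cache=none`). `drive_option` and `qemu_args` are hypothetical helper names, not part of QEMU. The sketch covers only the two modes compared in this talk. The `cache` check reflects that Linux AIO is only truly asynchronous with O_DIRECT, and that recent QEMU versions reject `aio=native` without it.

```python
"""Hypothetical helpers that build QEMU -drive arguments for the two AIO modes.

Assumption: a raw image attached through virtio-blk with the host page cache
bypassed (cache=none). Only the two modes compared in the talk are handled.
"""

VALID_AIO_MODES = ("native", "threads")


def drive_option(image_path: str, aio: str, cache: str = "none") -> str:
    """Return a -drive value string for a virtio-blk disk using the given AIO mode."""
    if aio not in VALID_AIO_MODES:
        raise ValueError(f"unsupported aio mode: {aio!r}")
    # aio=native relies on O_DIRECT; the cache modes none and directsync both set it.
    if aio == "native" and cache not in ("none", "directsync"):
        raise ValueError("aio=native needs a cache mode that uses O_DIRECT")
    return f"file={image_path},if=virtio,format=raw,cache={cache},aio={aio}"


def qemu_args(image_path: str, aio: str) -> list[str]:
    """Return the argv fragment that attaches the disk to the guest."""
    return ["-drive", drive_option(image_path, aio)]


if __name__ == "__main__":
    # Print one variant per mode so the two command lines can be compared side by side.
    for mode in VALID_AIO_MODES:
        print(" ".join(qemu_args("/var/lib/images/guest.img", mode)))
```

Keeping everything except `aio=` fixed mirrors the comparison itself: any performance difference between the two command lines comes from the I/O submission model alone.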

![Pbench continued: fio benchmark example (devconf.cz 2016)](https://image.slidesharecdn.com/pradeepkumarsurisettyqemu-diskionativeorthreads-160604154008/75/QEMU-Disk-IO-Which-performs-Better-Native-or-threads-21-2048.jpg)

Pbench continued: pbench benchmarks example, fio benchmark (devconf.cz 2016)

- `# pbench_fio --config=baremetal-hdd`
  - runs a default set of iterations: [read, rand-read] × [4KB, 8KB … 64KB]
  - takes 5 samples per iteration and computes avg and stddev
  - handles start/stop/post-processing of tools for each iteration
- Other fio options:
  - `--targets=<devices or files>`
  - `--ioengine=[sync, libaio, others]`
  - `--test-types=[read,randread,write,randwrite,randrw]`
  - `--block-sizes=[<int>,[<int>]]` (in KB)
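The slide describes pbench_fio's default run as a matrix of iterations, test types × block sizes, with five samples per iteration summarized by average and standard deviation. The sketch below illustrates that bookkeeping. It is not pbench's actual code. `iterations`, `summarize`, `run_all`, and the `run_once` callback are hypothetical names. The use of the sample (rather than population) standard deviation is an assumption. Block sizes are passed in as a parameter because the slide elides the middle of the default list.

```python
"""Sketch of the iteration matrix and per-iteration statistics pbench_fio reports.

Assumptions (not pbench's actual code): each iteration is one
(test type, block size) pair, each iteration runs SAMPLES times, and the
spread is the sample standard deviation.
"""

import itertools
import statistics
from typing import Callable

SAMPLES = 5  # the slide states 5 samples per iteration


def iterations(test_types: list[str], block_sizes_kb: list[int]) -> list[tuple[str, int]]:
    """Cartesian product of test types and block sizes, one entry per iteration."""
    return list(itertools.product(test_types, block_sizes_kb))


def summarize(samples: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) for one iteration's samples."""
    return statistics.mean(samples), statistics.stdev(samples)


def run_all(
    test_types: list[str],
    block_sizes_kb: list[int],
    run_once: Callable[[str, int], float],
) -> dict[tuple[str, int], tuple[float, float]]:
    """Run each iteration SAMPLES times via run_once and summarize the results.

    run_once is a hypothetical callback that runs one fio sample and returns a
    throughput figure; pbench itself also starts/stops tools around each run.
    """
    results = {}
    for test_type, bs in iterations(test_types, block_sizes_kb):
        samples = [run_once(test_type, bs) for _ in range(SAMPLES)]
        results[(test_type, bs)] = summarize(samples)
    return results
```

For example, `iterations(["read", "randread"], [4, 64])` yields four iterations: every block size paired with every test type. Reporting a standard deviation next to each average matters for a native-vs-threads comparison, because a small difference between modes is only meaningful if it exceeds the run-to-run spread.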