One may be tempted to use the entire training data to select the “optimal” classifier and then estimate the error rate. This naïve approach has two fundamental problems:

- The final model will normally overfit the training data: it will not be able to generalize to new data. The problem of overfitting is more pronounced with models that have a large number of parameters.
- The error rate estimate will be overly optimistic (lower than the true error rate). In fact, it is not uncommon to have 100% correct classification on training data.

The techniques presented in this lecture will allow you to make the best use of your (limited) data for training, model selection, and performance estimation.
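The optimism of the training-set error can be seen in a small experiment. The sketch below is only an illustration under stated assumptions: the data are synthetic, the labels are pure noise (so no classifier can truly beat 50% error), and a 1-nearest-neighbor rule stands in for a model that memorizes its training set. The names `nearest_neighbor_predict`, `error_rate`, and `make_examples` are hypothetical helpers, not part of the lecture.

```python
import random

def nearest_neighbor_predict(train, x):
    """Return the label of the training point closest to x (1-NN)."""
    _, label = min(train, key=lambda pair: abs(pair[0] - x))
    return label

def error_rate(train, data):
    """Fraction of examples in data misclassified by 1-NN fitted on train."""
    wrong = sum(nearest_neighbor_predict(train, x) != y for x, y in data)
    return wrong / len(data)

def make_examples(rng, n):
    """Assumed toy data: one random feature, labels unrelated to it."""
    return [(rng.random(), rng.randint(0, 1)) for _ in range(n)]

rng = random.Random(0)
train_set = make_examples(rng, 200)
new_data = make_examples(rng, 200)

# Each training point is its own nearest neighbor, so this estimate is perfect
train_error = error_rate(train_set, train_set)
# On fresh examples the same classifier does no better than guessing
new_error = error_rate(train_set, new_data)

print(f"error on training data: {train_error:.2f}")
print(f"error on new data:      {new_error:.2f}")
```

The training error is zero by construction, while the error on new data stays near chance, which is the gap the resampling methods in this lecture are meant to expose.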

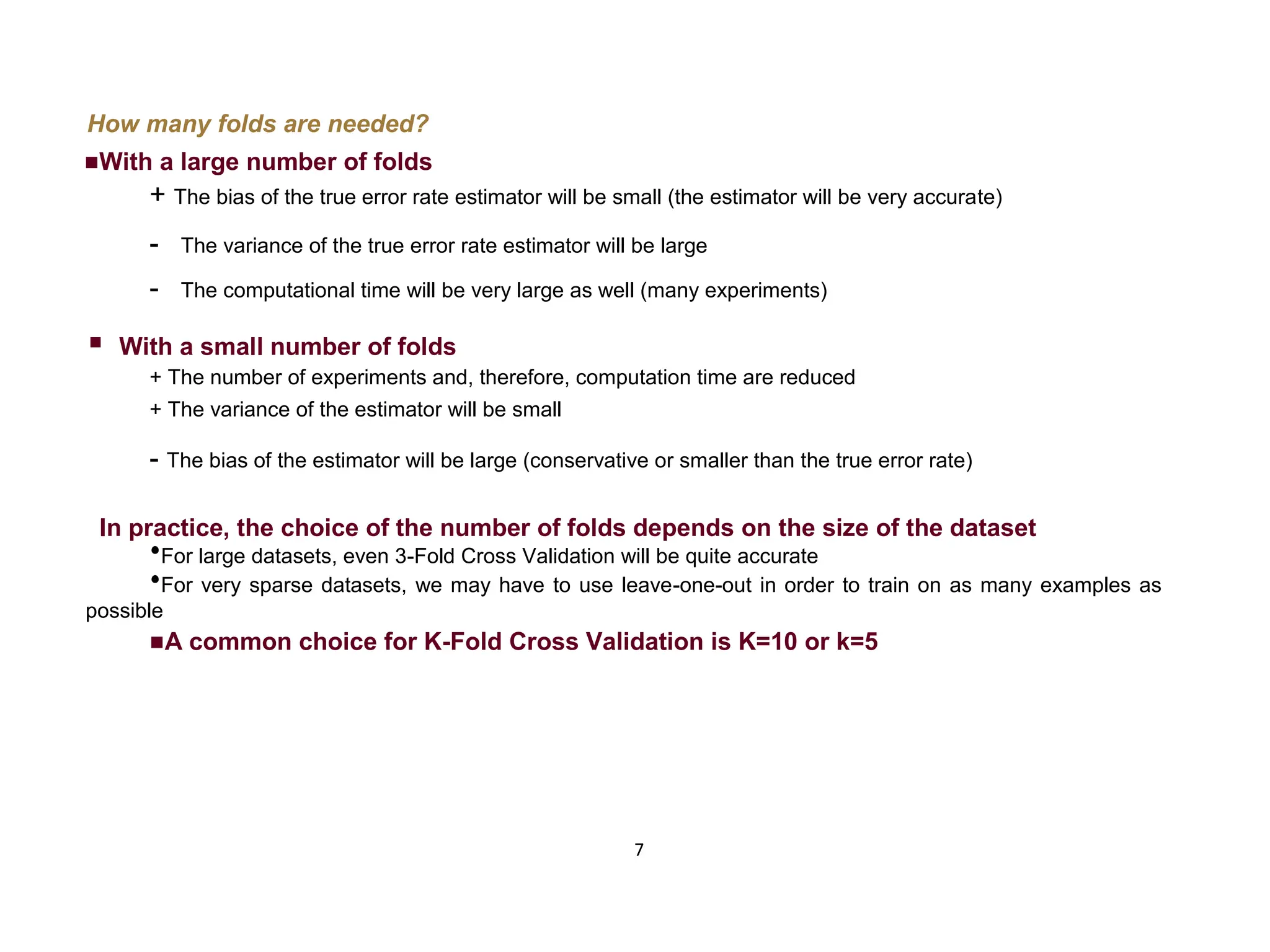

![The bootstrap (2)](https://image.slidesharecdn.com/lec6crossvalidation-250704122708-67e0169a/75/Resampling-methods-Cross-Validation-Bootstrap-Bias-and-variance-estimation-with-the-Bootstrap-Three-way-data-partitioning-9-2048.jpg)

Compared to basic cross-validation, the bootstrap increases the variance that can occur in each fold [Efron and Tibshirani, 1993]. This is a desirable property, since it is a more realistic simulation of the real-life experiment from which our dataset was obtained.

Consider a classification problem with $C$ classes, a total of $N$ examples, and $N_i$ examples for each class $\omega_i$. The a priori probability of choosing an example from class $\omega_i$ is $N_i/N$. Once we choose an example from class $\omega_i$, if we do not replace it for the next selection, the a priori probabilities will have changed: only $N-1$ examples remain, of which $N_i-1$ belong to class $\omega_i$, so the probability of choosing another example from class $\omega_i$ is now $(N_i-1)/(N-1)$. Thus, sampling with replacement preserves the a priori probabilities of the classes throughout the random selection process.

An additional benefit of the bootstrap is its ability to obtain accurate measures of both the bias and the variance of the true error estimate.
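The class-prior argument can be checked with exact arithmetic. The sketch below uses illustrative numbers ($N = 10$, $N_i = 4$) that are assumptions, not values from the lecture. It also draws one bootstrap sample, assuming the usual convention that examples never drawn serve as the test set. The helper `bootstrap_split` is a hypothetical name.

```python
import random
from fractions import Fraction

def bootstrap_split(n_examples, rng):
    """Draw n_examples indices with replacement for training.

    Indices that were never drawn are returned as the test set
    (an assumed convention; the slide itself does not spell it out).
    """
    train_idx = [rng.randrange(n_examples) for _ in range(n_examples)]
    chosen = set(train_idx)
    test_idx = [i for i in range(n_examples) if i not in chosen]
    return train_idx, test_idx

# Illustrative numbers: N = 10 examples, N_i = 4 of them in class i
N, N_i = 10, 4
prior = Fraction(N_i, N)

# After drawing one class-i example:
without_replacement = Fraction(N_i - 1, N - 1)  # one fewer example overall
with_replacement = Fraction(N_i, N)             # the pool is unchanged

print("prior:              ", prior)
print("without replacement:", without_replacement)
print("with replacement:   ", with_replacement)

rng = random.Random(0)
train_idx, test_idx = bootstrap_split(N, rng)
print("training indices (repeats allowed):", sorted(train_idx))
print("test indices (never drawn):        ", test_idx)
```

Repeating `bootstrap_split` many times and recording the test error of each replicate is what yields a distribution of error estimates, from which bias and variance can be assessed.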

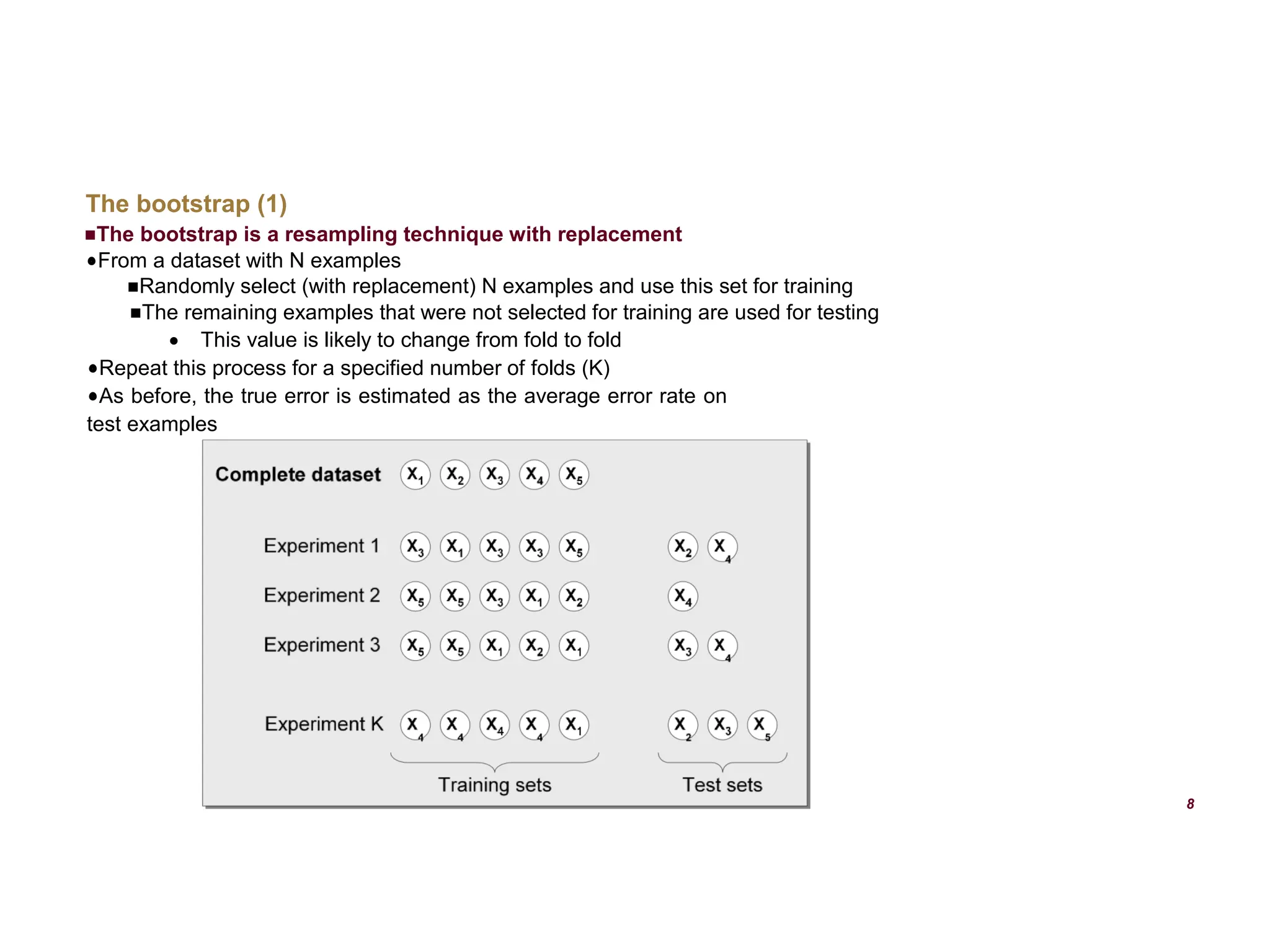

![Three-way data splits (1)](https://image.slidesharecdn.com/lec6crossvalidation-250704122708-67e0169a/75/Resampling-methods-Cross-Validation-Bootstrap-Bias-and-variance-estimation-with-the-Bootstrap-Three-way-data-partitioning-10-2048.jpg)

If model selection and true error estimates are to be computed simultaneously, the data needs to be divided into three disjoint sets [Ripley, 1996]:

- Training set: a set of examples used for learning, that is, to fit the parameters of the classifier. In the MLP case, we would use the training set to find the “optimal” weights with the back-prop rule.
- Validation set: a set of examples used to tune the parameters of the algorithm (e.g., a classifier). It is also used to compare the performance of the prediction algorithms that were created based on the training set; we choose the algorithm with the best performance and use it with its best parameters. In the MLP case, we would use the validation set to find the “optimal” number of hidden units or to determine a stopping point for the back-propagation algorithm.
- Test set: a set of examples used only to assess the performance of a fully-trained classifier. In the MLP case, we would use the test set to estimate the error rate after we have chosen the final model (MLP size and actual weights).

Why separate test and validation sets? The error rate estimate of the final model on validation data will be biased (smaller than the true error rate), since the validation set is used to select the final model. After assessing the final model on the test set, YOU MUST NOT tune the model any further!
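The three roles can be sketched in a few lines. Everything specific here is an assumption made for illustration: the 60/20/20 proportions, the synthetic one-dimensional data with 20% label noise, and the use of a k-nearest-neighbor classifier (with `k` as the setting tuned on validation data) in place of the MLP discussed above. The helper names are hypothetical.

```python
import random

def three_way_split(examples, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle and cut examples into disjoint training, validation and test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def knn_predict(train, x, k):
    """Majority vote among the k training points nearest to x (k odd, labels 0/1)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0

def error_rate(train, data, k):
    """Fraction of examples in data misclassified by k-NN fitted on train."""
    return sum(knn_predict(train, x, k) != y for x, y in data) / len(data)

# Assumed toy data: class 1 tends to have larger x, with 20% label noise
rng = random.Random(1)
examples = []
for _ in range(300):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.2:
        y = 1 - y
    examples.append((x, y))

train, val, test = three_way_split(examples)

# Model selection: compare candidate settings on the validation set only
candidate_ks = [1, 5, 15]
best_k = min(candidate_ks, key=lambda k: error_rate(train, val, k))

# Final assessment: the test set is used once, after all choices are fixed
test_error = error_rate(train, test, best_k)
print(f"selected k = {best_k}, test error = {test_error:.2f}")
```

The validation error of `best_k` is the minimum over several candidates and therefore tends to be optimistic; the test error is computed only once and is not subject to that selection effect.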